Get Your Vitamin C! Robust Fact Verification with Contrastive Evidence

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Wwe 2K15 Download Mods Download WWE 2K Mod Apk 1.1.8117 (Unlocked) WWE 2K APK Is a Sports Game That Specializes in Free Wrestling on Android

wwe 2k15 download mods Download WWE 2K Mod Apk 1.1.8117 (Unlocked) WWE 2K APK is a sports game that specializes in free wrestling on Android. In this playground, gamers will be playing the role of muscular wrestlers, choose costumes and participate in fierce battles on the ring. For those who love wrestling, perhaps no one is unaware of the famous wrestling tournament of the US WWE, where muscular wrestlers gather. Because playing in free style and performing. In the match, wrestlers often fought very fiercely, continuously, fighting both on the ring and outside the arena or using tools such as tables, chairs and ladders to attack opponents. Seems to not follow any rules. However, it is the freedom and disobedience that creates appeal and interest for viewers. Now, these games are available on Android, for enthusiasts who immerse themselves in matches and enjoy participating in real battles. WWE 2K allows gamers to transform into muscle wrestlers, choose clothing and appearance and participate in matches. Feel the smooth motion, powerful attacks, and the emotion wells up when you experience WWE 2K for Android. In addition to the basic attacks, gamers can also take advantage of everything to attack the enemy. Because you can play freely, you can fight freely without having to worry about any binding rules that reduce interest in gaming. More specifically, WWE 2K also has a multiplayer mode, allowing you to compete with many other wrestlers in the world. WWE 2K APK – Gameplay Screenshot. Main Features. Participate in WEE matches, move, grapple freely, use your own tactics and play against the opponent you desire. -

Game Console Rating

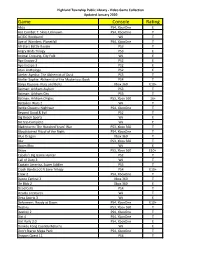

Highland Township Public Library - Video Game Collection Updated January 2020 Game Console Rating Abzu PS4, XboxOne E Ace Combat 7: Skies Unknown PS4, XboxOne T AC/DC Rockband Wii T Age of Wonders: Planetfall PS4, XboxOne T All-Stars Battle Royale PS3 T Angry Birds Trilogy PS3 E Animal Crossing, City Folk Wii E Ape Escape 2 PS2 E Ape Escape 3 PS2 E Atari Anthology PS2 E Atelier Ayesha: The Alchemist of Dusk PS3 T Atelier Sophie: Alchemist of the Mysterious Book PS4 T Banjo Kazooie- Nuts and Bolts Xbox 360 E10+ Batman: Arkham Asylum PS3 T Batman: Arkham City PS3 T Batman: Arkham Origins PS3, Xbox 360 16+ Battalion Wars 2 Wii T Battle Chasers: Nightwar PS4, XboxOne T Beyond Good & Evil PS2 T Big Beach Sports Wii E Bit Trip Complete Wii E Bladestorm: The Hundred Years' War PS3, Xbox 360 T Bloodstained Ritual of the Night PS4, XboxOne T Blue Dragon Xbox 360 T Blur PS3, Xbox 360 T Boom Blox Wii E Brave PS3, Xbox 360 E10+ Cabela's Big Game Hunter PS2 T Call of Duty 3 Wii T Captain America, Super Soldier PS3 T Crash Bandicoot N Sane Trilogy PS4 E10+ Crew 2 PS4, XboxOne T Dance Central 3 Xbox 360 T De Blob 2 Xbox 360 E Dead Cells PS4 T Deadly Creatures Wii T Deca Sports 3 Wii E Deformers: Ready at Dawn PS4, XboxOne E10+ Destiny PS3, Xbox 360 T Destiny 2 PS4, XboxOne T Dirt 4 PS4, XboxOne T Dirt Rally 2.0 PS4, XboxOne E Donkey Kong Country Returns Wii E Don't Starve Mega Pack PS4, XboxOne T Dragon Quest 11 PS4 T Highland Township Public Library - Video Game Collection Updated January 2020 Game Console Rating Dragon Quest Builders PS4 E10+ Dragon -

FCC-21-49A1.Pdf

Federal Communications Commission FCC 21-49 Before the Federal Communications Commission Washington, DC 20554 In the Matter of ) ) Assessment and Collection of Regulatory Fees for ) MD Docket No. 21-190 Fiscal Year 2021 ) ) Assessment and Collection of Regulatory Fees for MD Docket No. 20-105 Fiscal Year 2020 REPORT AND ORDER AND NOTICE OF PROPOSED RULEMAKING Adopted: May 3, 2021 Released: May 4, 2021 By the Commission: Comment Date: June 3, 2021 Reply Comment Date: June 18, 2021 Table of Contents Heading Paragraph # I. INTRODUCTION...................................................................................................................................1 II. BACKGROUND.....................................................................................................................................3 III. REPORT AND ORDER – NEW REGULATORY FEE CATEGORIES FOR CERTAIN NGSO SPACE STATIONS ....................................................................................................................6 IV. NOTICE OF PROPOSED RULEMAKING .........................................................................................21 A. Methodology for Allocating FTEs..................................................................................................21 B. Calculating Regulatory Fees for Commercial Mobile Radio Services...........................................24 C. Direct Broadcast Satellite Regulatory Fees ....................................................................................30 D. Television Broadcaster Issues.........................................................................................................32 -

Federal Register/Vol. 86, No. 91/Thursday, May 13, 2021/Proposed Rules

26262 Federal Register / Vol. 86, No. 91 / Thursday, May 13, 2021 / Proposed Rules FEDERAL COMMUNICATIONS BCPI, Inc., 45 L Street NE, Washington, shown or given to Commission staff COMMISSION DC 20554. Customers may contact BCPI, during ex parte meetings are deemed to Inc. via their website, http:// be written ex parte presentations and 47 CFR Part 1 www.bcpi.com, or call 1–800–378–3160. must be filed consistent with section [MD Docket Nos. 20–105; MD Docket Nos. This document is available in 1.1206(b) of the Commission’s rules. In 21–190; FCC 21–49; FRS 26021] alternative formats (computer diskette, proceedings governed by section 1.49(f) large print, audio record, and braille). of the Commission’s rules or for which Assessment and Collection of Persons with disabilities who need the Commission has made available a Regulatory Fees for Fiscal Year 2021 documents in these formats may contact method of electronic filing, written ex the FCC by email: [email protected] or parte presentations and memoranda AGENCY: Federal Communications phone: 202–418–0530 or TTY: 202–418– summarizing oral ex parte Commission. 0432. Effective March 19, 2020, and presentations, and all attachments ACTION: Notice of proposed rulemaking. until further notice, the Commission no thereto, must be filed through the longer accepts any hand or messenger electronic comment filing system SUMMARY: In this document, the Federal delivered filings. This is a temporary available for that proceeding, and must Communications Commission measure taken to help protect the health be filed in their native format (e.g., .doc, (Commission) seeks comment on and safety of individuals, and to .xml, .ppt, searchable .pdf). -

Wwe 2K18 Game Download for Pc Wwe 2K18 Game Download for Pc

wwe 2k18 game download for pc Wwe 2k18 game download for pc. WWE 2K18 Highly Compressed is a real-time wrestling game. It is the largest game in the WWE game series. The game features main RAW superstar wrestler including Seth Rollins. Due to the powerful action sequences, beautiful graphics, action sequences, special characters, new modes, and modes of modification, this game promises to be interesting than ever. Deep selection and do whatever you want in WWE. This series includes everything that you want. The previous series of the game has some fixed matches. Now you can arrange a match with any character. And it allows you to freely explore the WrestleMania open world as well. Gameplay Of WWE 2K18 Download For PC. Gameplay Of WWE 2K18 Download For PC is a sequel of the previous game series. But this game presents more features on it. Such as the previous series allows 6 players to play at a time in multiplayer mode. Now a maximum of eight characters can play at a time. Furthermore, this game introduces thousands of new moves and actions. Now the player can use any move of his superstar. As well as the player can modify his characters dressing, hairstyle, and many more physical things. However, when the gameplay starts you have to choose the categories of the characters such as Raw characters and NXT characters. And each RAW or NXT team has eight characters. First, you have to choose the category then you can select any one of eight given characters. In addition to the fighting system, there are various selections available. -

2019 Donor List

Children’s Healthcare of Atlanta 2019 Donor List 1 Children’s Healthcare of Atlanta appreciates the many generous donors that understand our vision and share in our mission. Their involvement through financial contributions is vital to our patients, families and community, as Children’s relies on that support to enhance the lives of children. Below is a full list of donors who gave to Children's Healthcare of Atlanta starting at $1,000,000+ to $1,000 from January 1 through December 31, 2019. *We have made every effort to ensure the accuracy of the listing. If you find an error, please accept our apology and alert us by reaching Caroline Qualls, Stewardship Senior Program Coordinator, at 404-785-7351 or [email protected]. 2 Table of Contents Circle of Vision ($1,000,000 and above)..............................................................................4 Circle of Discovery ($500,000-$999,999) ............................................................................4 Circle of Strength ($100,000-$499,999) ..............................................................................5 Circle of Courage ($50,000-$99,999) ..................................................................................6 Circle of Promise ($25,000-$49,999) ..................................................................................7 Circle of Imagination ($10,000-$24,999).............................................................................8 Dream Society ($5,000-$9,999) ....................................................................................... -

Obitel Bilingue Inglês 2019 Final Ok.Indd

IBERO-AMERICAN OBSERVATORY OF TELEVISION FICTION OBITEL 2019 TELEVISION DISTRIBUTION MODELS BY THE INTERNET: ACTORS, TECHNOLOGIES, STRATEGIES IBERO-AMERICAN OBSERVATORY OF TELEVISION FICTION OBITEL 2019 TELEVISION DISTRIBUTION MODELS BY THE INTERNET: ACTORS, TECHNOLOGIES, STRATEGIES Maria Immacolata Vassallo de Lopes Guillermo Orozco Gómez General Coordinators Charo Lacalle Sara Narvaiza Editors Gustavo Aprea, Fernando Aranguren, Catarina Burnay, Borys Bustamante, Giuliana Cassano, James Dettleff, Francisco Fernández, Gabriela Gómez, Pablo Julio, Mónica Kirchheimer, Charo Lacalle, Ligia Prezia Lemos, Pedro Lopes, Guillermo Orozco Gómez, Juan Piñón, Rosario Sánchez, Maria Immacolata Vassallo de Lopes National Coordinators © Globo Comunicação e Participações S.A., 2019 Capa: Letícia Lampert Projeto gráfico e editoração: Niura Fernanda Souza Produção editorial: Felícia Xavier Volkweis Revisão do português: Felícia Xavier Volkweis Revisão do espanhol: Naila Freitas Revisão gráfica: Niura Fernanda Souza Editores: Luis Antônio Paim Gomes, Juan Manuel Guadelis Crisafulli Foto de capa: Louie Psihoyos – High-definition televisions in the information era Bibliotecária responsável: Denise Mari de Andrade Souza – CRB 10/960 T269 Television distribution models by the internet: actors, technologies, strate- gies / general coordinators: Maria Immacolata Vassallo de Lopes and Guillermo Orozco Gómez. -- Porto Alegre: Sulina, 2019. 377 p.; 14x21 cm. ISBN: 978-85-205-0849-7 1. Television – internet. 2. Communication and technology – Ibero- -American television. 3. Television shows – Distribution – Internet. 4. Ibero- -American television. 5. Social media. 6. Social communication I. Lopes, Maria Immacolata Vassallo de. III. Gómez, Guillermo Orozco. CDU: 654.19 659.3 CDD: 301.161 791.445 Direitos desta edição adquiridos por Globo Comunicação e Participações S.A. Edição digital disponível em obitel.net. Editora Meridional Ltda. -

A Three Degrees of Freedom Test-Bed for Nanosatellite and Cubesat Attitude Dynamics, Determination, and Control

Calhoun: The NPS Institutional Archive Theses and Dissertations Thesis Collection 2009-12 A three degrees of freedom test-bed for nanosatellite and Cubesat attitude dynamics, determination, and control Meissner, David M. Monterey, California. Naval Postgraduate School http://hdl.handle.net/10945/4483 NAVAL POSTGRADUATE SCHOOL MONTEREY, CALIFORNIA THESIS A THREE DEGREES OF FREEDOM TEST BED FOR NANOSATELLITE AND CUBESAT ATTITUDE DYNAMICS, DETERMINATION, AND CONTROL by David M. Meissner December 2009 Thesis Co-Advisors: Marcello Romano Riccardo Bevilacqua Approved for public release; distribution is unlimited THIS PAGE INTENTIONALLY LEFT BLANK REPORT DOCUMENTATION PAGE Form Approved OMB No. 0704-0188 Public reporting burden for this collection of information is estimated to average 1 hour per response, including the time for reviewing instruction, searching existing data sources, gathering and maintaining the data needed, and completing and reviewing the collection of information. Send comments regarding this burden estimate or any other aspect of this collection of information, including suggestions for reducing this burden, to Washington headquarters Services, Directorate for Information Operations and Reports, 1215 Jefferson Davis Highway, Suite 1204, Arlington, VA 22202-4302, and to the Office of Management and Budget, Paperwork Reduction Project (0704-0188) Washington DC 20503. 1. AGENCY USE ONLY (Leave blank) 2. REPORT DATE 3. REPORT TYPE AND DATES COVERED December 2009 Master’s Thesis 4. TITLE AND SUBTITLE 5. FUNDING NUMBERS A Three Degrees of Freedom Test Bed for Nanosatellite and CubeSat Attitude Dynamics, Determination, and Control 6. AUTHOR(S) David M. Meissner 7. PERFORMING ORGANIZATION NAME(S) AND ADDRESS(ES) 8. PERFORMING ORGANIZATION Naval Postgraduate School REPORT NUMBER Monterey, CA 93943-5000 9. -

FCC-21-98A1.Pdf

Federal Communications Commission FCC 21-98 Before the Federal Communications Commission Washington, D.C. 20554 In the Matter of ) ) Assessment and Collection of Regulatory Fees for ) MD Docket No. 21-190 Fiscal Year 2021 ) ) REPORT AND ORDER AND NOTICE OF PROPOSED RULEMAKING Adopted: August 25, 2021 Released: August 26, 2021 Comment Date: [30 days after date of publication in the Federal Register] Reply Comment Date: [45 days after date of publication in the Federal Register] By the Commission: Acting Chairwoman Rosenworcel and Commissioners Carr and Simington issuing separate statements. TABLE OF CONTENTS Heading Paragraph # I. INTRODUCTION...................................................................................................................................1 II. BACKGROUND.....................................................................................................................................2 III. REPORT AND ORDER..........................................................................................................................6 A. Allocating Full-time Equivalents......................................................................................................7 B. Commercial Mobile Radio Service Regulatory Fees Calculation ..................................................27 C. Direct Broadcast Satellite Fees .......................................................................................................28 D. Full-Service Television Broadcaster Fees ......................................................................................36 -

Copyright, Competition and Development

Copyright, Competition and Development Report by the Max Planck Institute for Intellectual Property and Competition Law, Munich December 2013 Preliminary note: The preparation of this Report has been mandated by the World Intellectual Property Organization to the Max Planck Institute and has been released in December 2013. Most of the information that is provided in the Report was collected in the second half of 2012 and has only partially been updated until summer 2013. This Report is authored by Professor Josef Drexl, Director of the Max Planck Institute. Many other scholars at the Institute took part in the research that led to this report. These include in particular Doreen Antony, Daria Kim and Moses Muchiri, as well as Dr Mor Bakhoum, Filipe Fischmann, Dr Kaya Köklü, Julia Molestina, Dr Sylvie Nérisson, Souheir Nadde‐Phlix, Dr Gintarė Surblytė and Dr Silke von Lewinski. The author will be happy to receive comments on the Report. These may relate to any corrections that are needed regarding the cases cited in the Report or additional cases that would be interesting and that have come up more recently. It is planned to publish the Report in a revised version in form of a book at a later stage. Comments can be sent to [email protected]. As regards agency and court decisions, the author would highly appreciate if these decisions were delivered in electronic form. Structure of this Document: Summary of the Report 2 Report 13 Annex: Questionnaire 277 1 Summary of the Report 1 Introduction (1) This text presents a summary of the Report of the Max Planck Institute for Intellectual Property and Competition Law on “Copyright, Competition and Development”. -

Obitel 2015 Inglêsl Color.Indd

IBERO-AMERICAN OBSERVATORY OF TELEVISION FICTION OBITEL 2015 GENDER RELATIONS IN TELEVISION FICTION IBERO-AMERICAN OBSERVATORY OF TELEVISION FICTION OBITEL 2015 GENDER RELATIONS IN TELEVISION FICTION Maria Immacolata Vassallo de Lopes Guillermo Orozco Gómez General Coordinators Morella Alvarado, Gustavo Aprea, Fernando Aranguren, Alexandra Ayala-Marín, Catarina Burnay, Borys Bustamante, Giuliana Cassano, Pamela Cruz Páez, James Dettleff, Francisco Fernández, Francisco Hernández, Pablo Julio, Mónica Kirchheimer, Charo Lacalle, Pedro Lopes, Maria Cristina Mungioli, Guillermo Orozco Gómez, Juan Piñón, Rosario Sánchez, Luisa Torrealba and Maria Immacolata Vassallo de Lopes National Coordinators © Globo Comunicação e Participações S.A., 2015 Capa: Letícia Lampert Projeto gráfico e editoração:Niura Fernanda Souza Produção editorial: Felícia Xavier Volkweis Revisão, leitura de originais: Felícia Xavier Volkweis Revisão gráfica:Niura Fernanda Souza Editores: Luis Antônio Paim Gomes, Juan Manuel Guadelis Crisafulli Foto de capa: Louie Psihoyos. High-definition televisions in the information era. Librarian: Denise Mari de Andrade Souza – CRB 10/960 G325 Gender relations in television fiction: 2015 Obitel yearbook / general coordina- tors Maria Immacolata Vassallo de Lopes and Guillermo Orozco Gómez. -- Porto Alegre: Sulina, 2015. 526 p.; il. ISBN: 978-85-205-0738-4 1. Television – Programs. 2. Fiction – Television. 3. Programs Television – Ibero-American. 4. Media. 5. Television – Gender Relations. I. Lopes, Maria Immacolata Vassallo de. II. Gómez, Guillermo Orozco. CDU: 654.19 659.3 CDD: 301.161 791.445 Direitos desta edição adquiridos por Globo Comunicação e Participações S.A. Editora Meridional Ltda. Av. Osvaldo Aranha, 440 cj. 101 – Bom Fim Cep: 90035-190 – Porto Alegre/RS Fone: (0xx51) 3311.4082 Fax: (0xx51) 2364.4194 www.editorasulina.com.br e-mail: [email protected] July/2015 INDEX INTRODUCTION............................................................................................ -

Acronyms and Abbreviations *

APPENDIX A Acronyms and Abbreviations * a atto (10-18) A ampere A angstrom AAR automatic alternate routing AARTS automatic audio remote test set AAS aeronautical advisory station AB asynchronous balanced [mode] (Link Layer OSI-RM) ABCA American, British, Canadian, Australian armies abs absolute ABS aeronautical broadcast station ABSBH average busy season busy hour ac alternating current AC absorption coefficient access charge access code ACA automatic circuit assurance ACC automatic callback calling ACCS associated common-channel signaling ACCUNET AT&T switched 56-kbps service ACD automatic call distributing *Alphabetized letter by letter. Uppercase letters follow lowercase letters. All other symbols are ignored, including superscripts and subscripts. Numerals follow Z, and Greek letters are at the very end. 1103 Appendix A 1104 automatic call distributor ac-dc alternating-current direct-current (ringing) ACE automatic cross-connection equipment ACFNTAM advanced communications facility (VTAM) ACK acknowledge character acknowledgment ACKINAK acknowledgment/negative acknowledgment ACS airport control station asynchronous communications system ACSE association service control element ACTS advanced communications technology satellite ACU automatic calling unit AD addendum NO analog to digital A-D analog to digital ADAPT architectures design, analysis, and planning tool ADC analog-to-digital converter automatic digital control ADCCP Advanced Data Communications Control Procedure (ANSI) ADH automatic data handling ADP automatic data processing