Transformer Visualization Via Dictionary Learning: Contextualized Embedding As a Linear Superposition of Transformer Factors

Total Pages: 16

File Type:pdf, Size:1020Kb


Recommended publications


Direct-82.Pdf

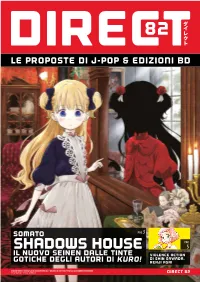

DIRECT 82: the offerings from J-POP & Edizioni BD. Free copy, not for sale.

Page 3: SHADOWS HOUSE by Somato, the new gothic-tinged seinen from the authors of Kuro!
Page 5: VIOLENCE ACTION by Shin Sawada and Renji Asai.

SHADOW HOUSE © 2018 by Somato 2018/SHUEISHA Inc. | VIOLENCE ACTION ©2017 Renji ASAI, Shin SAWADA/SHOGAKUKAN. DAISY JEALOUSY © 2020 Ogeretsu Tanaka/Libre Inc.

82 CHECKLIST! SPOTLIGHT ON

DIRECT is a publication of Edizioni BD S.r.l., Viale Coni Zugna, 7 - 20144 - Milano, tel +39 02 84800751 - fax +39 02 89545727, www.edizionibd.it www.j-pop.it

Coordination: Georgia Cocchi Pontalti. Art Director: Giovanni Marinovich. Contributors: Davide Bertaina, Jacopo Costa Buranelli, Giorgio Cantù, Ilenia Cerillo, Francesco Paolo Cusano, Matteo de Marzo, Andrea Ferrari, Valentina Ghidini, Fabio Graziano, Eleonora Moscarini, Lucia Palombi, Valentina Andrea Sala, Federico Salvan, Marco Schiavone, Fabrizio Sesana.

Sales office: tel. +39 02 36530450, [email protected], [email protected], [email protected]

Distribution to comic shops: Manicomix Distribuzione, Via IV Novembre, 14 - 25010 - San Zeno Naviglio (BS), www.manicomixdistribuzione.it

| Title | ISBN | Price | Indicative release date |
| --- | --- | --- | --- |
| 86 - EIGHTY SIX 1 | 9788834906170 | € 6,50 | May |
| 86 - EIGHTY SIX 2 | 9788834906545 | € 6,50 | June |
| DAISY JEALOUSY | 9788834906507 | € 7,50 | June |
| DANMACHI - SWORD ORATORIA 17 | 9788834906552 | € 5,90 | June |
| DEATH STRANDING 1 | 9788834915455 | € 12,50 | June |
| DEATH STRANDING BOX VOL. 1-2 | 9788834915448 | € 25,00 | June |
| DUNGEON FOOD 9 | 9788834906569 | € 6,90 | June |
| GOBLIN SLAYER 10 | 9788834906576 | € 6,50 | June |
| GOLDEN KAMUI 23 | 9788834906583 | € 6,90 | June |
| GUNDAM UNICORN 15 | 9788834906590 | € 6,90 | June |
| HANAKO KUN 8 | 9788834906606 | € 5,90 | June |
| JUJIN OMEGAVERSE: REMNANT 2 | 9788834906682 | € 6,90 | June |
| KAKEGURUI 14 | 9788834906613 | € 6,90 | June |
| KASANE 7 | 9788834906620 | € 6,90 | June |

Distribution to bookstores: A.L.I. -

In for Inclusion, Justice, and Prosperity October 27–29, Los Angeles

All in for inclusion, justice, and prosperity
October 27–29, Los Angeles
www.equity2015.org

Dear Friends and Colleagues,

On behalf of the board and staff of PolicyLink, welcome to Los Angeles and to Equity Summit 2015: All in for inclusion, justice, and prosperity. Your time is valuable and we’re honored you’ve chosen to spend some of it with us. We hope you will have an uplifting experience that offers opportunities to reconnect with colleagues, meet new people, learn, share, stretch, and strengthen your commitment to building an equitable society in which all reach their full potential. The PolicyLink team will do all we can to make your experience meaningful. If you need assistance, let us know. If you don’t know us yet, look for badges with “staff” on them. We’re pleased that you’re here and excited about the program ahead of us.

Angela Glover Blackwell
President and CEO

Our Partners and Sponsors

For their generous support of Equity Summit 2015, we thank:

Leading Partners • The Kresge Foundation • W.K. Kellogg Foundation • Citi Community Development

Partners • The Annie E. Casey Foundation • The California Endowment • The California Wellness Foundation • The Convergence Partnership • Ford Foundation • John D. and Catherine T. MacArthur Foundation

Sponsors • The James Irvine Foundation • JP Morgan Chase & Co. • Marguerite Casey Foundation • Prudential • Robert Wood Johnson Foundation • The Rockefeller Foundation • Surdna Foundation • Walter and Elise Haas Fund

Delegation -

Speaking of South Park

University of Windsor Scholarship at UWindsor OSSA Conference Archive OSSA 3 May 15th, 9:00 AM - May 17th, 5:00 PM Speaking of South Park Christina Slade University Sydney Follow this and additional works at: https://scholar.uwindsor.ca/ossaarchive Part of the Philosophy Commons Slade, Christina, "Speaking of South Park" (1999). OSSA Conference Archive. 53. https://scholar.uwindsor.ca/ossaarchive/OSSA3/papersandcommentaries/53 This Paper is brought to you for free and open access by the Conferences and Conference Proceedings at Scholarship at UWindsor. It has been accepted for inclusion in OSSA Conference Archive by an authorized conference organizer of Scholarship at UWindsor. For more information, please contact [email protected]. Title: Speaking of South Park Author: Christina Slade Response to this paper by: Susan Drake (c)2000 Christina Slade South Park is, at first blush, an unlikely vehicle for the teaching of argumentation and of reasoning skills. Yet the cool of the program, and its ability to tap into the concerns of youth, make it an obvious site. This paper analyses the argumentation of one of the programs which deals with genetic engineering. Entitled 'An Elephant makes love to a Pig', the episode begins with the elephant being presented to the school bus driver as 'the new disabled kid'; and opens a debate on the virtues of genetic engineering with the teacher saying: 'We could have avoided terrible mistakes, like German people'. The show both offends and ridicules received moral values. However a fine grained analysis of the transcript of 'An Elephant makes love to a Pig' shows how superficially absurd situations conceal sophisticated argumentation strategies. -


arXiv:2104.00880v2 [astro-ph.HE] 6 Jul 2021

Draft version July 7, 2021. Typeset using LaTeX twocolumn style in AASTeX63.

Refined Mass and Geometric Measurements of the High-Mass PSR J0740+6620

E. Fonseca,1, 2, 3, 4 H. T. Cromartie,5, 6 T. T. Pennucci,7, 8 P. S. Ray,9 A. Yu. Kirichenko,10, 11 S. M. Ransom,7 P. B. Demorest,12 I. H. Stairs,13 Z. Arzoumanian,14 L. Guillemot,15, 16 A. Parthasarathy,17 M. Kerr,9 I. Cognard,15, 16 P. T. Baker,18 H. Blumer,3, 4 P. R. Brook,3, 4 M. DeCesar,19 T. Dolch,20, 21 F. A. Dong,13 E. C. Ferrara,22, 23, 24 W. Fiore,3, 4 N. Garver-Daniels,3, 4 D. C. Good,13 R. Jennings,25 M. L. Jones,26 V. M. Kaspi,1, 2 M. T. Lam,27, 28 D. R. Lorimer,3, 4 J. Luo,29 A. McEwen,26 J. W. McKee,29 M. A. McLaughlin,3, 4 N. McMann,30 B. W. Meyers,13 A. Naidu,31 C. Ng,32 D. J. Nice,33 N. Pol,30 H. A. Radovan,34 B. Shapiro-Albert,3, 4 C. M. Tan,1, 2 S. P. Tendulkar,35, 36 J. K. Swiggum,33 H. M. Wahl,3, 4 and W. W. Zhu37

1 Department of Physics, McGill University, 3600 rue University, Montréal, QC H3A 2T8, Canada
2 McGill Space Institute, McGill University, 3550 rue University, Montréal, QC H3A 2A7, Canada
3 Department of Physics and Astronomy, West Virginia University, Morgantown, WV 26506-6315, USA
4 Center for Gravitational Waves and Cosmology, Chestnut Ridge Research Building, Morgantown, WV 26505, USA
5 Cornell Center for Astrophysics and Planetary Science and Department of Astronomy, Cornell University, Ithaca, NY 14853, USA
6 NASA Hubble Fellowship Program Einstein Postdoctoral Fellow
7 National Radio Astronomy Observatory, 520 Edgemont Road, Charlottesville, VA 22903, USA
8 Institute of Physics, Eötvös Loránd University, Pázmány P. -

Beyond Traditional Assumptions in Fair Machine Learning

Beyond traditional assumptions in fair machine learning Niki Kilbertus Supervisor: Prof. Dr. Carl E. Rasmussen Advisor: Dr. Adrian Weller Department of Engineering University of Cambridge arXiv:2101.12476v1 [cs.LG] 29 Jan 2021 This thesis is submitted for the degree of Doctor of Philosophy Pembroke College October 2020 Acknowledgments The four years of my PhD have been filled with enriching and fun experiences. I owe all of them to interactions with exceptional people. Carl Rasmussen and Bernhard Schölkopf have put trust and confidence in me from day one. They enabled me to grow as a researcher and as a human being. They also taught me not to take things too seriously in moments of despair. Thank you! Adrian Weller’s contagious positive energy gave me enthusiasm and drive. He showed me how to be considerate and relentless at the same time. I thank him for his constant support and sharing his extensive network. Among others, he introduced me to Matt J. Kusner, Ricardo Silva, and Adrià Gascón who have been amazing role models and collaborators. I hope for many more joint projects with them! Moritz Hardt continues to be an inexhaustible source of inspiration and I want to thank him for his mentorship and guidance during my first paper. I was extremely fortunate to collaborate with outstanding people during my PhD beyond the ones already mentioned. I have learned a lot from every single one of them, thank you: Philip Ball, Stefan Bauer, Silvia Chiappa, Elliot Creager, Timothy Gebhard, Manuel Gomez Rodriguez, Anirudh Goyal, Krishna P. Gummadi, Ian Harry, Dominik Janzing, Francesco Locatello, Krikamol Muandet, Frederik Träuble, Isabel Valera, and Michael Veale. -

The Jays Jay Brown NATION BUILDER

The Jays Jay Brown NATION BUILDER

Roc Nation co-founder and CEO Jay Brown has succeeded beyond his wildest dreams. He’s part of Jay-Z’s inner circle, along with longtime righthand man Tyran “Tata” Smith, Roc Nation COO Desiree Perez and her husband, Roc Nation Sports President “OG” Juan Perez. He’s a dedicated supporter of the arts, having joined the Hammer Museum’s board of directors in 2018, as well as a champion of philanthropic causes. In short, this avid fisherman keeps reeling in the big ones. Brown is making his mark, indelibly and with a deep-seated sense of purpose.

“I think the legacy you create…is built on the people you help,” Brown told CEO.com in 2018. “It’s not in how much money you make or what you buy or anything like that. It’s about how many people you touch. It’s in how many jobs you help people get and how many dreams you help them achieve.” By this definition, Brown’s legacy is

“Pon de Replay,” L.A. told Jay-Z not to let Rihanna leave the building until the contract was signed. Jay-Z and his team closed a seven-album deal, and since then, she’s sold nearly 25 million albums in the U.S. alone, while the biggest of her seven tours, 2013’s Diamonds World Tour, grossed nearly $142 million on 90 dates.

Tevin Campbell, The Winans, Patti Austin, Tamia, Tata Vega and Quincy himself, when he was 19. “He mentored me and taught me the business,” Brown says of Quincy. “He made sure if I was going to be in the business, I was going to learn every part of the business.” -

South Park the Fractured but Whole Free Download Review

In South Park: The Fractured But Whole, players can dive with Coon and Friends into the crime-ridden underbelly of South Park. This dedicated group of crime fighters was formed by Eric Cartman, whose superhero alter ego, The Coon, is half man, half raccoon. As the New Kid, players will join Mysterion, Toolshed, Human Kite, Mosquito, Mint Berry Crunch, and a group of others to fight the forces of evil as the Coon strives to make his team the most beloved superheroes in history. Creators Matt Stone and Trey Parker were involved in every step of the game’s development. Players also build their own unique superpowers to become the hero that South Park needs.

The player takes on the role of the New Kid and joins South Park favorites in a new, extremely shocking adventure. The game is the sequel to the award-winning South Park: The Stick of Truth. It features new locations and new characters to discover. The player will investigate the crime under South Park. Other characters will join the player to fight against the forces of evil as the Coon strives to make his team the most beloved superheroes in history. The all-new dynamic control system offers new possibilities to manipulate time and space on the battlefield. -

P36 Layout 1

lifestyle WEDNESDAY, DECEMBER 25, 2013 Gossip

Rihanna to release two albums in 2014?

Rihanna is planning to release two albums in 2014. The ‘Stay’ hitmaker has put out a new record every year since 2009 and although this year she hasn’t released another she is hoping to have “big hits” in the future. A source told The Sun newspaper: “The plan is to put two records out. Rihanna wants big hits on both the albums.” The Barbadian beauty’s last album ‘Unapologetic’ hit shelves in November 2012 and she’s since been busy on her ‘Diamonds World Tour’, which kicked off in March this year, in support of her seventh studio album. Rihanna has had a successful year and was recently named the new face of Balmain where she will front the Spring/Summer 2014 collection advertising campaign for the French fashion label and is expected to feature in magazines from January. The label’s creative director, Olivier Rousteing, previously said: “In front of the camera, she makes you feel like she is the only girl in the world. When the woman that inspires you wears your creations, your vision feels complete.”

Scherzinger shares festive style tips

Nicole Scherzinger loves sequins. The former Pussycat Dolls singer thinks a bit of sparkle can make any outfit look festive, and she is also a big fan of accessorizing. She said: “When you’re on the go, throw on some good old shades and a hat. I didn’t realize how valuable sleep was until I got older. “I love really fresh, -

UPC Platform Publisher Title Price Available 730865001347

| UPC | Platform | Publisher | Title | Price | Available |
| --- | --- | --- | --- | --- | --- |
| 730865001347 | PlayStation 3 | Atlus | 3D Dot Game Heroes PS3 | $16.00 | 52 |
| 722674110402 | PlayStation 3 | Namco Bandai | Ace Combat: Assault Horizon PS3 | $21.00 | 2 |
| 853490002678 | PlayStation 3 | Other Publishers | Air Conflicts: Secret Wars PS3 | $14.00 | 37 |
| 014633098587 | PlayStation 3 | Electronic Arts | Alice: Madness Returns PS3 | $16.50 | 60 |
| 010086690682 | PlayStation 3 | Sega | Aliens Colonial Marines (Portuguese) PS3 | $47.50 | 100+ |
| 010086690675 | PlayStation 3 | Sega | Aliens Colonial Marines (Spanish) PS3 | $47.50 | 100+ |
| 010086690637 | PlayStation 3 | Sega | Aliens Colonial Marines Collector's Edition PS3 | $76.00 | 9 |
| 010086690170 | PlayStation 3 | Sega | Aliens Colonial Marines PS3 | $50.00 | 92 |
| 010086690194 | PlayStation 3 | Sega | Alpha Protocol PS3 | $14.00 | 14 |
| 047875843479 | PlayStation 3 | Activision | Amazing Spider-Man PS3 | $39.00 | 100+ |
| 010086690545 | PlayStation 3 | Sega | Anarchy Reigns PS3 | $24.00 | 100+ |
| 722674110525 | PlayStation 3 | Namco Bandai | Armored Core V PS3 | $23.00 | 100+ |
| 014633157147 | PlayStation 3 | Electronic Arts | Army of Two: The 40th Day PS3 | $16.00 | 61 |
| 008888345343 | PlayStation 3 | Ubisoft | Assassin's Creed II PS3 | $15.00 | 100+ |
| 008888397717 | PlayStation 3 | Ubisoft | Assassin's Creed III Limited Edition PS3 | $116.00 | 4 |
| 008888347231 | PlayStation 3 | Ubisoft | Assassin's Creed III PS3 | $47.50 | 100+ |
| 008888343394 | PlayStation 3 | Ubisoft | Assassin's Creed PS3 | $14.00 | 100+ |
| 008888346258 | PlayStation 3 | Ubisoft | Assassin's Creed: Brotherhood PS3 | $16.00 | 100+ |
| 008888356844 | PlayStation 3 | Ubisoft | Assassin's Creed: Revelations PS3 | $22.50 | 100+ |
| 013388340446 | PlayStation 3 | Capcom | Asura's Wrath PS3 | $16.00 | 55 |

008888345435 -

884-5 111 Places in LA That You Must Not Miss.Pdf

© Emons Verlag GmbH All rights reserved

All photos © Lyudmila Zotova, except: Boone Children’s Gallery (p. 31) – Photo © Museum Associates/LACMA; Kayaking on LA River (p. 123) – LA River Kayak Safari; L.A. Derby Dolls (p. 125) – Photo by Marc Campos, L.A. Derby Dolls; Machine Project (p. 133, top image) – Photo of Josh Beckman’s Sea Nymph courtesy of Machine Project; Museum of Broken Relationships (p. 141) – Courtesy of the Museum of Broken Relationships; Norton Simon Museum (p. 157) – Norton Simon Art Foundation; The Source Restaurant (p. 195, top image) – The Source Family after morning meditation, photo by Isis Aquarian courtesy of Isis Aquarian Source Archives; Wildlife Waystation (p. 227) – Photo by Billy V Vaughn, Wildlife Waystation

Art credits: Machine Project (p. 133, top image) – Sea Nymph by the artist Josh Beckman; Velveteria (p. 217) – artwork pictured reprinted by permission of the artists: Caren Anderson (Liberace in blue vest); Caren Anderson & Cenon (center Liberace); Jennifer Kenworth aka Juanita’s Velvets (Liberace with red cape); CeCe Rodriguez (poodle in square frame, left of center Liberace)

© Cover icon Montage: iStockphoto.com/bebecom98, iStockphoto.com/Davel5957 Design: Eva Kraskes, based on a design by Lübbeke | Naumann | Toben Edited by Katrina Fried Maps: altancicek.design, www.altancicek.de Printing and binding: B.O.S.S Medien GmbH, Goch Printed in Germany 2016 ISBN 978-3-95451-884-5 First edition

Did you enjoy it? Do you want more? Join us in uncovering new places around the world on: www.111places.com

Foreword

Dear Los Angeles, So often you are misunderstood, viewed by the world through the narrow lenses of the media and outsiders. -

An Open Door: the Cathedral's Web Portal

Fall 2013, Volume 13 Number 62. 1047 Amsterdam Avenue at 112th Street, New York, NY 10025, (212) 316-7540, stjohndivine.org

Fall 2013 at the Cathedral

An Open Door: The Cathedral’s Web Portal

Across the city, the great hubs of communication pulse: churches, museums, universities, government offices, the Stock Exchange. Each can be seen as a microcosm of the city or the world. Many, including the Cathedral, were founded with this in mind. But in 2013, no discussion about connections or centers of communication can help but reference the World Wide Web. The Web has only been around for a blink of an eye of human history, and only for a small part of the Cathedral’s existence, but its promise reflects

a plethora of new opportunities, many of which society is only beginning to understand. As accustomed as we have become in recent years to having the world at our fingertips, there is little doubt that in 10, 20, 50 years that connection will be more profoundly woven into our culture. The human heart in prayer, the human voice in song, the human spirit in poetry: all of these resonate within Cathedral walls, but need not be limited by geography. Whether the Internet as a whole works to bring people together and foster understanding is up to each of us as users.

What’s Inside: The Cathedral's Web Portal; Things That Go Bump In the Night; Great Music in a Great Space; The New Season; Blessing of the Animals; Long Summer Days; The Viewer's Salon; Nightwatch's ’13–’14 Season; Dean's Meditation: -

Current Bicycle Friendly Businesses Through Fall 2016

Current Bicycle Friendly Businesses through Fall 2016

PLATINUM

| Business Name | Current Award Level | BFB Since | Type of Business | Number of Employees | City | State |
| --- | --- | --- | --- | --- | --- | --- |
| University of California, Davis | Platinum | 2013 | Education | 20,041 | Davis | CA |
| Facebook | Platinum | 2012 | Professional Services | 5,289 | Menlo Park | CA |
| Ground Control Systems (previously listed as Park a Bike) | Platinum | 2014 | Manufacturing/Research | 14 | Sacramento | CA |
| Bici Centro/Santa Barbara Bicycle Coalition | Platinum | 2014 | Non-Profit | 6 | Santa Barbara | CA |
| SONOS INC | Platinum | 2015 | Telecommunications & Media | 389 | Santa Barbara | CA |
| Santa Monica Bike Center | Platinum | 2012 | Bicycle Shop | 11 | Santa Monica | CA |
| City of Fort Collins | Platinum | 2011 | Government Agency | 551 | Fort Collins | CO |
| New Belgium Brewing Company | Platinum | 2009 | Hospitality/Food/Retail | 410 | Fort Collins | CO |
| Washington Area Bicyclist Association | Platinum | 2014 | Non-Profit | 18 | Washington | DC |
| Boise Bicycle Project | Platinum | 2011 | Bicycle Shop | 12 | Boise | ID |
| The Burke Group | Platinum | 2010 | Professional Services | 168 | Rosemont | IL |
| Bicycle Garage Indy Downtown | Platinum | 2016 | Bicycle Shop | 5 | Indianapolis | IN |
| Urban Adventours | Platinum | 2008 | Hospitality/Food/Retail | 25 | Boston | MA |
| Landry's Bicycles | Platinum | 2008 | Bicycle Shop | 24 | Natick | MA |
| Quality Bicycle Products | Platinum | 2008 | Bicycle Industry | 450 | Bloomington | MN |

Target