On the Computation of the HNF of a Module Over the Ring of Integers of A

Total Pages: 16

File Type: pdf, Size: 1020Kb


Recommended publications


User's Guide to Pari-GP

User's Guide to PARI / GP. C. Batut, K. Belabas, D. Bernardi, H. Cohen, M. Olivier. Laboratoire A2X, U.M.R. 9936 du C.N.R.S., Université Bordeaux I, 351 Cours de la Libération, 33405 TALENCE Cedex, FRANCE. e-mail: [email protected] Home Page: http://www.parigp-home.de/ Primary ftp site: ftp://megrez.math.u-bordeaux.fr/pub/pari/ Last updated 5 November 2000 for version 2.1.0. Copyright © 2000 The PARI Group. Permission is granted to make and distribute verbatim copies of this manual provided the copyright notice and this permission notice are preserved on all copies. Permission is granted to copy and distribute modified versions, or translations, of this manual under the conditions for verbatim copying, provided also that the entire resulting derived work is distributed under the terms of a permission notice identical to this one. PARI/GP is Copyright © 2000 The PARI Group. PARI/GP is free software; you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation. It is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY WHATSOEVER. Table of Contents: Chapter 1: Overview of the PARI system; 1.1 Introduction; 1.2 The PARI types; 1.3 Operations and functions; Chapter 2: Specific Use of the GP Calculator; 2.1 Defaults and output formats; 2.2 Simple metacommands; 2.3 Input formats for the PARI types; 2.4 GP operators -

An Application of the Hermite Normal Form in Integer Programming

An Application of the Hermite Normal Form in Integer Programming. Ming S. Hung, Graduate School of Management, Kent State University, Kent, Ohio 44242, and Walter O. Rom, School of Business Administration, Cleveland State University, Cleveland, Ohio 44115. Submitted by Richard A. Brualdi. ABSTRACT A new algorithm for solving integer programming problems is developed. It is based on cuts which are generated from the Hermite normal form of the basis matrix at a continuous extreme point. INTRODUCTION We present a new cutting plane algorithm for solving an integer program. The cuts are derived from a modified version of the usual column tableau used in integer programming: Rather than doing a complete elimination on the rows corresponding to the nonbasic variables, these rows are transformed into the Hermite normal form. There are two major advantages with our cutting plane algorithm: First, it uses all integer operations in the computations. Second, the cuts are deep, since they expose vertices of the integer hull. The Hermite normal form (HNF), along with the basic properties of the cuts, is developed in Section I. Sections II and III apply the HNF to systems of equations and integer programs. A proof of convergence for the algorithm is presented in Section IV. Section V discusses some preliminary computational experience. LINEAR ALGEBRA AND ITS APPLICATIONS 140:163-179 (1990) © Elsevier Science Publishing Co., Inc., 1990, 655 Avenue of the Americas, New York, NY 10010 0024-3795/90/$3.50 -

Fast, Deterministic Computation of the Hermite Normal Form and Determinant of a Polynomial Matrix

Journal of Complexity 42 (2017) 44–71. Fast, deterministic computation of the Hermite normal form and determinant of a polynomial matrix. George Labahn (a), Vincent Neiger (b), Wei Zhou (a). (a) David R. Cheriton School of Computer Science, University of Waterloo, Waterloo ON, Canada N2L 3G1; (b) ENS de Lyon (Laboratoire LIP, CNRS, Inria, UCBL, Université de Lyon), Lyon, France. Article history: Received 19 July 2016; accepted 18 March 2017; available online 12 April 2017. Keywords: Hermite normal form; Determinant; Polynomial matrix. Abstract: Given a nonsingular n × n matrix of univariate polynomials over a field K, we give fast and deterministic algorithms to compute its determinant and its Hermite normal form. Our algorithms use $\widetilde{O}(n^{\omega} \lceil s \rceil)$ operations in K, where s is bounded from above by both the average of the degrees of the rows and that of the columns of the matrix and $\omega$ is the exponent of matrix multiplication. The soft-O notation indicates that logarithmic factors in the big-O are omitted, while the ceiling function indicates that the cost is $\widetilde{O}(n^{\omega})$ when $s = o(1)$. Our algorithms are based on a fast and deterministic triangularization method for computing the diagonal entries of the Hermite form of a nonsingular matrix. © 2017 Elsevier Inc. All rights reserved. 1. Introduction For a given nonsingular polynomial matrix A in $K[x]^{n \times n}$, one can find a unimodular matrix $U \in K[x]^{n \times n}$ such that $AU = H$ is triangular. -

A Formal Proof of the Computation of Hermite Normal Form in a General Setting

REGULAR-MT: A Formal Proof of the Computation of Hermite Normal Form In a General Setting. Jose Divasón and Jesús Aransay, Universidad de La Rioja, Logroño, La Rioja, Spain. fjose.divason,[email protected] Abstract. In this work, we present a formal proof of an algorithm to compute the Hermite normal form of a matrix based on our existing framework for the formalisation, execution, and refinement of linear algebra algorithms in Isabelle/HOL. The Hermite normal form is a well-known canonical matrix analogue of reduced echelon form of matrices over fields, but involving matrices over more general rings, such as Bézout domains. We prove the correctness of this algorithm and formalise the uniqueness of the Hermite normal form of a matrix. The succinctness and clarity of the formalisation validate the usability of the framework. Keywords: Hermite normal form, Bézout domains, parametrised algorithms, Linear Algebra, HOL. 1 Introduction Computer algebra systems are neither perfect nor error-free, and sometimes they return erroneous calculations [18]. Proof assistants, such as Isabelle [36] and Coq [15], allow users to formalise mathematical results, that is, to give a formal proof which is mechanically checked by a computer. Two examples of the success of proof assistants are the formalisation of the four colour theorem by Gonthier [20] and the formal proof of Gödel's incompleteness theorems by Paulson [39]. They are also used in software [33] and hardware verification [28]. Normally, there exists a gap between the performance of a verified program obtained from a proof assistant and a non-verified one. -

3. Gaussian Elimination

3. Gaussian Elimination. The standard Gaussian elimination algorithm takes an m × n matrix M over a field F and applies successive elementary row operations: (i) multiply a row by a field element (the inverse of the left-most non-zero); (ii) subtract a multiple of one row from another (to create a new left-most zero); (iii) exchange two rows (to bring a new non-zero pivot to the top). This produces M′ in reduced row echelon form: any all-zero rows are at the bottom; there is a one at the left-most position in each non-zero row; the number of leading zeroes is strictly increasing.

Comments. Complexity: $O(n^3)$ field operations if m < n. With a little more bookkeeping we can obtain the LUP decomposition: write M = LUP with L lower triangular, U upper triangular, P a permutation matrix. Standard applications: solving a system M·x = b of m linear equations in n − 1 unknowns $x_i$, by applying Gaussian elimination to the augmented matrix M|b; finding the inverse $M^{-1}$ of a square invertible n × n matrix M, by applying Gaussian elimination to the matrix $M|I_n$; finding the determinant det M of a square matrix, for example by using det M = det L det U det P; finding bases for the row space, column space (image) and null space, finding rank or dimension, and dependencies between vectors (minimal polynomials).

Fraction-free Gaussian elimination. When computing over a domain (rather than a field) one encounters problems entirely analogous to those for the Euclidean algorithm: avoiding fractions (and passing to the quotient field) leads to intermediate expression swell. -
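As a rough illustration of the three elementary row operations listed in this excerpt, the following Python sketch reduces a small matrix to reduced row echelon form over the rationals. The function name `rref` and the use of `fractions.Fraction` for exact field arithmetic are assumptions made for this example; they do not come from the excerpted notes.

```python
from fractions import Fraction


def rref(rows):
    """Return the reduced row echelon form of a matrix given as a list of rows.

    Illustrative sketch only: entries are converted to Fraction so that
    all arithmetic is exact arithmetic in the field of rationals.
    """
    M = [[Fraction(x) for x in row] for row in rows]
    m = len(M)
    n = len(M[0]) if M else 0
    pivot_row = 0
    for col in range(n):
        if pivot_row == m:
            break
        # Look for a non-zero entry in this column at or below pivot_row.
        src = next((i for i in range(pivot_row, m) if M[i][col] != 0), None)
        if src is None:
            continue  # no pivot in this column
        # (iii) exchange two rows to bring the pivot to the top.
        M[pivot_row], M[src] = M[src], M[pivot_row]
        # (i) multiply the pivot row by the inverse of its left-most non-zero.
        inv = 1 / M[pivot_row][col]
        M[pivot_row] = [x * inv for x in M[pivot_row]]
        # (ii) subtract multiples of the pivot row from every other row.
        for i in range(m):
            if i != pivot_row and M[i][col] != 0:
                factor = M[i][col]
                M[i] = [a - factor * b for a, b in zip(M[i], M[pivot_row])]
        pivot_row += 1
    return M


# Augmented matrix of the system 2x + 4y = 6, x + 3y = 5.
reduced = rref([[2, 4, 6], [1, 3, 5]])
```

In this usage example the last column of `reduced` holds the solution of the system, which is the "augmented matrix M|b" application mentioned in the excerpt.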

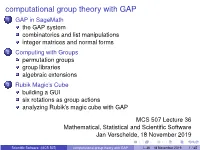

Computational Group Theory With

computational group theory with GAP. Outline: 1 GAP in SageMath (the GAP system; combinatorics and list manipulations; integer matrices and normal forms); 2 Computing with Groups (permutation groups; group libraries; algebraic extensions); 3 Rubik Magic’s Cube (building a GUI; six rotations as group actions; analyzing Rubik’s magic cube with GAP). MCS 507 Lecture 36, Mathematical, Statistical and Scientific Software, Jan Verschelde, 18 November 2019. Scientific Software (MCS 507) computational group theory with GAP L-36 18 November 2019 1/45. the GAP system: GAP stands for Groups, Algorithms and Programming. GAP is free software, under the GNU Public License. Development began at RWTH Aachen (Joachim Neubüser), version 2.4 was released in 1988, 3.1 in 1992. Coordination was transferred to St. Andrews (Steve Linton) in 1997, version 4.1 was released in 1999. Release 4.4 was coordinated in Fort Collins (Alexander Hulpke). We run GAP explicitly in SageMath via (1) the class Gap, do help(gap); or (2) opening a Terminal Session with GAP, type gap_console(); in a SageMath terminal. using -

A Pari/GP Tutorial by Robert B

A Pari/GP Tutorial by Robert B. Ash, Jan. 2007. The Pari/GP home page is located at http://pari.math.u-bordeaux.fr/

1. Pari Types

There are 15 types of elements (numbers, polynomials, matrices etc.) recognized by Pari/GP (henceforth abbreviated GP). They are listed below, along with instructions on how to create an element of the given type. After typing an instruction, hit return to execute. A semicolon at the end of an instruction suppresses the output. To activate the program, type gp, and to quit, type quit or \q

1. Integers: Type the relevant integer, with a plus or minus sign if desired.
2. Rational Numbers: Type a rational number as a fraction a/b, with a and b integers.
3. Real Numbers: Type the number with a decimal point to make sure that it is a real number rather than an integer or a rational number. Alternatively, use scientific notation, for example, 8.46E17. The default precision is 28 significant digits. To change the precision, for example to 32 digits, type \p 32.
4. Complex Numbers: Type a complex number as a + b*I or a + I*b, where a and b are real numbers, rational numbers or integers.
5. Integers Modulo n: To enter n mod m, type Mod(n, m).
6. Polynomials: Type a polynomial in the usual way, but don’t forget that * must be used for multiplication. For example, type x^3 + 3*x^2 - 1/7*x + 8.
7. Rational Functions: Type a ratio P/Q of polynomials P and Q. -

Equivalences for Linearizations of Matrix Polynomials

Equivalences for Linearizations of Matrix Polynomials. Robert M. Corless (1), Leili Rafiee Sevyeri (1), and B. David Saunders (2). (1) David R. Cheriton School of Computer Science, University of Waterloo, Canada; (2) Department of Computer and Information Sciences, University of Delaware. arXiv:2102.09726v1 [math.NA] 19 Feb 2021. Abstract: One useful standard method to compute eigenvalues of matrix polynomials $P(z) \in \mathbb{C}^{n \times n}[z]$ of degree at most $\ell$ in z (denoted of grade $\ell$, for short) is to first transform P(z) to an equivalent linear matrix polynomial $L(z) = zB - A$, called a companion pencil, where A and B are usually of larger dimension than P(z) but L(z) is now only of grade 1 in z. The eigenvalues and eigenvectors of L(z) can be computed numerically by, for instance, the QZ algorithm. The eigenvectors of P(z), including those for infinite eigenvalues, can also be recovered from eigenvectors of L(z) if L(z) is what is called a “strong linearization” of P(z). In this paper we show how to use algorithms for computing the Hermite Normal Form of a companion matrix for a scalar polynomial to direct the discovery of unimodular matrix polynomial cofactors E(z) and F(z) which, via the equation $E(z) L(z) F(z) = \operatorname{diag}(P(z), I_n, \ldots, I_n)$, explicitly show the equivalence of P(z) and L(z). By this method we give new explicit constructions for several linearizations using different polynomial bases. We contrast these new unimodular pairs with those constructed by strict equivalence, some of which are also new to this paper. -

A Tutorial for PARI / GP

A Tutorial for PARI / GP. C. Batut, K. Belabas, D. Bernardi, H. Cohen, M. Olivier. Laboratoire A2X, U.M.R. 9936 du C.N.R.S., Université Bordeaux I, 351 Cours de la Libération, 33405 TALENCE Cedex, FRANCE. e-mail: [email protected] Home Page: http://pari.math.u-bordeaux.fr/ Primary ftp site: ftp://pari.math.u-bordeaux.fr/pub/pari/ Last updated September 17, 2002 (this document distributed with version 2.2.7). Copyright © 2000–2003 The PARI Group. Permission is granted to make and distribute verbatim copies of this manual provided the copyright notice and this permission notice are preserved on all copies. Permission is granted to copy and distribute modified versions, or translations, of this manual under the conditions for verbatim copying, provided also that the entire resulting derived work is distributed under the terms of a permission notice identical to this one. PARI/GP is Copyright © 2000–2003 The PARI Group. PARI/GP is free software; you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation. It is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY WHATSOEVER. This booklet is intended to be a guided tour and a tutorial to the GP calculator. Many examples will be given, but each time a new function is used, the reader should look at the appropriate section in the User’s Manual for detailed explanations. Hence although this chapter can be read independently (for example to get rapidly acquainted with the possibilities of GP without having to read the whole manual), the reader will profit most from it by reading it in conjunction with the reference manual. -

A Fast Las Vegas Algorithm for Computing the Smith Normal Form of a Polynomial Matrix

A Fast Las Vegas Algorithm for Computing the Smith Normal Form of a Polynomial Matrix. Arne Storjohann and George Labahn, Department of Computer Science, University of Waterloo, Waterloo, Ontario, Canada N2L 3G1. Submitted by Richard A. Brualdi. ABSTRACT A Las Vegas probabilistic algorithm is presented that finds the Smith normal form $S \in \mathbb{Q}[x]^{n \times n}$ of a nonsingular input matrix $A \in \mathbb{Z}[x]^{n \times n}$. The algorithm requires an expected number of $\widetilde{O}(n^3 d (d + n^2 \log \|A\|))$ bit operations (where $\|A\|$ bounds the magnitude of all integer coefficients appearing in A and d bounds the degrees of entries of A). In practice, the main cost of the computation is obtaining a non-unimodular triangularization of a polynomial matrix of same dimension and with similar size entries as the input matrix. We show how to accomplish this in $\widetilde{O}(n^5 d (d + \log \|A\|) \log \|A\|)$ bit operations using standard integer, polynomial and matrix arithmetic. These complexity results improve significantly on previous algorithms in both a theoretical and practical sense. 1. INTRODUCTION The Smith normal form is a diagonalization of a matrix over a principal ideal domain. The concept originated with the work of Smith [17] in 1861 for the special case of integer matrices. Applications of the Smith normal form include, for example, solving systems of Diophantine equations over the domain of entries [4], integer programming [9], determining the canonical decomposition of finitely generated abelian groups [8], determining the similarity of two matrices and computing additional normal forms such as Frobenius and Jordan normal forms [5, 12]. LINEAR ALGEBRA AND ITS APPLICATIONS xxx:1–xxx (1993) © Elsevier Science Publishing Co., Inc., 1993, 655 Avenue of the Americas, New York, NY 10010 0024-3795/93/$6.00 -

A Tutorial for Pari/GP

A Tutorial for PARI / GP (version 2.7.0). The PARI Group. Institut de Mathématiques de Bordeaux, UMR 5251 du CNRS, Université Bordeaux 1, 351 Cours de la Libération, F-33405 TALENCE Cedex, FRANCE. e-mail: [email protected] Home Page: http://pari.math.u-bordeaux.fr/ Copyright © 2000–2014 The PARI Group. Permission is granted to make and distribute verbatim copies of this manual provided the copyright notice and this permission notice are preserved on all copies. Permission is granted to copy and distribute modified versions, or translations, of this manual under the conditions for verbatim copying, provided also that the entire resulting derived work is distributed under the terms of a permission notice identical to this one. PARI/GP is Copyright © 2000–2014 The PARI Group. PARI/GP is free software; you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation. It is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY WHATSOEVER. Table of Contents: 1. Greetings!; 2. Warming up; 3. The Remaining PARI Types; 4. Elementary Arithmetic Functions; 5. Performing Linear Algebra; 6. Using Transcendental Functions; 7. Using Numerical Tools; 8. Polynomials; 9. Power series; 10. Working with Elliptic Curves; 11. Working in Quadratic Number Fields; 12. Working in General Number Fields; 12.1. Elements; 12.2. Ideals; 12.3. Class groups and units, bnf; 12.4. Class field theory, bnr; 12.5. -

EML: Linear Algebra Over the Integers

EML: Linear Algebra over the Integers. Joris LIMONIER & Dany ALVES MARQUES. Supervised by: Gabor WIESE & Luca NOTARNICOLA. March-June 2018. Preface: This article is the result of a three-month project about linear algebra over the integers. The programming part was completed using SageMath and its matrix-related features. This topic appeared particularly appealing as it seemed to allow us to find some nice results and tools while being only in our first year. We thought it could bring us some interesting knowledge and useful skills that we would be able to reuse during the rest of our degree. We must say we were not disappointed at all, so we will try to properly share our enjoyment while presenting our work in this article. We wish to thank Prof. Dr. Gabor Wiese and Luca Notarnicola for their guidance throughout the making of this article. Contents: 1 Introduction; 2 A systematic HNF computation; 2.1 Algorithm; 2.2 The code; 2.3 Applications using the HNF; 2.3.1 Linear Diophantine equations; 2.3.2 case Ax=0. 1 Introduction. Definition 1.1 (Hermite Normal Form, HNF). We will say that an $m \times n$ matrix $M = (m_{i,j})$ with integer coefficients is in HNF if there exist $r \le n$ and a strictly increasing map $f$ from $[r+1, n]$ to $[1, m]$ satisfying the following properties: (1) For $r + 1 \le j \le n$, $m_{i,j} = 0$ if $i > f(j)$ and $0 \le m_{f(k),j} < m_{f(k),k}$ if $k < j$.
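Condition (1) of Definition 1.1 can be checked mechanically. The sketch below is a minimal Python illustration, assuming the matrix is given as a list of rows and the map f as a dictionary from column index to row index (both 1-based, as in the definition). The name `satisfies_condition_1` is hypothetical, and the code tests only the part of the definition quoted in this excerpt, not any further conditions the truncated text may go on to state.

```python
def satisfies_condition_1(M, r, f):
    """Check condition (1) of the quoted HNF definition.

    M: list of m rows, each a list of n integers.
    r: integer with 0 <= r <= n; columns r+1..n carry the pivots.
    f: dict mapping each column j in r+1..n to a row index f(j) in 1..m.
    All indices are 1-based to match the definition (an assumption of
    this sketch, not something prescribed by the excerpt).
    """
    m = len(M)
    n = len(M[0]) if M else 0
    cols = list(range(r + 1, n + 1))

    def entry(i, j):
        # 1-based access to the entry m_{i,j}.
        return M[i - 1][j - 1]

    # f must map into [1, m] and be strictly increasing.
    rows = [f[j] for j in cols]
    if any(not 1 <= i <= m for i in rows):
        return False
    if any(a >= b for a, b in zip(rows, rows[1:])):
        return False

    for j in cols:
        # m_{i,j} = 0 whenever i > f(j).
        if any(entry(i, j) != 0 for i in range(f[j] + 1, m + 1)):
            return False
        # 0 <= m_{f(k),j} < m_{f(k),k} whenever k < j.
        for k in range(r + 1, j):
            if not 0 <= entry(f[k], j) < entry(f[k], k):
                return False
    return True


# Small examples with r = 0 and f the identity on columns.
ok = satisfies_condition_1([[2, 1], [0, 3]], 0, {1: 1, 2: 2})
not_reduced = satisfies_condition_1([[2, 5], [0, 3]], 0, {1: 1, 2: 2})
```

Here `ok` is true because the off-diagonal entry 1 lies in the range from 0 up to (but excluding) the pivot 2, while `not_reduced` is false because 5 does not.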