Large-Scale Painting of Photographs by Interactive Optimization



Recommended publications


A Survey of Contemporary Airbrush Realism a Survey

UNDER PRESSURE A SURVEY OF CONTEMPORARY AIRBRUSH REALISM

While conducting research to produce this exhibition, a well-seasoned painter told me that his New York dealer advised him not to tell anyone that he uses airbrush because it could hurt his reputation. I told him it’s time to come out of the closet. Airbrush is a hip medium that has been around for over a century, and is used today to produce a broad range of work, the best of which endures as fine art.

DAVID J. WAGNER, PH.D., CURATOR/TOUR DIRECTOR

AIRBRUSH HISTORY

Francis Stanley, who with his twin brother became famous for the Stanley Steamer (a precursor to the gas-engine automobile), patented a simple “atomizer” airbrush to colorize photographs in 1876. An instrument called the “paint distributor,” which relied on a hand-operated compressor to supply continuous air, was developed in 1879 by Abner Peeler “for the painting of watercolors and other artistic purposes.” Charles and Liberty Walkup of Mt. Morris, Illinois, paid Peeler [...]

[...] re-positioned through the bottom, which improved balance and control. Burdick’s invention was represented by Thayer and Chandler, a Chicago mail order arts and crafts retailer, at the 1892 World Columbian Exposition. Burdick subsequently moved to London a year later and formed the Fountain Brush Company which he renamed in 1900, Aerograph Company, Ltd. Olaus Wold, a Norwegian immigrant who had worked for Thayer and Chandler and did the majority of its airbrush design work, formed his own company in the late 1890’s: The Wold Air Brush Company.

Chapter 21 – Paint and Spray Finish Operations

CHAPTER 21 – PAINT AND SPRAY FINISH OPERATIONS

A. INTRODUCTION
B. CHAPTER SPECIFIC ROLES AND RESPONSIBILITIES
C. HAZARD IDENTIFICATION
D. HAZARD CONTROLS
E. INSPECTIONS AND MAINTENANCE
F. PERSONAL PROTECTIVE EQUIPMENT (PPE) AND PERSONAL HYGIENE
G. TRAINING
H. RECORDS AND REPORTS
I. REFERENCES

Current as of November, 2009

A. Introduction

1. The purpose of this Chapter is to establish fire, safety and employee health requirements for all indoor spray finish to include: water-based, flammable or combustible paints, varnishes, lacquers, glues, or similar substances. Spray operations involving these materials typically occur in a dedicated area, (e.g., paint

View a Portion of This Product

Contents

Introduction
Chapter 1 All about paint
Chapter 2 Brush-painting
Chapter 3 Airbrushing and spray-painting
Chapter 4 Multicolor paint schemes
Chapter 5 Decals and dry transfers
Chapter 6 Weathering with paint
Chapter 7 Weathering with chalk
Chapter 8 Weathering freight cars
Chapter 9 Weathering locomotives
Chapter 10 Structures and figures
List of manufacturers
About the author

Photo 10: This spray booth from Paasche is large enough to hold most models. The exhaust fan and ductwork are out of sight at the rear.

Photo 11: The BearAir Polar Bear (model 1000) is a solid, basic-level compressor. It has a built-in regulator and a moisture trap.

Photo 12: The air regulator allows you to adjust air pressure, and the moisture trap filters out water before it reaches your airbrush.

Photo 13: Cans of propellant are expensive, and it’s difficult to regulate the air flow because of varying pressure as the can is used.

Photo 14: Use a filter when using a siphon cap on a bottle. Stainless-steel filters work well, but they require cleaning after each use.

[...] keeps any water (formed when air is compressed) from reaching your airbrush and model. If you plan to do a lot of painting, consider spending the extra money for a silent compressor. Although more expensive, as the name implies, they are very quiet, making about as much noise as a refrigerator.

Preparation

Start by mixing your paint. It’s important that paint be thin enough to flow easily through the openings in the airbrush. (The chart on page 11 lists recommended mixing ratios and air pressure for various brands of paint.) Shake the bottle or stir the paint

ABC's of Spray Painting

ABC’s of Spray Painting
$10.00 A-2928-A

Table of Contents

Forward
1. Introduction
   Surface Preparation
   Paint Preparation
2. Air Atomizing Spray Guns
   Spray Gun Types
   Part Identification and Function
   Operation
   Maintenance
   Troubleshooting
3. Material Containers
4.

Forward

While this book examines the spray finishing operation and its equipment from many viewpoints, there is still much more to be learned to become truly proficient at spray finishing.

The best way to become proficient at spray finishing is to just do it! Many trade technical and community colleges offer courses in spray finishing, a great way to improve your skills.

Many of the “tricks” of the professional spray finisher involve paints and coatings. The manufacturers of these materials routinely publish complete books on these subjects. These publications are available in specialty paint stores and will provide you with considerable detail. Many of these books also

About this book

This book has been updated several times from “The ABC’s of Spray Equipment,” originally published by The DeVilbiss Company in 1954. It focuses on equipment and techniques for spray finishing.

The format of the original book was question-and-answer. We have retained that format in this edition.

This book is organized around the major components of an air spray system… spray guns, material containers, hose, air control equipment, compressors, spray booths, respirators and a short section on general cleanliness and other sources of information. A thorough understanding of the material in this book - plus a lot of

Lacquer and Lacquering

Date: July 23, 1952
Name: Harold J. Winburn
Position: Student
Institution: Oklahoma A. & M. College
Location: Stillwater, Oklahoma
Title of Study: Lacquer and Lacquering
Number of Pages in Study: 66
Under Direction of What Department: School of Industrial Arts Education and Engineering Shopwork

Scope of Study: This report deals with the history and development of lacquer, its characteristics, application methods, and the equipment necessary for its proper use. Desirable characteristics of a lacquer finish, as well as some of the common faults, are discussed. General information, rather than technical data, concerning the modern finishing product known as "lacquer" is presented. The equipment described does not include all types that are available, but typical equipment used in industry that is suitable for use in the school shop is discussed. A complete glossary of common finishing terms is not included, but some of the terms used in the lacquer industry are defined. Common faults of lacquer finishes, such as blushing, orange peel, bridging, discoloration, sags and runs, and how to overcome them are discussed.

Findings and Conclusions: Lacquer is a very old finishing material, having been in use for more than 2,000 years. Present day lacquers are quite different, in composition and characteristics, from the early lacquers used by craftsmen in the Orient. Within the last thirty years vast improvements have been made in nitrocellulose lacquers, and they have largely replaced other finishes in industry. The automotive industry led the way in making lacquer popular, but in recent years the furniture industry has become a great consumer of the product.

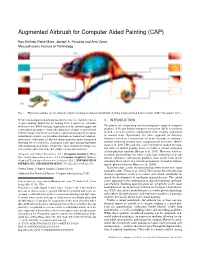

Augmented Airbrush for Computer Aided Painting (CAP)

Augmented Airbrush for Computer Aided Painting (CAP)

Roy Shilkrot, Pattie Maes, Joseph A. Paradiso and Amit Zoran
Massachusetts Institute of Technology

Fig. 1. Watercolor painting (a) of a chameleon figure (b) using the augmented airbrush, showing unique physical details (canvas width 1.5m, painter A.Z.).

We present an augmented airbrush that allows novices to experience the art of spray painting. Inspired by the thriving field of smart tools, our handheld device uses 6DOF tracking; augmentation of the airbrush trigger; and a specialized algorithm to restrict the application of paint to a preselected reference image. Our device acts both as a physical spraying device and as an intelligent assistive tool, providing simultaneous manual and computerized control. Unlike prior art, here the virtual simulation guides the physical rendering (inverse rendering), allowing for a new spray painting experience with singular physical results. We present our novel hardware design, control software, and a user study that verifies our research objectives.

1. INTRODUCTION

The plastic arts of painting and sketching have inspired computer graphics (CG) and human-computer interaction (HCI) researchers to find a creative process independent from lengthy acquisition of manual skills. Specifically, the naïve approach of Paint-by-Numbers served as a cornerstone for many attempts at creating a manual rendering method with computational assistance [Hertzmann et al. 2001]. Beyond that, some researchers studied the manual styles of skilled graphic artists

Friday, February 19Th, 2016 Icons of Female Power: Early Works of Miriam Schapiro by Rebecca Allan Miriam Schapiro: a Visionary

Friday, February 19th, 2016

Icons of Female Power: Early Works of Miriam Schapiro
by Rebecca Allan

Miriam Schapiro: A Visionary at the National Academy Museum and Miriam Schapiro: The California Years, 1967-1975 at Eric Firestone Loft

National Academy: February 4 to May 8, 2016
1083 Fifth Avenue at 89th Street
New York City, (212) 369-4880

Firestone: February 4 to March 6, 2016
4 Great Jones Street, between Broadway and Lafayette Street
New York City, (917) 324-3386

Miriam Schapiro, Big Ox, 1967. Acrylic on canvas, 90 x 108 inches © The Estate of Miriam Schapiro, courtesy of Eric Firestone Gallery

In 1972, the year that Miriam Schapiro and Judy Chicago were creating Womanhouse with their students at Cal Arts, my older cousin Annie taught me a game called Masculine/Feminine. Two players would alternate, pointing to an object and asking, “Masculine, or feminine?” Telephone, driveway, rec-room: masculine. Paint brush, river, rhinestone: feminine. This game was a lot of fun, but it was also strange, because, as a ten-year-old kid, I couldn’t understand why things would have a gender.

Two concurrent exhibitions of the work of Miriam Schapiro (1923–2015) in New York have me playing this game all over again. As I stand in front of her iconic Dollhouse (1972), on view at the National Academy Museum, I think about the broader impact of Schapiro’s legacy, as well as the new knowledge that we can acquire by focusing on a distinct period in the work of this luminary of feminist art.

Miriam Schapiro: The California Years, 1967-1975 inaugurates the Eric Firestone Loft at 4 Great Jones Street, a fourth-floor walk-up that is redolent with the histories of artists including Walter De Maria, Jean-Michel Basquiat, and Keith Haring, who once had studios nearby.

The Airbrush and the Impact of the Conservation Treatment of Airbrushed Canvas Paintings

NORTHUMBRIA UNIVERSITY

An Investigation into the History of the Airbrush and the Impact of the Conservation Treatment of Airbrushed Canvas Paintings

Mohamed Abdeldayem Ahmed Soltan

PhD 2015

A thesis submitted in partial fulfilment of the requirements of the University of Northumbria at Newcastle for the degree of Doctor of Philosophy. Research undertaken in the School of Arts and Social Sciences, April 2015.

Abstract

The research considers whether traditional approaches to easel paintings conservation are appropriate for the treatment of air brush paintings. The objectives were:

- To investigate the aesthetic and technical history of the airbrush
- To investigate surface changes in paint layers
- To investigate the appropriateness of traditional conservation treatments for airbrush paintings and evaluate alternative approaches

Although the first airbrush was introduced in 1883 it was initially rejected by many fine art circles as being too ‘mechanical’. Airbrush techniques have been little discussed in the field of fine art and the field of the conservation of fine art. A mixed methodology was followed for this research, qualitative through literature review carried out in line with the interdisciplinary nature of the research, and quantitative through various approaches including surveys. One survey was carried out in order to establish the use of air brush techniques by artists and its eventual acceptance as a fine art technique. A second survey was conducted to discover how well conservators understood the degradation characteristics of air brush paintings and their appropriate treatment.

ABC's of Spray Finishing

ABC’s of Spray Finishing
$10.00 1-239-C 11/01

Table of Contents

Forward
1. Introduction
   Surface Preparation
   Paint Preparation
2. Air Atomizing Spray Guns
   Spray Gun Types
   Part Identification and Function
   Operation
   Maintenance
   Troubleshooting
3. Material Containers

Forward

While this book examines the spray finishing operation and its equipment from many viewpoints, there is still much more to be learned to become truly proficient at spray finishing.

The best way to become proficient at spray finishing is to just do it! Many trade technical and community colleges offer courses in spray finishing, a great way to improve your skills.

Many of the “tricks” of the professional spray finisher involve paints and coatings. The manufacturers of these materials routinely publish complete books on these subjects. These publications are available in specialty paint stores and will provide you with considerable detail.

About this book

This book has been updated several times from “The ABC’s of Spray Equipment,” originally published by The DeVilbiss Company in 1954. It focuses on equipment and techniques for spray finishing.

The format of the original book was question-and-answer. We have retained that format in this edition.

This book is organized around the major components of an air spray system… spray guns, material containers, hose, air control equipment, compressors, spray booths, respirators and a short section on general cleanliness and other sources of information. A thorough understanding of the material in this book - plus a lot of

Spray Painting Trouble Shooting Guide

SPRAY PAINTING TROUBLE SHOOTING GUIDE

Manufacturers of Quality Coatings
1300 BCCOAT (222 628)
www.bccoatings.com.au

You may sometimes come across problems like those below, which can be caused by anything from the weather to misuse of the product. This troubleshooting guide explains the causes of these problems, how to fix them, and how to prevent them in the future.

| No. | Problem | Alternate term |
|---|---|---|
| 1 | Air Entrapment | Craters |
| 2 | Bleeding | Discoloration |
| 3 | Blistering | Pimples, Bubbles, Bumps |
| 4 | Blushing | Milkiness |
| 5 | Chalking | Fading, Oxidation, Weathering |
| 6 | Colour Mismatch | Wrong colour |
| 7 | Cracking | Checking, Crazing, Splitting, Alligatoring, Crows feet |
| 8 | Dust Contamination | Dirt in finish |
| 9 | Fish eyes | Silicon Contaminations, Cratering |
| 10 | Lifting | Shriveling, Swelling, Raising, Alligatoring, Wrinkling |
| 11 | Loss of Gloss | Hazing, Dulling, Die-back, Matting, Weathering |
| 12 | Mottling | Streaking, Tiger / Zebra Stripes, Floating, Flooding |
| 13 | Orange Peel | Poor Flow, Texture |
| 14 | Peeling | De-lamination, Flaking |
| 15 | Pin Holes | Holes |
| 16 | Runs / Sags | Hangers, Curtains, Signatures |
| 17 | Sanding Marks | Streaked Finish, Sand scratches |
| 18 | Sand Scratches | Swelling, Sinking, Shrinkage |
| 19 | Seediness | Gritty, Dirty, Grainy, Speckled |
| 20 | Shrinkage | Bull eyes, Ringing, Edge Mapping |
| 21 | Slow Film | Slow Dry |
| 22 | Solvent Popping | Boiling, Blowing, Gassing |
| 23 | Wave | Uneven surface |

1 Air Entrapment

Description: Small crater-like openings in or on the paint film. AKA: Craters

Cause: Trapped or buried air pockets in the wet paint film that rise to the surface and “burst”, causing small craters.

Technical Handbook BARIL

Technical handbook BARIL

Contents

Chapter 1: General terms and conditions of Baril Coatings B.V for protective coatings on steel
   1.1 Before blasting
   1.2 During blasting
   1.3 After blasting
   1.4 During paint application
   1.5 Storage
   1.6 Transport
   1.7 Assembly
   1.8 Coating contractor
   1.9 Maintenance projects
   1.10 General
Chapter 2: Supervision & technical support
Chapter 3: Maintenance
Chapter 4: Corrosion
   4.1 Protecting metal
   4.2 Desired life span
   4.3 Influence of climate
   4.4 Moisture
   4.5 Corrosive influences
Chapter 5: Removal of rust, mill scale and contaminants
   5.1 Rust
   5.2 Mill scale
   5.3 Pickling
   5.4 Flame blasting
Chapter 6: Blast cleaning
   6.1 Airblasting
   6.2 Turbine blasting
   6.3 Wet blasting
   6.4 High-pressure water blasting
   6.5 Special blast cleaning methods
   6.6 Blasting standards
   6.7 ISO-8501-1: 1988
Chapter 7: Preliminary treatment standards
   7.1 Scraping and wire-brushing
   7.2 Blast cleaning
Chapter 8: Sanding base surfaces and coatings
   8.1 Why sanding?
   8.2 What type of sanding machine?
   8.3 Particle size of abrasive material?
   8.4 Hand sanding
Chapter 9: Preliminary surface treatment
   9.1 Steel
   9.2 Galvanized steel
   9.3 Metal-sprayed steel
Chapter 10: General information on floor finishing
   10.1 Floor requirements
   10.2 Preliminary treatment of floor
   10.3 Concrete floors
   10.4 Finish
   10.5 Epoxy (EP)
   10.6 Polyurethane (PUR)
Chapter 11: Atmospheric conditions
   11.1 Atmospheric conditions
Chapter 12: Application information
   12.1 General
   12.2 Pneumatic/Conventional (air) spraying
   12.3 Airless spraying

Alternatives to Solvent-Based Painting

Paint It Green: Alternatives to Solvent-Based Painting

Michael Heaney

I. INTRODUCTION

A myriad of technologies are available today that are significantly greener, safer, and less costly than conventional solvent-based spray painting. There is no single greenest painting technology. Because of the high performance demands for surface coatings in terms of appearance, protection and other properties, the best technology depends on the specific application.

Organic paints for original equipment manufacturing (OEM) products, the focus of this paper, account for 30% of the paints and coatings market. OEM paints are distinguished from architectural and maintenance paints by the fact that they are applied at high production volumes and are often thermally cured.

Painting is a multi-step process. To gain optimal corrosion protection and adhesion, paints are usually applied as primer and one or more topcoat layers. This paper focuses primarily on paint application and curing. The cleaning and/or pretreatment steps that precede painting are also a critical part of overall surface coating application, with their own potentially significant environmental impacts. However, this paper does not address these surface preparation steps.

II. EMISSIONS FROM CONVENTIONAL SOLVENT-BASED PAINTING

Solvent-based painting generates several hazardous wastes including:

- volatile organic compound (VOC) air emissions,
- paint sludges,
- waste solvents,
- spent air filters,
- off-spec or left-over paint, particularly with multi-component paints, and
- paint stripping wastes from hangers and other equipment.

Solvent-based painting is one of the largest sources of hazardous wastes. Although the amount of hazardous painting waste is difficult to quantify because it is generated across many industrial sectors, painting results in more hazardous waste than any other process in several major industrial categories including automobile manufacturing.