Search and Strategies in OPL

Recommended publications

On-Line Computing with a Hierarchy of Processors

University of Pennsylvania ScholarlyCommons, Technical Reports (CIS), Department of Computer & Information Science, December 1968. On-Line Computing With a Hierarchy of Processors. Richard P. Morton, University of Pennsylvania. Follow this and additional works at: https://repository.upenn.edu/cis_reports. Recommended Citation: Richard P. Morton, "On-Line Computing With a Hierarchy of Processors", December 1968. University of Pennsylvania Department of Computer and Information Science Technical Report No. MS-CIS-69-13. This paper is posted at ScholarlyCommons: https://repository.upenn.edu/cis_reports/804. For more information, please contact [email protected]. Abstract: Time shared computer systems have been based upon the two techniques of multiprogramming and swapping. Multiprogramming is based on restricting each program to a portion of the total computer memory. Swapping requires considerable overhead time for loading and unloading programs. To alleviate the size restriction due to multiprogramming, segmentation is employed, resulting in fact in vastly increased swapping. A new system architecture is proposed for time shared computing that alleviates the high overhead or program size restriction. It utilizes a hierarchy of processors, where each processor is assigned tasks on the basis of four factors: interactive requirements, frequency of use, execution time, and program length. In order to study the hierarchical approach to system architecture, the Moore School Problem Solving Facility (MSPSF) was built and used. The study of the manner of operation and the reactions of the users clarified and defined the Hierarchy of Processors system architecture. The Moore School Problem Solving Facility was implemented on second generation equipment, the IBM 7040, and therefore it is not possible to adequately compare the efficiency with third generation computers operating in a swapping mode.

Data Definition Language

1 Structured Query Language. SQL, or Structured Query Language, is the most popular declarative language used to work with relational databases. Originally developed at IBM, it has subsequently been standardized by various standards bodies (ANSI, ISO) and extended by various corporations adding their own features (T-SQL, PL/SQL, etc.). There are two primary parts to SQL: the DDL and the DML (& DCL). 2 DDL - Data Definition Language. DDL is a standard subset of SQL that is used to define tables (database structure) and other metadata-related things. The few basic commands include: CREATE DATABASE, CREATE TABLE, DROP TABLE, and ALTER TABLE. There are many other statements, but those are the ones most commonly used. 2.1 CREATE DATABASE. Many database servers allow for the presence of many databases. In order to create a database, a relatively standard command 'CREATE DATABASE' is used. The general format of the command is: CREATE DATABASE <database-name> ; The name can be pretty much anything; usually it shouldn't have spaces (or those spaces have to be properly escaped). Some databases allow hyphens and/or underscores in the name. The name is usually limited in size (some databases limit the name to 8 characters, others to 32; in other words, it depends on what database you use). 2.2 DROP DATABASE. Just like there is a 'create database' there is also a 'drop database', which simply removes the database. Note that it doesn't ask you for confirmation, and once you remove a database, it is gone forever. DROP DATABASE <database-name> ; 2.3 CREATE TABLE. Probably the most common DDL statement is 'CREATE TABLE'.

Origins of Operating Systems OS/360 Martin Grund

Origins of Operating Systems: OS/360. Martin Grund, HPI, June 2006. Table of Contents ● IBM System 360 ● Functional Structure of OS/360 ● Virtual Machine Time Sharing. Welcome to Big Blue. IBM System 360 ● In 1964 IBM announced the IBM-360 family of computers ● All machines, despite their differences, had the same user instruction set ● Different operating systems were available for these machines ● Only midrange and high-end systems ran OS/360 ● IBM introduced the new term "hardware architecture" ● In 1970 IBM announced System 370 with hardware virtual memory support. IBM System 360 ● High-end machines established 32 bit as standard for computers ● Virtual Memory Support: hardware support for dynamic address translation ● Within ten years S/360 achieved standard status ● Flashback prices: ● 1970: $279/MB hard disk ● 1980: $35/MB hard disk | $50.000/MB DRAM. IBM System 360 Specials ● Introduced 8-bit entities ● Introduction of 32- or 64-bit floating point words based on a hexadecimal base ● Variable length strings using a length field in the first byte ● All registers are universal registers, accumulators as well as address registers ● Registers use 32 bit, 24 bit for addressing -> 16MB. IBM S/360 - Pictures

IBM Application System/400 System/38-Compatible COBOL User's Guide and Reference

IBM Application System/400, SC09-1814-00. System/38-Compatible COBOL User's Guide and Reference. Note! Before using this information and the product it supports, be sure to read the general information under "Notices" on page xv. First Edition (June 1994). This edition applies to the System/38-Compatible feature of the IBM* ILE* COBOL/400* licensed program (Program 5763-CB1), Version 3 Release 0 Modification 5, and to all subsequent releases and modifications until otherwise indicated in new editions. Make sure you are using the proper edition for the level of the product. Order publications through your IBM representative or the IBM branch serving your locality. Publications are not stocked at the address given below. A form for readers' comments is provided at the back of this publication. If the form has been removed, you may address your comments to: IBM Canada Ltd. Laboratory, Information Development, 2G/345/1150/TOR, 1150 Eglinton Avenue East, North York, Ontario, Canada M3C1H7. You can also send your comments by facsimile (attention: RCF Coordinator), or you can send your comments electronically to IBM. See "Communicating your Comments to IBM" for a description of the methods. This page immediately precedes the Readers' Comment Form at the back of this publication. When you send information to IBM, you grant IBM a non-exclusive right to use or distribute the information in any way it believes appropriate without incurring any obligation to you. Copyright International Business Machines Corporation 1994. All rights reserved. Note to U.S. Government Users: Documentation related to restricted rights. Use, duplication or disclosure is subject to restrictions set forth in GSA ADP Schedule Contract with IBM Corp.

Preparation of Papers for AIAA Technical Conferences

NASA KSC Internship Final Report: Unit Testing and Remote Display Development. Nicholas Costa, Denison University, Granville, Ohio. Kennedy Space Center, Computer Science, USRA Summer Session, 18/7/2014. Abstract: The Kennedy Space Center is currently undergoing an extremely interesting transitional phase. The final Space Shuttle mission, STS-135, was completed in July of 2011. NASA is now approaching a new era of space exploration. The development of the Orion Multi-Purpose Crew Vehicle (MPCV) and the Space Launch System (SLS) launch vehicle that will launch the Orion are currently in progress. An important part of this transition involves replacing the Launch Processing System (LPS) which was previously used to process and launch Space Shuttles and their associated hardware. NASA is creating the Spaceport Command and Control System (SCCS) to replace the LPS. The SCCS will be much simpler to maintain and improve during the lifetime of the spaceflight program that it will support. The Launch Control System (LCS) is a portion of the SCCS that will be responsible for launching the rockets and spacecraft. The Integrated Launch Operations Applications (ILOA) group of SCCS is responsible for creating displays and scripts, both remote and local, that will be used to monitor and control hardware and systems needed to launch a spacecraft. It is crucial that the software contained within be thoroughly tested to ensure that it functions as intended. Unit tests must be written in Application Control Language (ACL), the scripting language used by LCS.

Digital-Flight-Control-System Software Written in Automated

NASA Technical Memorandum 88313. Digital-Flight-Control-System Software Written in Automated-Engineering-Design Language: A User's Guide of Verification and Validation Tools. Jim Saito, Ames Research Center, Moffett Field, California. January 1987. National Aeronautics and Space Administration, Ames Research Center, Moffett Field, California 94035. CONTENTS: LIST OF SYMBOLS; SUMMARY; INTRODUCTION; DFCSVL OVERVIEW; DFCSVL Environment; Environment Computer; Remote Link; DFCSVL Software; Univac Environment; TESTING; AED V & V TOOL DESCRIPTIONS; Static Tools; -d option: Module Dependencies; -g option: Global Cross Reference; -i option: Interface

Dictionary of Ibm & Computing Terminology 1 8307D01a

DICTIONARY OF IBM & COMPUTING TERMINOLOGY 8307D01A. A. AA (ay-ay) n. Administrative Assistant. An up-and-coming employee serving in a broadening assignment who supports a senior executive by arranging meetings and schedules, drafting and coordinating correspondence, assigning tasks, developing presentations and handling a variety of other administrative responsibilities. The AA’s position is to be distinguished from that of the executive secretary, although the boundary line between the two roles is frequently blurred. access control n. In computer security, the process of ensuring that the resources of a computer system can be accessed only by authorized users in authorized ways. acknowledgment 1. n. The transmission, by a receiver, of acknowledge characters as an affirmative response to a sender. 2. n. An indication that an item sent was received. action plan n. A plan. Project management is never satisfied by just a plan. The only acceptable plans are action plans. Also used to mean an ad hoc short-term scheme for resolving a specific and well defined problem. active program n. Any program that is loaded and ready to be executed. active window n. The window that can receive input from the keyboard. It is distinguishable by the unique color of its title bar and window border. added value 1. n. The features or bells and whistles (see) that distinguish one product from another. 2. n. The additional peripherals, software, support, installation, etc., provided by a dealer or other third party. administrivia n. Any kind of bureaucratic red tape or paperwork, IBM or not, that hinders the accomplishment of one’s objectives or goals.

Red Hat Linux 7.3 the Official Red Hat Linux Reference Guide

Red Hat Linux 7.3: The Official Red Hat Linux Reference Guide. Copyright © 2002 by Red Hat, Inc. Red Hat, Inc., 1801 Varsity Drive, Raleigh NC 27606-2072 USA. Phone: +1 919 754 3700. Phone: 888 733 4281. Fax: +1 919 754 3701. PO Box 13588, Research Triangle Park NC 27709 USA. rhl-rg(EN)-7.3-HTML-RHI (2002-04-05T17:09-0400). This material may be distributed only subject to the terms and conditions set forth in the Open Publication License, V1.0 or later (the latest version is presently available at http://www.opencontent.org/openpub/). Distribution of substantively modified versions of this document is prohibited without the explicit permission of the copyright holder. Distribution of the work or derivative of the work in any standard (paper) book form for commercial purposes is prohibited unless prior permission is obtained from the copyright holder. The admonition graphics (note, tip, and so on) were created by Marianne Pecci <[email protected]>. They may be redistributed with written permission from Marianne Pecci and Red Hat, Inc. Red Hat, Red Hat Network, the Red Hat "Shadow Man" logo, RPM, Maximum RPM, the RPM logo, Linux Library, PowerTools, Linux Undercover, RHmember, RHmember More, Rough Cuts, Rawhide and all Red Hat-based trademarks and logos are trademarks or registered trademarks of Red Hat, Inc. in the United States and other countries. Linux is a registered trademark of Linus Torvalds. Motif and UNIX are registered trademarks of The Open Group.

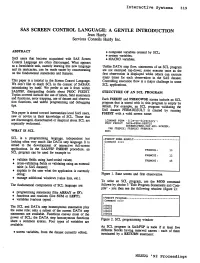

SAS SCREEN CONTROL LANGUAGE: a GENTLE INTRODUCTION Jean Hardy Services Conseils Hardy Inc

SAS SCREEN CONTROL LANGUAGE: A GENTLE INTRODUCTION. Jean Hardy, Services Conseils Hardy Inc. ABSTRACT: SAS users that become acquainted with SAS Screen Control Language are often discouraged. What appears as a formidable task, namely learning this new language and its intricacies, can be made easier by concentrating on the fundamental statements and features. This paper is a tutorial to the Screen Control Language. We don't like to teach SCL in the context of SAS/AF, intimidating by itself. We prefer to see it from within SAS/FSP, disregarding details about PROC FSEDIT. Topics covered include the use of labels, field statements and functions, error trapping, use of dataset and observation functions, and useful programming and debugging tips. This paper is aimed toward intermediate level SAS users, new or novice in their knowledge of SCL. Those that are discouraged, disenchanted or skeptical about SCL are especially welcomed. [...] • computed variables created by SCL; • system variables; • MACRO variables. Unlike DATA step flow, statements of an SCL program are not executed top-down; some execute once as the first observation is displayed while others can execute many times for each observation in the SAS dataset. Controlling execution flow is a major challenge in some SCL applications. STRUCTURE OF AN SCL PROGRAM: Each FSEDIT and FSBROWSE screen includes an SCL program that is stored with it; this program is empty by default. For example, an SCL program validating the SAS dataset PERM.RESULT is created by running FSEDIT with a valid screen name: LIBNAME PERM 'file-or-directory'; PROC FSEDIT DATA=PERM.RESULT

FORTRAN 77 User's Guide

FORTRAN 77 User's Guide. DPS7000/XTA NOVASCALE 7000. Languages: FORTRAN. REFERENCE 47 A2 16UL 03. July 1990. BULL CEDOC, 357 AVENUE PATTON, B.P.20845, 49008 ANGERS CEDEX 01, FRANCE. The following copyright notice protects this book under Copyright laws which prohibit such actions as, but not limited to, copying, distributing, modifying, and making derivative works. Copyright Bull SAS 1990. Printed in France. Suggestions and criticisms concerning the form, content, and presentation of this book are invited. A form is provided at the end of this book for this purpose. To order additional copies of this book or other Bull Technical Publications, you are invited to use the Ordering Form also provided at the end of this book. Trademarks and Acknowledgements: We acknowledge the right of proprietors of trademarks mentioned in this book. Intel® and Itanium® are registered trademarks of Intel Corporation. Windows® and Microsoft® software are registered trademarks of Microsoft Corporation. UNIX® is a registered trademark in the United States of America and other countries licensed exclusively through the Open Group. Linux® is a registered trademark of Linus Torvalds. The information in this document is subject to change without notice. Bull will not be liable for errors contained herein, or for incidental or consequential damages in connection with the use of this material. Preface. MANUAL OBJECTIVES: This manual provides information about FORTRAN 77, as it is implemented under the GCOS 7 operating system. It describes how to compile, link, execute, debug and maintain FORTRAN 77 programs with a maximum of efficiency.

Ed 085 578 Title Institution Spons Agency Pub

DOCUMENT RESUME. ED 085 578; 95; CE 000 768. TITLE: Computers and Careers: A Suggested Curriculum for Grades 9-12. INSTITUTION: Central Texas Coll., Killeen. SPONS AGENCY: Bureau of Adult, Vocational, and Technical Education (DHEW/OE), Washington, D.C. PUB DATE: 73. NOTE: 67p. AVAILABLE FROM: Superintendent of Documents, U.S. Government Printing Office, Washington, D.C. 20402 (Stock Number 1780-01241). EDRS PRICE: MF-$0.65 HC-$3.29. DESCRIPTORS: *Computer Science Education; *Curriculum Guides; Equipment; *High-School Curriculum; Library Services; Programing; *Programing Languages; Technical Education; Units of Study (Subject Fields); Vocational Education. IDENTIFIERS: Keypunching. ABSTRACT: The curriculum guide is designed to help high school administrators, teachers, and others to develop or expand a program to introduce all students to general computer capabilities, to provide certain students with a problem solving tool, or to prepare other students for entry into the job market. Help is given in planning and organizing the program, curriculum outlines for the four high school years are included, and the courses are described briefly. Fourteen instructional units cover advanced COBOL programing, beginning keypunch, business applications development, computer operations, FORTRAN applications programing, introduction to COBOL programing, introduction to computers, introduction to programing, keypunch and data entry, office machines, procedures for organizing information, programing projects, punched card data processing I, and punched card data processing

Application System/400 System/38 Environment Programming Version 3 Publication No

IBM Application System/400, SC41-3735-00. System/38 Environment Programming, Version 3. Take Note! Before using this information and the product it supports, be sure to read the general information under "Notices" on page v. First Edition (September 1994). This edition applies to the licensed program IBM Operating System/400 (Program 5763-SS1), Version 3 Release 1 Modification 0, and to all subsequent releases and modifications until otherwise indicated in new editions. Make sure you are using the proper edition for the level of the product. Order publications through your IBM representative or the IBM branch serving your locality. If you live in the United States, Puerto Rico, or Guam, you can order publications through the IBM Software Manufacturing Company at 800+879-2755. Publications are not stocked at the address given below. A form for readers' comments is provided at the back of this publication. If the form has been removed, you may address your comments to: Attn Department 245, IBM Corporation, 3605 Highway 52 N, Rochester, MN 55901-9986 USA, or you can fax your comments to: United States and Canada: 800+937-3430; Other countries: (+1)+507+253-5192. If you have access to Internet, you can send your comments electronically to [email protected]; IBMMAIL, to ibmmail(usib56rz). When you send information to IBM, you grant IBM a non-exclusive right to use or distribute the information in any way it believes appropriate without incurring any obligation to you or restricting your use of it.