Numerical Differentiation

Recommended publications

Lecture 9: Partial Derivatives

Math S21a: Multivariable calculus, Oliver Knill, Summer 2016. Lecture 9: Partial derivatives

If $f(x,y)$ is a function of two variables, then $\frac{\partial}{\partial x} f(x,y)$ is defined as the derivative of the function $g(x) = f(x,y)$ with respect to $x$, where $y$ is considered a constant. It is called the partial derivative of $f$ with respect to $x$. The partial derivative with respect to $y$ is defined in the same way.

We use the shorthand notation $f_x(x,y) = \frac{\partial}{\partial x} f(x,y)$. For iterated derivatives, the notation is similar: for example $f_{xy} = \frac{\partial}{\partial x} \frac{\partial}{\partial y} f$.

The meaning of $f_x(x_0,y_0)$ is the slope of the graph sliced at $(x_0,y_0)$ in the $x$ direction. The second derivative $f_{xx}$ is a measure of concavity in that direction. The meaning of $f_{xy}$ is the rate of change of the slope if you change the slicing.

The notation for partial derivatives $\partial_x f, \partial_y f$ was introduced by Carl Gustav Jacobi. Before, Josef Lagrange had used the term "partial differences". Partial derivatives $f_x$ and $f_y$ measure the rate of change of the function in the $x$ or $y$ directions. For functions of more variables, the partial derivatives are defined in a similar way.

For $f(x,y) = x^4 - 6x^2y^2 + y^4$, we have $f_x(x,y) = 4x^3 - 12xy^2$, $f_{xx} = 12x^2 - 12y^2$, $f_y(x,y) = -12x^2y + 4y^3$, $f_{yy} = -12x^2 + 12y^2$ and see that $f_{xx} + f_{yy} = 0$. A function which satisfies this equation is also called harmonic. The equation $f_{xx} + f_{yy} = 0$ is an example of a partial differential equation: it is an equation for an unknown function $f(x,y)$ which involves partial derivatives with respect to more than one variable.
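The harmonic example in the excerpt above can be checked numerically. The following is a minimal sketch, assuming central second differences with a hand-picked step `h`; the helper names `second_partial` and `laplacian` are illustrative and do not come from the lecture notes.

```python
def f(x, y):
    # The example function from the excerpt: x^4 - 6 x^2 y^2 + y^4
    return x**4 - 6 * x**2 * y**2 + y**4


def second_partial(g, x, y, axis, h=1e-3):
    """Central second difference of g along axis 0 (x) or axis 1 (y)."""
    if axis == 0:
        return (g(x + h, y) - 2 * g(x, y) + g(x - h, y)) / h**2
    return (g(x, y + h) - 2 * g(x, y) + g(x, y - h)) / h**2


def laplacian(g, x, y, h=1e-3):
    """Approximate f_xx + f_yy; close to zero for a harmonic function."""
    return second_partial(g, x, y, 0, h) + second_partial(g, x, y, 1, h)


if __name__ == "__main__":
    x0, y0 = 1.3, -0.7
    print("f_xx approx:", second_partial(f, x0, y0, 0))
    print("f_xx exact :", 12 * x0**2 - 12 * y0**2)
    print("f_xx + f_yy approx:", laplacian(f, x0, y0))
```

The approximate Laplacian is small but not exactly zero, because the finite difference carries a truncation error of order `h**2` plus floating-point rounding.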

Differentiation Rules (Differential Calculus)

Differentiation Rules (Differential Calculus)

1. Notation
The derivative of a function $f$ with respect to one independent variable (usually $x$ or $t$) is a function that will be denoted by $Df$. Note that $f(x)$ and $(Df)(x)$ are the values of these functions at $x$.

2. Alternate Notations for $(Df)(x)$
For functions $f$ in one variable, $x$, alternate notations are: $D_x f(x)$, $\frac{d}{dx} f(x)$, $\frac{d f(x)}{dx}$, $\frac{df}{dx}(x)$, $f'(x)$, $f^{(1)}(x)$. The "$(x)$" part might be dropped although technically this changes the meaning: $f$ is the name of a function, whereas $f(x)$ is the value of it at $x$. If $y = f(x)$, then $D_x y$, $\frac{dy}{dx}$, $y'$, etc. can be used. If the variable $t$ represents time then $D_t f$ can be written $\dot{f}$. The differential, "$df$", and the change in $f$, "$\Delta f$", are related to the derivative but have special meanings and are never used to indicate ordinary differentiation.

Historical note: Newton used $\dot{y}$, while Leibniz used $\frac{dy}{dx}$. About a century later Lagrange introduced $y'$ and Arbogast introduced the operator notation $D$.

3. Domains
The domain of $Df$ is always a subset of the domain of $f$. The conventional domain of $f$, if $f(x)$ is given by an algebraic expression, is all values of $x$ for which the expression is defined and results in a real number. If $f$ has the conventional domain, then $Df$ usually, but not always, has conventional domain. Exceptions are noted below.

Calculus Terminology

AP Calculus BC: Calculus Terminology

Absolute Convergence; Absolute Maximum; Absolute Minimum; Absolutely Convergent; Acceleration; Alternating Series; Alternating Series Remainder; Alternating Series Test; Analytic Methods; Annulus; Antiderivative of a Function; Approximation by Differentials; Arc Length of a Curve; Area below a Curve; Area between Curves; Area of an Ellipse; Area of a Parabolic Segment; Area under a Curve; Area Using Parametric Equations; Area Using Polar Coordinates

Asymptote; Average Rate of Change; Average Value of a Function; Axis of Rotation; Boundary Value Problem; Bounded Function; Bounded Sequence; Bounds of Integration; Calculus; Cartesian Form; Cavalieri's Principle; Center of Mass Formula; Centroid; Chain Rule; Comparison Test; Concave; Concave Down; Concave Up; Conditional Convergence; Constant Term

Continued Sum; Continuous Function; Continuously Differentiable Function; Converge; Converge Absolutely; Converge Conditionally; Convergence Tests; Convergent Sequence; Convergent Series; Critical Number; Critical Point; Critical Value; Curly d; Curve; Curve Sketching; Cusp; Cylindrical Shell Method; Decreasing Function; Definite Integral; Definite Integral Rules

Degenerate; Del Operator; Deleted Neighborhood; Derivative; Derivative of a Power Series; Derivative Rules; Difference Quotient; Differentiable; Differential

Divergent Series; e; Ellipsoid; End Behavior; Essential Discontinuity; Explicit Differentiation; Explicit Function; Exponential Decay

Function Operations; Fundamental Theorem of Calculus; GLB; Global Maximum; Global Minimum; Golden Spiral; Graphic Methods; Greatest Lower Bound

CHAPTER 3: Derivatives

CHAPTER 3: Derivatives

3.1: Derivatives, Tangent Lines, and Rates of Change
3.2: Derivative Functions and Differentiability
3.3: Techniques of Differentiation
3.4: Derivatives of Trigonometric Functions
3.5: Differentials and Linearization of Functions
3.6: Chain Rule
3.7: Implicit Differentiation
3.8: Related Rates

• Derivatives represent slopes of tangent lines and rates of change (such as velocity).
• In this chapter, we will define derivatives and derivative functions using limits.
• We will develop shortcut techniques for finding derivatives.
• Tangent lines correspond to local linear approximations of functions.
• Implicit differentiation is a technique used in applied related rates problems.

SECTION 3.1: DERIVATIVES, TANGENT LINES, AND RATES OF CHANGE

LEARNING OBJECTIVES
• Relate difference quotients to slopes of secant lines and average rates of change.
• Know, understand, and apply the Limit Definition of the Derivative at a Point.
• Relate derivatives to slopes of tangent lines and instantaneous rates of change.
• Relate opposite reciprocals of derivatives to slopes of normal lines.

PART A: SECANT LINES
• For now, assume that $f$ is a polynomial function of $x$. (We will relax this assumption in Part B.) Assume that $a$ is a constant.
• Temporarily fix an arbitrary real value of $x$. (By "arbitrary," we mean that any real value will do.) Later, instead of thinking of $x$ as a fixed (or single) value, we will think of it as a "moving" or "varying" variable that can take on different values.

The secant line to the graph of $f$ on the interval $[a, x]$, where $a < x$, is the line that passes through the points $(a, f(a))$ and $(x, f(x))$.

Antiderivatives

4.8 Antiderivatives

We have studied how to find the derivative of a function. However, many problems require that we recover a function from its known derivative (from its known rate of change). For instance, we may know the velocity function of an object falling from an initial height and need to know its height at any time over some period. More generally, we want to find a function $F$ from its derivative $f$. If such a function $F$ exists, it is called an antiderivative of $f$.

Finding Antiderivatives

DEFINITION (Antiderivative): A function $F$ is an antiderivative of $f$ on an interval $I$ if $F'(x) = f(x)$ for all $x$ in $I$.

The process of recovering a function $F(x)$ from its derivative $f(x)$ is called antidifferentiation. We use capital letters such as $F$ to represent an antiderivative of a function $f$, $G$ to represent an antiderivative of $g$, and so forth.

EXAMPLE 1: Finding Antiderivatives
Find an antiderivative for each of the following functions.
(a) $f(x) = 2x$
(b) $g(x) = \cos x$
(c) $h(x) = 2x + \cos x$

Solution
(a) $F(x) = x^2$
(b) $G(x) = \sin x$
(c) $H(x) = x^2 + \sin x$

Each answer can be checked by differentiating. The derivative of $F(x) = x^2$ is $2x$. The derivative of $G(x) = \sin x$ is $\cos x$ and the derivative of $H(x) = x^2 + \sin x$ is $2x + \cos x$. The function $F(x) = x^2$ is not the only function whose derivative is $2x$. The function $x^2 + 1$ has the same derivative.

Review of Differentiation and Integration

Schreyer, Fall 2018. Review of Differentiation and Integration for Ordinary Differential Equations

In this course you will be expected to be able to differentiate and integrate quickly and accurately. Many students take this course after having taken their previous course many years ago, at another institution where certain topics may have been omitted, or just feel uncomfortable with particular techniques. Because understanding this material is so important to being successful in this course, we have put together this brief review packet. In this packet you will find sample questions and a brief discussion of each topic. If you find the material in this pamphlet is not sufficient for you, it may be necessary for you to use additional resources, such as a calculus textbook or online materials. Because this is considered prerequisite material, it is ultimately your responsibility to learn it. The topics to be covered include Differentiation and Integration.

1 Differentiation

Exercises:
1. Find the derivative of $y = x^3 \sin(x)$.
2. Find the derivative of $y = \frac{\ln(x)}{\cos(x)}$.
3. Find the derivative of $y = \ln(\sin(e^{2x}))$.

Discussion: It is expected that you know, without looking at a table, the following differentiation rules:

$\frac{d}{dx}\left[(kx)^n\right] = kn(kx)^{n-1}$ (1)
$\frac{d}{dx}\left[e^{kx}\right] = ke^{kx}$ (2)
$\frac{d}{dx}\left[\ln(kx)\right] = \frac{1}{x}$ (3)
$\frac{d}{dx}\left[\sin(kx)\right] = k\cos(kx)$ (4)
$\frac{d}{dx}\left[\cos(kx)\right] = -k\sin(kx)$ (5)
$\frac{d}{dx}\left[uv\right] = u'v + uv'$ (6)
$\frac{d}{dx}\left[\frac{u}{v}\right] = \frac{u'v - uv'}{v^2}$ (7)
$\frac{d}{dx}\left[u(v(x))\right] = u'(v)\,v'(x)$ (8)

We put the constant $k$ into (1) - (5) because a very common mistake is to get the constant factor wrong when computing $\frac{d}{dx} e^{2x}$ (the correct answer is $2e^{2x}$).
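Rules (2) and (5) above, where the constant $k$ is easy to lose, can be sanity-checked against a numerical derivative. This is a minimal sketch, assuming a central difference with step `h = 1e-5`; the helper `central_diff` and the sample values of `k` and `x` are illustrative choices, not part of the review packet.

```python
import math


def central_diff(func, x, h=1e-5):
    """Central difference approximation of func'(x)."""
    return (func(x + h) - func(x - h)) / (2 * h)


k, x = 2.0, 0.4

# Rule (2): d/dx e^{kx} = k e^{kx}
exp_numeric = central_diff(lambda t: math.exp(k * t), x)
exp_rule = k * math.exp(k * x)

# Rule (5): d/dx cos(kx) = -k sin(kx)
cos_numeric = central_diff(lambda t: math.cos(k * t), x)
cos_rule = -k * math.sin(k * x)

if __name__ == "__main__":
    print("exp:", exp_numeric, "vs rule", exp_rule)
    print("cos:", cos_numeric, "vs rule", cos_rule)
    # Dropping the factor k gives a visibly different number:
    print("exp without k:", math.exp(k * x))
```

A check like this does not prove a rule, but a mismatch in the first few digits is a quick signal that a factor of $k$ has been dropped.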

Just the Definitions, Theorem Statements, Proofs On

MATH 3333: INTERMEDIATE ANALYSIS, BLECHER NOTES

Abbreviated notes version for Test 3: just the definitions, theorem statements, proofs on the list. I may possibly have made a mistake, so check it. Also, this is not intended as a REPLACEMENT for your classnotes; the classnotes have lots of other things that you may need for your understanding, like worked examples.

6. The derivative

6.1. Differentiation rules.

Definition: Let $f : (a,b) \to \mathbb{R}$ and $c \in (a,b)$. If the limit $\lim_{x \to c} \frac{f(x) - f(c)}{x - c}$ exists and is finite, then we say that $f$ is differentiable at $c$, and we write this limit as $f'(c)$ or $\frac{df}{dx}(c)$. This is the derivative of $f$ at $c$, and also obviously equals $\lim_{h \to 0} \frac{f(c+h) - f(c)}{h}$ by setting $x = c + h$ or $h = x - c$. If $f$ is differentiable at every point in $(a,b)$, then we say that $f$ is differentiable on $(a,b)$.

Theorem 6.1. If $f : (a,b) \to \mathbb{R}$ is differentiable at a point $c \in (a,b)$, then $f$ is continuous at $c$.

Proof. If $f$ is differentiable at $c$ then
$f(x) = \frac{f(x) - f(c)}{x - c}(x - c) + f(c) \to f'(c) \cdot 0 + f(c) = f(c)$
as $x \to c$. So $f$ is continuous at $c$.

Theorem 6.2. (Calculus I differentiation laws) If $f, g : (a,b) \to \mathbb{R}$ are differentiable at a point $c \in (a,b)$, then
(1) $f(x) + g(x)$ is differentiable at $c$ and $(f + g)'(c) = f'(c) + g'(c)$.
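The limit in the definition above can be illustrated by evaluating the difference quotient for a shrinking sequence of $h$. This is a minimal sketch, assuming $f = \sin$ and $c = 1$ as an arbitrary test case; the function name `difference_quotient` is illustrative.

```python
import math


def difference_quotient(f, c, h):
    """(f(c + h) - f(c)) / h, the quotient whose limit as h -> 0 defines f'(c)."""
    return (f(c + h) - f(c)) / h


c = 1.0
exact = math.cos(c)  # known derivative of sin at c

# Errors of the quotient for h = 1e-1, 1e-2, ..., 1e-5
errors = [
    abs(difference_quotient(math.sin, c, 10.0 ** -k) - exact)
    for k in range(1, 6)
]

if __name__ == "__main__":
    for k, err in enumerate(errors, start=1):
        print(f"h = 1e-{k}: error = {err:.3e}")
```

In this range the error shrinks roughly in proportion to $h$. In floating-point arithmetic the trend does not continue indefinitely: for very small $h$ the subtraction $f(c+h) - f(c)$ loses significant digits and the error grows again.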

MATH 162: Calculus II Differentiation

MATH 162: Calculus II. Framework for Mon., Jan. 29. Review of Differentiation and Integration

Differentiation

Definition of derivative $f'(x)$:
$\lim_{h \to 0} \frac{f(x+h) - f(x)}{h}$ or $\lim_{y \to x} \frac{f(y) - f(x)}{y - x}$.

Differentiation rules:

1. Sum/Difference rule: If $f$, $g$ are differentiable at $x_0$, then
$(f \pm g)'(x_0) = f'(x_0) \pm g'(x_0)$.

2. Product rule: If $f$, $g$ are differentiable at $x_0$, then
$(fg)'(x_0) = f'(x_0)g(x_0) + f(x_0)g'(x_0)$.

3. Quotient rule: If $f$, $g$ are differentiable at $x_0$, and $g(x_0) \neq 0$, then
$\left(\frac{f}{g}\right)'(x_0) = \frac{f'(x_0)g(x_0) - f(x_0)g'(x_0)}{[g(x_0)]^2}$.

4. Chain rule: If $g$ is differentiable at $x_0$, and $f$ is differentiable at $g(x_0)$, then
$(f \circ g)'(x_0) = f'(g(x_0))\,g'(x_0)$.
This rule may also be expressed as
$\left.\frac{dy}{dx}\right|_{x=x_0} = \left.\frac{dy}{du}\right|_{u=u(x_0)} \left.\frac{du}{dx}\right|_{x=x_0}$.

Implicit differentiation is a consequence of the chain rule. For instance, if $y$ is really dependent upon $x$ (i.e., $y = y(x)$), and if $u = y^3$, then
$\frac{d}{dx}(y^3) = \frac{du}{dx} = \frac{du}{dy}\frac{dy}{dx} = \frac{d}{dy}(y^3)\,y'(x) = 3y^2 y'$.

Practice: Find $\frac{d}{dx}\frac{x}{y}$, $\frac{d}{dx}\left(x^2\sqrt{y}\right)$, and $\frac{d}{dx}\left[y\cos(xy)\right]$.

Integration

The definite integral
• the area problem
• Riemann sums
• definition

Fundamental Theorem of Calculus:
I: Suppose $f$ is continuous on $[a, b]$.

ATMS 310 Math Review Differential Calculus the First Derivative of Y = F(X)

ATMS 310 Math Review

Differential Calculus

The first derivative of $y = f(x)$ is $\frac{dy}{dx} = f'(x)$.
The second derivative of $y = f(x)$ is $\frac{d^2y}{dx^2} = f''(x)$.

Some differentiation rules:
$\frac{d(y^n)}{dx} = n y^{n-1} \frac{dy}{dx}$
$\frac{d(uv)}{dx} = u\frac{dv}{dx} + v\frac{du}{dx}$
$\frac{d}{dx}\left(\frac{u}{v}\right) = \frac{v\frac{du}{dx} - u\frac{dv}{dx}}{v^2}$
Chain Rule: If $y = f(z)$ and $z = g(x)$, then $\frac{dy}{dx} = \frac{dy}{dz} \cdot \frac{dz}{dx}$.

Total vs. Partial Derivatives

The rate of change of something following a fluid element (e.g. change in temperature in the atmosphere) is called the Lagrangian rate of change: $d/dt$ or $D/Dt$.

The rate of change of something at a fixed point is called the Eulerian (pronounced oy-LAYER-ian) rate of change: $\partial/\partial t$, $\partial/\partial x$, $\partial/\partial y$, $\partial/\partial z$.

The total rate of change of a variable is the sum of the local rate of change plus the 3-dimensional advection of that variable:

$\frac{d(\,)}{dt} = \underbrace{\frac{\partial(\,)}{\partial t}}_{(1)} + \underbrace{u\frac{\partial(\,)}{\partial x}}_{(2)} + \underbrace{v\frac{\partial(\,)}{\partial y}}_{(3)} + \underbrace{w\frac{\partial(\,)}{\partial z}}_{(4)}$

where (1) is the Eulerian rate of change, and terms (2) - (4) are the 3-dimensional advection of the variable ($u$ = zonal wind, $v$ = meridional wind, $w$ = vertical wind).

Vectors

A scalar only has a magnitude (e.g. temperature). A vector has magnitude and direction (e.g. wind). Vectors are usually represented in bold font. The wind vector is specified as $\mathbf{V}$ or sometimes as $\vec{V}$. $\mathbf{i}$, $\mathbf{j}$, and $\mathbf{k}$ are known as unit vectors. They have a magnitude of 1 and point in the $x$ ($\mathbf{i}$), $y$ ($\mathbf{j}$), and $z$ ($\mathbf{k}$) directions.

Efficient Computation of Sparse Higher Derivative Tensors

Efficient Computation of Sparse Higher Derivative Tensors

Jens Deussen and Uwe Naumann, RWTH Aachen University, Software and Tools for Computational Engineering, Aachen, Germany

Abstract. The computation of higher derivative tensors is expensive even for adjoint algorithmic differentiation methods. In this work we introduce methods to exploit the symmetry and the sparsity structure of higher derivatives to considerably improve the efficiency of their computation. The proposed methods apply coloring algorithms to two-dimensional compressed slices of the derivative tensors. The presented work is a step towards feasibility of higher-order methods which might benefit numerical simulations in numerous applications of computational science and engineering.

Keywords: Adjoints · Algorithmic Differentiation · Coloring Algorithm · Higher Derivative Tensors · Recursive Coloring · Sparsity.

1 Introduction

The preferred numerical method to compute derivatives of a computer program is Algorithmic Differentiation (AD) [1,2]. This technique produces exact derivatives with machine accuracy up to an arbitrary order by exploiting elemental symbolic differentiation rules and the chain rule. AD distinguishes between two basic modes: the forward mode and the reverse mode. The forward mode builds a directional derivative (also: tangent) code for the computation of Jacobians at a cost proportional to the number of input parameters. Similarly, the reverse mode builds an adjoint version of the program, but it can compute the Jacobian at a cost that is proportional to the number of outputs. For that reason, the adjoint method is advantageous for problems with a small set of output parameters, as occurs frequently in many fields of computational science and engineering.
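The forward (tangent) mode described in the excerpt above can be sketched with dual numbers. This is a toy illustration under stated assumptions, not the authors' tool: the class `Dual`, the helpers `_lift`, `sin`, and `jacobian_forward`, and the test function `example` are all hypothetical names introduced here, and only addition, multiplication, and sine are supported.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Dual:
    """A value together with its directional derivative (tangent)."""
    val: float
    dot: float = 0.0

    def __add__(self, other):
        other = _lift(other)
        return Dual(self.val + other.val, self.dot + other.dot)

    __radd__ = __add__

    def __mul__(self, other):
        other = _lift(other)
        # Product rule applied to the tangent component
        return Dual(self.val * other.val,
                    self.dot * other.val + self.val * other.dot)

    __rmul__ = __mul__


def _lift(x):
    """Treat plain numbers as constants (zero tangent)."""
    return x if isinstance(x, Dual) else Dual(float(x))


def sin(x):
    # Chain rule: d/dt sin(x(t)) = cos(x) * x'
    return Dual(math.sin(x.val), math.cos(x.val) * x.dot)


def jacobian_forward(func, point):
    """One tangent sweep per input, so the cost grows with the number of inputs."""
    columns = []
    for i in range(len(point)):
        seeded = [Dual(p, 1.0 if j == i else 0.0) for j, p in enumerate(point)]
        columns.append([out.dot for out in func(seeded)])
    # Each sweep yields one Jacobian column; transpose to rows (one per output)
    return [list(row) for row in zip(*columns)]


def example(v):
    x, y = v
    return [x * y + sin(x), 3 * x + y * y]


if __name__ == "__main__":
    print(jacobian_forward(example, [0.5, 2.0]))
```

The loop in `jacobian_forward` makes the cost statement concrete: a function with two inputs needs two sweeps regardless of how many outputs it has. A reverse-mode implementation would instead need one sweep per output, which this sketch does not attempt.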

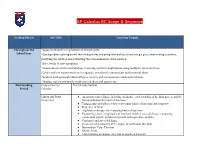

AP Calculus BC Scope & Sequence

AP Calculus BC Scope & Sequence

Grading Period: Throughout the School Year
Learning Targets:
* Apply mathematics to problems in everyday life
* Use a problem-solving model that incorporates analyzing information, formulating a plan, determining a solution, justifying the solution and evaluating the reasonableness of the solution
* Select tools to solve problems
* Communicate mathematical ideas, reasoning and their implications using multiple representations
* Create and use representations to organize, record and communicate mathematical ideas
* Analyze mathematical relationships to connect and communicate mathematical ideas
* Display, explain and justify mathematical ideas and arguments

Grading Period: First Grading Period

Unit Title: Preparation for Calculus
● Pre Calculus Review

Unit Title: Limits and Their Properties
● An introduction to limits, including an intuitive understanding of the limit process and the formal definition for limits of functions
● Using graphs and tables of data to determine limits of functions and sequences
● Properties of limits
● Algebraic techniques for evaluating limits of functions
● Comparing relative magnitudes of functions and their rates of change (comparing exponential growth, polynomial growth and logarithmic growth)
● Continuity and one-sided limits
● Geometric understanding of the graphs of continuous functions
● Intermediate Value Theorem
● Infinite limits
● Understanding asymptotes in terms of graphical behavior
● Using limits to find the asymptotes of a function, vertical and horizontal

Unit Title: Differentiation
● Tangent line to a curve and

Review of Differentiation and Integration Rules from Calculus I and II for Ordinary Differential Equations, 3301 General Notatio

Review of differentiation and integration rules from Calculus I and II for Ordinary Differential Equations, 3301

General Notation:
$a, b, m, n, C$ are non-specific constants, independent of variables
$e, \pi$ are special constants: $e = 2.71828\cdots$, $\pi = 3.14159\cdots$
$f, g, u, v, F$ are functions
$f^n(x)$ usually means $[f(x)]^n$, but $f^{-1}(x)$ usually means the inverse function of $f$
$a(x + y)$ means $a$ times $x + y$, but $f(x + y)$ means $f$ evaluated at $x + y$
$fg$ means function $f$ times function $g$, but $f(g)$ means the output of $g$ is the input of $f$
$t, x, y$ are variables; typically $t$ is used for time and $x$ for position, $y$ is position or output
$'$ and $''$ are prime (Lagrange) notations for first and second derivatives.
Leibniz notations for first and second derivatives are $\frac{d}{dx}$ and $\frac{d^2}{dx^2}$, or $\frac{d}{dt}$ and $\frac{d^2}{dt^2}$
The differential of $x$ is shown by $dx$ or $\Delta x$ or $h$
$f'(x) = \lim_{\Delta x \to 0} \frac{f(x + \Delta x) - f(x)}{\Delta x}$; the derivative of $f$ shows the slope of the tangent line, rise over run, for the function $y = f(x)$ at $x$

General differentiation rules:
1a- Derivative of a variable with respect to itself is 1: $\frac{dt}{dt} = 1$ or $\frac{dx}{dx} = 1$.
1b- Derivative of a constant is zero.
2- Linearity rule: $(af + bg)' = af' + bg'$
3- Product rule: $(fg)' = f'g + fg'$
4- Quotient rule: $\left(\frac{f}{g}\right)' = \frac{f'g - fg'}{g^2}$
5- Power rule: $(f^n)' = nf^{n-1}f'$
6- Chain rule: $(f(g(u)))' = f'(g(u))\,g'(u)\,u'$
7- Logarithmic rule: $(f)' = [e^{\ln f}]'$
8- PPQ rule: $(f^n g^m)' = f^{n-1}g^{m-1}(nf'g + mfg')$, combines power, product and quotient
9- PC rule: $(f^n(g))' = nf^{n-1}(g)\,f'(g)\,g'$, combines power and chain rules
10- Golden rule: Last algebra action specifies the first differentiation rule to
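The PPQ rule (rule 8) is less familiar than the others, so a quick numerical check may help. This is a minimal sketch, assuming the arbitrary choices $f = \sin$, $g = \exp$, $n = 3$, $m = 2$ and a central difference with step `h = 1e-5`; the names `central_diff`, `product_power`, and `ppq_rule` are illustrative and not part of the review sheet.

```python
import math


def central_diff(func, x, h=1e-5):
    """Central difference approximation of func'(x)."""
    return (func(x + h) - func(x - h)) / (2 * h)


# Arbitrary test case (assumption): f = sin, g = exp, n = 3, m = 2
n, m = 3, 2
f, df = math.sin, math.cos
g, dg = math.exp, math.exp


def product_power(x):
    """The function f^n * g^m whose derivative the PPQ rule gives."""
    return f(x) ** n * g(x) ** m


def ppq_rule(x):
    """Right-hand side of rule 8: f^(n-1) g^(m-1) (n f' g + m f g')."""
    return (f(x) ** (n - 1) * g(x) ** (m - 1)
            * (n * df(x) * g(x) + m * f(x) * dg(x)))


if __name__ == "__main__":
    x0 = 0.7
    print("numerical:", central_diff(product_power, x0))
    print("PPQ rule :", ppq_rule(x0))
```

Setting $m$ to a negative value turns the product into a quotient, which is why the rule is described as combining the power, product and quotient rules.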