The Goals of the Generative Enterprise ROBERT FREIDIN Princeton

Recommended publications

-

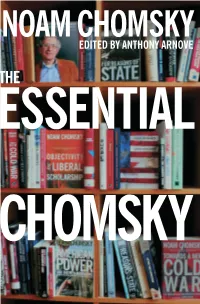

Essential Chomsky

CURRENT AFFAIRS. $19.95 U.S. THE ESSENTIAL CHOMSKY. NOAM CHOMSKY. EDITED BY ANTHONY ARNOVE. "Chomsky is one of a small band of individuals fighting a whole industry. And that makes him not only brilliant, but heroic." (Arundhati Roy) "Noam Chomsky is one of the most significant challengers of unjust power and delusions; he goes against every assumption about American altruism and humanitarianism." (Edward W. Said) "Better than anyone else now writing, Chomsky combines indignation with insight, erudition with moral passion. That is a difficult achievement, and an encouraging one." (In These Times) "For nearly thirty years now, Noam Chomsky has parsed the main proposition of American power (what they do is aggression, what we do upholds freedom) with encyclopedic attention to detail and an unflagging sense of outrage." (Utne Reader) "One of the West’s most influential intellectuals in the cause of peace." (The Independent) "Chomsky is a global phenomenon . . . perhaps the most widely read voice on foreign policy on the planet." (The New York Times Book Review) "[Chomsky] continues to challenge our assumptions long after other critics have gone to bed. He has become the foremost gadfly of our national conscience." (Christopher Lehmann-Haupt, The New York Times) "Chomsky’s fierce talent proves once more that human beings are not condemned to become commodities." (Eduardo Galeano) One of the world’s most prominent public intellectuals, Noam Chomsky has, in more than fifty years of writing on politics, philosophy, and language, revolutionized modern linguistics and established himself as one of the most original and wide-ranging political and social critics of our time. NOAM CHOMSKY is Institute Professor of linguistics at MIT and the author of numerous books, including For Reasons of State, American Power and the New Mandarins, Understanding Power, The Chomsky-Foucault Debate: On Human Nature, On Language, Objectivity and Liberal

On the Irrelevance of Transformational Grammar to Second Language Pedagogy

John T. Lamendella, The University of Michigan. Many scholars view transformational grammar as an attempt to represent the structure of linguistic knowledge in the mind and seek to apply transformational descriptions of languages to the development of second language teaching materials. It will be claimed in this paper that it is a mistake to look to transformational grammar or any other theory of linguistic description to provide the theoretical basis for either second language pedagogy or a theory of language acquisition. One may well wish to describe the abstract or logical structure of a language by constructing a transformational grammar which generates the set of sentences identified with that language. However, this attempt should not be confused with an attempt to understand the cognitive structures and processes involved in knowing or using a language. It is a cognitive theory of language within the field of psycholinguistics rather than a theory of linguistic description which should underlie language teaching materials. A great deal of effort has been expended in the attempt to demonstrate the potential contributions of the field of descriptive linguistics to the teaching of second languages and, since the theory of transformational grammar has become the dominant theory in the field of linguistics, it is not surprising that applied linguists have sought to apply transformational grammar to gain new insights into the teaching of second languages. It will be claimed in this paper that it is a mistake to look to transformational grammar or any other theory of linguistic description to provide the theoretical basis for either second language pedagogy or a theory of language acquisition.

1 A Realistic Transformational Grammar

Joan Bresnan. The Realization Problem. More than ten years ago Noam Chomsky expressed a fundamental assumption of transformational grammar: "A reasonable model of language use will incorporate, as a basic component, the generative grammar that expresses the speaker-hearer's knowledge of the language." This assumption is fundamental in that it defines basic research objectives for transformational grammar: to characterize the grammar that is to represent the language user's knowledge of language, and to specify the relation between the grammar and the model of language use into which the grammar is to be incorporated as a basic component. We may call these two research objectives the grammatical characterization problem and the grammatical realization problem. In the past ten years linguistic research has been devoted almost exclusively to the characterization problem; the crucial question posed by the realization problem has been neglected: How would a reasonable model of language use incorporate a transformational grammar? If we go back to the context in which Chomsky expressed this assumption about the relation of grammar to language use, we find that it is couched within an admonition: "A generative grammar is not a model for a speaker or a hearer. No doubt, a reasonable model of language use will incorporate, as a basic component, the generative grammar that expresses speaker-hearer's knowledge of the language; but this generative grammar does not, in itself, prescribe the character or functioning of a perceptual model or a model of speech-production" (1965, p. 9). In retrospect, this caution appears to have been justified by the rather pessimistic conclusions that have since been drawn in (Note: This chapter discusses research in progress by the author, which is likely to be modified and refined.)

Psycholinguistics

11/09/2013. Psycholinguistics. What do these activities have in common? What kind of process is involved in producing and understanding language? Questions: What is psycholinguistics? What are the main topics of psycholinguistics? 9.1 Introduction. Psycholinguistics is the study of language-processing mechanisms. It deals with the mental processes a person uses in producing and understanding language. It is concerned with the relationship between language and the human mind, for example, how word, sentence, and discourse meaning are represented and computed in the mind. As the name suggests, it is a subject which links psychology and linguistics. Psycholinguistics is interdisciplinary in nature and is studied by people in a variety of fields, such as psychology, cognitive science, and linguistics. It is an area of study which draws insights from linguistics and psychology and focuses upon the comprehension and production of language. The scope of psycholinguistics. The common aim of psycholinguists is to find out the structures and processes which underlie a human’s ability to speak and understand language. Psycholinguists are not necessarily interested in language interaction between people. They are trying above all to probe into what is happening within the individual. At its heart, psycholinguistic work consists of two questions: What knowledge of language is needed for us to use language? What processes are involved in the use of language? The “knowledge” question. Four broad areas of language knowledge: Semantics deals with the meanings of sentences and words.

Psychology, Meaning Making and the Study of Worldviews: Beyond Religion and Non-Religion

Ann Taves, University of California, Santa Barbara; Egil Asprem, Stockholm University; Elliott Ihm, University of California, Santa Barbara. Abstract: To get beyond the solely negative identities signaled by atheism and agnosticism, we have to conceptualize an object of study that includes religions and non-religions. We advocate a shift from “religions” to “worldviews” and define worldviews in terms of the human ability to ask and reflect on “big questions” ([BQs], e.g., what exists? how should we live?). From a worldviews perspective, atheism, agnosticism, and theism are competing claims about one feature of reality and can be combined with various answers to the BQs to generate a wide range of worldviews. To lay a foundation for the multidisciplinary study of worldviews that includes psychology and other sciences, we ground them in humans’ evolved world-making capacities. Conceptualizing worldviews in this way allows us to identify, refine, and connect concepts that are appropriate to different levels of analysis. We argue that the language of enacted and articulated worldviews (for humans) and worldmaking and ways of life (for humans and other animals) is appropriate at the level of persons or organisms and the language of sense making, schemas, and meaning frameworks is appropriate at the cognitive level (for humans and other animals). Viewing the meaning making processes that enable humans to generate worldviews from an evolutionary perspective allows us to raise new questions for psychology with particular relevance for the study of nonreligious worldviews. Keywords: worldviews, meaning making, religion, nonreligion. Acknowledgments: The authors would like to thank Raymond F.

The Three Meanings of Meaning in Life: Distinguishing Coherence, Purpose, and Significance

The Journal of Positive Psychology. ISSN: 1743-9760 (Print) 1743-9779 (Online). Journal homepage: http://www.tandfonline.com/loi/rpos20. To cite this article: Frank Martela & Michael F. Steger (2016) The three meanings of meaning in life: Distinguishing coherence, purpose, and significance, The Journal of Positive Psychology, 11:5, 531-545, DOI: 10.1080/17439760.2015.1137623. To link to this article: http://dx.doi.org/10.1080/17439760.2015.1137623. Published online: 27 Jan 2016. Frank Martela (Faculty of Theology, University of Helsinki, P.O. Box 4, Helsinki 00014, Finland) and Michael F. Steger (Department of Psychology, Colorado State University, 1876 Campus Delivery, Fort Collins, CO 80523-1876, USA; School of Behavioural Sciences, North-West University, Vanderbijlpark, South Africa). (Received 25 June 2015; accepted 3 December 2015) Despite growing interest in meaning in life, many have voiced their concern over the conceptual refinement of the construct itself. Researchers seem to have two main ways to understand what meaning in life means: coherence and purpose, with a third way, significance, gaining increasing attention.

A Naturalist Reconstruction of Minimalist and Evolutionary Biolinguistics

Hiroki Narita & Koji Fujita. Kinsella & Marcus (2009; K&M) argue that considerations of biological evolution invalidate the picture of optimal language design put forward under the rubric of the minimalist program (Chomsky 1993 et seq.), but in this article it will be pointed out that K&M’s objection is undermined by (i) their misunderstanding of minimalism as imposing an aprioristic presumption of optimality and (ii) their failure to discuss the third factor of language design. It is proposed that the essence of K&M’s suggestion be reconstructed as the sound warning that one should refrain from any preconceptions about the object of inquiry, to which K&M commit themselves based on their biased view of evolution. A different reflection will be cast on the current minimalist literature, arguably along the lines K&M envisaged but never completed, converging on a recommendation of methodological (and, in a somewhat unconventional sense, metaphysical) naturalism. Keywords: evolutionary/biological adequacy; language evolution; methodological/metaphysical naturalism; minimalist program; third factor of language design. 1. Introduction. A normal human infant can learn any natural language(s) he or she is exposed to, whereas none of their pets (kittens, dogs, etc.) can, even given exactly the same data from the surrounding speech community. There must be something special, then, to the genetic endowment of human beings that is responsible for the emergence of this remarkable linguistic capacity. Human language is thus a biological object that somehow managed to come into existence in the evolution of the human species.

Between Psychology and Philosophy East-West Themes and Beyond

Palgrave Studies in Comparative East-West Philosophy. Michael Slote, Philosophy Department, University of Miami, Coral Gables, FL, USA. Series Editors: Chienkuo Mi, Philosophy, Soochow University, Taipei City, Taiwan; Michael Slote, Philosophy Department, University of Miami, Coral Gables, FL, USA. The purpose of Palgrave Studies in Comparative East-West Philosophy is to generate mutual understanding between Western and Chinese philosophers in a world of increased communication. It has now been clear for some time that the philosophers of East and West need to learn from each other and this series seeks to expand on that collaboration, publishing books by philosophers from different parts of the globe, independently and in partnership, on themes of mutual interest and currency. The series also publishes monographs of the Soochow University Lectures and the Nankai Lectures. Both lecture series host world-renowned philosophers offering new and innovative research and thought. More information about this series at http://www.palgrave.com/gp/series/16356. ISSN 2662-2378, ISSN 2662-2386 (electronic). ISBN 978-3-030-22502-5, ISBN 978-3-030-22503-2 (eBook). https://doi.org/10.1007/978-3-030-22503-2. © The Editor(s) (if applicable) and The Author(s) 2020. This book is an open access publication. Open Access: This book is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence and indicate if changes were made.

Introduction to Transformational Grammar

Kyle Johnson, University of Massachusetts at Amherst, Fall 2004.

Contents: Preface.

1 The Subject Matter: 1.1 Linguistics as learning theory; 1.2 The evidential basis of syntactic theory.

2 Phrase Structure: 2.1 Substitution Classes; 2.2 Phrases; 2.3 X phrases; 2.4 Arguments and Modifiers.

3 Positioning Arguments: 3.1 Expletives and the Extended Projection Principle; 3.2 Case Theory and ordering complements; 3.3 Small Clauses and the Derived Subjects Hypothesis; 3.4 PRO and Control Infinitives; 3.5 Evidence for Argument Movement from Quantifier Float; 3.6 Towards a typology of infinitive types; 3.7 Constraints on Argument Movement and the typology of verbs.

4 Verb Movement: 4.1 The “Classic” Verb Movement account; 4.2 Head Movement’s role in “Verb Second” word order; 4.3 The Pollockian revolution: exploded IPs; 4.4 Features and covert movement.

5 Determiner Phrases and Noun Movement: 5.1 The DP Hypothesis; 5.2 Noun Movement.

6 Complement Structure: 6.1 Nouns and the θ-roles they assign; 6.2 Double Object constructions and Larsonian shells; 6.3 Complement structure and Object Shift.

7 Subjects and Complex Predicates: 7.1 Getting into the right position; 7.2 Subject Arguments; 7.2.1 Argument Structure; 7.2.2 The syntactic benefits of ν; 7.3 The relative positions of µP and νP: Evidence from ‘again’; 7.4 The Minimal Link Condition and Romance causatives; 7.5 Remaining Problems; 7.5.1 The main verb in English is too high.

The Language Faculty – Mind Or Brain?

Torben Thrane, Aarhus University, School of Business, Denmark. The aim of the article is to subject Chomsky’s innovative nominal compound, “the mind/brain”, to closer scrutiny. The main argument is that it creates a slippery slope, so that what ought to be a functional discussion (what is it the brain does when it processes language?) instead becomes a systems discussion (what is the internal structure of the human language faculty?). The argument is pursued with strict observance of the cognitive sciences’ conception of information and representation, especially as understood by the American philosopher Frederick I. Dretske. 1. INTRODUCTION. Cartesian dualism is dead, but the central problems it was supposed to solve persist: What kinds of substances exist? Are bodily things (only) physical, mental ‘things’ (only) metaphysical? How is the mental related to the physical? Although “we are all materialists now”, such questions still need answers, and they still need answers from within realist and naturalist bounds to count as scientific. Linguistics is a discipline that may be expected to contribute answers to such questions. No one in their right mind would deny that our ability to acquire and use human language is lodged between our ears, inside our heads. That is, in our brains and/or minds. In some quarters it has been customary for the past 50-odd years or so to regard this ability as a distinct and dedicated cognitive ability on a par with our abilities to see (vision) or to keep our balance (equilibrioception), and to refer to it as the Human Language Faculty (HLF). This has been the axiomatic starting point for the development of mainstream generative linguistics as founded by Chomsky in 1955 in what I shall refer to as ‘Chomsky’s programme’.

The Role of Affect in Language Development

Stuart G. Shanker and Stanley I. Greenspan. BIBLID [0495-4548 (2005) 20: 54; pp. 329-343]. ABSTRACT: This paper presents the Functional/Emotional approach to language development, which explains the process leading up to the core capacities necessary for language (e.g., pattern-recognition, joint attention); shows how this process leads to the formation of internal symbols; and how it shapes and is shaped by the child’s development of language. The heart of this approach is that, through a series of affective transformations, a child develops these core capacities and the capacity to form meaningful symbols. Far from being a sudden jump, the transition from pre-symbolic communication to language is enabled by the advances taking place in the child’s affective gesturing. Key words: Functional/Emotional hypothesis; affective transformations; usage-based linguistics; pattern-recognition; joint attention; learning-based interactions; nativism; autism. 1. The F/E View of Language Development. Chomsky began Cartesian Linguistics with the following quotation from Whitehead’s Science and the Modern World: “A brief, and sufficiently accurate, description of the intellectual life of the European races during the succeeding two centuries and a quarter up to our own times is that they have been living upon the accumulated capital of ideas provided for them by the genius of the seventeenth century” (Chomsky 1967). Chomsky was certainly right: both in regards to the accuracy of Whitehead’s observation, and in regards to the significance of this outlook for Chomsky’s whole approach to language acquisition.

New Horizons in the Study of Language and Mind

Cambridge University Press, 0521651476. New Horizons in the Study of Language and Mind, Noam Chomsky. This book is an outstanding contribution to the philosophical study of language and mind, by one of the most influential thinkers of our time. In a series of penetrating essays, Noam Chomsky cuts through the confusion and prejudice which has infected the study of language and mind, bringing new solutions to traditional philosophical puzzles and fresh perspectives on issues of general interest, ranging from the mind–body problem to the unification of science. Using a range of imaginative and deceptively simple linguistic analyses, Chomsky argues that there is no coherent notion of “language” external to the human mind, and that the study of language should take as its focus the mental construct which constitutes our knowledge of language. Human language is therefore a psychological, ultimately a “biological object,” and should be analysed using the methodology of the natural sciences. His examples and analyses come together in this book to give a unique and compelling perspective on language and the mind. Noam Chomsky is Institute Professor at the Department of Linguistics and Philosophy, Massachusetts Institute of Technology. Professor Chomsky has written and lectured extensively on a wide range of topics, including linguistics, philosophy, and intellectual history.
His main works on linguistics include: Syntactic Structures; Current Issues in Linguistic Theory; Aspects of the Theory of Syntax; Cartesian Linguistics; Sound Pattern of English (with Morris Halle); Language and Mind; Reflections on Language; The Logical Structure of Linguistic Theory; Lectures on Government and Binding; Modular Approaches to the Study of the Mind; Knowledge of Language: its Nature, Origins and Use; Language and Problems of Knowledge; Generative Grammar: its Basis, Development and Prospects; Language in a Psychological Setting; Language and Problems of Knowledge; and The Minimalist Program.