Visualization of Point Cloud Models in Mobile Augmented Reality Using Continuous Level of Detail Method

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Google Apps: an Introduction to Picasa

[Not for Circulation] Google Apps: An Introduction to Picasa. This document provides an introduction to using Picasa, a free application provided by Google. With Picasa, users are able to add, organize, edit, and share their personal photos, utilizing 1 GB of free space. In order to use Picasa, users need to create a Google Account. Creating a Google Account: To create a Google Account, 1. Go to http://www.google.com/. 2. At the top of the screen, select “Gmail”. 3. On the Gmail homepage, click the button labeled “Create an account” on the right of the screen. 4. In order to create an account, you will be asked to fill out information, including choosing a Login name which will serve as your [email protected], as well as a password. After completing all the information, click “I accept. Create my account.” at the bottom of the page. 5. After you successfully fill out all required information, your account will be created. Click on the “Show me my account” button, which will direct you to your Gmail homepage. Downloading Picasa: To download Picasa, go to http://picasa.google.com. 1. Select Download Picasa. 2. Select Save File. 3. Click on the downloaded file, and select Run. 4. Follow the installation procedures to complete the installation of Picasa on your computer. When finished, you will be directed to a new screen. Click Get Started with Picasa Web Albums. Importing Pictures: Photos can be uploaded into Picasa in a variety of ways, all of them very simple to use.

Picasa Getting Started Guide

Picasa Getting Started Guide. Picasa is free photo management software from Google that helps you find, edit and share your photos in seconds. We recommend that you print out this brief overview of Picasa's main features and consult it as you use the program for the first time to learn about new features quickly. Organize: Once you start Picasa, it scans your hard drive to find and automatically organize all your photos. Picasa finds the following photo and movie file types: • Photo file types: JPG, GIF, TIF, PSD, PNG, BMP, RAW (including NEF and CRW). GIF and PNG files are not scanned by default, but you can enable them in the Tools > Options dialog. • Movie file types: MPG, AVI, ASF, WMV, MOV. If you are upgrading from an older version of Picasa, you will likely want to keep your existing database, which contains any organization and photo edits you have made. To transfer all this information, simply install Picasa without uninstalling Picasa already on your computer. On your first launch of Picasa you will be prompted to transfer your existing database. After this process is complete, you can uninstall Picasa. Library view: Picasa automatically organizes all your photo and movie files into collections of folders inside its main Library view. Layout of main Library screen: Folder list: The left-hand list in Picasa's Library view shows all the folders containing photos on your computer and all the albums you've created in Picasa. These folders and albums are grouped into collections that are described in the next section.

Mobile GPS Mapping Applications Forensic Analysis & SNAVP the Open Source, Modular, Extensible Parser

Journal of Digital Forensics, Security and Law, Volume 12, Article 7, 3-31-2017. Find Me If You Can: Mobile GPS Mapping Applications Forensic Analysis & SNAVP the Open Source, Modular, Extensible Parser. Jason Moore; Ibrahim Baggili, University of New Haven; Frank Breitinger. Follow this and additional works at: https://commons.erau.edu/jdfsl. Part of the Computer Engineering Commons, Computer Law Commons, Electrical and Computer Engineering Commons, Forensic Science and Technology Commons, and the Information Security Commons. Recommended Citation: Moore, Jason; Baggili, Ibrahim; and Breitinger, Frank (2017) "Find Me If You Can: Mobile GPS Mapping Applications Forensic Analysis & SNAVP the Open Source, Modular, Extensible Parser," Journal of Digital Forensics, Security and Law: Vol. 12, Article 7. DOI: https://doi.org/10.15394/jdfsl.2017.1414. Available at: https://commons.erau.edu/jdfsl/vol12/iss1/7. This Article is brought to you for free and open access by the Journals at Scholarly Commons. It has been accepted for inclusion in Journal of Digital Forensics, Security and Law by an authorized administrator of (c)ADFSL Scholarly Commons. For more information, please contact [email protected]. Jason Moore, Ibrahim Baggili and Frank Breitinger, Cyber Forensics Research and Education Group (UNHcFREG), Tagliatela College of Engineering, University of New Haven, West Haven CT, 06516, United States. e-Mail: [email protected], fIBaggili, [email protected]. ABSTRACT: The use of smartphones as navigation devices has become more prevalent.

XP8 Bell Canada User Guide English

XP8 USER GUIDE © 2018 by Sonim Technologies, Inc. All rights reserved. CONTENT: GENERAL INFORMATION: Congratulations on the purchase of a Sonim XP8 (XP8800) mobile phone! This phone is LTE smartphone enabled and has an intuitive feature-rich user interface, which allows you to make the best use of offered functions. PHONE MODELS COVERED: This user guide covers Sonim XP8 phone with the model number XP8800. The model name can be seen on backside of the phone. SONIM SUPPORT INFORMATION: For additional product and support information, visit www.sonimtech.com. USE THE GUIDE EFFECTIVELY: Familiarize yourself with the terminology and symbols used in the guide to help you use your phone effectively. Copyright © 2018 Sonim Technologies, Inc. Sonim and the Sonim logo are trademarks of Sonim Technologies, Inc. Other company and product names may be trademarks or registered trademarks of the respective owners with whom they are associated. THIRD PARTY TRADEMARKS: Google, Android, Google Calendar, Google Now, Google Play and other marks are trademarks of Google Inc. Disposal of Old Electrical and Electronic Equipment: The symbol of the crossed-out wheeled bin indicates that within the countries in the European Union, this product, and any enhancements marked with this symbol, cannot be disposed as unsorted waste but must be taken to separate collection at their end-of-life. DISPOSAL OF BATTERY: Please check local regulations for disposal of batteries. The battery should never be placed in municipal waste. HOME SCREEN: This is the screen displayed when the phone is in standby mode. TOUCH &: Touch and hold an item on the screen by

Instructions for Using the Google Maps™ App on Your Smartphone to Navigate in Italy Or Ireland

Instructions for Using the Google Maps™ App on Your Smartphone to Navigate in Italy or Ireland. Click on one of the links below to go directly to that section of these instructions: Overview; Note; Quick Summary; Complete Step-by-Step Directions; A User Tip; Using the Google Maps App in Offline Mode – be sure to read this!; Contact Us. OVERVIEW: Our books are provided with an activation code which enables the reader to download from our website a proprietary set of GPS coordinates in one of three different file formats: for use with Garmin® GPS devices (a GPX file); for use with TomTom® GPS devices (an OV2 file); for use with the Google Maps™ app on an iPhone® or an Android™ phone (a KML file). These GPS coordinates cover all of the locations referenced in each of our books. The KML file we supply for use when navigating with a smartphone interfaces with Google Maps. This means you must: have a Google account (free); install the Google Maps app onto your smartphone; using a Windows® PC or Mac® computer, download our KML file to your computer and then upload that file to your personal Google Maps account. Once you have added our KML file to your personal Google Maps account, you can then access all of the locations referenced in each of our books using the Google Maps app on your smartphone! NOTE: Navigating with Google Maps differs in some important respects from navigating with a Garmin or TomTom GPS device. All three approaches use the same exact underlying data (the files we supply for download).

Browser Tips for PeopleSoft

PeopleSoft User Guide: Browser Tips. BROWSER TIPS FOR PEOPLESOFT. TABLE OF CONTENTS: Overview; Preferred Browsers for Working in PeopleSoft; Working in Financials and Human Resources Simultaneously; Browser Versions; Clearing Browser Cache (Why is it necessary to clear the browser cache?; How to clear browser cache); Allowing Pop-Up Windows in PeopleSoft (How to allow PeopleSoft pop-ups in Mozilla Firefox; How to allow PeopleSoft pop-ups in Microsoft Internet Explorer (versions 9-11); How to allow PeopleSoft pop-ups in Microsoft Edge)

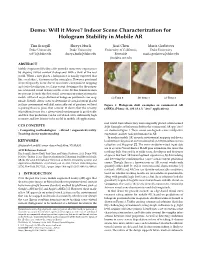

Indoor Scene Characterization for Hologram Stability in Mobile AR

Demo: Will it Move? Indoor Scene Characterization for Hologram Stability in Mobile AR. Tim Scargill (Duke University, [email protected]), Shreya Hurli (Duke University, [email protected]), Jiasi Chen (University of California, Riverside, [email protected]), Maria Gorlatova (Duke University, [email protected]). ABSTRACT: Mobile Augmented Reality (AR) provides immersive experiences by aligning virtual content (holograms) with a view of the real world. When a user places a hologram it is usually expected that, like a real object, it remains in the same place. However, positional errors frequently occur due to inaccurate environment mapping and device localization, to a large extent determined by the properties of natural visual features in the scene. In this demonstration we present SceneIt, the first visual environment rating system for mobile AR based on predictions of hologram positional error magnitude. SceneIt allows users to determine if virtual content placed in their environment will drift noticeably out of position, without requiring them to place that content. It shows that the severity of positional error for a given visual environment is predictable, and that this prediction can be calculated with sufficiently high accuracy and low latency to be useful in mobile AR applications. Figure 1: Hologram drift examples in commercial AR (ARKit, iPhone 11, iOS 13.1.3, "Avo!" application); panels: (a) Time 0, (b) Time 1, (c) Time 2. CCS CONCEPTS: • Computing methodologies → Mixed / augmented reality; ... real world from where they were originally placed, often termed drift. Examples of hologram drift in the commercial AR app ‘Avo!’ are shown in Figure 1.

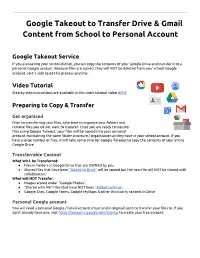

Google Takeout to Transfer Drive & Gmail Content from School To

Google Takeout to Transfer Drive & Gmail Content from School to Personal Account. Google Takeout Service: If you are leaving your school district, you can copy the contents of your Google Drive and transfer it to a personal Google account. Because files are copied, they will NOT be deleted from your school Google account, so it’s safe to do this process anytime. Video Tutorial: Step by step instructions are available in this short tutorial video HERE. Preparing to Copy & Transfer: Get organized: Prior to transferring your files, take time to organize your folders and remove files you do not want to transfer. Once you are ready to transfer files using Google Takeout, your files will be copied into your personal account maintaining the same folder structure / organization as they have in your school account. If you have a large number of files, it will take some time for Google Takeout to copy the contents of your entire Google Drive. Transferrable Content: What WILL be Transferred: ● Files in Folders in Google Drive that are OWNED by you ● Shared files that have been “Added to Drive” will be copied but the new file will NOT be shared with collaborators. What will NOT Transfer: ● Images stored under “Google Photos” ● “Shared with Me” Files that have NOT been “Added to Drive” ● Google Sites, Google Forms, Google MyMaps & other third party content in Drive. Personal Google account: You will need a personal Google / Gmail account (must end in @gmail.com) to transfer your files to. If you don’t already have one, visit https://accounts.google.com/SignUp to create your free account.

CS-15-02 Managing Applications and Google Play Store with PIVOT

Technical Bulletin CS-15-02: Managing Applications and Google Play Store with PIVOT. This technical bulletin provides some basic application management best practices with PIVOT. System Affected: Spectralink 87-series devices (8741/8753). Description: PIVOT software release version 1.4 (and later) includes support for Google’s Play Store & many other Google capabilities. The Play Store allows users to download Android applications (“apps”) directly to PIVOT, and subsequent application updates can be automatically pushed down to the device. For consumer-owned devices, Play Store access provides a user easy access to the 1M+ available applications, but for a WorkSmart device such as PIVOT, often serving mission-critical purposes, greater application management by an administrator is necessary. Customers should have a comprehensive mobile device strategy, but the following provides a basic set of recommendations to manage applications and Google Play access for WorkSmart devices. 1. Disable Play Store App. Why: Typically a user of a dedicated work device (such as PIVOT) should not be able to install applications as these may not be appropriate for work or introduce security risks. Disabling the Play Store app prevents a user installing apps from the Play Store. How: The Play Store app can be disabled via (a) the SLIC tool during initial provisioning, or (b) using the UI: Settings > Admin settings [enter pw] > Apps > All [tab]. Swipe down to the Google Play Store icon and tap it. The app window opens. Tap Disable. Once disabled, the Play Store icon will disappear, and the device will no longer be able to access the Play Store via the app, and applications will not be updated automatically via Play Store.

GeoAR Android App and Backend POI Helper

GeoAR Android App and Backend POI Helper. Aishna Agrawal, Aakash Thakkar, Alex Pang, University of California, Santa Cruz. Abstract: Augmented Reality (AR) is an enhanced version of reality where live direct or indirect views of physical real-world environments are augmented with superimposed computer-generated images over a user's view of the real world, thus enhancing one's current perception of reality. In this project, we experiment with Markerless AR, which relies on location data instead of physical markers. Points of interest (POI) are saved in the backend as latitude and longitude [1] information that is queried by the front-end Android application. The app integrates Google's Augmented Reality SDK called ARCore [2] to help render objects at various points of interest in the real world. To provide significant value to a user's life, any geo-location based application must be able to model the real world objects and provide unique dynamic data about them in a creative manner. They must also have a well defined API to remain platform independent. We try to solve this problem with the use of POI Helper, which creates a backend as a scalable API service. This project strives to make it easy for developers to make a wide variety of AR applications by abstracting the commonly performed tasks and confining them to a basic structure without constraining the developer's ability to customize their product. This report describes the development of an open-source Android application, general purpose back-end server and a scalable web-application to visualize the POIs.

4 Ways to Use Google Drive - Wikihow 8/19/13 12:05 AM

4 Ways to Use Google Drive - wikiHow 8/19/13 12:05 AM. How to Use Google Drive: Google Drive is Google's challenge to Dropbox--a file storage system linked to your Google account and accessible via the Internet, so you can access your files from almost any device on-the-go. Drive is currently available for Microsoft Windows, Mac OS X, iOS and Android. Users are given 5 gigabytes (5GB) of free cloud storage to start; additional storage can be purchased for a small fee. Read this article to learn how to get started using Google Drive. Steps: Setting Up Google Drive: 1. Sign into Google Drive with your Google account at http://drive.google.com. If you're a Gmail user, you can use your Gmail account to sign in. There's a red sign-up button on the top right-hand corner of the login page if you don't have a Google account. 2. Once you've launched Google Drive in your web browser, you will be taken to a page that shows all of the Google Documents you currently have associated with your Google account. A tab will pop up asking if you would like to download Google Drive for PC or Mac--whichever system you are currently on. Do so if you'd like to access your documents from more than your web browser. However, you don't have to use this option.

Android Application Development Exam Sample

AND-801: Android Application Development Exam Sample. Q1. Android is an open source, Linux-based software stack. a. True b. False. Answer: a. Q2. Which of the following choices is the main role of the button which is illustrated in the red rectangle over the Android Studio tool bar? a. Run button. b. Debug button. c. Apply changes button. d. AVD manager button. Answer: c. Q3. Each Android application runs in its own process and with its own instance of ART on devices running Android version 8.0 (API Level 26) or higher. a. True b. False. Answer: a. Q4. Which of the following choices is the main role of the Preview button which is illustrated in the red circle of the vertical Android Studio tool bar? a. It shows the activity layout in text (XML) and design mode at the same time. b. It shows the activity layout in print preview. c. It shows the Java or Kotlin code of an activity. d. It shows the activity blueprint preview mode. Answer: a. Q5. The Android library code is organized in such a way that it can be used by multiple Android applications. a. True b. False. Answer: a. Q6. The following image shows part of the content inside file activity_main.xml in an Android application: Which of the following options is correct? a. The app user can select one radio button only at a time. b. The app user can select the two radio buttons at the same time. c. The only purpose of RadioGroup tag is adding a group of colors to the inside of the radio buttons.