Discrete Cosine Transform Residual Feature Based Filtering Forgery and Splicing Detection in JPEG Images

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Poisson Image Editing

Poisson Image Editing. Patrick Pérez, Michel Gangnet, Andrew Blake. Microsoft Research UK. Abstract: Using generic interpolation machinery based on solving Poisson equations, a variety of novel tools are introduced for seamless editing of image regions. The first set of tools permits the seamless importation of both opaque and transparent source image regions into a destination region. The second set is based on similar mathematical ideas and allows the user to modify the appearance of the image seamlessly, within a selected region. These changes can be arranged to affect the texture, the illumination, and the color of objects lying in the region, or to make tileable a rectangular selection. CR Categories: I.3.3 [Computer Graphics]: Picture/Image Generation - Display algorithms; I.3.6 [Computer Graphics]: Methodology and Techniques - Interaction techniques; I.4.3 [Image Processing and Computer Vision]: Enhancement - Filtering; Introduction (excerpt): [...]able effect. Conversely, the second-order variations extracted by the Laplacian operator are the most significant perceptually. Secondly, a scalar function on a bounded domain is uniquely defined by its values on the boundary and its Laplacian in the interior. The Poisson equation therefore has a unique solution and this leads to a sound algorithm. So, given methods for crafting the Laplacian of an unknown function over some domain, and its boundary conditions, the Poisson equation can be solved numerically to achieve seamless filling of that domain. This can be replicated independently in each of the channels of a color image. Solving the Poisson equation also has an alternative interpretation as a minimization problem: it computes the function whose gradient is the closest, in the L2-norm, to some prescribed vector field (the guidance vector field) under given boundary conditions.
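The idea in this excerpt (a function is fixed by its boundary values plus its interior Laplacian) can be illustrated with a one-dimensional toy. The sketch below is not the paper's implementation; the function name `poisson_blend_1d` and the 1D setting are assumptions made for illustration. It keeps the destination values at the two boundary samples and solves for interior values whose discrete Laplacian matches that of the source signal.

```python
def poisson_blend_1d(dest, src, lo, hi):
    """Fill dest[lo+1:hi] so that its discrete Laplacian matches src's,
    while dest[lo] and dest[hi] stay fixed (Dirichlet boundary values)."""
    n = hi - lo - 1  # number of unknown interior samples
    if n <= 0:
        return list(dest)
    # Right-hand side: discrete Laplacian of the source over the interior.
    rhs = [src[i - 1] - 2 * src[i] + src[i + 1] for i in range(lo + 1, hi)]
    # Move the known boundary values to the right-hand side.
    rhs[0] -= dest[lo]
    rhs[-1] -= dest[hi]
    # Thomas algorithm for the tridiagonal system with diagonal -2
    # and off-diagonals 1 (the 1D Poisson equation).
    c = [0.0] * n  # modified super-diagonal
    d = [0.0] * n  # modified right-hand side
    c[0] = 1 / -2
    d[0] = rhs[0] / -2
    for i in range(1, n):
        denom = -2 - c[i - 1]
        c[i] = 1 / denom
        d[i] = (rhs[i] - d[i - 1]) / denom
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):
        x[i] = d[i] - c[i] * x[i + 1]
    out = list(dest)
    out[lo + 1:hi] = x
    return out


# A bump from the source is pasted into a flat destination; the bump's
# shape survives while the ends agree with the destination.
blended = poisson_blend_1d([0, 0, 0, 0, 0], [5, 6, 9, 6, 5], 0, 4)
```

For a color image, the same solve would be repeated independently per channel, as the excerpt notes; a 2D version replaces the tridiagonal system with a sparse one over the selected region.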

Digital Backgrounds Using GIMP

Digital Backgrounds using GIMP. GIMP is a free image editing program that rivals the functions of the basic versions of industry leaders such as Adobe Photoshop and Corel Paint Shop Pro. For information on which image editing program is best for your needs, please refer to the FAQ section of our website, digital-photo-backgrounds.com. This tutorial was created to teach you, step by step, how to remove a subject (person or item) from its original background and place it onto a Foto*Fun Digital Background. Upon completing this tutorial, you will have the skills to create realistic portraits plus photographic collages and other useful functions. If you have any difficulties with any particular step in the process, feel free to contact us anytime at digital-photo-backgrounds.com. How to read this tutorial: It is recommended that you use your own image when following the instructions. However, one has been provided for you in the folder entitled "Tutorial Images". This tutorial uses screenshots to visually show you each step of the process. Each step is numbered, and the number refers to a matching number within the screenshot image that indicates the location of the function or tool being described in that step. Written instructions come first and refer to the screenshot image that follows them. Keyboard shortcuts are shown in parentheses after most of the described functions, as in the following example: (ctrl+T) indicates that the "ctrl" key and the letter "T" key should be pressed at the same time. Keyboard shortcuts can be used in place of the described procedure, not in addition to it.

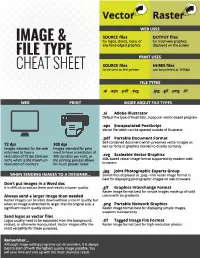

Image & File Type Cheat Sheet

Image & File Type Cheat Sheet. Web uses: vector SOURCE files for logos, charts, icons, or any hard-edged graphics; raster OUTPUT files for most web graphics displayed on the screen. Print uses: vector SOURCE files to be sent to the printer; raster HI-RES files can be printed at 300 dpi. File types: vector .ai .eps .pdf .svg; raster .jpg .gif .png .tif. Web, 72 dpi: images intended for the web only need to have a resolution of 72 dpi (dots per inch), which is the maximum resolution of monitors. Print, 300 dpi: images intended for print need to have a resolution of 300 dpi (dots per inch), as the printing process allows for much greater detail. More about file types: .ai (Adobe Illustrator): default file type of Illustrator, a popular vector-based program. .eps (Encapsulated PostScript): vector file which can be opened outside of Illustrator. .pdf (Portable Document Format): self-contained document which preserves vector images as well as fonts or graphics needed to display correctly. .svg (Scalable Vector Graphics): XML-based vector image format supported by modern web browsers. .jpg (Joint Photographic Experts Group): sometimes displayed as .jpeg, this raster image format is best for displaying photographic images on web browsers. .gif (Graphics Interchange Format): raster image format best for simple images made up of solid colors with no gradients. .png (Portable Network Graphics). When sending images to a designer: Don't put images in a Word doc; it is difficult to extract them and results in lower quality. Always send a larger image than needed; raster images can be sized down without a loss in quality, but when an image is stretched to larger than its original size, a significant loss in quality occurs.
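The dpi guidance in this cheat sheet comes down to simple arithmetic: pixel dimensions equal physical size in inches times dots per inch. The helper names below (`pixels_needed`, `max_print_size`) are hypothetical and only illustrate that relationship.

```python
def pixels_needed(width_in, height_in, dpi):
    """Pixel dimensions required to output an image at the given
    physical size (inches) and resolution (dots per inch)."""
    return round(width_in * dpi), round(height_in * dpi)


def max_print_size(width_px, height_px, dpi=300):
    """Largest physical size (inches) at which a raster image can be
    printed without stretching it beyond its original pixel size."""
    return width_px / dpi, height_px / dpi


# A 4 x 6 inch print at the cheat sheet's 300 dpi figure.
print_pixels = pixels_needed(4, 6, 300)
# The same physical size at the cheat sheet's 72 dpi web figure.
web_pixels = pixels_needed(4, 6, 72)
```

This is also why the sheet advises sending a larger image than needed: an image with too few pixels for the target size has to be stretched, and that is where quality is lost.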

How to Make Basic Image Adjustments Using Microsoft Office

OIT, UMass Office of Information Technologies. How to Make Basic Image Adjustments using Microsoft Office Picture Manager (Windows). The Microsoft Picture Manager application is included in recent versions of Microsoft Office for Windows. Picture Manager can be used to make overall adjustments to an image, and to modify file type and size. To locate Picture Manager, from the desktop go to: Start > All Programs > Microsoft Office > Microsoft Office Tools > Microsoft Picture Manager. Open a Picture in Picture Manager: 1. In the right pane, under “Getting Started,” choose ‘Add a new picture shortcut.’ (If you choose ‘Locate pictures’ Picture Manager will search available drives for image files.) 2. Navigate to the folder in which your pictures are stored. You will not see files in the navigation window, just folders. 3. Click Add to add a shortcut to a folder. • Every shortcut you add will be listed in the left-hand pane. • Thumbnail previews of each image file within the folder will display in the center Browse area. 4. To open a file, double-click its thumbnail. 5. To return to the thumbnail view of folder contents, click the Browse button in the main tool bar. Or select the Filmstrip view (to the right of the Thumbnail button), which provides a row of thumbnails under a picture editing window. (Screenshot labels: Picture Folders, Thumbnail View, Edit Pictures Button, Task Pane.) OIT Academic Computing, Instructional Media Lab [email protected] (413) 545-2823 http://www.oit.umass.edu/academic/ Editing Pictures: Picture Manager provides basic image editing tools.

Image Formats

Image Formats. Ioannis Rekleitis. CSCE 590: Introduction to Image Processing. Many different file formats: • JPEG/JFIF • JPEG 2000 • GIF • PNG • TIFF • PPM, PGM, PBM, and PNM • Exif • BMP • WebP • HDR raster formats • HEIF • BAT • BPG (https://en.wikipedia.org/wiki/Image_file_formats) • JPEG/JFIF (Joint Photographic Experts Group) is a lossy compression method; JPEG-compressed images are usually stored in the JFIF (JPEG File Interchange Format) file format. The JPEG/JFIF filename extension is JPG or JPEG. Nearly every digital camera can save images in the JPEG/JFIF format, which supports eight-bit grayscale images and 24-bit color images (eight bits each for red, green, and blue). JPEG applies lossy compression to images, which can result in a significant reduction of the file size. Applications can determine the degree of compression to apply, and the amount of compression affects the visual quality of the result. When not too great, the compression does not noticeably affect or detract from the image's quality, but JPEG files suffer generational degradation when repeatedly edited and saved. (JPEG also provides lossless image storage, but the lossless version is not widely supported.) • JPEG 2000 is a compression standard enabling both lossless and lossy storage. The compression methods used are different from the ones in standard JFIF/JPEG; they improve quality and compression ratios, but also require more computational power to process. JPEG 2000 also adds features that are missing in JPEG. It is not nearly as common as JPEG, but it is used currently in professional movie editing and distribution (some digital cinemas, for example, use JPEG 2000 for individual movie frames).

An Introduction to the Digital Reproduction of Photographs | 3

Chapter One: An Introduction to the Digital Reproduction of Photographs. OBJECTIVES: After completing this chapter you will be able to: ■ explain the sources and attributes of digital photographs. ■ explain the methods used to reproduce digital photographs. ■ explain the conventions used by professional printers/publishers to describe halftones. ■ use Photoshop’s tools to measure halftone dot shape, screen angle, and screen frequency. ■ explain the different types of resolution measurements. ■ use a step-by-step process to choose the correct dot shape, screen angle, and screen frequency for a given printing process and substrate using a given output device. ■ choose the best file format in which to save a given photograph. Printing and publishing technicians are required to prepare photographs for reproduction so that the printed photographs appear pleasing to the viewer. This is not an easy task. When a photograph is printed, many variables, including the type of printing press, the type of paper, the ink, and even weather conditions combine to alter the appearance of the reproduction. Even if the photograph is to be reproduced by electronic means (on the Internet or CD-ROM, for instance) the viewer’s computer and monitor will affect the appearance of the image. If the technician knows in advance the variables that will affect a reproduction, corrections can be built into the photograph so that the reproduction will appear pleasing to the eye. If these corrections are not made, the reproduced photograph will probably look unsightly. In the past, such corrections required the skill of highly talented photographic retouchers who knew all the variables that could affect a printed reproduction.

Online Image Editing: Pixlr Basics

ONLINE IMAGE EDITING: PIXLR BASICS Edit and save your photos with PIXLR! 125 S. Prospect Avenue, Elmhurst, IL 60126 630-279-8696 ● www.elmhurstpubliclibrary.org Create, Make, and Build INTRODUCTION TO PIXLR What is PIXLR? PIXLR is a free tool you can use on a computer (online with a web browser) or on a mobile device (with an app) to edit photos and create images. This class will focus on PIXLR’s web apps and how to get started using them by practicing on a few images. Course Topics • Web Apps • Mobile App • PIXLR Account • Getting Started with PIXLR’s Web Apps Web Apps PIXLR O MATIC — This is a fun photo-editing tool you can use to quickly transform your photos by adding effects, overlays and borders. PIXLR EXPRESS — With EXPRESS, you can make quick fixes or personalize your photos with creative effects, overlays, borders, filters and stickers. PIXLR EDITOR — With EDITOR, you can use layers and effects to transform and edit your photos. The variety of tools and menus is similar to those in Photoshop and can be used for more complex photo editing. Mobile App Mobile PIXLR (available on Apple Store or Google Play) is a fun and powerful photo editor that lets you quickly crop, rotate, and fine-tune any picture. PIXLR Account You can use PIXLR for free with or without creating an account. If you create an account, you can save your work in a private folder online and go back to it later by accessing your account from anywhere. Otherwise, you can save your edited photos to your computer, a flash drive or your mobile device (if you are using the mobile app). -

DCT Based Digital Forgery Identification

DCT Based Digital Forgery Identification. Giuseppe Messina, Dipartimento di Matematica e Informatica, Università di Catania. Presented to Interpol Crime Against Children Group, Lyon, 25 March 2009. Image Processing LAB – http://iplab.dmi.unict.it Overview • What is a Forgery? o Using Graphical Software o The content has been modified o The context has been modified • The JPEG Standard • Exif Information o Thumbnails Analysis • JPEG DCT Techniques o Measuring Inconsistencies of Block Artifact o Digital Forgeries From JPEG Ghosts What is a Forgery? • “Forgery” is a subjective word. • An image can become a forgery based upon the context in which it is used. • An image altered for fun, or a bad photo that has been altered to improve its appearance, cannot be considered a forgery even though it has been altered from its original capture. • The other side of forgery is those who perpetrate a forgery for gain and prestige. • They create an image with which to dupe the recipient into believing the image is real, and from this gain payment and fame. • Three types of forgery can be identified: 1. Created using graphical software 2. The content has been altered 3. The context has been altered Using graphical software: Can you tell which among the array of images are real, and which are CG? (Answer labels for the image array: CG, CG, REAL, CG, REAL, CG, REAL, CG, REAL, REAL.) http://www.autodesk.com/eng/etc/fakeorfoto/about.html The content has been altered • Creating an image by altering its content is another method. • Duping the recipient into believing that the objects in an image are something else from what they really are! • The image itself is not altered, and if examined will be proven as so.
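The "JPEG ghosts" item in this overview rests on a property of quantization that a toy example can show. The sketch below is an assumption-laden simplification, not the presenter's method: it works on a bare list of coefficient values rather than on real JPEG DCT blocks, and the function names are hypothetical. Values already quantized with some step reproduce exactly when re-quantized with that same step (or a divisor of it), while other steps leave a residual error.

```python
def quantize(coeffs, step):
    """Uniform quantization: snap each value to the nearest multiple of step."""
    return [round(c / step) * step for c in coeffs]


def requantization_error(coeffs, step):
    """Sum of squared differences between values and their re-quantized form."""
    requantized = quantize(coeffs, step)
    return sum((a - b) ** 2 for a, b in zip(coeffs, requantized))


# Hypothetical coefficients, first saved with quantization step 6.
original = [13.2, -47.9, 101.4, 7.7, -22.3]
saved = quantize(original, 6)

# Scan candidate steps; the error vanishes at the step used when saving
# (and at its divisors), which is the dip such an analysis looks for.
errors = {step: requantization_error(saved, step) for step in range(4, 9)}
```

In an actual forensic setting the comparison would be made on image regions recompressed at a range of JPEG quality settings; a spliced region saved earlier at a different quality can then show its error minimum at a different setting than the rest of the image.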

GIMP HELP GUIDE GIMP Useful Tools

HELP GUIDE GIMP This section contains help on how to edit and manipulate images using the Gnu Image Manipulation Program. GIMP is an image editing program used for creating and editing images. GIMP is available to download from http://www.gimp.org/. Other image editing software tools that are available are: >> Paint.net (available from http://www.getpaint.net) >> Photo Editor by Axiem Systems (App for iPad) >> Adobe Photoshop Touch (App for Android and iPad) >> Photo Editor by Aviary (App for Android and iPad) www.nervecentre.org/teachingdividedhistories GIMP Using Layers and Adding Text Layers Explained A GIMP image is sometimes made up of a “stack” of layers. Every time a new element is added, it is added as a new Layer. These Layers then appear stacked on one another in the Layers Dialog window. The Layer at the top of the stack is the one that can be seen first (imagine viewing the layers from a top down point of view). // Layers can be renamed by double clicking on them. // Reorganising the order of the layers can be achieved by clicking on them and dragging them. // You can affect the Opacity of a layer by selecting it and then adjusting the level in the Opacity bar (positioned above the layers). Decreasing the Opacity level will reveal the layers under the selected layer. // REMEMBER!! Before performing an action on a layer, MAKE SURE IT IS SELECTED FROM THE LAYERS DIALOG!!!! Adding a Text Layer to an image // Add text to an image by selecting the Text Tool from the Toolbox. -

Display-Aware Image Editing

Display-aware Image Editing. Won-Ki Jeong (Harvard University), Micah K. Johnson (Massachusetts Institute of Technology), Insu Yu and Jan Kautz (University College London), Hanspeter Pfister (Harvard University), Sylvain Paris (Adobe Systems, Inc.). Abstract: We describe a set of image editing and viewing tools that explicitly take into account the resolution of the display on which the image is viewed. Our approach is twofold. First, we design editing tools that process only the visible data, which is useful for images larger than the display. This encompasses cases such as multi-image panoramas and high-resolution medical data. Second, we propose an adaptive way to set viewing parameters such as brightness and contrast. Because we deal with very large images, different locations and scales often require different viewing parameters. We let users set these parameters at a few places and interpolate satisfying values everywhere else. Introduction (excerpt): e.g. [14, 16, 17, 28], major hurdles remain to interactively edit larger images. For instance, optimization tools such as least-squares systems and graph cuts become impractical when the number of unknowns approaches or exceeds a billion. Furthermore, even simple editing operations become costly when repeated for hundreds of millions of pixels. The basic insight of our approach is that the image is viewed on a display with a limited resolution, and only a subset of the image is visible at any given time. We describe a series of image editing operators that produce results only for the visible portion of the image and at the displayed resolution. A simple and efficient multi-resolution data representation (§2) allows each image operator to quickly access the cur-
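The core observation in this excerpt, that an edit only has to be computed for the pixels currently on screen, can be sketched in a few lines. This is a rough illustration only: the function `edit_visible`, its viewport parameters, and the nested-list image are assumptions, and it ignores the multi-resolution representation the paper describes.

```python
def edit_visible(image, x0, y0, width, height, op):
    """Apply a per-pixel operator only inside the viewport whose top-left
    corner is (x0, y0); returns the edited crop and leaves image untouched."""
    rows = image[y0:y0 + height]
    return [[op(p) for p in row[x0:x0 + width]] for row in rows]


# A 4-row by 6-column grayscale image stored as nested lists.
image = [[r * 10 + c for c in range(6)] for r in range(4)]

# Brighten only the 2 x 2 window starting at column 1, row 1.
crop = edit_visible(image, 1, 1, 2, 2, lambda p: p + 1)
```

The work done scales with the viewport size rather than the image size, which is the property that matters once images reach hundreds of millions of pixels.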

GIMP Image Editor for Photographers

A Guide to the GIMP Image Editor for Photographers. Timothy A. Gonsalves, IIT Mandi, Himachal, India. 24th February 2018. Table of Contents: 1 Preamble; 2 Quick Start; 2.1 Useful Tips; 3 Common Tasks – How To; 3.1 Crop an Image; 3.2 Scale or resize an image; 3.3 Automatic exposure and colour; 3.4 Correct the exposure of an image; 3.4.1 Quick Method; 3.4.2 Fine control by colour; 3.4.3 Backlit subject; 3.5 Correct the white-balance

Edit Your Images

3/4/20. “We don’t learn from our good images; we learn from the ones that can be improved on.” Jen Rozenbaum. Photo editing software or applications are used to manipulate or enhance digital images. This category of software ranges from basic apps to easily resize images and add basic effects to industry standard programs used by professional photographers. Editing Software: Photoshop & Lightroom $9.99 a month; Photoshop Elements $59 to $99; ON1 Photo Raw 2020 $99.99; DXO Photo Lab 3 $129; DXO NIK $149 (6 programs); Topaz Studio 2 $99.99, Coupon Code RADDREW; Luminar 4 $89, Coupon Code JIMNIX; Luminar 3 FREE. Your best friend in Photo editing software is Questions to ask as you edit: • Does the image meet the category requirements? • Does the image have a main focus? • Does the image tell a story? • Does the image have distracting artifacts in the composition? • Does the image have the correct white balance? • Does the image have more impact if you flip it horizontally? • Is the main subject not too close to the edge (is there room to move in the photo)? • Are strong compositional elements present (leading lines, diagonals, rule of thirds, etc.)? • Are elements “cut off” at the edge of the image? • Is the horizon in the center of the image? (generally, less desirable) • Is the horizon level? • Is a moving subject going in the correct direction for the most impact? • Have you looked at your image upside down to see if anything sticks out and needs to be corrected? PSA Allowed Adjustments. The FIRST step in image editing is NOISE reduction. In photography, the term digital NOISE refers to visual distortion.