Automated Music Emotion Recognition: a Systematic Evaluation

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications

-

Russell-Mills-Credits1

Russell Mills 1) Bob Marley: Dreams of Freedom (Ambient Dub translations of Bob Marley in Dub) by Bill Laswell 1997 Island Records Art and design: Russell Mills (shed) Design assistance, image melts: Michael Webster (storm) Paintings: Russell Mills 2) The Cocteau Twins: BBC Sessions 1999 Bella Union Records Art and design: Russell Mills (shed) Design assistance and image melts: Michael Webster (storm) 3) Gavin Bryars: The Sinking Of The Titanic / Jesus' Blood Never Failed Me Yet 1998 Virgin Records Art and design: Russell Mills Design assistance and image melts: Michael Webster (storm) Paintings and assemblages: Russell Mills 4) Gigi: Illuminated Audio 2003 Palm Pictures Art and design: Russell Mills (shed) Design assistance: Michael Webster (storm) Photography: Jean Baptiste Mondino 5) Pharoah Sanders and Graham Haynes: With a Heartbeat - full digipak 2003 Gravity Art and design: Russell Mills (shed) Design assistance: Michael Webster (storm) Paintings and assemblages: Russell Mills 6) Hector Zazou: Songs From The Cold Seas 1995 Sony/Columbia Art and design: Russell Mills and Dave Coppenhall (mc2) Design assistance: Maggi Smith and Michael Webster 7) Hugo Largo: Mettle 1989 Land Records Art and design: Russell Mills Design assistance: Dave Coppenhall Photography: Adam Peacock 8) Lori Carson: The Finest Thing - digipak front and back 2004 Meta Records Art and design: Russell Mills (shed) Design assistance: Michael Webster (storm) Photography: Lori Carson 9) Toru Takemitsu: Riverrun 1991 Virgin Classics Art & design: Russell Mills Cover -

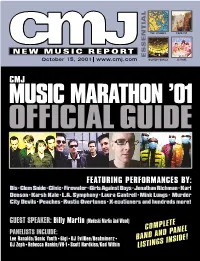

Complete Band and Panel Listings Inside!

THE STROKES FOUR TET NEW MUSIC REPORT ESSENTIAL October 15, 2001 www.cmj.com DILATED PEOPLES LE TIGRE CMJ MUSIC MARATHON ’01 OFFICIALGUIDE FEATURING PERFORMANCES BY: Bis•Clem Snide•Clinic•Firewater•Girls Against Boys•Jonathan Richman•Karl Denson•Karsh Kale•L.A. Symphony•Laura Cantrell•Mink Lungs• Murder City Devils•Peaches•Rustic Overtones•X-ecutioners and hundreds more! GUEST SPEAKER: Billy Martin (Medeski Martin And Wood) COMPLETE D PANEL PANELISTS INCLUDE: BAND AN Lee Ranaldo/Sonic Youth•Gigi•DJ EvilDee/Beatminerz• GS INSIDE! DJ Zeph•Rebecca Rankin/VH-1•Scott Hardkiss/God Within LISTIN ININ STORESSTORES TUESDAY,TUESDAY, SEPTEMBERSEPTEMBER 4.4. SYSTEM OF A DOWN AND SLIPKNOT CO-HEADLINING “THE PLEDGE OF ALLEGIANCE TOUR” BEGINNING SEPTEMBER 14, 2001 SEE WEBSITE FOR DETAILS CONTACT: STEVE THEO COLUMBIA RECORDS 212-833-7329 [email protected] PRODUCED BY RICK RUBIN AND DARON MALAKIAN CO-PRODUCED BY SERJ TANKIAN MANAGEMENT: VELVET HAMMER MANAGEMENT, DAVID BENVENISTE "COLUMBIA" AND W REG. U.S. PAT. & TM. OFF. MARCA REGISTRADA./Ꭿ 2001 SONY MUSIC ENTERTAINMENT INC./ Ꭿ 2001 THE AMERICAN RECORDING COMPANY, LLC. WWW.SYSTEMOFADOWN.COM 10/15/2001 Issue 735 • Vol 69 • No 5 CMJ MUSIC MARATHON 2001 39 Festival Guide Thousands of music professionals, artists and fans converge on New York City every year for CMJ Music Marathon to celebrate today's music and chart its future. In addition to keynote speaker Billy Martin and an exhibition area with a live performance stage, the event features dozens of panels covering topics affecting all corners of the music industry. Here’s our complete guide to all the convention’s featured events, including College Day, listings of panels by 24 topic, day and nighttime performances, guest speakers, exhibitors, Filmfest screenings, hotel and subway maps, venue listings, band descriptions — everything you need to make the most of your time in the Big Apple. -

UNIVERSAL MUSIC • Paul Mccartney – New • Mary J Blige – a Mary

Paul McCartney – New Mary J Blige – A Mary Christmas Luciano Pavarotti – The 50 Greatest Tracks New Releases From Classics And Jazz Inside!!! And more… UNI13-42 “Our assets on-line” UNIVERSAL MUSIC 2450 Victoria Park Ave., Suite 1, Willowdale, Ontario M2J 5H3 Phone: (416) 718.4000 Artwork shown may not be final UNIVERSAL MUSIC CANADA NEW RELEASE Artist/Title: KATY PERRY / PRISM Bar Code: Cat. #: B001921602 Price Code: SPS Order Due: Release Date: October 22, 2013 File: POP Genre Code: 33 Box Lot: 6 02537 53232 2 Key Tracks: ROAR ROAR Artist/Title: KATY PERRY / PRISM (DELUXE) Bar Code: Cat. #: B001921502 Price Code: AV Order Due: October 22, 2013 Release Date: October 22, 2013 6 02537 53233 9 File: POP Genre Code: 33 Box Lot: Key Tracks: ROAR Artist/Title: KATY PERRY / PRISM (VINYL) Bar Code: Cat. #: B001921401 Price Code: FB Order Due: October 22, 2013 Release Date: October 22, 2013 6 02537 53234 6 File: POP Genre Code: 33 Box Lot: Key Tracks: Vinyl is one way sale ROAR Project Overview: With global sales of over 10 million albums and 71 million digital singles, Katy Perry returns with her third studio album for Capitol Records: PRISM. Katy’s previous release garnered 8 #1 singles from one album (TEENAGE DREAM). She also holds the title for the longest stay in the Top Ten of Billboard’s Hot 100 – 66 weeks – shattering a 20‐year record. Katy Perry is the most‐followed female artist on Twitter, and has the second‐largest Twitter account in the world, with over 41 million followers. -

EV16 Inner DP

16 extreme voice JohnJJohnohn FoxxFoxx Exploring an Ocean of possibilities Extreme Voice 16 : Introduction All text and pictures © Extreme Voice 1997 except where stated. 1 Reproduction by permission only. have please. , g.uk . ears in My Eyes Cerise A. Reed [email protected] , oice Dancing with T obinhar r and eme V Extr hope you’ll like it! The Gift http://www.ultravox.org.uk , e ris, URL: has just been finished, and should be in the shops in June. EMAIL (ROBIN): Monument g.uk Rage In Eden enewal form will be enclosed. Cheques etc payable to (0117) 939 7078 We’ll be aiming for a Christmas issue, though of course this depends on news and events. We’ll Cerise Reed and Robin Har [email protected] UK £7.00 • EUROPE £8.00 • OUTSIDE EUROPE £11.00 as follows: It’s been an exceptionally busy year for us, what with CD re-releases and Internet websites, as you’ll been an exceptionally busy year for us, what with CD re-releases It’s TEL / FAX: THIS YEAR! 19 SALISBURY STREET, ST GEORGE, BRISTOL BS5 8EE ENGLAND STREET, 19 SALISBURY best! ”, and a yellow subscription r y eatment. At the time of writing EV16 EMAIL (CERISE): ry them, or at least be able to order them. So far ry them, or at least be able to order essages to the band, chat with fellow fans, download previously unseen photographs, listen to messages from Midge unseen photographs, listen to messages from essages to the band, chat with fellow fans, download previously ee in the following pages. -

Worldnewmusic Magazine

WORLDNEWMUSIC MAGAZINE ISCM During a Year of Pandemic Contents Editor’s Note………………………………………………………………………………5 An ISCM Timeline for 2020 (with a note from ISCM President Glenda Keam)……………………………..……….…6 Anna Veismane: Music life in Latvia 2020 March – December………………………………………….…10 Álvaro Gallegos: Pandemic-Pandemonium – New music in Chile during a perfect storm……………….....14 Anni Heino: Tucked away, locked away – Australia under Covid-19……………..……………….….18 Frank J. Oteri: Music During Quarantine in the United States….………………….………………….…22 Javier Hagen: The corona crisis from the perspective of a freelance musician in Switzerland………....29 In Memoriam (2019-2020)……………………………………….……………………....34 Paco Yáñez: Rethinking Composing in the Time of Coronavirus……………………………………..42 Hong Kong Contemporary Music Festival 2020: Asian Delights………………………..45 Glenda Keam: John Davis Leaves the Australian Music Centre after 32 years………………………….52 Irina Hasnaş: Introducing the ISCM Virtual Collaborative Series …………..………………………….54 World New Music Magazine, edition 2020 Vol. No. 30 “ISCM During a Year of Pandemic” Publisher: International Society for Contemporary Music Internationale Gesellschaft für Neue Musik / Société internationale pour la musique contemporaine / 国际现代音乐协会 / Sociedad Internacional de Música Contemporánea / الجمعية الدولية للموسيقى المعاصرة / Международное общество современной музыки Mailing address: Stiftgasse 29 1070 Wien Austria Email: [email protected] www.iscm.org ISCM Executive Committee: Glenda Keam, President Frank J Oteri, Vice-President Ol’ga Smetanová, -

Låtlista Från DJ Uthyrning 1

DJ Uthyrning Tel nr. 0761 - 122 123 E-post [email protected] Låtlista från DJ uthyrning 1. Engelbert Humperdinck - My World 2. Dean martin - that`s amore 3. Beatles - I Saw Her Standing There 4. Unforgettable - Nat king cole 5. Toto - Rosanna 6. Maxi Priest - Wild world 7. Engelbert Humperdinck - How To Win Your Love 8. Elton John - Nikita 9. Christina Aguilera - Candyman 10. Engelbert humperdinck - Release me 11. E.Humperdink - Quando Quando Quando 12. Nine de angel - Guardian angel 13. Lutricia McNeal - Stranded 14. Tom Jones - Help Yourself 15. The art company - Susanna 16. T.Jones - It's not unusual 17. Simply Red - It's only love 18. Toto - Africa 19. Frank Sinatra - Stranger in the nigth 20. Cher - Dov'e Lamore 21. M.Gaye - Sexual Healing Ultimix 22. Hanne Boel - I Wanna Make Love To You 23. tom jones - Delilah 24. Toto - Hold the line 25. Michel Teló Feat Magnate y Valentino - Ai Se Eu Te Pego (Remix) 26. Tina Turner - What's Love Got To Do With It (Special Extended Mix) 27. Tina Turner - We Don't Need Another Hero 28. DJ.bobo - Everybody 29. Enrique Iglesias - Bailamos 30. Mendez - Carnaval 31. MR President - Coco jamboo 32. Madonna - La isla bonita 33. Culture club - Do you really want to hurt me 34. Carl douglas - Kung fu fighting 35. Tina Turner & Jimmy Barnes - The Best (Extended Version) [Capitol Records] 36. ENGELBERT HUMPERDINK - SPANISH EYES 37. The four seasons - December 1963 38. Tom Jones - Love Me Tonight 39. L.Armostrong - What a wonderful world 40. Elton John - Sad song 41. -

Swr2-Musikpassagen-20160619

SWR2 MANUSKRIPT ESSAYS FEATURES KOMMENTARE VORTRÄGE _______________________________________________________________________________ SWR2 Musikpassagen Drohkulisse Die Stadt, die Nacht und ihre Klänge Von Harry Lachner Sendung: Sonntag, 19. Juni 2016, 23.03 Uhr Redaktion: Anette Sidhu-Ingenhoff Produktion: SWR 2016 __________________________________________________________________________ Bitte beachten Sie: Das Manuskript ist ausschließlich zum persönlichen, privaten Gebrauch bestimmt. Jede weitere Vervielfältigung und Verbreitung bedarf der ausdrücklichen Genehmigung des Urhebers bzw. des SWR. __________________________________________________________________________ Service: Mitschnitte aller Sendungen der Redaktion SWR2 Musikpassagen sind auf CD erhältlich beim SWR Mitschnittdienst in Baden-Baden zum Preis von 12,50 Euro. Bestellungen über Telefon: 07221/929-26030 Bestellungen per E-Mail: [email protected] __________________________________________________________________________ Kennen Sie schon das Serviceangebot des Kulturradios SWR2? Mit der kostenlosen SWR2 Kulturkarte können Sie zu ermäßigten Eintrittspreisen Veranstaltungen des SWR2 und seiner vielen Kulturpartner im Sendegebiet besuchen. Mit dem Infoheft SWR2 Kulturservice sind Sie stets über SWR2 und die zahlreichen Veranstaltungen im SWR2-Kulturpartner-Netz informiert. Jetzt anmelden unter 07221/300 200 oder swr2.de 2 -------- Musik Kancheli, Yula Yayoi -------- SP: Sieh, des Verbrechers Freund, der holde Abend, naht Mit leisem Raubtierschritt, der Helfer bei der Tat; -

Title Artist Gangnam Style Psy Thunderstruck AC /DC Crazy In

Title Artist Gangnam Style Psy Thunderstruck AC /DC Crazy In Love Beyonce BoomBoom Pow Black Eyed Peas Uptown Funk Bruno Mars Old Time Rock n Roll Bob Seger Forever Chris Brown All I do is win DJ Keo Im shipping up to Boston Dropkick Murphy Now that we found love Heavy D and the Boyz Bang Bang Jessie J, Ariana Grande and Nicki Manaj Let's get loud J Lo Celebration Kool and the gang Turn Down For What Lil Jon I'm Sexy & I Know It LMFAO Party rock anthem LMFAO Sugar Maroon 5 Animals Martin Garrix Stolen Dance Micky Chance Say Hey (I Love You) Michael Franti and Spearhead Lean On Major Lazer & DJ Snake This will be (an everlasting love) Natalie Cole OMI Cheerleader (Felix Jaehn Remix) Usher OMG Good Life One Republic Tonight is the night OUTASIGHT Don't Stop The Party Pitbull & TJR Time of Our Lives Pitbull and Ne-Yo Get The Party Started Pink Never Gonna give you up Rick Astley Watch Me Silento We Are Family Sister Sledge Bring Em Out T.I. I gotta feeling The Black Eyed Peas Glad you Came The Wanted Beautiful day U2 Viva la vida Coldplay Friends in low places Garth Brooks One more time Daft Punk We found Love Rihanna Where have you been Rihanna Let's go Calvin Harris ft Ne-yo Shut Up And Dance Walk The Moon Blame Calvin Harris Feat John Newman Rather Be Clean Bandit Feat Jess Glynne All About That Bass Megan Trainor Dear Future Husband Megan Trainor Happy Pharrel Williams Can't Feel My Face The Weeknd Work Rihanna My House Flo-Rida Adventure Of A Lifetime Coldplay Cake By The Ocean DNCE Real Love Clean Bandit & Jess Glynne Be Right There Diplo & Sleepy Tom Where Are You Now Justin Bieber & Jack Ü Walking On A Dream Empire Of The Sun Renegades X Ambassadors Hotline Bling Drake Summer Calvin Harris Feel So Close Calvin Harris Love Never Felt So Good Michael Jackson & Justin Timberlake Counting Stars One Republic Can’t Hold Us Macklemore & Ryan Lewis Ft. -

The Innocence Mission

Il I * ***4>a>a*** * **3 DIGIT 908 000817973 4401 8936 MAR9OCHZ MONTY GREENLY APT A 3740 ELM LONG BEACH CA 90807 I I Follows page 50 VOLUME 101 NO. 36 THE INTERNATIONAL NEWSWEEKLY OF MUSIC AND HOME ENTERTAINMENT September 9, 1989/$4.50 (U.S.), $5.50 (CAN.), £3.50 (U.K.) As New FCC Acts Against `Dark Knight'A Holiday-Sell-Thru White Knight? Radio 'Shock Jocks' ... Warner Cloaks `Batman' Vid Plans ple even more so now. It's stupid in into video stores Nov. 15 with a a home video decision until after La- BY BILL HOLLAND a major city to lose a multi -million BY JIM McCULLAUGH $24.95 suggested list, probably bor Day." and CRAIG ROSEN dollar license on this stuff." LOS ANGELES Although Warner without a promotional tie -in part- A number of sources close to the WASHINGTON In its first major Scott Shannon, PD /morning man Home Video remains tight -lipped, ner. studio, however, say Warner was broadcast-related action under new for KQLZ (Pirate Radio) Los Ange- trade sources continue to contend The studio's Mike Finnegan, di- preparing an official announcement chairman Alfred Sikes, the Federal les, says, "Some of the material we that "Batman "- 1989's undisputed rector of public relations, editorial for Tuesday (5) or Wednesday (6). Communications Commission has do is questionable, but if you really box -office champ at more than $230 and program services, will only say, Some distributors and major ac- launched enforcement proceedings listen to the complaints, you can po- million so far -will wing its way "Upper management will not make counts also indicate that Warner against three commercial stations (Continued on page 87) may have already replicated 5 mil- for allegedly indecent programming lion copies of "Batman," although by those stations' "shock jocks." VCA /Technicolor, the studio's du- The FCC will begin assessment plicator, would not return calls on action this month after receiving re- the matter. -

Dreaming of Virtuosity”: Michael Byron, Dreamers of Pearl

Dreaming of Virtuosity Breathless. Difficult. Obsessive. Audacious. Abstract. Shimmering. Extreme. Such adjectives come to mind when I consider the impact of Michael Byron’s latest composition for solo piano. Byron has been writing piano music for more than thirty years (having produced twelve solo or multiple-keyboard pieces to date), but if we compare his earliest modest effort for solo piano, Song of the Lifting Up of the Head (1972) with his most recent achievement recorded here, Dreamers of Pearl (2004– 05), we might be perplexed by the differences in scope, scale, material, complexity, and technical demands. The pieces have in common a sensitivity for the sound of the piano, a sensibility of extended playing/listening, and a sustained attention toward repetition through seemingly unsystematic processes that gradually transform what we hear. Both pieces create situations demanding a great deal of relaxed yet relentless concentration on the part of the performer and the listener. Indeed, Dreamers of Pearl belongs to a rare class of recent piano music—monumental compositions of great length, beauty, and depth—all self- consciously bound to traditional piano genres and their deeply ingrained structures, yet inventive and thrilling in ways that inspire only a few brave pianists to dedicate themselves wholeheartedly to these often mercilessly difficult pieces. Joseph Kubera, the tremendously gifted pianist for whom Dreamers of Pearl was written, is one of those brave few. Los Angeles, Toronto, New York Michael Byron was born in 1953 in Chicago, and spent his childhood in Los Angeles where he played trumpet from second grade on. Briefly, around age six, he had piano lessons with his aunt; he also studied trumpet with Mario Guarneri of the Los Angeles Philharmonic. -

KMGC Productions KMGC Song List

KMGC Productions KMGC Song List Title Title Title (We Are) Nexus 2 Faced Funks 2Drunk2Funk One More Shot (2015) (Cutmore Club Powerbass (2 Faced Funks Club Mix) I Can't Wait To Party (2020) (Original Mix) Powerbass (Original Club Mix) Club Mix) One More Shot (2015) (Cutmore Radio 2 In A Room Something About Your Love (2019) Mix) El Trago (The Drink) 2015 (Davide (Original Club Mix) One More Shot (2015) (Jose Nunez Svezza Club Mix) 2elements Club Mix) Menealo (2019) (AIM Wiggle Mix) You Don't Stop (Original Club Mix) They'll Never Stop Me (2015) (Bimbo 2 Sides Of Soul 2nd Room Jones Club Mix) Strong (2020) (Original Club Mix) Set Me Free (Original Club Mix) They'll Never Stop Me (2015) (Bimbo 2Sher Jones Radio Mix) 2 Tyme F. K13 Make It Happ3n (Original Club Mix) They'll Never Stop Me (2015) (Jose Dance Flow (Jamie Wright Club Mix) Nunez Radio Mix) Dance Flow (Original Club Mix) 3 Are Legend, Justin Prime & Sandro Silva They'll Never Stop Me (2015) (Sean 2 Unlimited Finn Club Mix) Get Ready For This 2020 (2020) (Colin Raver Dome (2020) (Original Club Mix) They'll Never Stop Me (2015) (Sean Jay Club Mix) 3LAU & Nom De Strip F. Estelle Finn Radio Mix) Get Ready For This 2020 (2020) (Stay The Night (AK9 Club Mix) 10Digits F. Simone Denny True Club Mix) The Night (Hunter Siegel Club Mix) Calling Your Name (2016) (Olav No Limit 2015 (Cassey Doreen Club The Night (Original Club Mix) Basoski Club Mix) Mix) The Night (Original Radio Mix) Calling Your Name (Olav Basoski Club 20 Fingers F. -

Brian Eno • • • His Music and the Vertical Color of Sound

BRIAN ENO • • • HIS MUSIC AND THE VERTICAL COLOR OF SOUND by Eric Tamm Copyright © 1988 by Eric Tamm DEDICATION This book is dedicated to my parents, Igor Tamm and Olive Pitkin Tamm. In my childhood, my father sang bass and strummed guitar, my mother played piano and violin and sang in choirs. Together they gave me a love and respect for music that will be with me always. i TABLE OF CONTENTS DEDICATION ............................................................................................ i TABLE OF CONTENTS........................................................................... ii ACKNOWLEDGEMENTS ....................................................................... iv CHAPTER ONE: ENO’S WORK IN PERSPECTIVE ............................... 1 CHAPTER TWO: BACKGROUND AND INFLUENCES ........................ 12 CHAPTER THREE: ON OTHER MUSIC: ENO AS CRITIC................... 24 CHAPTER FOUR: THE EAR OF THE NON-MUSICIAN........................ 39 Art School and Experimental Works, Process and Product ................ 39 On Listening........................................................................................ 41 Craft and the Non-Musician ................................................................ 44 CHAPTER FIVE: LISTENERS AND AIMS ............................................ 51 Eno’s Audience................................................................................... 51 Eno’s Artistic Intent ............................................................................. 55 “Generating and Organizing Variety in