Sensor and Sensor Fusion Technology in Autonomous Vehicles: a Review

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Cognitive Radar (STO-TR-SET-227)

NORTH ATLANTIC TREATY SCIENCE AND TECHNOLOGY ORGANIZATION ORGANIZATION AC/323(SET-227)TP/947 www.sto.nato.int STO TECHNICAL REPORT TR-SET-227 Cognitive Radar (Radar cognitif) Final Report of Task Group SET-227. Published October 2020 Distribution and Availability on Back Cover NORTH ATLANTIC TREATY SCIENCE AND TECHNOLOGY ORGANIZATION ORGANIZATION AC/323(SET-227)TP/947 www.sto.nato.int STO TECHNICAL REPORT TR-SET-227 Cognitive Radar (Radar cognitif) Final Report of Task Group SET-227. The NATO Science and Technology Organization Science & Technology (S&T) in the NATO context is defined as the selective and rigorous generation and application of state-of-the-art, validated knowledge for defence and security purposes. S&T activities embrace scientific research, technology development, transition, application and field-testing, experimentation and a range of related scientific activities that include systems engineering, operational research and analysis, synthesis, integration and validation of knowledge derived through the scientific method. In NATO, S&T is addressed using different business models, namely a collaborative business model where NATO provides a forum where NATO Nations and partner Nations elect to use their national resources to define, conduct and promote cooperative research and information exchange, and secondly an in-house delivery business model where S&T activities are conducted in a NATO dedicated executive body, having its own personnel, capabilities and infrastructure. The mission of the NATO Science & Technology Organization -

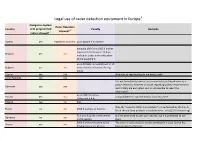

Legal Use of Radar Detection Equipment in Europe1 Navigation System Radar Detectors Country with Programmed Penalty Remarks Allowed? 3 Radars Allowed? 2

Legal use of radar detection equipment in Europe1 Navigation System Radar Detectors Country with programmed Penalty Remarks allowed? 3 radars allowed? 2 Austria yes legislation not clear up to 4000 € if no license between 100 € and 1000 € and/or imprisonment between 15 days Belgium yes no and up to 1 year and confiscation of the equipment up to 50 BGN and withdrawal of 10 Bulgaria yes no control points of control driving ticket Cyprus yes yes Attempts to regulate legally are being made Czech Republic yes yes It is not forbidden to own or use a radar detector (also known as a police detector). However all canals regarding police, rescue services Denmark yes yes and military are encrypted, so it is not possible to reach the information. up to 100 fine unites Estonia yes no not prohibited to own the device, but so to use it (1 fine unit = 4 €) Finland yes no fine Only the "assistant d'aide à la conduite" are authorized by the law. A France no no 1500 € and loss of 3 points list of the conform products is available here : http://a2c.infocert.org/ 75 € and 4 points in the central it is not prohibited to own such devices, but it is prohibited to use Germany no no traffic registry them 2000 € and confiscation of the The use of a radar detector can be permitted if a state licence has Greece yes no driving licence for 30 days been granted to the user Legal use of radar detection equipment in Europe1 Navigation System Radar Detectors Country with programmed Penalty Remarks allowed? 3 radars allowed? 2 Hungary yes yes Iceland yes yes Ireland no no no specific penalty yes (the Police also provides a map of from €761,00 to €3.047,00 (Art. -

LONG RANGE Radar/Laser Detector User's Manual R7

R7 LONG RANGE Radar/Laser Detector User’s Manual © 2019 Uniden America Corporation Issue 1, March 2019 Irving, Texas Printed in Korea CUSTOMER CARE At Uniden®, we care about you! If you need assistance, please do NOT return this product to your place of purchase Save your receipt/proof of purchase for warranty. Quickly find answers to your questions by: • Reading this User’s Manual. • Visiting our customer support website at www.uniden.com. Images in this manual may differ slightly from your actual product. DISCLAIMER: Radar detectors are illegal in some states. Some states prohibit mounting any object on your windshield. Check applicable law in your state and any state in which you use the product to verify that using and mounting a radar detector is legal. Uniden radar detectors are not manufactured and/or sold with the intent to be used for illegal purposes. Drive safely and exercise caution while using this product. Do not change settings of the product while driving. Uniden expects consumer’s use of these products to be in compliance with all local, state, and federal law. Uniden expressly disclaims any liability arising out of or related to your use of this product. CONTENTS CUSTOMER CARE .......................................................................................................... 2 R7 OVERVIEW .............................................................................................5 FEATURES ....................................................................................................................... -

Getting Started with Motionfx Sensor Fusion Library in X-CUBE-MEMS1 Expansion for Stm32cube

UM2220 User manual Getting started with MotionFX sensor fusion library in X-CUBE-MEMS1 expansion for STM32Cube Introduction The MotionFX is a middleware library component of the X-CUBE-MEMS1 software and runs on STM32. It provides real-time motion-sensor data fusion. It also performs gyroscope bias and magnetometer hard iron calibration. This library is intended to work with ST MEMS only. The algorithm is provided in static library format and is designed to be used on STM32 microcontrollers based on the ARM® Cortex® -M0+, ARM® Cortex®-M3, ARM® Cortex®-M4 or ARM® Cortex®-M7 architectures. It is built on top of STM32Cube software technology to ease portability across different STM32 microcontrollers. The software comes with sample implementation running on an X-NUCLEO-IKS01A2, X-NUCLEO-IKS01A3 or X-NUCLEO- IKS02A1 expansion board on a NUCLEO-F401RE, NUCLEO-L476RG, NUCLEO-L152RE or NUCLEO-L073RZ development board. UM2220 - Rev 8 - April 2021 www.st.com For further information contact your local STMicroelectronics sales office. UM2220 Acronyms and abbreviations 1 Acronyms and abbreviations Table 1. List of acronyms Acronym Description API Application programming interface BSP Board support package GUI Graphical user interface HAL Hardware abstraction layer IDE Integrated development environment UM2220 - Rev 8 page 2/25 UM2220 MotionFX middleware library in X-CUBE-MEMS1 software expansion for STM32Cube 2 MotionFX middleware library in X-CUBE-MEMS1 software expansion for STM32Cube 2.1 MotionFX overview The MotionFX library expands the functionality of the X-CUBE-MEMS1 software. The library acquires data from the accelerometer, gyroscope (6-axis fusion) and magnetometer (9-axis fusion) and provides real-time motion-sensor data fusion. -

D2.1 Sensor Data Fusion V1

Grant Agreement Number: 687458 Project acronym: INLANE Project full title: Low Cost GNSS and Computer Vision Fusion for Accurate Lane Level Naviga- tion and Enhanced Automatic Map Generation D2.1 Sensor Data Fusion Due delivery date: 31/12/2016 Actual delivery date: 30/12/2016 Organization name of lead participant for this deliverable: TCA Project co-funded by the European Commission within Horizon 2020 and managed by the European GNSS Agency (GSA) Dissemination level PU Public x PP Restricted to other programme participants (including the GSA) RE Restricted to a group specified by the consortium (including the GSA) CO Confidential , only for members of the consortium (including the GSA) Document Control Sheet Deliverable number: D2.1 Deliverable responsible: TeleConsult Austria Workpackage: 2 Editor: Axel Koppert Author(s) – in alphabetical order Name Organisation E-mail Axel Koppert TCA [email protected] Document Revision History Version Date Modifications Introduced Modification Reason Modified by V0.1 18/11/2016 Table of Contents Axel Koppert V0.2 05/12/2016 Filling the document with content Axel Koppert V0.3 19/12/2016 Internal Review François Fischer V1.0 23/12/2016 First final version after internal review Claudia Fösleitner Abstract This Deliverable is the first release of the Report on the development of the sensor fusion and vision based software modules. The report presents the INLANE sensor-data fusion and GNSS processing approach. This first report release presents the status of the development (tasks 2.1 and 2.5) at M12. Legal Disclaimer The information in this document is provided “as is”, and no guarantee or warranty is given that the information is fit for any particular purpose. -

Owner's Manual

OWNER’S MANUAL Download this Manual at: v1gen2.info/manual With exclusive Analyzer Modes: ® N All -Bogeys ® N Logic ® N Advanced-Logic powered Contents Welcome to Full Coverage Full Coverage .........................................................................................1-2 What’s Included .....................................................................................3 Controls and Functions. ..........................................................................4 Mounting — Where and How. ...............................................................5 How to connect to 12V, USB jack...........................................................5 How to connect a headphone ................................................................6 How to set Muted Volume ......................................................................6 Display readings .....................................................................................6 How to set Analyzer Modes ....................................................................7 How to get our free app ..........................................................................7 How to connect to your phone ...............................................................7 Lighter Adapter. ......................................................................................8 Installation — Direct-Wire Power Adapter ..............................................8 Changing the Fuse ..................................................................................9 Concealed Display -

The Impact of Radar Detectors on Highway Traffic Safety

U.S. Department of Transportation National Highway Traffic Safety Administration DOT HS 807 518 August 1988 Final Report The Impact of Radar Detectors on Highway Traffic Safety This document is available to the public from the National Technical Information Service, Springfield, Virginia 22161. i The United States Government does not endorse products or manufacturers. Trade or manufacturers' names appear only because they are considered essential to the object of this report. TECHNICAL REPORT STANDARD TITLE PATE 1. Report No. 2. Government Accession No. 3. Recipient's Catalog No. DOT HS 807 518 4. T,tli and Subtitle 5. Report Dote August, 1988 The Impact of Radar Detectors 6. Performing Organization Code on Highway Traffic Safety 7 Author's. 8. Performing Organ zation Report No. Pezoldt, V.J. and Brackett, R.Q. RF7095 9. Performing Organization Name and Address 10. Work Unit No. Texas Transportation Institute -^ Human Factors Div. VI 11. Contract or Grant No. TTI/CE Building, Suite 601 DTNH22-87-C-05111 Texas A&M University, College Station, TX 77843-3135 13. Type of Report and Period Covered .12. Sponsoring Agency Nome and Address Department of Transportation National Highway Traffic Safety Administration Final 400 Seventh Street, SW 14. Sponsoring Agency Code Washington, D.C. 20590 15. Supplementary Notes 16. Abstract As part of a program to examine the relationship between highway traffic safety and the use of radar detectors, comparisons were made between speed distributions when a detectable radar transmission was present and when it was not. The impact of detectors on speeds varied as a function of the states sampled, highway facility type and vehicle classification. -

LONG RANGE Radar/Laser Detector User's Manual R7

R7 LONG RANGE Radar/Laser Detector User’s Manual © 2020 Uniden America Corporation Issue 2, February 2020 Irving, Texas Printed in Korea CUSTOMER CARE At Uniden®, we care about you! If you need assistance, please do NOT return this product to your place of purchase Save your receipt/proof of purchase for warranty. Quickly find answers to your questions by: • Reading this User’s Manual. • Visiting our customer support website at www.uniden.com. Images in this manual may differ slightly from your actual product. DISCLAIMER: Radar detectors are illegal in some states. Some states prohibit mounting any object on your windshield. Check applicable law in your state and any state in which you use the product to verify that using and mounting a radar detector is legal. Uniden radar detectors are not manufactured and/or sold with the intent to be used for illegal purposes. Drive safely and exercise caution while using this product. Do not change settings of the product while driving. Uniden expects consumer’s use of these products to be in compliance with all local, state, and federal law. Uniden expressly disclaims any liability arising out of or related to your use of this product. RELEASE NOTES - V1.27 New/Improved • MRCD detection delay improved. • Rear Balance mode added. • Overspeed alarm and Radar alert alternate when both are detected at the same time. • All Threat display signal lockout and multiple signal displays improved. FIXES • Auto Mute Volume range changed to 0 - 7. • Arrow and Band Color apply to All Threat display. • MRCD now has priority over Ka alerts. -

XRS 9345 Version a A1 English

Important Information and Customer Assistance Introduction Intro Operation Customer Warranty Important InformationAssistance • Federal Laws Governing the Use of Radar Detectors It is not against federal law to receive radar transmissions with your Cobra radar/ laser detector. The Communications Act of 1924 guarantees your right to receive radio Secondarytransmissions Icons on any frequency. Local laws that contravene this Act, while illegal, may be enforced by your local law enforcement officials until and unless they are prohibited from doing so by federal court action. Operating Instructions Safety Alert Use of this product is not intended to, and does not, ensure that motorists or passengers will not beNotice involved in traffic accidents. It is only intended to alert the motorist that an emergency vehicle equipped with a Cobra Safety Alert transmitter is within range as defined by that product. Please call local fire and police departments to learn if coverage exists in your area. The Cobra line of quality products includes: Safe Driving Motorists, as well as operators of emergency or service vehicles, are expected to exercise all due caution while using this product, and to obey all applicable traffic laws. Security of Your Vehicle CB Radios Main Icons Before leaving your vehicle, always remember to conceal your radar detector in order to reduce the possibility of break-in and theft. microTALK® Radios Radar/Laser Detectors Customer Assistance Intro Operation Customer Warranty ® CustomerAssistance Assistance Safety Alert Traffic Warning Systems • Should you encounter any problems with this product, or not understand its many features, Mobile GPS Navigation Systems please refer to this owner’s manual. -

Multi-Sensor Perceptual System for Mobile Robot and Sensor Fusion-Based Localization

2012 International Conference on Control, Automation and Information Sciences (ICCAIS) Multi-Sensor Perceptual System for Mobile Robot and Sensor Fusion-based Localization T. T. Hoang, P. M. Duong, N. T. T. Van, D. A. Viet and T. Q. Vinh Department of Electronics and Computer Engineering University of Engineering and Technology Vietnam National University, Hanoi Abstract - This paper presents an Extended Kalman Filter (EKF) dealing with Dirac delta function [1]. Al-Dhaher and Makesy approach to localize a mobile robot with two quadrature proposed a generic architecture that employed an standard encoders, a compass sensor, a laser range finder (LRF) and an Kalman filter and fuzzy logic techniques to adaptively omni-directional camera. The prediction step is performed by incorporate sensor measurements in a high accurate manner employing the kinematic model of the robot as well as estimating [2]. In [3], a Kalman filter update equation was developed to the input noise covariance matrix as being proportional to the obtain the correspondence of a line segment to a model, and wheel’s angular speed. At the correction step, the measurements this correspondence was then used to correct position from all sensors including incremental pulses of the encoders, line estimation. In [4], an extended Kalman filter was conducted to segments of the LRF, robot orientation of the compass and fuse the DGPS and odometry measurements for outdoor-robot deflection angular of the omni-directional camera are fused. navigation. Experiments in an indoor structured environment were implemented and the good localization results prove the In our work, one novel platform of mobile robot with some effectiveness and applicability of the algorithm. -

Choice of Security Professionals Worldwide

www.isaso.com Choice of Security Professionals Worldwide Product Catalog Contents Outdoor Radar Detector RB-100F ----------------------------------- 02 RB-100F with RF-001 ----------------------------------- 03 Perimeter Tower Series TW-001 ----------------------------------- 04 Photoelectric Beam Detector PB-200L ----------------------------------- 05 MPB Series ----------------------------------- 06 PB-D Series ----------------------------------- 07 PB-SA Series ----------------------------------- 08 PB-S Series ----------------------------------- 09 Indoor IoT System Rayhome ----------------------------------- 10 Passive Infrared Detector PM-4010P / PM-4110P ----------------------------------- 12 PA-4610P ----------------------------------- 13 PA-0112 /PA-0112DD ----------------------------------- 14 Others Other Detectors ----------------------------------- 15 Magnetic Contacts ----------------------------------- 16 Accessories ----------------------------------- 17 Company Introduction KMT is a 100% private Korean capital-owned company active in designing, production, and global sales of high-quality intrusion alarm detectors. Both hardware and firmware are based on innovative technological solutions, developed by KMT’s expert engineering staff on the in-house R&D center. SASO products have won international acclaim among distributors, installers, surveillance companies and end users, including Tyco, Honeywell, Samsung, etc. The authorized distribution network is constituted by national and international partners from over twenty -

THE ULTIMATE GUIDE to AVOIDING (AND FIGHTING) SPEEDING TICKETS

THE ULTIMATE GUIDE to AVOIDING (AND FIGHTING) SPEEDING TICKETS A GUIDE BY: Rocky Mountain Radar SPEEDING TICKETS AVOID | FIGHT | WIN Rocky Mountain Radar ii Copyright © 2015 Rocky Mountain Radar All rights reserved. ISBN: 1517790522 ISBN-13: 978-1517790523 “I was driving through Salt Lake City in the center lane at exactly the posted speed limit looking for my exit. This lady comes screaming past me on the right going at least 20 over! Suddenly she hits the brakes and dramatically slows down, letting me pass her and there‟s a cop on the side of the road with his radar gun, To this day I don‟t know if the lady had a radar detector or just saw the officer; I do know that the officer looked up and saw me passing her and assumed I was the guilty party. Yep, I got a ticket. What bites is that I was not speeding, for once!” Has this ever happened to you? You‘re within the limit and get burned anyway? Well, hopefully this little book can give you some useful tips and tools to avoid those inconvenient stops The Ultimate Guide to Avoiding (and Fighting) Speeding Tickets CONTENTS 1 Introduction 2 2 Avoiding Tickets 4 3 Traffic Offenses 11 4 Ticket Info 15 5 Enforcement 17 6 LIDAR 19 7 RADAR 24 8 Pacing 27 9 VASCAR 29 10 Speed Errors 31 11 Court 37 12 Tips & Tricks 43 13 Final Word 45 v “When cities in the US remove traffic ticket revenue from their budgets and law enforcement actively enforces the rules of the road for safety rather than revenue generation, we will gladly stop manufacturing and selling radar scrambling products.” Michael Churchman, President Rocky Mountain Radar 1 Rocky Mountain Radar Introduction: Speeding tickets are a costly fact of life and one of the financial hazards of driving.