The Chordal Analysis of Tonal Music

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Schenkerian Analysis in the Modern Context of the Musical Analysis

Mathematics and Computers in Biology, Business and Acoustics. THE SCHENKERIAN ANALYSIS IN THE MODERN CONTEXT OF THE MUSICAL ANALYSIS. ANCA PREDA, PETRUTA-MARIA COROIU, Faculty of Music, Transilvania University of Brasov, 9 Eroilor Blvd, ROMANIA. [email protected], [email protected] Abstract: Music analysis represents the most useful way of exploration and innovation of musical interpretations. Performers who use music analysis efficiently will find it a valuable method for finding the kind of musical richness they desire in their interpretations. The use of Schenkerian analysis in performance offers a rational basis and a unique way of interpreting music in performance. Key-Words: Schenkerian analysis, structural hearing, prolongation, progression, modernity. 1 Introduction: Musical analysis is a musicological approach in order to determine the structural components of a musical text, the technical development of the discourse, the morphological descriptions and the understanding of the meaning of the work. Analysis has complete autonomy in the context of the musicological disciplines as the music philosophy, the musical aesthetics, the compositional technique, the music history and the musical criticism. 2 Problem Formulation: Even in a simple piece of piano music, the ear hears a vast number of notes, many of them played simultaneously. The situation is similar to that found in language. Although music is quite different to spoken language, most listeners will still group the different sounds they hear into motifs, phrases and even longer sections. Schenker was not afraid to criticize what he saw as a general lack of theoretical and practical understanding amongst musicians. As a dedicated performer, composer, teacher and editor of music himself, he believed that the professional practice of all these activities suffered from serious misunderstandings of how tonal music works.

Aldous Huxley's Use of Music in "Point Counter Point"

University of Montana, ScholarWorks at University of Montana. Graduate Student Theses, Dissertations, & Professional Papers, Graduate School, 1940. Aldous Huxley's use of music in "Point Counter Point". Bennett Brudevold, The University of Montana. Follow this and additional works at: https://scholarworks.umt.edu/etd Let us know how access to this document benefits you. Recommended Citation: Brudevold, Bennett, "Aldous Huxley's use of music in "Point Counter Point"" (1940). Graduate Student Theses, Dissertations, & Professional Papers. 1499. https://scholarworks.umt.edu/etd/1499 This Thesis is brought to you for free and open access by the Graduate School at ScholarWorks at University of Montana. It has been accepted for inclusion in Graduate Student Theses, Dissertations, & Professional Papers by an authorized administrator of ScholarWorks at University of Montana. For more information, please contact [email protected]. ALDOUS HUXLEY'S USE OF MUSIC IN POINT COUNTER POINT, by Bennett Brudevold. Presented in Partial Fulfillment of the Requirement for the Degree of Master of Arts, State University of Montana, 1940. Approved: Chairman of Examining Committee; Chairman of Graduate Committee. UMI Number: EP35823. All rights reserved. INFORMATION TO ALL USERS: The quality of this reproduction is dependent upon the quality of the copy submitted. In the unlikely event that the author did not send a complete manuscript and there are missing pages, these will be noted. Also, if material had to be removed, a note will indicate the deletion. Dissertation Publishing, UMI EP35823. Published by ProQuest LLC (2012). Copyright in the Dissertation held by the Author.

Harmonic, Melodic, and Functional Automatic Analysis

HARMONIC, MELODIC, AND FUNCTIONAL AUTOMATIC ANALYSIS. Plácido R. Illescas, David Rizo, José M. Iñesta, Dept. Lenguajes y Sistemas Informáticos, Universidad de Alicante, E-03071 Alicante, Spain. [email protected] fdrizo,[email protected] ABSTRACT: This work is an effort towards the development of a system for the automation of traditional harmonic analysis of polyphonic scores in symbolic format. A number of stages have been designed in this procedure: melodic analysis of harmonic and non-harmonic tones, vertical harmonic analysis, tonality, and tonal functions. All this information is represented as a weighted directed acyclic graph. The best possible analysis is the path that maximizes the sum of weights in the graph, obtained through a dynamic programming algorithm. The feasibility of the proposed approach has been tested on six of J. S. Bach's harmonized chorales. [Introduction:] Besides the interpretation, there are many applications of this automatic analysis to diverse areas of music: education, score reduction, pitch spelling, harmonic comparison of works [10], etc. The automatic tonal analysis has been tackled under different approaches and objectives. Some works use grammars to solve the problem [3, 14], or an expert system [6]. There are probabilistic models like that in [11] and others based on preference rules or scoring techniques [9, 13]. The work in [4] tries to solve the problem using neural networks. Maybe the best effort so far, from our point of view, is that of Taube [12], who solves the problem by means of model matching. A more comprehensive review of these works can be found in [1].
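The abstract above describes the best analysis as the maximum-weight path through a weighted directed acyclic graph, found by dynamic programming. The following is only a minimal sketch of that general technique, not the authors' implementation: the function name `best_path`, the node labels, and the edge weights are all hypothetical, chosen purely for illustration.

```python
def best_path(edges, source, sink):
    """Return (total_weight, path) for the maximum-weight path in a DAG.

    edges maps a node to a list of (successor, weight) pairs.
    Hypothetical helper for illustration only.
    """
    # Build a topological order of the nodes reachable from the source
    # using a depth-first search (post-order, then reversed).
    order, seen = [], set()

    def visit(node):
        if node in seen:
            return
        seen.add(node)
        for succ, _ in edges.get(node, []):
            visit(succ)
        order.append(node)

    visit(source)
    order.reverse()

    # Dynamic programming: best[n] is the largest total weight of any
    # path from the source to n; prev[n] remembers how we got there.
    best = {source: 0.0}
    prev = {}
    for node in order:
        for succ, weight in edges.get(node, []):
            candidate = best[node] + weight
            if succ not in best or candidate > best[succ]:
                best[succ] = candidate
                prev[succ] = node

    if sink not in best:
        raise ValueError("sink is not reachable from source")

    # Walk the predecessor links back from the sink to recover the path.
    path = [sink]
    while path[-1] != source:
        path.append(prev[path[-1]])
    path.reverse()
    return best[sink], path


# Invented example: nodes are candidate chord labels, weights are made up.
example_edges = {
    "start": [("I", 1.0), ("vi", 0.5)],
    "I": [("IV", 0.8), ("V", 1.0)],
    "vi": [("IV", 1.0)],
    "IV": [("end", 0.2)],
    "V": [("end", 1.0)],
}
weight, path = best_path(example_edges, "start", "end")
```

In this toy graph the path through "I" and "V" accumulates the largest total weight, so it is the one returned. How the weights would actually be derived from melodic, harmonic, and tonal evidence is specific to the paper and is not modeled here.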

Birdsong in the Music of Olivier Messiaen

Birdsong in the Music of Olivier Messiaen. A thesis submitted to Middlesex University in partial fulfilment of the requirements for the degree of Doctor of Philosophy. David Kraft, School of Art, Design and Performing Arts, Middlesex University, December 2000. Abstract: The intention of this investigation is to formulate a chronological survey of Messiaen's treatment of birdsong, taking into account the species involved and the composer's evolving methods of motivic manipulation, instrumentation, incorporation of intrinsic characteristics and structure. The approach taken in this study is to survey selected works in turn, developing appropriate tabular forms with regard to Messiaen's use of 'style oiseau', identified bird vocalisations and even the frequent appearances of music that includes familiar characteristics of bird style, although not so labelled in the score. Due to the repetitive nature of so many motivic fragments in birdsong, it has become necessary to develop new terminology and incorporate derivations from other research findings. The 'motivic classification' tables, for instance, present the essential motivic features in some very complex birdsong. The study begins by establishing the importance of the unique musical procedures developed by Messiaen: these involve, for example, questions of form, melody and rhythm. The problem of 'authenticity' (that is, the degree of accuracy with which Messiaen chooses to treat birdsong) is then examined. A chronological survey of Messiaen's use of birdsong in selected major works follows, demonstrating an evolution from the general term 'oiseau' to the precise attribution

Stephen Brien

AN INVESTIGATION OF FORWARD MOTION AS AN ANALYTIC TEMPLATE. STEPHEN BRIEN. A THESIS SUBMITTED IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF MASTER OF MUSIC PERFORMANCE, SYDNEY CONSERVATORIUM OF MUSIC, UNIVERSITY OF SYDNEY, 2004. ACKNOWLEDGMENTS: The author would like to thank Dick Montz, Craig Scott, Phil Slater and Dale Barlow for their support and encouragement in the writing of this thesis. To Hal Galper. ABSTRACT: Investigation of Forward Motion as an analytic template extracts the elements of Forward Motion as expounded in jazz pianist Hal Galper's book, Forward Motion from Bach to Bebop; a Corrective Approach to Jazz Phrasing, and uses these elements as an analytic template with which to assess four solos of Australian jazz musician Dale Barlow. This case study includes a statistical analysis of the Forward Motion elements inherent in Barlow's improvising, which provides an interesting insight into how much of Galper's educational theory exists within the playing of a musician unfamiliar with Galper's methods. TABLES CONT...: Tables 2G, 2H, 2I, 2J, 2K, 2L, 2M, 2N, 2O, 2P, 2Q. Angel Eyes: Tables 1A, 1B, 2A, 2B, 2C.

Figured Bass and Tonality Recognition

Figured Bass and Tonality Recognition. Jerome Barthélemy and Alain Bonardi, Ircam, 1 Place Igor Stravinsky, 75004 Paris, France, 33 01 44 78 48 43. [email protected] [email protected] ABSTRACT: In the course of the WedelMusic project [15], we are currently implementing retrieval engines based on musical content automatically extracted from a musical score. By musical content, we mean not only main melodic motives, but also harmony, or tonality. In this paper, we first review previous research in the domain of harmonic analysis of tonal music. We then present a method for automated harmonic analysis of a music score based on the extraction of a figured bass. The figured bass is determined by means of a template-matching algorithm, where templates for chords can be entirely and easily redefined by the end-user. We also address the problem of tonality recognition with a simple algorithm based on the figured bass. [Introduction:] The aim of the figured bass was, in principle, oriented towards interpretation. Rameau turned it into a genuine theory of tonality with the introduction of the fundamental concept of root. Successive refinements of the theory have been introduced in the 18th, 19th (e.g., by Reicha and Fetis) and 20th (e.g., Schoenberg [10, 11]) centuries. For a general history of the theory of harmony, one can refer to Ian Bent [1] or Jacques Chailley [2]. Several processes can be built on top of a harmonic reduction: detection of tonality, recognition of cadence, and detection of similar structures. Following a brief review of systems addressing the problem of
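The abstract above mentions a template-matching algorithm whose chord templates the end-user can redefine. As a rough illustration of what template matching on chords can look like, here is a minimal sketch. It is not the authors' algorithm: the `TEMPLATES` table, the `match_chord` function, and the note-name spelling are assumptions made for this example, and the sketch matches pitch-class sets only, without deriving figured-bass numerals.

```python
# Chord templates as sets of semitone offsets above the root.
# Illustrative assumption: a user could replace or extend this table.
TEMPLATES = {
    "major": {0, 4, 7},
    "minor": {0, 3, 7},
    "diminished": {0, 3, 6},
    "dominant7": {0, 4, 7, 10},
}

# One arbitrary spelling per pitch class (C = 0).
NOTE_NAMES = ["C", "C#", "D", "Eb", "E", "F", "F#", "G", "Ab", "A", "Bb", "B"]


def match_chord(midi_pitches, templates=TEMPLATES):
    """Return (root_name, quality) for the first exact match, else None.

    Each sounding pitch class is tried as a root; the intervals above that
    candidate root are compared with every template.
    """
    pitch_classes = {p % 12 for p in midi_pitches}
    for root in sorted(pitch_classes):
        intervals = {(pc - root) % 12 for pc in pitch_classes}
        for quality, template in templates.items():
            if intervals == template:
                return NOTE_NAMES[root], quality
    return None


# E4-G4-C5 is a C major triad in first inversion.
first_inversion = match_chord([64, 67, 72])
# G3-B3-D4-F4 is a dominant seventh chord on G.
dominant = match_chord([55, 59, 62, 65])
# A chromatic cluster matches none of the templates above.
cluster = match_chord([60, 61, 62])
```

Because the templates live in an ordinary dictionary, passing a different `templates` argument changes which chords are recognized without touching the matching code, which is one simple way to make templates user-definable.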

Identification and Analysis of Wes Montgomery's Solo Phrases Used in 'West Coast Blues'

Identification and analysis of Wes Montgomery's solo phrases used in 'West Coast Blues'. Joshua Hindmarsh. A Thesis submitted in fulfillment of requirements for the degree of Masters of Music (Performance), Sydney Conservatorium of Music, The University of Sydney, 2016. Declaration: I, Joshua Hindmarsh, hereby declare that this submission is my own work and that it contains no material previously published or written by another person except for the co-authored publication submitted and where acknowledged in the text. This thesis contains no material that has been accepted for the award of a higher degree. Signed: Date: 4/4/2016. Acknowledgments: I wish to extend my sincere gratitude to the following people for their support and guidance: Mr Phillip Slater, Mr Craig Scott, Prof. Anna Reid, Mr Steve Brien, and Dr Helen Mitchell. Special acknowledgement and thanks must go to Dr Lyle Croyle and Dr Clare Mariskind for their guidance and help with editing this research. I am humbled by the knowledge of such great minds and the grand ideas that have been shared with me, without which this thesis would never be possible. Abstract: The thesis investigates Wes Montgomery's improvisational style, with the aim of uncovering the inner workings of Montgomery's improvisational process, specifically his sequencing and placement of musical elements on a phrase by phrase basis. The material chosen for this project is Montgomery's composition 'West Coast Blues', a tune that employs 3/4 meter and a variety of chordal backgrounds and moving key centers, and which is historically regarded as a breakthrough recording for modern jazz guitar.

Harmonic Vocabulary in the Music of John Adams: A Hierarchical Approach

Yale University Department of Music. Harmonic Vocabulary in the Music of John Adams: A Hierarchical Approach. Author(s): Timothy A. Johnson. Source: Journal of Music Theory, Vol. 37, No. 1 (Spring, 1993), pp. 117-156. Published by: Duke University Press on behalf of the Yale University Department of Music. Stable URL: http://www.jstor.org/stable/843946 HARMONIC VOCABULARY IN THE MUSIC OF JOHN ADAMS: A HIERARCHICAL APPROACH. Timothy A. Johnson. Overview: Following the minimalist tradition, much of John Adams's music consists of long passages employing a single set of pitch classes (pcs) usually encompassed by one diatonic set. In many of these passages the pcs form a single diatonic triad or seventh chord with no additional pcs. In other passages textural and registral formations imply a single triad or seventh chord, but additional pcs obscure this chord to some degree.

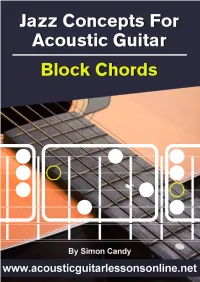

Jazz Concepts for Acoustic Guitar Block Chords

Jazz Concepts For Acoustic Guitar Block Chords. © Guitar Mastery Solutions, Inc. Table Of Contents: A Little Jazz?; What Are Block Chords?; Chords And Inversions; The 3 Main Block Chord Types And How They Fit On The Fretboard (Major 7th, Dominant 7th); Block Chord Application 1 - Blues (Background, Organising Dominant Block Chords On The Fretboard, Blues Example); Block Chord Application 2 - Neighbour Chords (Background, The Progression, Neighbour Chord Example); Block Chord Application 3 - Altered Dominant Block Chords (Background, Altered Dominant Chord Chart, Altered Dominant Chord Example); What's Next? A Little Jazz? This ebook is not necessarily for jazz guitar players. In fact, it's more for acoustic guitarists who would like to inject a little

Differentiating ERAN and MMN: an ERP Study

NEUROPHYSIOLOGY, BASIC AND CLINICAL. NEUROREPORT. Differentiating ERAN and MMN: An ERP study. Stefan Koelsch (1, CA), Thomas C. Gunter (1), Erich Schröger (2), Mari Tervaniemi (3), Daniela Sammler (1, 2) and Angela D. Friederici (1). (1) Max Planck Institute of Cognitive Neuroscience, Stephanstr. 1a, D-04103 Leipzig; (2) Institute of General Psychology, Leipzig, Germany; (3) Cognitive Brain Research Unit, Helsinki, Finland. (CA) Corresponding Author. Received 14 February 2001; accepted 27 February 2001. In the present study, the early right-anterior negativity (ERAN) elicited by harmonically inappropriate chords during listening to music was compared to the frequency mismatch negativity (MMN) and the abstract-feature MMN. Results revealed that the amplitude of the ERAN, in contrast to the MMN, is specifically dependent on the degree of harmonic appropriateness. Thus, the ERAN is correlated with the cognitive processing of complex rule-based information, i.e. with the application of music-syntactic rules. Moreover, results showed that the ERAN, compared to the abstract-feature MMN, had both a longer latency and a larger amplitude. The combined findings indicate that ERAN and MMN reflect different mechanisms of pre-attentive irregularity detection, and that, although both components have several features in common, the ERAN does not easily fit into the classical MMN framework. The present ERPs thus provide evidence for a differentiation of cognitive processes underlying the fast and pre-attentive processing of auditory information. NeuroReport 12:1385-1389 © 2001 Lippincott Williams & Wilkins. Key words: Auditory processing; EEG; ERAN; ERP; MMN; Music. INTRODUCTION: Accurate pitch perception is a prerequisite for the processing of melodic, harmonic, and prosodic aspects of both [...] not a frequency MMN, which is known to be elicited only when a deviant tone, or chord, is preceded by a few standard tones or chords with identical frequency [8,9].

A General Theory of Comparative Music Analysis

A General Theory of Comparative Music Analysis, by Richard R. Randall. Submitted in Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy. Supervised by Professor Robert D. Morris, Department of Music Theory, Eastman School of Music, University of Rochester, Rochester, New York, 2006. Curriculum Vitæ: Richard Randall was born in Washington, DC, in 1969. He received his undergraduate musical training at the New England Conservatory in Boston, MA. He was awarded a Bachelor of Music in Theoretical Studies with a Distinction in Performance in 1995 and, in 1997, received a Master of Arts degree in music theory from Queens College of the City University of New York. Mr. Randall studied classical guitar with Robert Paul Sullivan, jazz guitar with Frank Rumoro, Walter Johns, and Rick Whitehead, and solfege and conducting with F. John Adams. In 1997, Mr. Randall entered the Ph.D. program in music theory at the University of Rochester's Eastman School of Music. His research at the Eastman School was advised by Robert Morris. He received teaching assistantships and graduate scholarships in 1998, 1999, and 2000. He was adjunct instructor in music at Northeastern Illinois University from 2002 to 2003. Additionally, Mr. Randall taught music theory and guitar at the Old Town School of Folk Music in Chicago as a private guitar instructor from 2001 to 2003. In 2003, Mr. Randall was appointed Visiting Assistant Professor of Music in the Department of Music and Dance at the University of Massachusetts, Amherst. Acknowledgments: TBA. Abstract: This dissertation establishes a kind of comparative music analysis such that what we understand as musical features in a particular case are dependent on the music analytic systems that provide the framework by which we can contemplate (and communicate our thoughts about) music.

Linear Jazz Improvisation Block Chord Keyboard Cadences

Linear Jazz Improvisation: Block Chord Keyboard Cadences. Ed Byrne. © Ed Byrne 2008. CONTENTS: Introduction; 1. Major Key Block Chord Cadences; 2. Minor Key Block Chord Cadences; 3. Major Key Modified Block Chord Cadences; 4. Minor Key Modified Block Chord Cadences. INTRODUCTION: It is important that jazz practitioners have a firm basic knowledge of the keyboard, in particular the essential harmonic cadences found in most tunes. They should be learned in all keys. We focus here on ii7 V7 IMA7 and its minor key counterpart, ii7-5 V7-9 i7, before adding Modified Block Chords, in which we introduce such tension substitutions as 9 for 1, 6 for 5, and +9 for 3 in the right hand. While it would be nice to be a great pianist with great facility and sophisticated harmonic voicing capabilities on the keyboard, it is only essential that one knows enough to be able to use the keyboard as an aid in learning to hear and to analyze tunes in preparation for improvisation, and perhaps to compose. Therefore, we shall begin with block chords in all keys. Block chords are 4-note close position 7th chords, doubled in both hands at the octave. They are handy and can be used in a variety of ways, such as: 1. Accompanying (comping), in both hands; 2. Playing the chords in the left hand while playing the melody in the right; 3. Playing the chords in the left hand while improvising lines in the right; 4. Playing the basic (un-modified) chords in the right hand while playing a simple bass line or ostinato in the left.