The Feasibility of Eyes-Free Touchscreen Keyboard Typing

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

ETAO Keyboard: Text Input Technique on Smartwatches

Available online at www.sciencedirect.com. ScienceDirect. Procedia Computer Science 84 (2016) 137–141. 7th International Conference on Intelligent Human Computer Interaction, IHCI 2015. ETAO Keyboard: Text Input Technique on Smartwatches. Rajkumar Darbar, Punyashlok Dash, Debasis Samanta. School of Information Technology, IIT Kharagpur, West Bengal, India. Abstract: In the present day context of wearable computing, smartwatches augment our mobile experience even further by providing information at our wrists. What they fail to do is to provide a comprehensive text entry solution for interacting with the various app notifications they display. In this paper we present ETAO keyboard, a full-fledged keyboard for smartwatches, where a user can input the most frequent English letters with a single tap. Other keys, which include numbers and symbols, are entered by a double tap. We conducted a user study that involved sitting and walking scenarios and, after a very short training session, we achieved an average words per minute (WPM) of 12.46 and 9.36 respectively. We expect that our proposed keyboard will be a viable option for text entry on smartwatches. © 2016 The Authors. Published by Elsevier B.V. This is an open access article under the CC BY-NC-ND license (http://creativecommons.org/licenses/by-nc-nd/4.0/). Peer-review under responsibility of the Organizing Committee of IHCI 2015. Keywords: Text entry; smartwatch; mobile usability. 1. Introduction: Over the past few years, the world has seen a rapid growth in wearable computing and a demand for wearable products.

Typing Tutor

Typing Tutor Design Documentation. Courtney Adams, Jonathan Corretjer. December 16, 2016. USU 3710.

Contents: 1 Introduction; 2 Scope; 3 Design Overview (3.1 Requirements; 3.2 Dependencies; 3.3 Theory of Operation); 4 Design Details (4.1 Hardware Design; 4.2 Software Design: 4.2.1 LCD Keyboard Display, 4.2.2 Key Stroke Input, 4.2.3 Menu Layout, 4.2.4 Level and Test Design, 4.2.5 Correctness Logic, 4.2.6 Buzzer Implementation, 4.2.7 Calculations); 5 Testing (5.0.1 SSI Output to LCD; 5.0.2 Keyboard Data Input; 5.0.3 Buzzer Frequency; 5.0.4 Buzzer Length; 5.0.5 Trial Testing; 5.0.6 Calculations); 6 Conclusion; 7 Appendix (7.1 Code: 7.1.1 LCD.h, 7.1.2 LCD.c, 7.1.3 main.c, 7.1.4 TypingLib.h, 7.1.5 TypingMenu.h, 7.1.6 TypingMenu.c, 7.1.7 Keys.h, 7.1.8 Keys.c, 7.1.9 Speaker.h, 7.1.10 Speaker.c).

1 Introduction. The goal of the Typing Tutor is to enable users to practice and develop their typing skills using an interactive interface that encourages improvement. Composed of various levels, the Typing Tutor provides a challenging medium for beginners and experienced typists alike. At any time during the tutoring process, users can take a typing test to measure their progress in speed and accuracy.

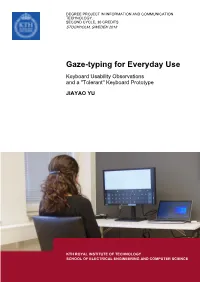

Gaze-Typing for Everyday Use: Keyboard Usability Observations and a "Tolerant" Keyboard Prototype

Degree Project in Information and Communication Technology, Second Cycle, 30 Credits. Stockholm, Sweden 2018. Gaze-typing for Everyday Use: Keyboard Usability Observations and a "Tolerant" Keyboard Prototype. Jiayao Yu. KTH Royal Institute of Technology, School of Electrical Engineering and Computer Science.

Abstract (translated from the Swedish "Sammandrag"): Gaze typing enables a new input channel, but its keyboard design is not yet ready for everyday use. To explore gaze-typing keyboards for such use that are easy to learn, fast to type with, and robust across different kinds of use, I analyzed the usability of three widely used gaze-typing keyboards through a user study with typing-performance measurements, and synthesized a design space for everyday gaze-typing keyboards based on the themes of typing schemes and keyboard layout, feedback, ease of text editing, and system design. In particular, I identified that gaze-typing keyboards need "tolerant" designs that allow implicit gaze control and a balance between input ambiguity and typing efficiency. I therefore prototyped a gaze-typing keyboard that uses a shape-writing scheme intended for everyday typing with gaze gestures, adapted to segment the gaze point when writing words from a continuous stream of gaze data. The system offers real-time shape writing at a speed of 11.70 WPM and an error rate of 0.14, evaluated with one experienced user, and supports typing more than 20,000 words from the lexicon.

Jiayao Yu, KTH Royal Institute of Technology, Aalto University, Stockholm, Sweden, [email protected]. Figure 1: Representative gaze-input properties requiring "tolerant" design. Left: Jittering fixations require larger interactive elements. Middle: Gaze is hard to gesture along complex, precise, or long trajectories.

Public Company Analysis 6

Mobile Smart Fundamentals, MMA Members Edition, November 2014. Messaging, advertising, apps, mcommerce. www.mmaglobal.com. New York • London • Singapore • São Paulo. Mobile Marketing Association November 2014 Report. Measurement & Creativity: Thoughts for 2015. Very simply, mobile marketing will continue to present the highest growth opportunities for marketers faced with increasing profitability as well as reaching and engaging customers around the world. Mobile is widely acknowledged to be the channel that gets you closest to your consumers; marketers that leverage this uniqueness will gain competitive footholds in their vertical markets and use mobile to transform their marketing and their business. The MMA will be focused on two core issues which we believe will present the biggest opportunities and challenges for marketers and the mobile industry in 2015: Measurement and Creativity. On the measurement side, understanding the effectiveness of mobile (the ROI of a dollar spent and the optimized level for mobile in the marketing mix) will become more and more critical as increased budgets are being allocated towards mobile. MMA's SMoX (cross media optimization research) will provide the first-ever look into this. Additionally, attribution and understanding which mobile execution (apps, video, messaging, location, etc.) is working for which mobile objective will be critical as marketers expand their mobile strategies. On the creative side, gaining a deeper understanding of creative effectiveness cross-screen and having access to case studies from marketers that are executing some beautiful campaigns will help inspire innovation and further concentration on creating an enhanced consumer experience specific to screen size. We hope you've had a successful 2014 and we look forward to being a valuable resource to you again in 2015.

QWERTY: the Effects of Typing on Web Search Behavior

QWERTY: The Effects of Typing on Web Search Behavior. Kevin Ong, RMIT University, [email protected]; Kalervo Järvelin, University of Tampere, [email protected]; Mark Sanderson, RMIT University, [email protected]; Falk Scholer, RMIT University, [email protected].

ABSTRACT: Typing is a common form of query input for search engines and other information retrieval systems; we therefore investigate the relationship between typing behavior and search interactions. The search process is interactive and typically requires entering one or more queries, and assessing both summaries from Search Engine Result Pages and the underlying documents, to ultimately satisfy some information need. Under the Search Economic Theory (SET) model of interactive information retrieval, differences in query costs will result in search behavior changes. We investigate how differences in query inputs themselves may relate to Search Economic Theory by conducting a lab-based experiment to observe how text entries …

… amount of relevant information for an underlying information need to be resolved. In IIR, the focus is on the interaction between a human and a search system. The interaction can take place over three modalities, such as search by using/viewing images, speaking [17] or traditionally by typing into a search box. In this paper we focus on typing. Nowadays, searchers expect rapid responses from search engines [15]. However, the amount of useful information gathered during the search process is also dependent on how well searchers are able to translate their search inputs (such as typing), into useful information output [2]. IIR is a collaborative effort between the searcher and the system to answer an information need.

High-Tech Note Taking by Andrew Leibs

High-tech Note Taking, by Andrew Leibs. The ability to take notes is crucial for college success, whether it's capturing words a teacher says or writes during class, or gathering content from library references, periodicals, and online resources. Yet the inconsistency of environments can cause some students – especially those with a vision or learning disability – to struggle with some or all of their note-taking tasks. Fortunately, technology offers a variety of methods to help ensure you don't miss a word and that you're able to access and organize the information you secure. Such solutions may offer a more efficient means for gathering information without you needing a personal note taker – a standard approach of many disability service offices. Tip: If you have a documented disability, ask teachers for print or digital copies of class notes, suggesting them as a reasonable accommodation (use that phrase) for participation. Capturing Spoken Words: The surest way to miss nothing in class is to record the entire lecture. You can do this using any device with a built-in microphone. Convenient options include the "Voice Memos" iOS app (located under Utilities on the home screen) and pocket media players such as the Victor Reader Stream, which has a tactile keypad and stores recordings in a separate Notes directory. Though they provide peace of mind, such recordings also require replay and transcription to access and make use of notes – two time-consuming tasks. Tip: Plug the iOS device into your computer to open voice memos in iTunes – a more efficient interface for transcription's many starts and stops.

Ultra-Low-Power Mode for Screenless Mobile Interaction

Session 11: Mobile Interactions. UIST 2018, October 14–17, 2018, Berlin, Germany. Ultra-Low-Power Mode for Screenless Mobile Interaction. Jian Xu, Suwen Zhu, Aruna Balasubramanian, Xiaojun Bi, Roy Shilkrot. Department of Computer Science, Stony Brook University, Stony Brook, New York, USA. {jianxu1, suwzhu, arunab, xiaojun, roys}@cs.stonybrook.edu

ABSTRACT: Smartphones are now a central technology in the daily lives of billions, but they rely on their batteries to perform. Battery optimization is thereby a crucial design constraint in any mobile OS and device. However, even with new low-power methods, the ever-growing touchscreen remains the most power-hungry component. We propose an Ultra-Low-Power Mode (ULPM) for mobile devices that allows for touch interaction without visual feedback and exhibits significant power savings of up to 60% while still allowing users to complete interactive tasks. We demonstrate the effectiveness of the screenless ULPM in text-entry tasks, camera usage, and listening to videos, showing only a small decrease in usability for typical users. CCS Concepts: • Human-centered computing → Touch screens; Smartphones; Mobile phones; • Software and its engineering → Power management. Author Keywords: Power Saving; Mobile System; Text Entry; Touchscreen.

INTRODUCTION: Smartphones play a crucial role in people's daily lives. It is now hard to imagine living without a smartphone ready at hand, yet this happens to nearly everyone on a daily basis - when the device is out of battery.

Figure 1: Picture of use cases of ULPM. The left shows the normal cases of using smartphones to take videos, write text messages and watch online video; the right side shows users …

Word Per Minute (WPM) Lampung Script Keyboard

Word Per Minute (WPM) Lampung Script Keyboard. Mardiana, Fahreza A. Arizal, Meizano Ardhi Muhammad: Informatics Engineering, Lampung University, Bandar Lampung, 35141, Indonesia ([email protected], [email protected], [email protected]). Martinus: Mechanical Engineering, Lampung University, Bandar Lampung, 35141, Indonesia ([email protected]). Gita Paramita Djausal: International Business, Lampung University, Bandar Lampung, 35141, Indonesia ([email protected]).

Abstract: Users of the Lampung font in previous studies had difficulty typing because the layout of the Lampung script is complicated to understand. Redesigning the Lampung alphabet keyboard layout allows users to type text in these characters more easily. The purpose of this research is to create a Lampung alphabet keyboard layout for more effective typing of the Lampung alphabet. The method follows a UX (User Experience) process: requirements gathering, alternative design, prototype, evaluation, and result report generation. The layout design is created by eliminating the use of the SHIFT key and regrouping parent letters, …

Lampung people use a unique writing system called Lampung script, better known as Kaganga (A. Restuningrat 2017). The Lampung language itself, like the Lampung script, is used only by a small group of Lampung people. The script (Aksara) is used and studied only in school; the rest is Latin (G. F. Nama and F. Arnoldi 2016). Modern society tends to use computer systems to communicate, yet there is no representation of Lampung script in the computer system. Research has been done before to solve the problem, namely research on a Lampung script font to be used in a computer system (Meizano A. …

Google Cheat Sheet

Way Cool Apps: Your Guide to the Best Apps for Your Smart Phone and Tablet. Compiled by James Spellos, President, Meeting U. [email protected] http://www.meeting-u.com twitter.com/jspellos scoop.it/way-cool-tools facebook.com/meetingu Last updated: November 15, 2016.

| App | Description | Platform(s) | Price* |
| --- | --- | --- | --- |
| 3DBin | Photo app for iPhone that lets users take multiple pictures to create a 3D image | iPhone | Free |
| Advanced Task Killer | Allows user to turn off apps not in use. More essential with smart phones. | Android | Free |
| Allo | Google's texting tool for individuals and groups...both parties need to have Allo for full functionality. | Android, iOS | Free |
| Angry Birds | So you haven't played it yet? Really? | Android, iOS | Freemium |
| Animoto | Create quick, easy videos with music using pictures from your mobile device's camera. | iPad, iPhone | Freemium - $5/month & up |
| Any.do | Simple yet efficient task manager. Syncs with Google Tasks. | Android | Free |
| AppsGoneFree | App which offers a selection of free (and often useful) apps daily. Most of these apps typically are not free, but become free when highlighted by this service. | iPhone, iPad | Free |
| AroundMe | Local services app allowing user to find what is in the vicinity of where they are currently located. | Android, iOS | Free |
| Audio Note | Note taking app that syncs live recording with your note taking. | Android, iOS | $4.99 |
| Aurasma | Augmented reality app, overlaying created content onto an image | Android, iOS | Free |
| Award Wallet | Cloud based service allowing user to update and monitor all reward program points. | Android, iPhone | Free |

Covert Lie Detection Using Keyboard Dynamics

www.nature.com/scientificreports. OPEN. Covert lie detection using keyboard dynamics. Merylin Monaro, Chiara Galante, Riccardo Spolaor, Qian Qian Li, Luciano Gamberini, Mauro Conti & Giuseppe Sartori. Received: 5 May 2017. Accepted: 18 January 2018. Published: xx xx xxxx.

Identifying the true identity of a subject in the absence of external verification criteria (documents, DNA, fingerprints, etc.) is an unresolved issue. Here, we report an experiment on the verification of fake identities, identified by means of their specific keystroke dynamics as analysed in their written response using a computer keyboard. Results indicate that keystroke analysis can distinguish liars from truth tellers with a high degree of accuracy - around 95% - thanks to the use of unexpected questions that efficiently facilitate the emergence of deception clues.

Presently, one of the major issues related to identity and security is represented by the numerous and current threats of terrorist attacks¹. It is known that terrorists cross borders using false passports or claiming false identities². For example, one of the suicide bombers involved in the Brussels train-station attack on March 22, 2016 was travelling through Europe using the identity of an Italian football player³. In these scenarios, security measures mainly consist of cross-checks on the data declared by the suspects or in the verification of biometric features, such as fingerprints, hand geometry, and retina scans⁴. Nevertheless, terrorists are mostly unknown: they are not listed in any database, and the security services do not have any information by which to recognise them. False identities in online services represent another unresolved issue.

Engram: a Systematic Approach to Optimize Keyboard Layouts for Touch Typing, with Example for the English Language

Preprints (www.preprints.org) | NOT PEER-REVIEWED | Posted: 10 March 2021 | doi:10.20944/preprints202103.0287.v1

Engram: a systematic approach to optimize keyboard layouts for touch typing, with example for the English language. Arno Klein, PhD, MATTER Lab, Child Mind Institute, New York, NY, United States. Corresponding Author: Arno Klein, PhD, MATTER Lab, Child Mind Institute, 101 E 56th St, NY, NY 10022, United States. Phone: 1-347-577-2091. Email: [email protected]. ORCID ID: 0000-0002-0707-2889.

Abstract: Most computer keyboard layouts (mappings of characters to keys) do not reflect the ergonomics of the human hand, resulting in preventable repetitive strain injuries. We present a set of ergonomics principles relevant to touch typing, introduce a scoring model that encodes these principles, and outline a systematic approach for developing optimized keyboard layouts in any language based on this scoring model coupled with character-pair frequencies. We then create a keyboard layout optimized for touch typing in English by constraining key assignments to reduce lateral finger movements and enforce easy access to high-frequency letters and letter pairs, applying open source software to generate millions of layouts, and evaluating them based on Google's N-gram data. We use two independent scoring methods to compare the resulting Engram layout against 10 other prominent keyboard layouts based on a variety of publicly available text sources. The Engram layout scores consistently higher than other keyboard layouts.

Keywords: keyboard layout, optimized keyboard layout, keyboard, touch typing, bigram frequencies.

Practitioner summary: Most keyboard layouts (character mappings) have questionable ergonomics foundations, resulting in preventable repetitive strain injuries.

Analysis of Alternative Keyboards Using Learning Curves Allison M

Industrial and Manufacturing Systems Engineering Publications, Iowa State University Digital Repository, 2009. Analysis of Alternative Keyboards Using Learning Curves. Allison M. Anderson, North Carolina State University; Gary Mirka, Iowa State University, [email protected]; Sharon M. B. Joines, North Carolina State University; David B. Kaber, North Carolina State University. Follow this and additional works at: http://lib.dr.iastate.edu/imse_pubs. Part of the Ergonomics Commons, Industrial Engineering Commons, and the Systems Engineering Commons. The complete bibliographic information for this item can be found at http://lib.dr.iastate.edu/imse_pubs/159. For information on how to cite this item, please visit http://lib.dr.iastate.edu/howtocite.html. This Article is brought to you for free and open access by the Industrial and Manufacturing Systems Engineering at Iowa State University Digital Repository. It has been accepted for inclusion in Industrial and Manufacturing Systems Engineering Publications by an authorized administrator of Iowa State University Digital Repository. For more information, please contact [email protected].

Analysis of Alternative Keyboards using Learning Curves. Allison M. Anderson, North Carolina State University, Raleigh, North Carolina; Gary A. Mirka, Iowa State University, Ames, Iowa; and Sharon M. B. Joines and David B. Kaber, North Carolina State University, Raleigh, North Carolina. ABSTRACT. Objective: Quantify learning percentages for alternative keyboards (chord, contoured split, Dvorak, and split fixed-angle) and understand how physical, cognitive, and perceptual demand affect learning. Background: Alternative keyboards have been shown to offer ergonomic benefits over the conventional, single-plane QWERTY keyboard design, but productivity-related challenges may hinder their widespread acceptance.