MySQL Sync Table Schema

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


Project Proposal: A Comparative Analysis of the LAMP (Linux, Apache, MySQL and PHP) and Microsoft .NET (Windows XP, IIS, Microsoft SQL Server and ASP.NET) Frameworks

Project Proposal: A comparative analysis of the LAMP (Linux, Apache, MySQL and PHP) and Microsoft .NET (Windows XP, IIS, Microsoft SQL Server and ASP.NET) frameworks within the challenging domain of limited connectivity and internet speeds as presented by African countries. By: Christo Crampton. Supervisor: Madeleine Wright.

1.) Overview of the research
With the recent releases of ASP.NET and PHP 5, there has been much debate over which technology is better. I propose to develop and implement an industry-strength online journal management system (AJOL, African Journals OnLine) using both frameworks, and to use this as the basis for a comparative analysis of the two frameworks against each other.

2.) Product Specification
AJOL is an existing website (www.ajol.org) which acts as an aggregation agent for a number of publishers of African journals who wish to publish their journals online and, of course, for researchers looking for information. The existing system is based on the OJS (Open Journal System) developed by Berkeley University. The system consists of a user frontend, where users can browse and search the contents of the database online, and an administration frontend, where publishers can log in and manage their journals on the database by performing tasks such as adding new abstracts or editing existing ones. It is currently developed in PHP with a MySQL backend. The proposed system will consist of an online user interface, an online administration interface for publishers, and an offline administration interface for publishers. The online and offline administration interfaces are complementary, and publishers can use either or both according to their preference.

Pdf, .Xps, .Png and More

About the SQLyog program: SQLyog Version History. Also read about plans for future versions of SQLyog.

SQLyog 8.2 (December 2009)

Features
• Added a 'Schema Optimizer' feature. Based on "PROCEDURE ANALYSE()", it proposes alterations to the data types of a table based on an analysis of the data stored in the table. The feature is available from the INFO tab (HTML mode).
• A table can now be added to the Query Builder canvas multiple times. A table alias is automatically generated for the second and higher instances of the table. This is required for special queries like self-JOINs.
• In the 'Import External Data' wizard, the same import settings can now be applied to all tables in one operation.
• The MESSAGES tab now displays the query if an error occurs during execution, making it easier to identify which query raised an error when executing multiple statements.

Bug Fixes
• 'Import External Data Tool': TRIGGERS did not use the Primary Key for the WHERE clause if a PK existed on the source (all columns were used in the WHERE clause instead). This could cause problems with tables having floating-point data.
• A malformed XML string could cause failure to connect with HTTP tunneling. This was a rare issue.
• After a DROP of a 'stored program' followed by a CREATE of the same, autocomplete would not recognize the 'stored program' until after a program restart.
• 'Duplicate table' has an option to duplicate triggers defined ON that table, but the way we named the new trigger could cause inconsistencies. Now the new trigger will be named 'oldtriggername_newtablename'.

(c) 2009 Webyog. URL: http://www.webyog.com/faq/content/5/7/en/sqlyog-version-history.html
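The two trigger-related bug fixes in the SQLyog 8.2 notes can be illustrated with a minimal Python sketch. This is not SQLyog's code: the function names `build_where_clause`, `duplicate_trigger_name` and `quote_ident` are hypothetical, and the `%s` placeholders assume the parameter style used by common Python MySQL drivers such as PyMySQL. It only encodes what the notes state: use the primary key in the WHERE clause when one exists, and name a duplicated trigger 'oldtriggername_newtablename'.

```python
from typing import Optional, Sequence


def quote_ident(name: str) -> str:
    """Quote a MySQL identifier with backticks, doubling any embedded backtick."""
    return "`" + name.replace("`", "``") + "`"


def build_where_clause(columns: Sequence[str],
                       primary_key: Optional[Sequence[str]] = None) -> str:
    """Build a parameterized WHERE clause that identifies one row.

    If the table has a primary key, only the key columns are compared;
    otherwise every column is compared. Comparing every column is fragile
    for floating-point columns, whose values may not compare equal.
    """
    key_columns = list(primary_key) if primary_key else list(columns)
    conditions = [f"{quote_ident(col)} = %s" for col in key_columns]
    return "WHERE " + " AND ".join(conditions)


def duplicate_trigger_name(old_trigger: str, new_table: str) -> str:
    """Name a copied trigger 'oldtriggername_newtablename'."""
    return f"{old_trigger}_{new_table}"


if __name__ == "__main__":
    cols = ["id", "price", "label"]
    print(build_where_clause(cols, primary_key=["id"]))  # WHERE `id` = %s
    print(build_where_clause(cols))  # all three columns, joined with AND
    print(duplicate_trigger_name("trg_audit", "orders_copy"))  # trg_audit_orders_copy
```

Keying on the primary key keeps the WHERE clause short and avoids equality tests on FLOAT or DOUBLE values, which is the failure mode the bug note describes.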

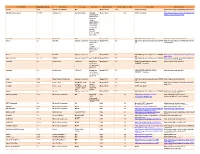

Third Party Version

Third-party components (name and version; manufacturer; license type; comments; Merge product and versions; license details; software source):
• autofac 3.5.2; Autofac Contributors; MIT. Merge Cardio 10.2. License details: SOUP repository. Source: https://www.nuget.org/packages/Autofac/3.5.2
• Gibraltar Loupe Agent 2.5.2.815; eSymmetrix; Gibraltar EULA. Comments: Gibraltar Loupe Agent will be used within the Cardio Application to view events and metrics so you can resolve support issues quickly and easily. Merge Cardio 10.2. License details: SOUP repository. Source: https://my.gibraltarsoftware.com/Support/Gibraltar_2_5_2_815_Download
• Modernizr 2.8.3; Modernizr; MIT. Merge Cardio 6.0. License details: http://modernizr.com/license/. Source: http://modernizr.com/download/
• drools 2.1; Red Hat; Apache License 2.0. Comments: It is a very old version of drools; the current version is 6.2 and the license type has changed too. Merge PACS 7.0. License details: http://www.apache.org/licenses/LICENSE-2.0. Source: http://mvnrepository.com/artifact/drools/drools-spring/2.1
• drools 6.3; Red Hat; Apache License 2.0. Merge PACS 7.1. License details: http://www.apache.org/licenses/LICENSE-2.0. Source: https://github.com/droolsjbpm/drools/releases/tag/6.3.0.Final
• HornetQ 2.2.13; JBOSS; Apache License 2.0. Comments: part of JBOSS. Merge PACS 7.0. License details: http://www.apache.org/licenses/LICENSE-2.0. Source: http://mvnrepository.com/artifact/org.hornetq/hornetq-core/2.2.13.Final
• jcalendar 1.0; toedter.com; LGPL v2.1. Comments: MergePacs server uses v1, and viewer uses v1.3. Merge PACS 7.0. License details: GNU LESSER GENERAL PUBLIC LICENSE Version 2.1. Source: http://toedter.com/jcalendar/

JAVA FULL STACK DEVELOPER TRAINING by Nirvana Enterprises

JAVA FULL STACK DEVELOPER TRAINING By Nirvana Enterprises. 732.889.4242, www.nirvanaenterprises.com

About the Course
This is a full stack web development course using Angular, Spring Boot, and Spring Security Frameworks. You will be using Angular (Frontend Framework), TypeScript Basics, Angular CLI (to create Angular projects), Spring Boot (REST API Framework), Spring (Dependency Management), Spring Security (Authentication and Authorization, Basic and JWT), BootStrap (Styling Pages), Maven (dependencies management), Node (npm), Visual Studio Code (TypeScript IDE), Eclipse (Java IDE) and Tomcat Embedded Web Server. The course will also give you expertise in MongoDB and Docker so you can build, test, and deploy applications quickly using containers. The course includes 3 industry-level practice projects, interview preparation, and extreme coding practices. This prepares you for your next Fortune 500 company project as a Full Stack Java Developer.

Key Course Highlights
• Concept & Logic development with 160 Hours of Training by Experts
• Learn Core Java, Advanced Java, SpringBoot, HTML, CSS, Javascript, Bootstrap & MongoDB
• 3 Industry-level projects on Core Java, Testing, Automation, AWS, Angular, MongoDB & Docker
• Develop Cloud Native Application on AWS Cloud
• Earn a Certificate in Java Full Stack Development on successful completion of the program
• Architecture & SDLC: Microservices & DevOps
• Guaranteed Placement within months of successful completion of the program
• Cloud Platform & Deployment: AWS Cloud, Docker & Jenkins

Learning Outcomes
• Develop a working application on Shopping Cart for ECommerce and in Healthcare using full stack with
• Build cloud-native application by seeding the code to Cloud (SCM), like AWS.

Fitness Nama Using Python Language, MySQL, SQLyog and Anaconda Software

© March 2021 | IJIRT | Volume 7 Issue 10 | ISSN: 2349-6002
Fitness Nama Using Python Language, MySQL, SQLyog and Anaconda Software
Divyanshu Kumar(1), Anthony Paul Joseph(2), Priyanka Subhash Junghare(3), Neha Raikwad(4), Arati Khokale(5), Prof. Vijaya Kamble(6)
(1-5) Final Year Student, Department of Computer Science and Engineering, GNIET, Nagpur, Maharashtra, India
(6) Professor, Department of Computer Science and Engineering, GNIET, Nagpur, Maharashtra, India

Abstract - Health problems associated with diet, including obesity and cancer, are important concerns in today's society. The main treatment for obesity includes dieting and frequent physical activity. Diet programs can produce and maintain weight loss over the short, medium, or long term. However, maintaining a balanced body energy requires frequent workouts. This paper presents SapoFitness, a mobile health application for dietary evaluation that implements challenges and alerts and constantly motivates the user to use the system and keep to the diet plan. SapoFitness is customized to its user, keeping a daily record of his/her food intake and daily exercise. The main goal of this

I. INTRODUCTION
With growing social pressure and an ever faster pace of life, most people are facing health problems, especially many high-level personnel who are in sub-health. Accidents also occur frequently in modern society. It is therefore important to design a health security system for people. As mobile phones play an increasingly important role in people's lives, deploying the system on mobile phones is the best choice. Living a healthier life can not only extend your life, it can also improve its quality.

Vocera Alarm Management Configuration Guide Version 2.2.4 Notice

Vocera Alarm Management Configuration Guide, Version 2.2.4
Notice
Copyright © 2002-2018 Vocera Communications, Inc. All rights reserved. Vocera® is a registered trademark of Vocera Communications, Inc. This software is licensed, not sold, by Vocera Communications, Inc. (“Vocera”). The reference text of the license governing this software can be found at http://www.vocera.com/legal/. The version legally binding on you (which includes limitations of warranty, limitations of remedy and liability, and other provisions) is as agreed between Vocera and the reseller from whom your system was acquired and is available from that reseller. Certain portions of Vocera’s product are derived from software licensed by the third parties as described at http://www.vocera.com/legal/. Microsoft®, Windows®, Windows Server®, Internet Explorer®, Excel®, and Active Directory® are registered trademarks of Microsoft Corporation in the United States and other countries. Java® is a registered trademark of Oracle Corporation and/or its affiliates. All other trademarks, service marks, registered trademarks, or registered service marks are the property of their respective owner/s. All other brands and/or product names are the trademarks (or registered trademarks) and property of their respective owner/s.
Vocera Communications, Inc. www.vocera.com. Tel: +1 408 882 5100. Fax: +1 408 882 5101.
Last modified: 2018-11-27 08:15. VAM-224-Docs build 169.
Contents: Vital Information

Vibe 4.0.8 Installation Guide

Vibe 4.0.8 Installation Guide, March 2021
Legal Notice
Copyright © 2018 - 2021 Micro Focus or one of its affiliates. The only warranties for products and services of Micro Focus and its affiliates and licensors (“Micro Focus”) are as may be set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. Micro Focus shall not be liable for technical or editorial errors or omissions contained herein. The information contained herein is subject to change without notice.

Contents
About This Guide
Part I: Overview of Micro Focus Vibe
1 Vibe’s User Types
2 Vibe’s Service Components
3 Topology of Vibe Deployment Options
Part II: System Requirements and Support
4 Vibe System Requirements: Server Hardware Requirements; Server Operating System Requirements; Database Server Requirements; Directory Service (LDAP) Requirements; Disk Space Requirements; Other Requirements
5 Vibe User Platform Support: Browser Support; Office Add-In Support; Office Application WebDAV Support; Collaboration Client Support; Desktop Support; Mobile Support; App Availability; Browser Requirements; File Viewer Support
6 Environment Support: IPV6 Support; Clustering Support; Virtualization Support; Single Sign-On Support; Linux File System Support
Part III: Single-server (Basic) Installation
7 Single-server Installation Planning Worksheet
8 Planning a Basic (Single-server) Vibe Installation: What Is a Basic Vibe Installation?; Planning the Operating Environment of Your Vibe Server

Product Rating Using Sentimental Analysis Akshatha1, Freeda2, Janavi3, Pallavi4

Product Rating Using Sentimental Analysis. Akshatha(1), Freeda(2), Janavi(3), Pallavi(4). (1-4) Information Science and Engineering, Canara Engineering College, Mangalore, India.

Abstract: Customer feedback is a milestone for the success of companies. A producer can judge how well his product is received from customer feedback and make the necessary changes to the product accordingly. However, most users fail to give feedback. To avoid the difficulty of providing feedback, this paper focuses on a technique for providing automatic feedback based on data collected from Twitter. These data streams are filtered and analyzed, and feedback is obtained through opinion mining. Here we mainly analyze data about mobile phones. The experiments have shown 80% accuracy in the sentiment analysis. Our framework is able to provide fast, valuable feedback to companies.

Index Terms: Data Scraping, Data Cleaning, POS, Sentiment Classification, Stop Words.

I. INTRODUCTION
The amount of user-generated content on the web, in the form of reviews, blogs, social networks, tweets etc., about purchased products has greatly increased. Nowadays people prefer purchasing online. In order to enhance the customer shopping experience, it has become common practice for online merchants to let customers write reviews of the products they have purchased. This helps customers learn more about a product they are going to buy. This information is useful not only to customers but also to institutions and companies, giving them ways to research their consumers, manage their reputations, and identify new opportunities.
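The pipeline outlined in the abstract (data cleaning, stop-word removal, sentiment classification) can be sketched in a few lines of Python. This is a deliberately simple lexicon-based classifier swapped in for illustration. It is not the authors' method, it skips POS tagging, and the tiny stop-word list and positive/negative word lists are hypothetical placeholders that a real system would replace with full lexicons or a trained model.

```python
import re
from typing import List

# Hypothetical, deliberately tiny word lists for illustration only.
STOP_WORDS = {"the", "a", "an", "is", "this", "my", "and", "it", "of", "to", "i"}
POSITIVE = {"good", "great", "excellent", "love", "fast", "awesome"}
NEGATIVE = {"bad", "poor", "slow", "hate", "terrible", "broken"}


def clean(tweet: str) -> List[str]:
    """Lower-case, strip URLs, @mentions and '#' signs, and keep word tokens."""
    text = tweet.lower()
    text = re.sub(r"https?://\S+", " ", text)  # drop links
    text = re.sub(r"@\w+", " ", text)          # drop user mentions
    text = text.replace("#", " ")              # keep hashtag words, drop '#'
    return re.findall(r"[a-z']+", text)


def remove_stop_words(tokens: List[str]) -> List[str]:
    """Filter out tokens that carry no sentiment on their own."""
    return [t for t in tokens if t not in STOP_WORDS]


def classify(tweet: str) -> str:
    """Label a tweet positive, negative or neutral by counting lexicon hits."""
    tokens = remove_stop_words(clean(tweet))
    score = sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    print(classify("Love this phone, the camera is great! http://t.co/x"))  # positive
    print(classify("@seller battery is terrible and slow"))                 # negative
```

Counting lexicon hits is the simplest possible classifier. Aggregating these labels per product model is one way to turn tweets into the kind of automatic feedback the abstract describes.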

T-SQL Get Database Schema

T-SQL Get Database Schema. The following are Microsoft SQL Server T-SQL example scripts for Management Studio. To export a MySQL database via phpMyAdmin (XAMPP), follow these steps; exporting a database works the same way for localhost or a hosting account. In phpMyAdmin, select the database you want to export, click the 'Export' tab, and export the database. Copy data from one table to another in SQL Developer. Sybase SQL Server command syntax is shown in the following font: sp_helpdb. You need to grant permissions to a user to work on the database schema; otherwise you get the following type of error message. A database schema migration is performed on a database whenever it is necessary to update or revert that database's schema. Mule 4 Database Insert Example. What's dbo? (SQL Studies). Output using logic based on the way a SELECT statement is specified.
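The phpMyAdmin export described in this excerpt can also be done from the command line with `mysqldump`, whose `--no-data` option writes only the table definitions without any rows. The Python sketch below only assembles and runs that command. It assumes `mysqldump` is installed and on the PATH, and the helper names `mysqldump_schema_args` and `export_schema` as well as the example user, database and file names are hypothetical.

```python
import subprocess
from typing import List


def mysqldump_schema_args(user: str, database: str, outfile: str,
                          host: str = "localhost") -> List[str]:
    """Build a mysqldump command line that exports only the schema."""
    return [
        "mysqldump",
        f"--host={host}",
        f"--user={user}",
        "--password",                # no value: mysqldump prompts for it
        "--no-data",                 # table definitions only, no rows
        f"--result-file={outfile}",  # write the dump to this file
        database,
    ]


def export_schema(user: str, database: str, outfile: str) -> None:
    """Run mysqldump; raises CalledProcessError if it exits non-zero."""
    subprocess.run(mysqldump_schema_args(user, database, outfile), check=True)
```

A call such as `export_schema("app_user", "shop", "shop_schema.sql")` would prompt for the password and write the CREATE statements to `shop_schema.sql`. Passing the arguments as a list rather than a shell string avoids quoting problems with unusual database or file names.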

Using SQLyog Enterprise to Effectively Synchronize MySQL Databases

Using SQLyog Enterprise to Effectively Synchronize MySQL Databases. By Peter Laursen and Quy Ton. Note: This document contains information that is not up to date. A new version will appear soon.

1. Brief introduction to the SJA
This document summarizes the discussions at the Webyog Forums about the use of the synchronization features of the SQLyog Job Agent (hereafter "the SJA" or just "SJA"), which is provided with the enterprise version of Webyog's flagship product SQLyog Enterprise and is also available as a free utility for the Linux operating system. The sync tool is an easy-to-use tool for bringing MySQL databases "in sync". It has options that make it fit equally well for beginners and expert users. In situations where MySQL replication is not possible for some reason, such as when you don't have the privileges for it or you share one or both servers with other users, the SQLyog sync tool will do the job for you. I am far from being a database expert, but I have been following the discussions at the Webyog Forums regularly and have been using the tool quite a lot myself. In this document, I will address some common misunderstandings about the SJA and give some hints on how it can be used effectively. Some of those hints result from my own experiments and observations; for others I must thank the other people at the Webyog Forums, too numerous to mention. The data sync facility of the SJA is now about two years old.
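Bringing two table structures "in sync" comes down to comparing the column definitions of a source and a target table and emitting statements that make the target match. The Python sketch below is a generic illustration, not the SJA's algorithm. It assumes each table's columns are already available as a mapping of column name to MySQL column definition (for example, read from INFORMATION_SCHEMA.COLUMNS), and the function name `schema_sync_statements` is hypothetical. Indexes, keys and column order are deliberately ignored.

```python
from typing import Dict, List


def quote_ident(name: str) -> str:
    """Quote a MySQL identifier with backticks, doubling any embedded backtick."""
    return "`" + name.replace("`", "``") + "`"


def schema_sync_statements(table: str,
                           source: Dict[str, str],
                           target: Dict[str, str],
                           drop_extra: bool = False) -> List[str]:
    """Return ALTER TABLE statements that make `target` match `source`.

    `source` and `target` map column names to column definitions,
    e.g. {"id": "INT NOT NULL", "name": "VARCHAR(50)"}.
    """
    tbl = quote_ident(table)
    statements: List[str] = []
    for column, definition in source.items():
        col = quote_ident(column)
        if column not in target:
            # Column exists only in the source: add it to the target.
            statements.append(f"ALTER TABLE {tbl} ADD COLUMN {col} {definition};")
        elif target[column] != definition:
            # Same column, different definition: change it in the target.
            statements.append(f"ALTER TABLE {tbl} MODIFY COLUMN {col} {definition};")
    if drop_extra:
        # Columns only in the target are dropped only on request (data loss).
        for column in target:
            if column not in source:
                statements.append(f"ALTER TABLE {tbl} DROP COLUMN {quote_ident(column)};")
    return statements


if __name__ == "__main__":
    src = {"id": "INT NOT NULL", "name": "VARCHAR(100)", "email": "VARCHAR(255)"}
    dst = {"id": "INT NOT NULL", "name": "VARCHAR(50)", "legacy": "TEXT"}
    for stmt in schema_sync_statements("users", src, dst, drop_extra=True):
        print(stmt)
```

For the sample tables this prints a MODIFY for `name`, an ADD for `email` and a DROP for `legacy`. Definitions are compared as plain strings, so a real tool would first normalize them (for example "int" versus "INT"); `drop_extra` defaults to off because dropping a column destroys its data.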

MySQL Import Database Schema

MySQL Import Database Schema. To restore a MySQL or PostgreSQL database, open the Database tool window (Tool Windows | Database) and right-click a schema. If you already have one or more existing MySQL or MariaDB databases, you can easily import your existing database schema to AppGini. Exporting and Importing Custom Tables Into WordPress: to export our tables we use phpMyAdmin, one of the most popular MySQL administration tools. Service for creating and managing Google Cloud resources. Execute the following command to verify that the table has been restored successfully.
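This excerpt refers to importing a schema and then checking that the tables were restored, but the commands themselves did not survive. The Python sketch below shows one way to do both with the standard `mysql` command-line client: the dump file is fed on standard input (the equivalent of `mysql dbname < dump.sql`), and `SHOW TABLES` is run afterwards. It assumes the client is on the PATH and that credentials come from a MySQL option file such as `~/.my.cnf`, so no password appears on the command line. The helper names and example values are hypothetical.

```python
import subprocess
from typing import List, Sequence


def mysql_client_args(user: str, database: str, extra: Sequence[str] = (),
                      host: str = "localhost") -> List[str]:
    """Build a `mysql` client command line; the database name goes last.

    No password option is added: credentials are assumed to come from a
    MySQL option file such as ~/.my.cnf ([client] section).
    """
    return ["mysql", f"--host={host}", f"--user={user}", *extra, database]


def import_schema(user: str, database: str, dump_file: str) -> None:
    """Feed a .sql dump to the client, like `mysql db < dump.sql`."""
    with open(dump_file, "rb") as fh:
        subprocess.run(mysql_client_args(user, database), stdin=fh, check=True)


def list_tables(user: str, database: str) -> List[str]:
    """Return the table names reported by SHOW TABLES, e.g. to confirm a restore."""
    args = mysql_client_args(
        user, database,
        extra=["--batch", "--skip-column-names", "--execute=SHOW TABLES"],
    )
    result = subprocess.run(args, capture_output=True, text=True, check=True)
    return result.stdout.splitlines()
```

After `import_schema("app_user", "shop", "shop_schema.sql")`, checking that an expected name such as `"orders"` appears in `list_tables("app_user", "shop")` is a quick sanity test. `--batch` and `--skip-column-names` make the output one bare table name per line, which keeps the parsing trivial.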

"SQL Injection Prevention Using Random4 Algorithm"

Provided by the Institutional Repository of the Anjuman-I-Islam's Kalsekar Technical Campus.
"SQL Injection Prevention Using Random4 Algorithm"
Project Report submitted in partial fulfillment of the requirements for the degree of Bachelor of Engineering by Rakhangi Aasim Hanif Munira (11CO36) and Shaikh Abedulla Dastageer Nasrin (12CO97).
Supervisor: Prof. Prashant Lokhande
Department of Computer Engineering, School of Engineering and Technology, Anjuman-I-Islam's Kalsekar Technical Campus, Plot No. 2 3, Sector-16, Near Thana Naka, Khanda Gaon, New Panvel, Navi Mumbai 410206
Academic Year: 2015-2016

CERTIFICATE
Department of Computer Engineering, School of Engineering and Technology, Anjuman-I-Islam's Kalsekar Technical Campus, Khanda Gaon, New Panvel, Navi Mumbai 410206
This is to certify that the project entitled "SQL Injection Prevention Using Random4 Algorithm" is a bonafide work of Rakhangi Aasim Hanif Munira (11CO36) and Shaikh Abedulla Dastageer Nasrin (12CO97), submitted to the University of Mumbai in partial fulfillment of the requirement for the award of the degree of "Bachelor of Engineering" in the Department of Computer Engineering.
Prof. Prashant Lokhande, Supervisor/Guide
Prof. Tabrez Khan, Head of Department
Dr. Abdul Razak Honnutagi, Director

Project Approval for Bachelor of Engineering
This project entitled "SQL Injection Prevention Using Random4 Algorithm" by Rakhangi Aasim Hanif Munira and Shaikh Abedulla Dastageer Nasrin is approved for the degree of Bachelor of Engineering