Decisions and Performance Under Bounded Rationality: a Computational Benchmarking Approach

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Download the Booklet of Chessbase Magazine #199

THE Magazine for Professional Chess | JANUARY / FEBRUARY 2021 | NO. 199 | 19,95 Euro. 17 years old, second place at the Altibox Norway Chess: Alireza Firouzja is already among the Top 20. TOP GRANDMASTERS ANNOTATE: Duda, Edouard, Firouzja, Giri, Nielsen, et al. ALL IN ONE: SEMI-TARRASCH: Igor Stohl condenses a trendy opening. AVRO TOURNAMENT 1938, CLASH OF THE GENERATIONS: Retrospective + 18 newly annotated Keres games. LONDON SYSTEM, NO REST FOR THE Bf4: Alexey Kuzmin hits with the active 5...Nh5!? THE MODERN BENONI UNDER FIRE! Patrick Zelbel presents a pointed repertoire with 6.Nf3/7.Bg5. DVD with first class training material for club players and professionals! © ChessBase GmbH, Hamburg 2021. EDITORIAL. The new chess stars: Alireza Firouzja and Beth Harmon. Now the world and also the world of chess has been hit by the long-feared “second wave” of the Covid-19 pandemic. Many tournaments have been cancelled. [...] Moscow in order to measure herself against the world champion in a tournament. She is accompanied by a US official who warns her about the Soviets and advises her not to speak with anyone. And what happens? She is welcomed with enthusiasm by the popu-

Magnus Carlsen: Pages 8-10. Chess Super Tournament, 7-18 May 2013

Norway Chess: chess super tournament, 7-18 May 2013. Magnus Carlsen (pages 8-10): chess's first superstar meets the world chess elite in Rogaland. Big school chess tournament: children in Rogaland play chess (page 3). The ABC of chess: learn everything about the game (pages 16-17). Chess president Jøran Aulin-Jansson: 92 hours of chess in a row (pages 24-25). Time to make history. Suddenly we are there, and it is time to deliver. Thoughts, ideas, meetings, discussions and a great deal of planning are now to give us a good and entirely new event. It is time for Norway Chess, time to make history. This is the very first time a super tournament of this kind has been held in Norway. The world is keeping its eyes open and will follow closely as new and important battles are fought in the region. Sword fights in Hafrsfjord and the struggle for royal power have been replaced by capturing the opponent's king on the board. For the very first time, Magnus Carlsen will meet his fiercest competitors here at home, and his meeting with Vishy Anand has already attracted a great deal of attention. It is a big event, and we will show off much of the best we have to offer. Quality Hotel Residence in Sandnes will be the main venue, hosting many of the nine rounds. But players and press will also get to know Flor & Fjære, the University, the splendid new concert hall in Stavanger and a “groomed” production hall at Aarbakke in Bryne. The man behind. Text: Maren Stangeland. How can it be that we have the world's best chess player, but [...] Chess has a long history.

Annual Report 2014

Annual Report 2014. Finnøy, Rennesøy, Randaberg, Stavanger, Sandnes, Sola, Gjesdal, Klepp, Time, Hå, Bjerkreim, Eigersund, Lund, Sokndal and Sirdal. Contents: Message from the Director of Tourism; Summary; Staff and board; The board's annual report 2014; Measures in 2014; General market development in 2014; Communication; Congress and event tourism; Holiday and leisure; Hosting; Annual accounts 2014; Income statement 2014; Balance sheet 2014; Notes; Auditor's report; Members in 2014. Cover photo: Eikeland, Bjerkreim. Photo © Eduardo Grund. Background photo: Sandestranden, freesbe. © Gunhild Vevik. Summary of the 2014 annual report: Region Stavanger maintained a high level of sales and marketing activity aimed at the holiday and leisure market, the course and conference market and the event tourism market in 2014. Strategic, focused investment in selected markets and target groups has produced results for the tourism industry, for other businesses and for the region in general, in the form of reputation building, value creation and guest nights. Message from the Director of Tourism: The 2014 tourism year in the Stavanger region, from visitor records and the best summer ever to capacity expansion, restructuring and a low oil price! 2014 started well, with very positive development in the Stavanger region's tourism industry. The Stavanger region continues to gain market share: the total hotel market in 2014 was 1,308,697 guest nights, an increase of 22,790 (+1.8%) from 2013. The statistics show growth in the holiday and leisure market of 28,486 hotel guest nights (+6.7%) from 2013, while the course and conference [...]

Players in Norway Chess

The first chess super tournament in Norway – 07th to 18th of May. Photo: New In Chess

PLAYERS IN NORWAY CHESS

| Player | FIDE rating (Feb. 2013) |
|---|---|
| Magnus Carlsen | 2872 |
| Vladimir Kramnik | 2810 |
| Levon Aronian | 2809 |
| Teimour Radjabov | 2793 |
| Sergey Karjakin | 2786 |
| Viswanathan Anand | 2780 |
| Veselin Topalov | 2771 |
| Hikaru Nakamura | 2767 |
| Wang Hao | 2743 |
| Jon Ludvig Hammer | 2629 |

Average rating: 2776.0

The document can be downloaded here: www.norwaychess.com/en/press. Follow us for more information: Homepage: www.norwaychess.com/en, Facebook: www.facebook.com/NorwayChess, Twitter: @NorwayChess. Our main sponsors:

Participant Norway Chess 2013: MAGNUS CARLSEN. Country: Norway. FIDE rating February 2013: 2872. Born: November 30th, 1990. World ranking: 1st. Since 2010, Magnus Carlsen has dominated the chess world by winning almost every tournament he takes part in. Only 22 years old, the World Number One has already won 15 super tournaments. In the process he has broken Garry Kasparov’s legendary all-time high rating record of 2851. Currently rated at 2872, more than 60 rating points ahead of number 2, he makes chess fans wonder about the new heights he will scale. Having suffered only two losses in 2012, Magnus Carlsen is the clear favourite in this event. Yet, with every tournament the pressure increases and the big question is: Can he handle the pressure in the first classical super tournament in his home country? More information: http://en.wikipedia.org/wiki/Magnus_Carlsen

Meet the Players in Altibox Norway Chess, Pages 20–22

NEWSPAPER FOR THE CHESS SUPER TOURNAMENT. ALTIBOX NORWAY CHESS, Stavanger region, 3–15 June. Magnus Carlsen (Norway), Maxime Vachier-Lagrave (France), Shakhriyar Mamedyarov (Azerbaijan), Viswanathan Anand (India), Ding Liren (China), Levon Aronian (Armenia), Fabiano Caruana (USA), Wesley So (USA), Yangyi Yu (China), Alexander Grischuk (Russia). Meet the players in Altibox Norway Chess (pages 20–22). A different kind of school chess tournament (page 16). Exclusive interview with Siri Lill Mannes (page 24). Crash course in chess with Ellisiv Reppen (page 29). ALTIBOX NORWAY CHESS, 3–15 JUNE 2019. CONTENTS: Editorial; Programme; A look back at last year's tournament; Time: an important element in chess; The first chess stars; The school chess tournament; Games through the ages; Meet the players; Interview with Siri Lill Mannes; The complexity in the clear; Crash course in chess; Carlsen's sacrifices; Queen Shakira's revenge; Runaway bishop against [...] PLANT A TREE, GIVE A BETTER FUTURE. Producing argan oil is one of very few opportunities for paid work open to poor women in south-west Morocco. The argan forest is also essential to the country's animal and plant life, and matters so much for the climate that UNESCO declared it a biosphere reserve in 1998. Now the forest is threatened with extinction! The argan forest in Morocco is crucial to thousands of plant and animal species and essential for reducing the spread of the desert in the area. The forest has shrunk sharply in recent years because of overuse, deforestation and, perhaps more surprisingly, goats. Argan Care was founded by Benedicte Westre Skog in Stavanger in 2016. So far we have planted and rehabilitated trees on 200 decares in south-west Morocco. Argan Care helps to create jobs, especially for women, in a region with very few [...]

For Immediate Release GRAND CHESS TOUR ANNOUNCES 2016 SCHEDULE World's Top Players Will Compete in 2nd Year of the To

For Immediate Release. GRAND CHESS TOUR ANNOUNCES 2016 SCHEDULE. World’s top players will compete in 2nd year of the tour. SAINT LOUIS (April 24, 2015) – The Grand Chess Tour has announced its schedule and dates for the 2016 circuit. The announcement came at the conclusion of the Sinquefield Cup, the second stop on the inaugural year of the tour. The 2016 schedule will feature the same three tournaments as this year’s tour with Norway Chess, Sinquefield Cup and London Chess Classic. The Grand Chess Tour has quickly established itself as the premier chess circuit in the world, featuring 10 of the top players in the world. The field for 2016 will be announced towards the end of the calendar year. Invites will be extended to the three top finishers in the 2015 Grand Chess Tour, the six highest average rated players in 2015, and a wild card chosen by each tournament which rounds out the field to ten. The 2016 Tour will once again follow the same order with Norway Chess in April, followed by the Sinquefield Cup in August/September, and finishing with the London Chess Classic in November/December.

| 2016 Grand Chess Tour | Location | Date |
|---|---|---|
| Norway Chess | Norway | April 16 – 29, 2016 |
| Sinquefield Cup | Saint Louis, USA | August 19 – September 2, 2016 |
| London Chess Classic | London, England | November 30 – December 13, 2016 |

The same prize fund will be offered in 2016 with each of the three 2016 Grand Chess Tour events awarding individual prize funds of $300,000, with competitors also tallying points toward a tour prize fund of $150,000; the overall tour champion will receive an additional $75,000.

Magnus with Headers Smaller:Soltyonecol.Qxd.Qxd

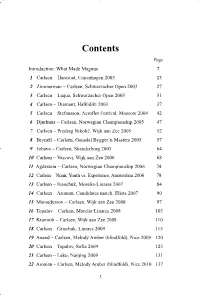

Magnus with Headers smaller:soltyonecol.qxd 07/07/2020 19:43

Contents

Introduction: What Made Magnus

1. Carlsen – Harestad, Copenhagen 2003
2. Zimmerman – Carlsen, Schwarzacher Open 2003
3. Carlsen – Laqua, Schwarzacher Open 2003
4. Carlsen – Diamant, Halkidiki 2003
5. Carlsen – Stefansson, Aeroflot Festival, Moscow 2004
6. Djurhuus – Carlsen, Norwegian Championship 2005
7. Carlsen – Predrag Nikolić, Wijk aan Zee 2005
8. Brynell – Carlsen, Gausdal Bygger’n Masters 2005
9. Jobava – Carlsen, Skanderborg 2005
10. Carlsen – Vescovi, Wijk aan Zee 2006
11. Agdestein – Carlsen, Norwegian Championship 2006
12. Carlsen – Nunn, Youth vs. Experience, Amsterdam 2006
13. Carlsen – Ivanchuk, Morelia-Linares 2007
14. Carlsen – Aronian, Candidates match, Elista 2007
15. Mamedyarov – Carlsen, Wijk aan Zee 2008
16. Topalov – Carlsen, Morelia-Linares 2008
17. Kramnik – Carlsen, Wijk aan Zee 2008
18. Carlsen – Grischuk, Linares 2009
19. Anand – Carlsen, Melody Amber (blindfold), Nice 2009
20. Carlsen – Topalov, Sofia 2009
21. Carlsen – Leko, Nanjing 2009
22. Aronian – Carlsen, Melody Amber (blindfold), Nice 2010
23. Carlsen – Bacrot, Nanjing 2010
24. Smeets – Carlsen, Wijk aan Zee 2011
25. Carlsen – Nakamura, Medias 2011
26. Carlsen – Gelfand, Tal Memorial, Moscow 2011
27. Carlsen – Nakamura, Wijk aan Zee 2011
28. Radjabov – Carlsen, Moscow 2012
29. Carlsen – Caruana, Sao Paulo 2012
30. Carlsen – Anand, Sao Paulo 2012
31. Carlsen – Judit Polgar, London 2012
32. Carlsen – Harikrishna, Wijk aan Zee 2013
33. Anand – Carlsen, World Championship, Chennai 2013
34. Carlsen – Caruana, Shamkir 2014
35. Carlsen – Anand, World Championship, Sochi 2014
36. Nakamura – Carlsen, Zürich 2014
37. Carlsen – Wojtaszek, Olympiad, Tromsø 2014
38. Carlsen – So, Sinquefield Cup, St.

London Chess Classic Day 9 Round-Up

13th December 2015. LONDON CHESS CLASSIC DAY 9 ROUND-UP. Yesterday’s round-up predicted it could be a late night at the Olympia Conference Centre on the last day of the London Chess Classic, but little did the fans know they were in for a high intensity 10-hour chess marathon. Magnus Carlsen, winner of the London Chess Classic and of the Grand Chess Tour. The final and decisive round had started at the usual 2 pm weekend kick-off time and, for the seventh time in the tournament, there were four draws and one decisive result. Adams-Caruana, Aronian-Vachier-Lagrave, Anand-Giri and Nakamura-Topalov were all balanced draws. The one decisive result (which was incidentally also the last game to finish) was crucial as Magnus Carlsen ultimately got the better of Alexander Grischuk. Carlsen was very lucky as Grischuk first missed a promising continuation with 30...Rxg4, then a forced draw, before sacrificing a rook for a non-existent perpetual check. Both players made the same oversight. This last-gasp win enabled Carlsen to join Giri and Vachier-Lagrave in the lead and thus force a 3-way play-off. As stipulated by the official regulations, ‘The two players placed lowest on tiebreak shall contest a two game Rapidplay match at a time limit of Game / 25 mins + 5 secs / move throughout’, which meant Giri and Vachier-Lagrave would have to face off first for the right to challenge Carlsen for tournament victory. In the first game, Giri opted for a Berlin (another one!) with the black pieces and played a perfect game to score an all-important victory.

ECU NEWSLETTER June 2017

NL JUNE 2017, EUROPEAN CHESS UNION. European Individual Chess Championship 2017; European Amateur Chess Championship 2017; European School Chess Championship 2017; European Youth Rapid&Blitz Chess Championship 2017. EUROPEAN CHESS UNION NEWSLETTER. MAXIM MATLAKOV WON EUROPEAN INDIVIDUAL CHESS CHAMPIONSHIP 2017. The European Individual Chess Championship 2017 took place from 29th May to 11th June in Minsk, the capital of Belarus. The 18th edition of one of the biggest tournaments in the ECU calendar was held in the Sports Palace in Minsk, with 400 players competing for the prestigious title of European Chess Champion and for one of the 22 qualifying places in the World Chess Cup 2018. The winner was Grandmaster Maxim Matlakov (RUS, 2714), who triumphed with 8.5 points. The Russian scored the same number of points as Baadur Jobava (GEO, 2713) and Vladimir Fedoseev (RUS, 2690), but thanks to better tiebreak criteria he became the new European Chess Champion, while the other two won silver and bronze respectively. The prize fund of 100 000 EUR attracted the majority of the best European players, including many women players. The best ranked women players were WGM Olga Girya (RUS, 2476) and IM Elisabeth Paehtz (GER, 2474) with 6.5 points, and WGM Aleksandra Goryachkina (RUS, 2470) with 6 points. The best senior player was GM Zurab Sturua (GEO, 2555), the 2014 World and 2015 European Senior Chess Champion. The best players U18 were IM Haik M. Martirosyan (ARM, 2516), IM Aram Hakobyan (ARM, 2449), IM Kirill Shevchenko (UKR, 2491) and IM Alexey Sarana (RUS, 2472), all with 7 points.

Newspaper for the Chess Super Tournament, 2-13 June 2014: The World Champion Wants to Win, Pages 8-10

Norway Chess: newspaper for the chess super tournament, 2-13 June 2014. The world champion wants to win (pages 8-10). Lahlum hands out yellow and red cards (pages 6-7). School chess on the rise in Rogaland (page 24). Get to know Carlsen's closest challenger (pages 26-27). EDITORIAL. NO LOGO NORWAY CHESS, 2–13 JUNE 2014. Kjell Madland follows the games from the spectator benches at Flor & Fjære during round seven of Norway Chess 2013. Chess interest heads for new heights. At last it is time for a new round of No Logo Norway Chess. The 2013 edition was a great success, with enormous attention regionally, nationally and internationally. What at the previous event was “pioneering work” and many visions has now moved into an exciting new phase. Since last time, Magnus Carlsen has become world champion and turned a whole nation upside down. Interest in chess, and perhaps most of all interest in Carlsen, reached heights no one imagined possible. Since then, many have started playing [...] We want to establish No Logo Norway Chess as one of the very strongest annual tournaments in the world. We are very proud of the list of participants, which includes the absolute world elite. This year's super tournament is likely to be one of the three strongest tournaments ever held, and the goal from here is to maintain that level. [...] and that people turn up and watch when celebrities, schoolchildren and superstars meet at the various events that No Logo Norway Chess puts on. The Russian Sergey Karjakin won last year. Perhaps a Norwegian will manage it this year: winning No Logo Norway Chess! Welcome! Kjell Madland

Carlsen Best in the World

(Periodicals postage paid at Seattle, WA) TIME DATED MATERIAL: DO NOT DELAY. This week on Norway.com: Genetic secrets of cod revealed. Read more at www.blog.norway.com. This week in the paper: The Annual Christmas Gift Guide. Read more on page 8. If the only prayer you said in your whole life was, “thank you,” that would suffice. - Meister Eckhart. Norwegian American Weekly, Vol. 120, No. 42, November 20, 2009. 7301 Fifth Avenue NE Suite A, Seattle, WA 98115. Tel (800) 305-0217 • www.norway.com. $1.50 per copy. Online News, Dateline Oslo. Cisco boosts bid for Tandberg to $3.41 billion: Cisco Systems has bumped up its buyout offer to $3.41 billion for video conferencing company Tandberg. The new bid expires December 1. Cisco said that if the bid isn’t accepted by that deadline, it will withdraw the bid and look at other ways to expand its reach in the video conferencing market. (CNET) Trade balance up: Exports of goods reached [...] Carlsen best in the world. Norway’s chess ace Magnus Carlsen (19) now tops the world ranking list, after he beat Hungarian former world champion Peter Leko in the last match of the Tal Memorial chess tournament in Moscow Nov. 14. SPECIAL RELEASE, Norwegian American Weekly Staff. For the second time in his chess career, Magnus Carlsen has reached the number one spot in the rankings. The last time he achieved this was in September 2008 during the tournament in Bilbao, after round four. Photo: Chessbase.com. Carlsen finished the event in Russia as the top ranked player in the world. CONTINUES PAGE 22

BT-Montag 23. Juli 2018 Copy

Bieler Tagblatt, Monday, 23 July 2018, page 11, Sport. Sport: In the Super League opener, promoted side Xamax won 2:0 in Lucerne. Swiss champions YB beat Grasshoppers 2:0 at home. (Page 12) Business: The man who rescued Fiat and Chrysler was not due to step down until 2019. But now Sergio Marchionne's 14-year success story is ending abruptly. (Page 17) Confirming the upward trend. Chess: World champion Magnus Carlsen is the centre of attention at the Biel Chess Festival. The Norwegian wants to prove that he has overcome last year's slump in form, which almost cost him the top spot in the world rankings. The summit meeting follows in November. By Michael Lehmann. It has been six years since Magnus Carlsen last played in Biel. The 27-year-old Norwegian did not want to say why he had stayed away from the chess festival for so long. “Let's look forward rather than back.” That seems to be the general tenor in the Carlsen camp, because his long-unchallenged position at the top of the world rankings has recently begun to wobble somewhat. Last year in particular, Carlsen had to deal with several setbacks. The low point came when he finished his home tournament, Norway Chess in Stavanger, ninth out of ten participants. Last August his Elo rating fell to 2822 points; it had last been lower in July 2011. Back then he had regained first place in the world rankings, and he has not given it up since. It came under threat several times last year, but in the end Carlsen managed to hold on to the top spot. No trace of self-doubt. Nevertheless, the chess world was puzzled.