Quality Code: Software Testing Principles, Practices, and Patterns

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

Practical Unit Testing for Embedded Systems Part 1 PARASOFT WHITE PAPER

PARASOFT WHITE PAPER

Table of Contents: Introduction; Basics of Unit Testing; Benefits; Critics; Practical Unit Testing in Embedded Development; The system under test; Importing uVision projects; Configure the C++test project for Unit Testing; Configure uVision project for Unit Testing; Configure results transmission; Deal with target limitations; Prepare test suite and first exemplary test case; Deploy it and collect results

www.parasoft.com

Introduction

The idea of unit testing has been around for many years. "Test early, test often" is a mantra that concerns unit testing as well. However, in practice, not many software projects have the luxury of a decent and up-to-date unit test suite. This may change, especially for embedded systems, as the demand for delivering quality software continues to grow. International standards, like IEC-61508-3, ISO/DIS-26262, or DO-178B, demand module testing for a given functional safety level. Unit testing at the module level helps to achieve this requirement. Yet, even if functional safety is not a concern, the cost of a recall, both in terms of direct expenses and in lost credibility, justifies spending a little more time and effort to ensure that our released software does not cause any unpleasant surprises.

In this article, we will show how to prepare, maintain, and benefit from setting up unit tests for a simplified simulated ASR module. We will use a Keil evaluation board MCBSTM32E with Cortex-M3 MCU, MDK-ARM with the new ULINK Pro debug and trace adapter, and Parasoft C++test Unit Testing Framework.

Parallel, Cross-Platform Unit Testing for Real-Time Embedded Systems

University of Denver, Digital Commons @ DU. Electronic Theses and Dissertations, Graduate Studies, 1-1-2017.

Recommended Citation: Pankumhang, Tosapon, "Parallel, Cross-Platform Unit Testing for Real-Time Embedded Systems" (2017). Electronic Theses and Dissertations. 1353. https://digitalcommons.du.edu/etd/1353

A Dissertation Presented to the Faculty of the Daniel Felix Ritchie School of Engineering and Computer Science, University of Denver, In Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy, by Tosapon Pankumhang, August 2017. Advisor: Matthew J. Rutherford. ©Copyright by Tosapon Pankumhang 2017. All Rights Reserved.

ABSTRACT

Embedded systems are used in a wide variety of applications (e.g., automotive, agricultural, home security, industrial, medical, military, and aerospace) due to their small size, low-energy consumption, and the ability to control real-time peripheral devices precisely. These systems, however, are different from each other in many aspects: processors, memory size, develop applications/OS, hardware interfaces, and software loading methods.

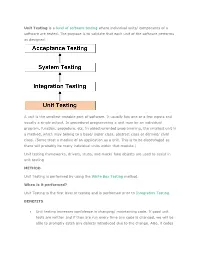

Unit Testing Is a Level of Software Testing Where Individual Units/ Components of a Software Are Tested. the Purpose Is to Valid

Unit Testing is a level of software testing where individual units/components of software are tested. The purpose is to validate that each unit of the software performs as designed. A unit is the smallest testable part of software. It usually has one or a few inputs and usually a single output. In procedural programming, a unit may be an individual program, function, procedure, etc. In object-oriented programming, the smallest unit is a method, which may belong to a base/super class, abstract class or derived/child class. (Some treat a module of an application as a unit. This is to be discouraged as there will probably be many individual units within that module.) Unit testing frameworks, drivers, stubs, and mock/fake objects are used to assist in unit testing.

METHOD

Unit Testing is performed by using the White Box Testing method.

When is it performed? Unit Testing is the first level of testing and is performed prior to Integration Testing.

BENEFITS

Unit testing increases confidence in changing/maintaining code. If good unit tests are written and if they are run every time any code is changed, we will be able to promptly catch any defects introduced due to the change. Also, if code has already been made less interdependent to make unit testing possible, the unintended impact of changes to any code is smaller.

Code is more reusable. In order to make unit testing possible, code needs to be modular. This means that code is easier to reuse.

Development is faster. How? If you do not have unit testing in place, you write your code and perform that fuzzy 'developer test' (you set some breakpoints, fire up the GUI, provide a few inputs that hopefully hit your code and hope that you are all set). If you have unit testing in place, you write the test, write the code and run the test.
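As a rough illustration of how a mock object helps test a unit in isolation, here is a minimal sketch in Python using the standard `unittest` and `unittest.mock` modules. The function `fahrenheit_from_sensor` and the sensor's `read_celsius` method are hypothetical names invented for this example; they do not come from the excerpt.

```python
import unittest
from unittest.mock import Mock


def fahrenheit_from_sensor(sensor):
    """Hypothetical unit under test: read Celsius from a sensor, return Fahrenheit."""
    return sensor.read_celsius() * 9 / 5 + 32


class FahrenheitFromSensorTest(unittest.TestCase):
    def test_converts_boiling_point(self):
        # The mock stands in for real hardware, so only the unit's own logic is tested.
        sensor = Mock()
        sensor.read_celsius.return_value = 100

        self.assertEqual(fahrenheit_from_sensor(sensor), 212)
        # Verify the unit asked its dependency for exactly one reading.
        sensor.read_celsius.assert_called_once_with()
```

Saved in a file, a test like this can be run with `python -m unittest`. Because the dependency is mocked, the test needs no real sensor.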

What Every Engineer Should Know About Software Engineering

WHAT EVERY ENGINEER SHOULD KNOW ABOUT SOFTWARE ENGINEERING

Phillip A. Laplante

Boca Raton London New York

CRC Press is an imprint of the Taylor & Francis Group, an informa business

CRC Press, Taylor & Francis Group, 6000 Broken Sound Parkway NW, Suite 300, Boca Raton, FL 33487-2742

© 2007 by Taylor & Francis Group, LLC

No claim to original U.S. Government works. Printed in the United States of America on acid-free paper. 10 9 8 7 6 5 4 3 2 1

International Standard Book Number-10: 0-8493-7228-3 (Softcover)

International Standard Book Number-13: 978-0-8493-7228-5 (Softcover)

This book contains information obtained from authentic and highly regarded sources. Reprinted material is quoted with permission, and sources are indicated. A wide variety of references are listed. Reasonable efforts have been made to publish reliable data and information, but the author and the publisher cannot assume responsibility for the validity of all materials or for the consequences of their use. No part of this book may be reprinted, reproduced, transmitted, or utilized in any form by any electronic, mechanical, or other means, now known or hereafter invented, including photocopying, microfilming, and recording, or in any information storage or retrieval system, without written permission from the publishers. For permission to photocopy or use material electronically from this work, please access www.

Including Jquery Is Not an Answer! - Design, Techniques and Tools for Larger JS Apps

Karl Krukow, Trifork A/S, Headquarters, Margrethepladsen 4, DK-8000 Århus C, Denmark, http://www.trifork.com

Trifork Geek Night, March 15th, 2011, Trifork, Aarhus

What is the question, then?

Does your JavaScript look like this?

What about your server side code?

Non-functional requirements for the Server-side

- Maintainability and extensibility
- Technical quality
  - e.g. modularity, reuse, separation of concerns
  - automated testing
  - continuous integration/deployment
  - Tool support (static analysis, compilers, IDEs)
- Productivity
- Performant
- Appropriate architecture and design
- …

Why so different?

- "Front-end" programming isn't 'real' programming?
- JavaScript isn't a 'real' language?
  - Browsers are impossible... That's just the way it is...

The problem is only going to get worse!

- JS apps will get larger and more complex.
- More logic and computation on the client.
- HTML5 and mobile web will require more programming.
- We have to test and maintain these apps.
- We will have harder requirements for performance (e.g. mobile).

NO

Including jQuery is NOT an answer to these problems. (Neither is any other js library.) You need to do more.

Improving quality on client side code

- The goal of this talk is to motivate and help you improve the technical quality of your JavaScript projects
- Three main points. To improve non-functional quality:
  - you need to understand the language and host APIs.
  - you need design, structure and file-organization as much (or even more) for JavaScript as you do in other languages, e.g.

Testing and Debugging Tools for Reproducible Research

Karl Broman, Biostatistics & Medical Informatics, UW–Madison

kbroman.org, github.com/kbroman, @kwbroman. Course web: kbroman.org/Tools4RR

We spend a lot of time debugging. We’d spend a lot less time if we tested our code properly. We want to get the right answers. We can’t be sure that we’ve done so without testing our code. Set up a formal testing system, so that you can be confident in your code, and so that problems are identified and corrected early. Even with a careful testing system, you’ll still spend time debugging. Debugging can be frustrating, but the right tools and skills can speed the process.

"I tried it, and it worked."

This is about the limit of most programmers’ testing efforts. But: Does it still work? Can you reproduce what you did? With what variety of inputs did you try?

"It's not that we don't test our code, it's that we don't store our tests so they can be re-run automatically." – Hadley Wickham, R Journal 3(1):5–10, 2011

This is from Hadley’s paper about his testthat package.

Types of tests

- Check inputs: Stop if the inputs aren't as expected.
- Unit tests: For each small function: does it give the right results in specific cases?
- Integration tests: Check that larger multi-function tasks are working.
- Regression tests: Compare output to saved results, to check that things that worked continue working.

Your first line of defense should be to include checks of the inputs to a function: If they don’t meet your specifications, you should issue an error or warning.
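Three of the test types listed above (input checks, unit tests, regression tests) can be sketched in a few lines. The slides discuss R and the testthat package; the sketch below uses Python's standard `unittest` module instead, purely as an assumption for illustration. The function `mean` and the constant `SAVED_RESULT` are hypothetical.

```python
import unittest


def mean(values):
    """Return the arithmetic mean, after checking the inputs."""
    # Input checks: stop early if the inputs aren't as expected.
    if len(values) == 0:
        raise ValueError("values must not be empty")
    if not all(isinstance(v, (int, float)) for v in values):
        raise TypeError("values must be numeric")
    return sum(values) / len(values)


# Hypothetical result saved from an earlier, trusted run (regression baseline).
SAVED_RESULT = 2.5


class MeanTests(unittest.TestCase):
    def test_unit_specific_case(self):
        # Unit test: right result in one specific case.
        self.assertEqual(mean([1, 2, 3]), 2)

    def test_input_check_rejects_empty(self):
        # The input check should fire before any arithmetic happens.
        with self.assertRaises(ValueError):
            mean([])

    def test_regression_matches_saved_result(self):
        # Regression test: output still matches the stored baseline.
        self.assertAlmostEqual(mean([1, 2, 3, 4]), SAVED_RESULT)
```

Storing tests in a file like this is what lets them be re-run automatically, for example with `python -m unittest`. Integration tests are omitted here because they need more than one function to exercise.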

The Art, Science, and Engineering of Fuzzing: a Survey

Valentin J.M. Manès, HyungSeok Han, Choongwoo Han, Sang Kil Cha, Manuel Egele, Edward J. Schwartz, and Maverick Woo

Abstract: Among the many software vulnerability discovery techniques available today, fuzzing has remained highly popular due to its conceptual simplicity, its low barrier to deployment, and its vast amount of empirical evidence in discovering real-world software vulnerabilities. At a high level, fuzzing refers to a process of repeatedly running a program with generated inputs that may be syntactically or semantically malformed. While researchers and practitioners alike have invested a large and diverse effort towards improving fuzzing in recent years, this surge of work has also made it difficult to gain a comprehensive and coherent view of fuzzing. To help preserve and bring coherence to the vast literature of fuzzing, this paper presents a unified, general-purpose model of fuzzing together with a taxonomy of the current fuzzing literature. We methodically explore the design decisions at every stage of our model fuzzer by surveying the related literature and innovations in the art, science, and engineering that make modern-day fuzzers effective.

Index Terms: software security, automated software testing, fuzzing.

1 INTRODUCTION

Ever since its introduction in the early 1990s [152], fuzzing has remained one of the most widely-deployed techniques to discover software security vulnerabilities. At a high level, fuzzing refers to a process of repeatedly running a program with generated inputs that may be syntactically or seman-

Figure 1 on p. 5) and an increasing number of fuzzing studies appear at major security conferences (e.g. [225], [52], [37], [176], [83], [239]). In addition, the blogosphere is filled with many success stories of fuzzing, some of which also contain what we consider to be gems that warrant a permanent place in the literature.
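The high-level description of fuzzing (repeatedly running a program with generated, possibly malformed inputs) can be sketched as a toy random fuzzer. This is not the model fuzzer from the survey. The target `parse_percentage`, its deliberate bug, and the `fuzz` helper are all hypothetical and exist only for illustration.

```python
import random


def parse_percentage(text):
    """Hypothetical program under test: parse a string such as '42%' into 42."""
    # Deliberate bug: indexing an empty string raises IndexError.
    if text[-1] != "%":
        raise ValueError("missing percent sign")
    return int(text[:-1])


def fuzz(target, runs=1000, seed=0):
    """Run target on random short strings and collect unexpected exceptions."""
    rng = random.Random(seed)  # fixed seed keeps the run reproducible
    alphabet = "0123456789%-+ x"
    crashes = []
    for _ in range(runs):
        length = rng.randint(0, 6)
        candidate = "".join(rng.choice(alphabet) for _ in range(length))
        try:
            target(candidate)
        except ValueError:
            pass  # documented rejection of malformed input, not a crash
        except Exception as exc:  # anything else counts as a finding
            crashes.append((candidate, exc))
    return crashes


if __name__ == "__main__":
    for candidate, exc in fuzz(parse_percentage)[:3]:
        print(repr(candidate), type(exc).__name__)
```

The sketch treats `ValueError` as the target's expected way of rejecting bad input and records every other exception. Real fuzzers differ mainly in how they generate inputs and how they detect failures.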

Background Goal Unit Testing Regression Testing Automation

Streamlining a Workflow

Jeffrey Falgout, Daniel Wilkey. Science, Discovery, and the Universe. Bloomberg L.P. Computer Science and Mathematics Major.

Background

Bloomberg L.P.'s main product is a desktop application which provides financial tools. Bloomberg L.P. employs many computer programmers to maintain, update, and add new features to this product.

Goal

Many of the tasks that a programmer has to perform as part of a team can be automated. The goal of this project was to work with a team to automate tasks where possible and make them easier where impossible.

Regression Testing

Another useful tool to have in sufficiently complex systems is a suite of regression tests. Regression testing allows you to test very high level behavior which can detect low level bugs.

The product the code base was implementing has a record/playback feature. This was leveraged to create a regression testing framework. This framework leveraged the unit testing framework wherever possible.

At various points during the playback, callback methods would be invoked. These methods could have testing logic to make sure the application was in the

Jenkins instance would begin running all unit and regression tests. This Jenkins job would then report the results on the Phabricator review and signal the author in an appropriate way. The previous workflow required manual intervention to run tests. This new workflow only requires the developer to create his feature branch in order for all tests to be run automatically.

Figure caption: An example of the new workflow, where a Jenkins instance will automatically run tests and update the peer review.

Introduction to Unit Testing

INTRODUCTION TO UNIT TESTING

In C#, you can think of a unit as a method. You thus write a unit test by writing something that tests a method. For example, let’s say that we had the aforementioned Calculator class and that it contained an Add(int, int) method. Let’s say that you want to write some code to test that method.

`public class CalculatorTester { public void TestAdd() { var calculator = new Calculator(); if (calculator.Add(2, 2) == 4) Console.WriteLine("Success"); else Console.WriteLine("Failure"); } }`

Let’s take a look now at what some code written for an actual unit test framework (MS Test) looks like.

`[TestClass] public class CalculatorTests { [TestMethod] public void TestMethod1() { var calculator = new Calculator(); Assert.AreEqual(4, calculator.Add(2, 2)); } }`

Notice the attributes, TestClass and TestMethod. Those exist simply to tell the unit test framework to pay attention to them when executing the unit test suite. When you want to get results, you invoke the unit test runner, and it executes all methods decorated like this, compiling the results into a visually pleasing report that you can view.

Let’s take a look at your top 3 unit test framework options for C#.

MSTest/Visual Studio

MSTest was actually the name of a command-line tool for executing tests. MSTest ships with Visual Studio, so you have it right out of the box, in your IDE, without doing anything. With MSTest, getting that set up is as easy as File->New Project. Then, when you write a test, you can right click on it and execute, having your result displayed in the IDE. One of the most frequent knocks on MSTest is that of performance.
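For comparison, the same framework-style test can be sketched in Python's standard `unittest` module. This changes language from the C# in the excerpt and is meant only as a rough analogue. The `Calculator` class below is a hypothetical Python counterpart of the one the text describes.

```python
import unittest


class Calculator:
    """Hypothetical Python counterpart of the Calculator class in the text."""

    def add(self, a, b):
        return a + b


class CalculatorTests(unittest.TestCase):
    # unittest collects methods whose names start with "test", which plays
    # roughly the role that the [TestMethod] attribute plays in MSTest.
    def test_add(self):
        calculator = Calculator()
        self.assertEqual(4, calculator.add(2, 2))
```

Running `python -m unittest` in the directory containing the file invokes the test runner, which discovers and executes the test and reports the outcome.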

Software Development Process for Teams with Low Expertise, High-Rotation and No-Architect: a Systematic Mapping Study

Paulina Valencia Franco, Electrical Engineering Department, Universidad Autónoma Metropolitana, Iztapalapa, México. Email: paulatina63 -at- gmail.com

Angelina Espinoza, Humberto Cervantes Maceda, Electrical Engineering Department, Universidad Autónoma Metropolitana, Iztapalapa, México. Email: aespinoza, hcm -at- xanum.uam.mx

Abstract: Having a well-organized software team is fundamental to achieving the business goals of a software development project, such as functionality and quality within the planned times. However, teams present particular problems that are common in both industrial and academic settings; this paper focuses on three: a) teams with members with low expertise on methodologies, b) teams with high member rotation, or c) teams lacking a software architect. One approach to address this situation is to define a software development process, adapted from the adaptability characteristics and benefits offered by several methodologies currently used, in order that the development team obtains the expected results in terms of productivity, time and quality of the

Process) [1], TSP (Team Software Process) [2], PSP (Personal Software Process) [3], XP (Extreme Programming) [4] or SCRUM [5].

These methodologies, by their nature, are more or less prepared to face the team features previously mentioned, but the real challenge is to combine these models to get an adapted process model for overcoming all three previous features in development teams. In particular, these kinds of teams are commonly found in academic environments where there are no resources to hire a technical leader or experienced staff, so development teams are mainly composed of students who are in constant rotation.

Adapting the Harris Matrix for Software Stratigraphy

Andrew Reinhard

Edward Harris’s (1979) landmark work, Principles of Archaeological Stratigraphy, from which sprouted the idea and practice of the Harris matrix, became my windmill (or albatross) in 2017 as I attempted to leverage its data visualization technique onto a digital archaeological site. This visualization would not only allow archaeologists to understand the composition and history of a digital built environment (something important to the emerging field of digital heritage) but also would contribute to the conservation and preservation of these born-digital archaeological sites.

This is not a new concern for people who work within the broad umbrella of “digital humanities.” “Software archaeology” (the attempt to reverse-engineer poorly documented or undocumented software in order to restore and preserve functionality) has existed formally for over 15 years.1 Digital preservation is also not a new idea and forms a border of software archaeology; namely, what to do with software post-use or post-“excavation.”2 None of the software archaeology approaches, however, treat software as archaeological sites in the traditional sense, and I wanted to see what would happen if I conducted a truly archaeological investigation of a software application using a tool known

ABSTRACT

In 1979, Edward C. Harris invented and published his eponymous matrix for visualizing stratigraphy, creating an indispensable tool for generations of archaeologists. When presenting his matrix, Harris also detailed his four laws of archaeological stratigraphy: superposition, original horizontal, original continuity, and stratigraphic succession. In 2017, I created the first stratigraphic matrix for software, using as a test the 2016 video game No Man’s Sky (Hello Games).

Unit Testing Or What Can We Learn from Tech Startups

2014-04-01, Saulius Lukauskas

Outline

- Motivation
- Hierarchy of Testing
- Short tutorial of unit testing in Python
- Two rules of writing testable code
- Kickstarting

Software Development Workflow: Coding, Testing, Production

This is the standard software workflow which most people are familiar with. Unfortunately, when people talk about coding, only the coding and production parts are mentioned, while the testing part is often not spoken about.

The better we get at Coding, the fewer problems there will be with our software

There is this misconception in software development, an arrogance one may say, that good programmers make good code that has few problems.

The better we get at Testing, the fewer problems there will be with our software

The reality is that people who are good at testing, not at programming per se, make good software. Good programmers just make code that is easier to test.

Software Development Workflow: Putting bugs in, Debugging, Production

This is due to the fact that the development cycle often looks like this.

Cost of a software bug

The cost of having a bug in your software increases with each step of the cycle.

"My program crashed when I clicked “run”"

The bugs at the coding stage are very easy to spot: the compiler often warns about them. They are also so easy to fix that most of us do not even consider them “bugs” any more.

"I tried this sample model and the probabilities did not sum to 1"

Bugs at the quality assurance (testing) stage are a bit harder to spot. They often require running your software for a good few minutes and checking the output carefully.