Distributed File Systems

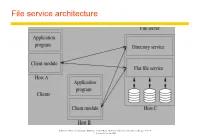

File service architecture

Instructor's Guide for Coulouris, Dollimore and Kindberg, Distributed Systems: Concepts and Design, Edn. 4 © Pearson Education 2005

Flat file service

The flat file service implements operations on the contents of files. Each request to the flat file service carries a unique file identifier (UFID) that names the file to be operated on. A UFID is unique among all the files in the distributed system. The flat file service generates a new UFID for each file it creates.

Directory service

The directory service provides a mapping between text names and UFIDs. It creates directories and can add files to and delete files from them. The directory service is itself a client of the flat file service, because the directory files are stored there.

Client module

- integrates and extends the operations of the flat file and directory services
- provides a common application programming interface (and can emulate different file interfaces)
- stores the location of the flat file and directory services

Though the client module is shown as directly supporting application programs, in practice it integrates into a virtual file system.

Virtual File System

A virtual file system (VFS) is an abstraction layer on top of a more concrete file system. Its purpose is to allow client applications to access different types of concrete file systems in a uniform way. A VFS can, for example, be used to access local and network storage devices transparently, without the client application noticing the difference.
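The division of labour between the flat file service, the directory service, and the client module can be illustrated with a small in-memory sketch. It is a toy model under stated assumptions, not the interface defined in the textbook: the class and method names (`FlatFileService`, `add_name`, `un_name`, and so on), the use of `uuid4` as a stand-in for a system-wide UFID, and the JSON encoding of directory files are all hypothetical choices made for illustration. Both services run in one process here, whereas in a real system they would be reached over the network.

```python
import json
import uuid


class FlatFileService:
    """Operates on file contents; files are addressed only by UFID."""

    def __init__(self):
        self._files = {}  # UFID -> bytearray holding the file contents

    def create(self):
        ufid = uuid.uuid4().hex  # assumption: stand-in for a unique UFID
        self._files[ufid] = bytearray()
        return ufid

    def read(self, ufid, offset=0, n=None):
        data = self._files[ufid]
        end = len(data) if n is None else offset + n
        return bytes(data[offset:end])

    def write(self, ufid, offset, data):
        buf = self._files[ufid]
        if len(buf) < offset:  # pad with zero bytes when writing past the end
            buf.extend(b"\x00" * (offset - len(buf)))
        buf[offset:offset + len(data)] = data

    def truncate(self, ufid, length=0):
        del self._files[ufid][length:]

    def delete(self, ufid):
        del self._files[ufid]


class DirectoryService:
    """Maps text names to UFIDs; each directory is itself a flat file."""

    def __init__(self, flat):
        self._flat = flat  # the directory service is a client of the flat file service

    def _load(self, dir_ufid):
        return json.loads(self._flat.read(dir_ufid).decode())

    def _store(self, dir_ufid, entries):
        self._flat.truncate(dir_ufid)
        self._flat.write(dir_ufid, 0, json.dumps(entries).encode())

    def create_directory(self):
        dir_ufid = self._flat.create()
        self._store(dir_ufid, {})
        return dir_ufid

    def add_name(self, dir_ufid, name, ufid):
        entries = self._load(dir_ufid)
        entries[name] = ufid
        self._store(dir_ufid, entries)

    def lookup(self, dir_ufid, name):
        return self._load(dir_ufid)[name]  # raises KeyError if the name is absent

    def un_name(self, dir_ufid, name):
        entries = self._load(dir_ufid)
        del entries[name]
        self._store(dir_ufid, entries)


class ClientModule:
    """Combines both services behind a single name-based interface."""

    def __init__(self, flat, directory, root):
        self._flat, self._dir, self._root = flat, directory, root

    def create(self, name, data=b""):
        ufid = self._flat.create()
        self._flat.write(ufid, 0, data)
        self._dir.add_name(self._root, name, ufid)

    def read(self, name):
        return self._flat.read(self._dir.lookup(self._root, name))

    def remove(self, name):
        ufid = self._dir.lookup(self._root, name)
        self._dir.un_name(self._root, name)
        self._flat.delete(ufid)


flat = FlatFileService()
directory = DirectoryService(flat)
client = ClientModule(flat, directory, directory.create_directory())
client.create("notes.txt", b"hello")
print(client.read("notes.txt"))  # b'hello'
```

Applications in this sketch only ever see text names. The client module resolves each name to a UFID through the directory service and then passes that UFID to the flat file service, which is the separation the architecture describes.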