JVM for a Heterogeneous Shared Memory System

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

-

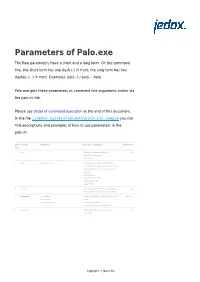

Parameters of Palo.Exe

The Palo parameters have a short and a long form. On the command line, the short form has one dash (-) in front; the long form has two dashes (--) in front. Examples: palo -? / palo --help. Palo.exe gets these parameters as command line arguments and/or via the palo.ini file. Please see Order of command execution at the end of this document. In the file …\Jedox Suite\olap\data\palo.ini.sample you can find descriptions and examples of how to use parameters in the palo.ini.

| Short form | Long form | Argument(s) | Description / Example(s) | Default value |
|---|---|---|---|---|
| ? | help | | Displays the parameters of palo.exe. Only for the command line. On/off switch. | False |
| a | admin | `<address> <port>` | HTTP interface with server browser and online documentation. An address can be a server name, an internet address or "" for all server internet addresses. Port is a number. Examples: `admin 192.168.1.2 7777`, `admin localhost 7770`, `admin "" 7778` | |
| A | auto-load | | Loads all databases defined in the palo.csv into memory on server start. On/off switch. | True |
| b | cache-barrier | `<max number of cells to store in each cube cache>` | Sets the max number of cells to store in each cube cache. Examples: `cache-barrier 150000000`, `cache-barrier 0` (sets cache-barrier to 0). | 100000000 |
| B | auto-commit | | Commits all changes on server shutdown. On/off switch. | True |
| c | crypt | | Turns on encryption of the database files. If this is set, newly saved files are encrypted using the Blowfish algorithm. On/off switch. | False |

Copyright © Jedox AG

The Role of Multidimensional Databases in Modern Organizations

Economic Insights – Trends and Challenges, Vol. IV (LXVII), No. 2/2015, 95-102. The Role of Multidimensional Databases in Modern Organizations. Ana Tănăsescu, Faculty of Economic Sciences, Petroleum-Gas University of Ploieşti, Bd. Bucureşti 39, 100680, Ploieşti, Romania, e-mail: [email protected]. Abstract: The relational databases represent an ineffective data storage method when organizations manipulate a large amount of heterogeneous data and must realize complex analyses on these data. Thus, a new concept is required, the multidimensional database concept. In this paper the multidimensional database concept will be presented, its characteristics will be described, a comparative analysis between multidimensional databases and relational databases will be realized and a multidimensional database will be presented. This database was designed using Palo for Excel tool and can be used in order to analyse the academic staff research activity from a faculty. Keywords: multidimensional databases; multidimensional modelling; relational databases. JEL Classification: C63; C81. Introduction: The large amount of heterogeneous data accumulated by organizations represents one of the reasons why organizations need a technology that allows them to quickly achieve complex analyses on these data. Because the relational databases are ineffective from this point of view, a new concept is required, the multidimensional database concept. Annually, a large volume of information regarding the teaching and research activity of the academic staff from faculty departments is accumulated. The departments’ managers as well as the teaching process and research activity responsible persons must, periodically, elaborate reports, synthesizing the information accumulated in different time periods. A multidimensional database where this information will be stored is required to be designed in order to streamline their activity.

The Cedar Programming Environment: a Midterm Report and Examination

The Cedar Programming Environment: A Midterm Report and Examination. Warren Teitelman†. CSL-83-11, June 1984 [P83-00012]. © Copyright 1984 Xerox Corporation. All rights reserved. CR Categories and Subject Descriptors: D.2.6 [Software Engineering]: Programming environments. Additional Keywords and Phrases: integrated programming environment, experimental programming, display oriented user interface, strongly typed programming language environment, personal computing. † The author's present address is: Sun Microsystems, Inc., 2550 Garcia Avenue, Mountain View, Ca. 94043. The work described here was performed while employed by Xerox Corporation. Xerox Corporation, Palo Alto Research Center, 3333 Coyote Hill Road, Palo Alto, California 94304. Abstract: This collection of papers comprises a report on Cedar, a state-of-the-art programming system. Cedar combines in a single integrated environment: high-quality graphics, a sophisticated editor and document preparation facility, and a variety of tools for the programmer to use in the construction and debugging of his programs. The Cedar Programming Language is a strongly-typed, compiler-oriented language of the Pascal family. What is especially interesting about the Cedar project is that it is one of the few examples where an interactive, experimental programming environment has been built for this kind of language. In the past, such environments have been confined to dynamically typed languages like Lisp and Smalltalk. The first paper, "The Roots of Cedar," describes the conditions in 1978 in the Xerox Palo Alto Research Center's Computer Science Laboratory that led us to embark on the Cedar project and helped to define its objectives and goals.

Open Source As an Alternative to Commercial Software

OPEN-SOURCE AS AN ALTERNATIVE TO COMMERCIAL SOFTWARE. Final Report 583. Prepared by: Sean Coleman, 2401 E Rio Salado Pkwy. #1179, Tempe, Arizona 85281. March 2009. Prepared for: Arizona Department of Transportation, 206 South 17th Avenue, Phoenix, Arizona 85007, in cooperation with U.S. Department of Transportation, Federal Highway Administration. The contents of this report reflect the views of the authors who are responsible for the facts and the accuracy of the data presented herein. The contents do not necessarily reflect the official views or policies of the Arizona Department of Transportation or the Federal Highway Administration. This report does not constitute a standard, specification, or regulation. Trade or manufacturers’ names which may appear herein are cited only because they are considered essential to the objectives of the report. The U.S. Government and the State of Arizona do not endorse products or manufacturers.

TECHNICAL REPORT DOCUMENTATION PAGE

1. Report No.: FHWA-AZ-09-583
2. Government Accession No.
3. Recipient’s Catalog No.
4. Title and Subtitle: Open-Source as an Alternative to Commercial Software
5. Report Date: March, 2009
6. Performing Organization Code
7. Authors: Sean Coleman
8. Performing Organization Report No.
9. Performing Organization Name and Address: Sean Coleman, 2401 E Rio Salado Pkwy, #1179, Tempe, AZ 85281
10. Work Unit No.
11. Contract or Grant No.: SPR-583
12. Sponsoring Agency Name and Address: Arizona Department of Transportation, 206 S. 17th Ave., Phoenix, AZ 85007. Project Managers: Frank DiBugnara, John Semmens, and Steve Rost
13. Type of Report & Period Covered
14. Sponsoring Agency Code
15. Supplementary Notes

Taxonomies of Visual Programming and Program Visualization

Taxonomies of Visual Programming and Program Visualization. Brad A. Myers. September 20, 1989. School of Computer Science, Carnegie Mellon University, Pittsburgh, PA 15213-3890. [email protected] (412) 268-5150. The research described in this paper was partially funded by the National Science and Engineering Research Council (NSERC) of Canada while I was at the Computer Systems Research Institute, University of Toronto, and partially by the Defense Advanced Research Projects Agency (DOD), ARPA Order No. 4976 under contract F33615-87-C-1499 and monitored by the Avionics Laboratory, Air Force Wright Aeronautical Laboratories, Aeronautical Systems Division (AFSC), Wright-Patterson AFB, OH 45433-6543. The views and conclusions contained in this document are those of the author and should not be interpreted as representing the official policies, either expressed or implied, of the Defense Advanced Research Projects Agency of the US Government. This paper is an updated version of [4] and [5]. Part of the work for this article was performed while the author was at the University of Toronto in Toronto, Ontario, Canada. ABSTRACT: There has been a great interest recently in systems that use graphics to aid in the programming, debugging, and understanding of computer systems. The terms "Visual Programming" and "Program Visualization" have been applied to these systems. This paper attempts to provide more meaning to these terms by giving precise definitions, and then surveys a number of systems that can be classified as providing Visual Programming or Program Visualization. These systems are organized by classifying them into three different taxonomies.

Debian GNU/Linux

Debian GNU/Linux. Copyright © 2004, 2005 Debian. Debian GNU/Linux 3.1 (“sarge”), PA-RISC (“hppa”); debian-installer (http://www.debian.org/devel/debian-installer/). Contents: 1. Debian; 1.1. Debian?; 1.2. GNU/Linux?; 1.3. Debian GNU/Linux?

Jedox Technical Specifications 2020.3

Jedox Technical Specifications 2020.3. Updated October 7, 2020. Copyright © Jedox AG. Copyright Reserved. Reproduction including electronic reproduction and substantive recovery - even of parts - only with the approval of Jedox AG. Legal steps may be taken in case of non-compliance. Jedox, Worksheet-Server™, Supervision Server and Palo are trademarks or registered trademarks of Jedox AG. Microsoft and Microsoft Excel are trademarks or registered trademarks of the Microsoft Corp. All other trademarks are property of the respective companies. For the purpose of readability, brand names and trademarks are not explicitly stressed. If a relevant description (e.g. TM or ®) is missing, it is not to be concluded that the name is freely available. Contents: Jedox Architecture; Component Communication; Excel Add-in; Jedox Web; Platform Support Guide for Jedox Software; Support Terms; Supported Platforms; Table 1: Jedox Excel Add-in, In-Memory DB, Jedox Web; Table 2: Integrator, 3rd Party Access; Virtualized Environments; Jedox Cloud Hardware Specifications; Capacity guide for Jedox Cloud; Hardware specifications; Hardware Requirements; Jedox Cloud; Jedox On-Premises; First server machine; Jedox GPU Accelerator; Additional server machine(s); Client Machines; Screen Resolution; Software Requirements; Limits of Jedox Software Components; Limits of Jedox In-Memory DB; Limits of Jedox Web; Limits of the Jedox Integrator. Jedox Architecture: The diagram below displays an overview of the Jedox software architecture: Jedox In-Memory DB (OLAP), a database completely loaded into the memory, providing high performance.

SECURITY LIFECYCLE REVIEW Acme Corporation

SECURITY LIFECYCLE REVIEW: Acme Corporation. Prepared by Acme, www.acmecorporation.com. The Security Lifecycle Review summarizes the threat exposure and security risks facing Acme Corporation and the customers connecting to their networks. The data used for this analysis was gathered by Palo Alto Networks during the report time period. The report provides actionable intelligence and risk assessment around the applications, URL traffic, and types of content that are traversing the Acme Corporation network as well as volume and types of threats and vulnerabilities that are observed. Recommendations are provided that can be employed to reduce the overall risk exposure for both the network operator and their customers. Industry Average Period: 9 DAYS, Tue, Apr 02, 2019 - Wed, Apr 10, 2019. Confidential Information - Do Not Redistribute. TABLE OF CONTENTS: Executive Summary; Applications (Applications at a Glance, Applications that Introduce Risk, Applications that Introduce Risk - Detail, SaaS Applications); URL Activity; File Transfer (File Transfer Analysis); Threats (Threats at a Glance, High-Risk and Malicious File Type Analysis, Application Vulnerabilities, Known and Unknown Malware, Command and Control Analysis); Summary. EXECUTIVE SUMMARY FOR Acme Corporation: The Security Lifecycle Review summarizes the business and security risks facing Acme Corporation. The data used for this analysis was gathered by Palo Alto Networks during the report time period. The report provides actionable intelligence around the applications, URL traffic, types of content, and threats traversing the network, including recommendations that can be employed to reduce the organization’s overall risk exposure.

Next-Generation OLAP the Future of Dimensional Analysis

Next-Generation OLAP: The Future of Dimensional Analysis. John O'Brien, Zukeran Technologies, August 2008.

Agenda:
• OLAP Overview
• A brief history of OLAP vendors and products
• The next-generation technologies and styles
• What does the future hold

OLAP Overview. Online Analytical Processing: ability to quickly answer analytical queries that are multi-dimensional in nature.
1. Interactive experience requirement – A few seconds for every answer
2. “Framed” business questions – How much of what happened when by whom?
3. “Slice and Dice” means Pivot tables at click speed – Microsoft Excel with plug-ins

Fundamental principles:
• Dimensional:
  – Logically modeled star schemas with Facts and Dimensions
• Aggregated:
  – Performance from pre-calculating aggregates (Sum, Average, Min, Max, Count, Distinct Count)
  – View selections to minimize over calculating every combination
• Hierarchical:
  – Levels of aggregation per dimension (example: day -> week -> month -> quarter -> year)
  – Multiple hierarchies on the same dimension (example: Fiscal and Calendar year, Sales regions and geography)

OLAP in Mixed BI Workloads.

OLAP Taxonomy, mainstream:
• MOLAP – Classic multi-dimensional cubes
• ROLAP – Relational database used
• HOLAP – Hybrid combination of MOLAP/ROLAP

OLAP Taxonomy, minority variations:
• DOLAP – Desktop OLAP
• WOLAP – Web-based OLAP
• RTOLAP – Real-time OLAP

MOLAP Cubes:
• Multi-dimensional database server optimized for:
  – Highest performance from specialized indexing and storage optimizations
  – Aggregating data
  – Storing and compressing data
  – High

NBS Programming Environment Workshop Report

of Commerce, National Bureau of Standards. Computer Science and Technology. NBS Special Publication 500-78. NBS Programming Environment Workshop Report. NATIONAL BUREAU OF STANDARDS: The National Bureau of Standards was established by an act of Congress on March 3, 1901. The Bureau's overall goal is to strengthen and advance the Nation's science and technology and facilitate their effective application for public benefit. To this end, the Bureau conducts research and provides: (1) a basis for the Nation's physical measurement system, (2) scientific and technological services for industry and government, (3) a technical basis for equity in trade, and (4) technical services to promote public safety. The Bureau's technical work is performed by the National Measurement Laboratory, the National Engineering Laboratory, and the Institute for Computer Sciences and Technology. THE NATIONAL MEASUREMENT LABORATORY provides the national system of physical and chemical and materials measurement; coordinates the system with measurement systems of other nations and furnishes essential services leading to accurate and uniform physical and chemical measurement throughout the Nation's scientific community, industry, and commerce; conducts materials research leading to improved methods of measurement, standards, and data on the properties of materials needed by industry, commerce, educational institutions, and Government; provides advisory and research services to other Government agencies; develops, produces, and distributes Standard

Palo Alto Networks® Compatibility Matrix

Palo Alto Networks® Compatibility Matrix. paloaltonetworks.com/documentation. Contact Information: Corporate Headquarters: Palo Alto Networks, 3000 Tannery Way, Santa Clara, CA 95054, www.paloaltonetworks.com/company/contact-support. About the Documentation: For the most recent version of this guide or for access to related documentation, visit the Technical Documentation portal www.paloaltonetworks.com/documentation. To search for a specific topic, go to our search page www.paloaltonetworks.com/documentation/document-search.html. Have feedback or questions for us? Leave a comment on any page in the portal, or write to us at [email protected]. Copyright: Palo Alto Networks, Inc., www.paloaltonetworks.com. © 2016-2020 Palo Alto Networks, Inc. Palo Alto Networks is a registered trademark of Palo Alto Networks. A list of our trademarks can be found at www.paloaltonetworks.com/company/trademarks.html. All other marks mentioned herein may be trademarks of their respective companies. Last Revised March 3, 2020. Table of Contents: Supported OS Releases by Model; Palo Alto Networks Next-Gen Firewalls; Palo Alto Networks Appliances; HA Port and Processor Support.
