Deadline-Driven Serverless for the Edge

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Differential Fuzzing the WebAssembly

Master’s Programme in Security and Cloud Computing
Differential Fuzzing the WebAssembly
Master’s Thesis, Aalto University - EURECOM, 2020
Gilang Mentari Hamidy

This thesis is a public document and does not contain any confidential information.

Thesis submitted in partial fulfillment of the requirements for the degree of Master of Science in Technology. Antibes, 27 July 2020.
Supervisor: Prof. Davide Balzarotti, EURECOM
Co-Supervisor: Prof. Jan-Erik Ekberg, Aalto University
Copyright © 2020 Gilang Mentari Hamidy

Aalto University - School of Science, EURECOM
Master’s Programme in Security and Cloud Computing
Abstract
Author: Gilang Mentari Hamidy
Title: Differential Fuzzing the WebAssembly
School: School of Science
Degree programme: Master of Science
Major: Security and Cloud Computing (SECCLO)
Code: SCI3084
Supervisor: Prof. Davide Balzarotti, EURECOM; Prof. Jan-Erik Ekberg, Aalto University
Level: Master’s thesis
Date: 27 July 2020
Pages: 133
Language: English

WebAssembly, colloquially known as Wasm, is a specification for an intermediate representation suited to the web environment, particularly the client side. It provides a machine abstraction and a hardware-agnostic instruction set, so a high-level programming language can target Wasm for compilation instead of a specific hardware architecture. The JavaScript engine implements the Wasm specification and recompiles Wasm instructions into the machine instructions of the target on which the program is executed. Technically, Wasm is similar to popular virtual machine bytecodes such as Java Virtual Machine (JVM) bytecode or Microsoft Intermediate Language (MSIL).
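Differential fuzzing, the technique named in the thesis title, feeds the same randomly generated inputs to two implementations of one specification and reports every input on which they disagree. The sketch below is a generic illustration, not the thesis's tooling. Both "engines" are hypothetical Python functions that claim to implement Wasm's `i32.div_s`, which is signed 32-bit division that truncates toward zero and traps on a zero divisor or on `INT32_MIN / -1`. The candidate deliberately uses Python's floor division, so the fuzzer has a real discrepancy to find.

```python
import random

INT32_MIN, INT32_MAX = -2**31, 2**31 - 1


def div_s_reference(a: int, b: int) -> int:
    """Signed 32-bit division truncating toward zero, as i32.div_s specifies.
    Wasm traps are modeled as Python exceptions."""
    if b == 0:
        raise ZeroDivisionError("integer divide by zero")
    if a == INT32_MIN and b == -1:
        raise OverflowError("integer overflow")
    q = abs(a) // abs(b)
    return q if (a < 0) == (b < 0) else -q


def div_s_candidate(a: int, b: int) -> int:
    """Hypothetical buggy engine: Python's // rounds toward negative infinity."""
    if b == 0:
        raise ZeroDivisionError("integer divide by zero")
    if a == INT32_MIN and b == -1:
        raise OverflowError("integer overflow")
    return a // b


def run(impl, a: int, b: int):
    """Normalize an execution into a comparable outcome: a value or a trap."""
    try:
        return ("ok", impl(a, b))
    except (ZeroDivisionError, OverflowError) as exc:
        return ("trap", type(exc).__name__)


def differential_fuzz(trials: int, seed: int = 0):
    """Run both implementations on the same random inputs and collect mismatches."""
    rng = random.Random(seed)
    mismatches = []
    for _ in range(trials):
        a = rng.randint(INT32_MIN, INT32_MAX)
        b = rng.randint(-1000, 1000)  # small divisors make inexact quotients common
        ref, cand = run(div_s_reference, a, b), run(div_s_candidate, a, b)
        if ref != cand:
            mismatches.append((a, b, ref, cand))
    return mismatches
```

Every mismatch this loop reports has operands of opposite sign and a non-zero remainder. Those are exactly the inputs where rounding toward negative infinity and truncation toward zero diverge. Traps are compared as outcomes too, so a divergence in trapping behavior would also be flagged.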

Full Stack Web Development

Full Stack Web Development
Partial report in partial fulfilment of the requirements of the Degree of Bachelor of Technology in Computer Science and Engineering
By Charchit Kapoor (161207)
Under the supervision of Umesh Sharma, Product Manager (magicPin, Samast Technologies Pvt. Ltd.)
To the Department of Computer Science Engineering and Information Technology, Jaypee University of Information Technology, Waknaghat, Solan-173234, Himachal Pradesh

CERTIFICATE
Candidate Declaration
I declare that the work presented in this report, ‘Full Stack Web Development’, in partial fulfilment of the requirements for the award of the degree of Bachelor of Technology in Computer Science and Engineering, submitted in the Department of Computer Science and Engineering/Information Technology, Jaypee University of Information Technology, Waknaghat, is an authentic record of my work carried out from February 2020 to May 2020 under the supervision of Umesh Sharma (Product Manager, magicPin). The matter embodied in this report has not been submitted for the award of any other degree or diploma. It contains sufficient information to describe the various tasks I performed during the internship.
Charchit Kapoor, 161207

This is to certify that the above statement made by the candidate is true to the best of my knowledge. The report has been reviewed by company officials and audited according to the company guidelines.
Umesh Sharma
Product Manager, magicPin (Samast Technologies Private Ltd.)
Dated: May 29th, 2020

ACKNOWLEDGEMENT
I have put effort into this project. However, it would not have been possible without the kind support and help of many individuals and organisations. I would like to extend my sincere thanks to all of them.

International Journal of Progressive Research in Science and Engineering, Vol.2, No.8, August 2021

Web Application to Search and Rank Online Learning Resources from Various Sites
Keshav J¹, Venkat Kumar B M¹, Ananth N¹
¹Student, Department of Computer Engineering, Velammal Engineering College, Anna University, Chennai, Tamil Nadu, India.
Corresponding Author: [email protected]

Abstract: E-learning is becoming one of the most reliable and fastest ways to learn, and this trend will only continue to grow in the coming years. However, finding the right course on a desired topic is getting harder every day because of the sheer amount of resource material being uploaded to the internet. Currently, whenever people need to acquire a new skill or learn something new, they search different platforms for resources: YouTube for videos, Google for blogs, and Coursera/Udemy/edX for courses. As a result, finding the right resource to start learning takes too much time, and learners often have to try several resources before finding the right one. We plan to eliminate this with our website, where a single search query fetches the top videos, blogs, and courses for that query. Users will be able to rate the results, and subsequent search results will be ordered primarily by user ratings, allowing the most useful blogs, courses, and videos to rank higher. Additionally, the resources will have comments and tags, so that users can read a review before opening a tutorial.
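The abstract says results will be ordered primarily by user ratings. The sketch below shows one possible ordering under stated assumptions. It assumes each result carries a list of 1-5 star ratings and that ties on the average are broken by the number of ratings. The `Resource` type, its fields, and the tie-breaking rule are all hypothetical, because the excerpt does not specify the paper's ranking formula.

```python
from dataclasses import dataclass, field


@dataclass
class Resource:
    """A search result (hypothetical shape): a video, blog post, or course."""
    title: str
    kind: str  # "video", "blog", or "course"
    ratings: list[int] = field(default_factory=list)  # assumed 1-5 star ratings

    @property
    def average_rating(self) -> float:
        # Unrated resources score 0.0, so they sink below rated ones.
        return sum(self.ratings) / len(self.ratings) if self.ratings else 0.0


def rate(resource: Resource, stars: int) -> None:
    """Record one user rating; values outside 1-5 are rejected."""
    if not 1 <= stars <= 5:
        raise ValueError("rating must be between 1 and 5")
    resource.ratings.append(stars)


def rank(results: list[Resource]) -> list[Resource]:
    """Order results by average rating, then by how many users rated them."""
    return sorted(
        results,
        key=lambda r: (r.average_rating, len(r.ratings)),
        reverse=True,
    )
```

With a plain average, a single 5-star rating outranks a resource averaging 4.9 over many ratings. A production system might prefer a confidence-adjusted score such as a Bayesian average, a design choice the excerpt leaves open.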

AMP's Governance Model

Governance Update & Next Steps
AMP Contributor Summit - October 10, 2019
Tobie Langel (@tobie), bit.ly/ampgov-blog

AMP’s Governance Model
● The Technical Steering Committee (TSC)
● The Advisory Committee (AC)
● Working Groups (WGs)

Technical Steering Committee (TSC)
Sets AMP's technical & product direction based on the project guidelines.

TSC Members
● Chris Papazian, Pinterest - @cpapazian*
● David Strauss, Pantheon - @davidstrauss*
● Dima Voytenko, Google - @dvoytenko*
● Malte Ubl, Google - @cramforce*
● Paul Armstrong, Twitter - @paularmstrong
● Rudy Galfi, Google - @rudygalfi*
● Saulo Santos, Microsoft - @ssantosms*
* Present at the AMP Contributor Summit 2019

So… what has the TSC been up to?
● Set up the initial set of Working Groups.
● Clarified the contribution process (OWNERS/Reviewers/Collaborators).
● Formalized how cherry picks are handled.
● Asked for more formal regular updates from the Working Groups.

Working Groups (WGs)
Segments of the community with knowledge/interest in specific areas of AMP.

Working groups
● Access control and subscriptions WG - user-specific controlled access to AMP content.
● Ads WG - ads features and integrations in AMP.
● Analytics WG - analytics features and integrations in AMP.
● Stories WG - implements and improves AMP's story format.
● Performance WG - monitors and improves AMP's load and runtime perf.
● Runtime WG - AMP's core runtime (layout/rendering and data binding).
● AMP4Email WG - AMP4Email project.
● Validation & caching WG - AMP validator and features related to AMP caches.
● Viewers WG - ensures support for AMP viewers and for the amp-viewer project.
● Approvers WG - approves changes that have a significant impact on AMP's behavior or significant new features.
● Infrastructure WG - AMP's infrastructure, including building, testing and release.
● Code of Conduct WG - enforces AMP's CoC.
● UX & Accessibility WG - AMP's visual

Recent Enhancements of Node-RED for Rapid Development of Large Scale and Robust IoT Applications

Agenda
1. Introduction to Node-RED
2. Flow-based Programming in Node-RED
3. Recent Features of Node-RED
© Hitachi, Ltd. 2019. All rights reserved.

What is Node-RED?
OSS Visual Programming Tool for IoT Application Development
● Originally developed by IBM; currently a project under the OpenJS Foundation.
● Runs on a broad range of computers, from small IoT devices to cloud environments.
● Enables rapid application development by connecting a set of predefined nodes.

Growing Use of Node-RED
Node-RED users have grown continuously since its introduction:
● Over 60K downloads/month in 2019
● 1.8M accumulated downloads in 2019
[Figure: NPM download statistics of Node-RED, Jan '15 to Jan '19; accumulated downloads (left axis, K downloads) and monthly downloads (right axis, K downloads)]

Production Use of Node-RED
Major IT companies are adopting Node-RED for their products/services in both edge and cloud environments.
[Figure: logos of companies using Node-RED in edge and cloud environments; names not recoverable]
In HITACHI:
● Node-RED is used in the "DevOps for IoT" application development environment of Lumada Solution Hub.

HITACHI's Contribution to Node-RED
● HITACHI started OSS activities on Node-RED in 2017.
● HITACHI added features to Node-RED and contributed them to the community (over 51,000 lines of code).
[Figure: lines of code in the Node-RED repository by contributor (HITACHI, IBM, Other), Jan-16 to Jan-19, marking when HITACHI started contributing]
● Contributor ranking on GitHub: 5 from HITACHI in the top 10.
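The slides describe building Node-RED applications by connecting predefined nodes: each node receives a message, processes it, and passes it on. Node-RED itself is a JavaScript runtime with a browser-based flow editor. The Python sketch below only mimics that flow-based idea. Messages are dicts carrying a `payload` key, following Node-RED's `msg.payload` convention. The node helpers are hypothetical and only loosely modeled on Node-RED nodes. A node that returns `None` stops the message, much as a Node-RED function node that returns `null` does.

```python
from typing import Callable, Optional

# A message is a dict with a "payload" key, mirroring Node-RED's msg.payload.
Message = dict
Node = Callable[[Message], Optional[Message]]


def inject(value) -> Message:
    """Create the initial message, like an inject node firing once."""
    return {"payload": value}


def function_node(transform: Callable) -> Node:
    """Hypothetical helper: apply `transform` to the payload and pass the message on."""
    def node(msg: Message) -> Message:
        return {**msg, "payload": transform(msg["payload"])}
    return node


def filter_node(predicate: Callable) -> Node:
    """Hypothetical helper: forward the message only if `predicate(payload)` holds."""
    def node(msg: Message) -> Optional[Message]:
        return msg if predicate(msg["payload"]) else None
    return node


def run_flow(msg: Message, wires: list[Node]) -> Optional[Message]:
    """Pass a message through nodes wired in sequence; None ends the flow early."""
    for node in wires:
        msg = node(msg)
        if msg is None:
            return None
    return msg


# Example flow: convert a Celsius sensor reading to Fahrenheit, then only
# forward readings above 80 °F (e.g. on to an alerting node).
celsius_to_alert = [
    function_node(lambda c: c * 9 / 5 + 32),
    filter_node(lambda f: f > 80),
]
```

Real Node-RED flows are wired as a graph in which a node may have several outputs. The linear chain here keeps the sketch short.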

Investigating Reason as a Substitute for JavaScript

DEGREE PROJECT IN COMPUTER ENGINEERING, FIRST CYCLE, 15 CREDITS
STOCKHOLM, SWEDEN 2020
Investigating Reason as a substitute for JavaScript
AXEL PETTERSSON
KTH ROYAL INSTITUTE OF TECHNOLOGY
SCHOOL OF ELECTRICAL ENGINEERING AND COMPUTER SCIENCE

Investigation of Reason as a substitute for JavaScript
AXEL PETTERSSON
Degree Programme in Information and Communication Technology
Date: June 4, 2020
Supervisor: Thomas Sjöland
Examiner: Johan Montelius
School of Electrical Engineering and Computer Science
Host company: Slagkryssaren AB
Swedish title: Undersökning av Reason som ett substitut till JavaScript
© 2020 Axel Pettersson

Abstract
In recent years, JavaScript has become one of the most widely used programming languages for developing many kinds of applications. Although it has received a lot of praise for its simplicity, versatility, and highly active community, it lacks some features that many programmers value highly. These include static and strict typing, compile-time debugging, and access to crucial functionality without relying on third-party libraries. Several new languages built on top of JavaScript have been developed to resolve these issues without losing the benefits that come with JavaScript. One of these superset languages is Reason, the new syntax and toolchain powered by the OCaml compiler. This thesis examines whether there are scenarios where Reason could act as a reasonable substitute for JavaScript by investigating how the two languages compare against a set of criteria. The criteria examined are writability, data structures and typing, reliability and testing, community support, market demand, portability, and performance.

Project Evaluation Report

Project Title: SMART-bridge-to-WEB
School/Department: Design, Creative and Digital Industries/Computer Science and Engineering
Project Team: Wesam Makadicy, Tomasz Wasowski, Marius Ignat, Nasro Abdi Hassan Sharif, Adam Ahmed-Keyte, Alexander Bolotov, Gabriele Pierantoni, David Chan You Fee

Abstract
An analysis of the node view of the SMARTEST website [1] was conducted to determine which features should be transferred to SMART-bridge-to-WEB (SB2W). Several prototypes were then created from the requirements identified during the analysis until a final master prototype was developed. This prototype served as the reference for the project's implementation, which can be viewed at [2]. The report concludes by reflecting on our teamwork, how the project went, and what we will do next with the project.

Introduction
This project aims to develop a web-based application that adds functionality to the existing knowledge and learning platform SMARTEST [1]. As it currently stands, the platform enables interactions between students and academics and represents learning content graphically in the form of module structures and learning processes. It has attracted more than 300 registered student users, both from the University of Westminster (UoW) and from various groups of students at our partner university in Tashkent, Westminster International University in Tashkent (WIUT). This is because it offers a more interactive interface for building learning content in the form of graphs and learning paths [3], which other existing learning platforms such as Blackboard [4] do not. However, for many student users and academics, especially those outside STEM disciplines, representing learning content as graphs may not be ideal, because it requires an understanding of the additional graph structure/concept.

OpenJS Foundation's Trademarks

The OpenJS Foundation’s Trademark Usage Guidelines can be found at https://trademark-usage.openjsf.org. The current version of this trademark list can be found at https://trademark-list.openjsf.org.

Word Marks
The OpenJS Foundation has the following registered trademarks in the United States and/or other countries:
● Appium ®
● Appiumconf ®
● ESLint ®
● Globalize ®
● jQuery ®
● jQuery Mobile ®
● jQuery UI ®
● JS Foundation ®
● Lodash ®
● Moment.js ®
● Node-RED ®
● QUnit ®
● RequireJS ®
● Sizzle ®
● Sonarwhal ®
● Webpack ®
● Write Less, Do More ®

The OpenJS Foundation has registrations pending or trademarks in use for the following marks in the United States and/or other countries:
● Architect ™
● Chassis ™
● Dojo Toolkit ™
● Esprima ™
● Grunt ™
● HospitalRun ™
● Interledger.js ™
● Intern ™
● Jed ™
● JerryScript ™
● Marko ™
● Mocha ™
● OpenJS Foundation ™
● PEP ™
● WebdriverIO ™

Logo Marks
In addition, the OpenJS Foundation and its projects have visual logo trademarks in use, and you must comply with any visual guidelines or additional requirements that may be included on the OpenJS Foundation’s or the applicable project’s site.
The OpenJS Foundation has registered trademarks for the following logo marks in the United States and/or other countries:
● Appium Logo. US Reg. #5329685
● ESLint Logo. US Reg. #5376005
● Globalize Logo. US Reg. #5184729
● jQuery Logo. US Reg. #4764564
● jQuery Mobile Logo. US Reg. #4772527
● jQuery UI Logo. US Reg. #4772526
● JS Foundation Logo. US Reg. #5247752
● JS Foundation

2021 Event Sponsorship Prospectus

“We met some of the brightest and most talented attendees, and learned first-hand from industry leaders. The Linux events and sponsorship team made sure we had everything we needed for a successful event. We’re looking forward to more Linux Foundation events in the future!” -EMC

Table of Contents
Additional 2021 events will be added as dates are finalized.
About Linux Foundation Events, p. 3
Audience Snapshot, p. 4
Promotional Marketing Opportunities, p. 59

LINUX FOUNDATION EVENTS
MARCH
Open Networking & Edge Executive Forum | March 10-12, 2021 | Virtual, p. 6
MAY
KubeCon + CloudNativeCon Europe | May 4-7, 2021 | Virtual, p. 31
Cloud Native Rust Day | May 3, 2021 | Virtual, p. 33
PromCon | May 3, 2021 | Virtual, p. 34
Cloud Native Security Day | May 4, 2021 | Virtual, p. 35
Cloud Native Wasm Day | May 4, 2021 | Virtual, p. 36
Crossplane Community Day | May 4, 2021 | Virtual, p. 37
FluentCon
SEPTEMBER
KVM Forum | September 15-16, 2021 | Virtual, p. 8
Linux Plumbers Conference, p. 9

Is There a JavaScript Certification

Create a basic JS program that uses different data types and debug it in Chrome.
Node.js Certification: first impressions (DEV Community).
freeCodeCamp Certifications.
Top 7 Programming Language Certifications for Web.
Top 50 JavaScript Interview Questions and Answers for 2021.
Oracle Certification Success Stories.
10 Best JavaScript Certification Courses, Classes Online 2021.
OpenJS Foundation.

What Is Node.js? The JavaScript Runtime Explained | InfoWorld 3/21/21, 6:09 PM

What is Node.js? The JavaScript runtime explained
Node.js is a lean, fast, cross-platform JavaScript runtime environment that is useful for both servers and desktop applications.
By Martin Heller, Contributing Editor, InfoWorld, APR 6, 2020 3:00 AM PDT
https://www.infoworld.com/article/3210589/what-is-nodejs-javascript-runtime-explained.html

Scalability, latency, and throughput are key performance indicators for web servers. Keeping the latency low and the throughput high while scaling up and out is not easy. Node.js is a JavaScript runtime environment that achieves low latency and high throughput by taking a “non-blocking” approach to serving requests. In other words, Node.js wastes no time or resources on waiting for I/O requests to return.

In the traditional approach to creating web servers, for each incoming request or connection the server spawns a new thread of execution or even forks a new process to handle the request and send a response. Conceptually, this makes perfect sense, but in practice it incurs a great deal of overhead.

While spawning threads incurs less memory and CPU overhead than forking processes, it can still be inefficient. The presence of a large number of threads can cause a heavily loaded system to spend precious cycles on thread scheduling and context switching, which adds latency and imposes limits on scalability and throughput.
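The article contrasts Node.js's non-blocking model with thread-per-request servers. Node.js's own event loop is not shown here. As a stand-in, the sketch below uses Python's `asyncio` event loop, which follows the same idea: while one request awaits simulated I/O, the single thread serves the others. The `handle_request` and `serve` functions and the 0.1 s delay are assumptions for illustration, not part of the article.

```python
import asyncio
import threading
import time


async def handle_request(request_id: int, io_delay: float) -> tuple[int, int]:
    # Simulated I/O (e.g. a database or file read). Awaiting yields control to
    # the event loop instead of blocking the thread, so other requests proceed.
    await asyncio.sleep(io_delay)
    return request_id, threading.get_ident()


async def serve(n_requests: int, io_delay: float):
    """Handle all requests concurrently on one thread; return results and wall time."""
    start = time.perf_counter()
    results = await asyncio.gather(
        *(handle_request(i, io_delay) for i in range(n_requests))
    )
    return results, time.perf_counter() - start


if __name__ == "__main__":
    results, elapsed = asyncio.run(serve(100, 0.1))
    threads = {tid for _, tid in results}
    print(f"{len(results)} requests in {elapsed:.2f}s on {len(threads)} thread(s)")
```

With 100 requests that each wait 0.1 s, the batch should finish in roughly 0.1 s rather than the 10 s a sequential blocking server would need, and it does so on one thread. A thread-per-request server could overlap the waits too, but only by running up to 100 threads, with the scheduling and context-switching costs the article describes.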

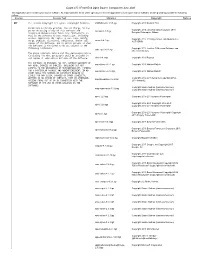

iCount 4.5.1 Front End Open Source Component June 2021

This application uses certain open source software. As required by the terms of the open source license applicable to such open source software, we are providing you with the following notices:

License: MIT

License text:
MIT License
Copyright (c) <year> <copyright holders>
Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:
The above copyright notice and this permission notice (including the next paragraph) shall be included in all copies or substantial portions of the Software.
THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

Libraries and copyright notices:
JSONStream-1.3.5.tgz: Copyright 2011 Dominic Tarr
accepts-1.3.7.tgz: Copyright 2014 Jonathan Ong; Copyright 2015 Douglas Christopher Wilson
acorn-6.4.1.tgz: Copyright 2012-2018 by various contributors (see AUTHORS)
adm-zip-0.4.16.tgz: Copyright 2012 Another-D-Mention Software and other contributors
after-0.8.2.tgz: Copyright 2011 Raynos
agent-base-4.2.1.tgz: Copyright 2013 Nathan Rajlich
agent-base-4.3.0.tgz: Copyright 2013 Nathan Rajlich
agentkeepalive-3.5.2.tgz: Copyright 2012-2015 fengmk2