DEPARTMENT of INFORMATICS Implementation of Volumetric Light

Total Page:16


Recommended publications


Volumetric Real-Time Smoke and Fog Effects in the Unity Game Engine

A Technical Report presented to the faculty of the School of Engineering and Applied Science, University of Virginia, by Jeffrey Wang, May 6, 2021. On my honor as a University student, I have neither given nor received unauthorized aid on this assignment as defined by the Honor Guidelines for Thesis-Related Assignments. Jeffrey Wang. Technical advisor: Luther Tychonievich, Department of Computer Science.

Abstract: Real-time smoke and fog volumetric effects were created in the Unity game engine by combining volumetric lighting systems and GPU particle systems. A variety of visual effects were created to demonstrate the features of these effects, which include light scattering, absorption, high particle count, and performant collision detection. The project was implemented by modifying the High Definition Render Pipeline and Visual Effect Graph packages for Unity.

1. Introduction: Digital media is constantly becoming more immersive, and our simulated depictions of reality are constantly becoming more realistic. This is thanks, in large part, to advances in computer graphics. Artists are constantly searching for ways to improve the complexity of their effects, depict more realistic phenomena, and impress their audiences, and they do so by improving the quality and speed of rendering, the algorithms that computers use to transform data into images (Jensen et al. 2010). There are two kinds of rendering: real-time and offline. Offline renders are used for movies and other video media. The rendering is done in advance by the computer, saved as images, and replayed later as a video to the audience.

The Design and Evolution of the UberBake Light Baking System

Dario Seyb∗, Dartmouth College; Peter-Pike Sloan∗, Activision Publishing; Ari Silvennoinen, Activision Publishing; Michał Iwanicki, Activision Publishing; Wojciech Jarosz, Dartmouth College.

Fig. 1 (panels: no door light, door light only, dynamic light set off, dynamic light set on, final image). Our system allows for player-driven lighting changes at run-time. Above we show a scene where a door is opened during gameplay. The image on the left shows the final lighting produced by our system as seen in the game. In the middle, we show the scene without the methods described here (top). Our system enables us to efficiently precompute the associated lighting change (bottom). This functionality is built on top of a dynamic light set system which allows for levels with hundreds of lights whose contribution to global illumination can be controlled individually at run-time (right). ©Activision Publishing, Inc.

CCS Concepts: • Computing methodologies → Ray tracing; Graphics systems and interfaces. Additional Key Words and Phrases: global illumination, baked lighting, real time systems. ACM Reference Format: Dario Seyb, Peter-Pike Sloan, Ari Silvennoinen, Michał Iwanicki, and Wojciech Jarosz.

Abstract: We describe the design and evolution of UberBake, a global illumination system developed by Activision, which supports limited lighting changes in response to certain player interactions. Instead of relying on a fully dynamic solution, we use a traditional static light baking pipeline and extend it with a small set of features that allow us to dynamically update the precomputed lighting at run-time with minimal performance and memory overhead. This means that our system works on the complete set of target hardware, ranging from high-end PCs to previous generation gaming consoles, allowing the

Fast Rendering of Irregular Grids

Cláudio T. Silva, Joseph S. B. Mitchell, Arie E. Kaufman. State University of New York at Stony Brook, Stony Brook, NY 11794.

Abstract: We propose a fast algorithm for rendering general irregular grids. Our method uses a sweep-plane approach to accelerate ray casting, and can handle disconnected and nonconvex (even with holes) unstructured irregular grids with a rendering cost that decreases as the “disconnectedness” decreases. The algorithm is carefully tailored to exploit spatial coherence even if the image resolution differs substantially from the object space resolution. In this paper, we establish the practicality of our method through experimental results based on our implementation, and we also provide theoretical results, both lower and upper bounds, on the complexity of ray casting of irregular grids.

Definitions and Terminology: A polyhedron is a closed subset of ℝ³ whose boundary consists of a finite collection of convex polygons (2-faces, or facets) whose union is a connected 2-manifold. The edges (1-faces) and vertices (0-faces) of a polyhedron are simply the edges and vertices of the polygonal facets. A convex polyhedron is called a polytope. A polytope having exactly four vertices (and four triangular facets) is called a simplex (tetrahedron). A finite set S of polyhedra forms a mesh (or an unstructured, irregular grid) if the intersection of any two polyhedra from S is either empty, a single common edge, a single common vertex, or a single common facet of the two polyhedra; such a set S is said to form a cell complex. The polyhedra of a mesh

3D Computer Graphics Compiled By: H

animation; Charge-coupled device; Charts on SO(3); chemistry; chirality; chromatic aberration; chrominance; Cinema 4D; cinematography; CinePaint; Circle; circumference; ClanLib; Class of the Titans; clean room design; Clifford algebra; Clip Mapping; Clipping (computer graphics); Clipping_(computer_graphics); Cocoa (API); CODE V; collinear; collision detection; color; color buffer; comic book; Comm. ACM; Command & Conquer: Tiberian series; Commutative operation; Compact disc; Comparison of Direct3D and OpenGL; compiler; Compiz; complement (set theory); complex analysis; complex number; complex polygon; Component Object Model; composite pattern; compositing; Compression artifacts; computation; Reverse; Catmull-Clark subdivision surface; computational fluid dynamics; computational geometry; Computational_geometry; computed axial tomography; Cel-shaded; Computed tomography; computer animation; Computer Aided Design; computer and video games; Cg programming language; Computer animation; computer cluster; computer display; computer file; computer game; computer games; computer generated image; computer graphics; Computer hardware; Computer History Museum; Computer keyboard; Computer mouse; computer program; Computer programming; computer science; computer software; computer storage; Computer-aided design; Computer-aided design#Capabilities; computer-aided manufacturing; computer-generated imagery; concave; cone (solid); Cone tracing; Conjugacy_class#Conjugacy_as_group_action; Clipmap; COLLADA; consortium; constraints; Constructive solid geometry; continuous function; contrast ratio; conversion between

Volumetric Fog Rendering Bachelor’s Thesis (9 ECT)

UNIVERSITY OF TARTU, Institute of Computer Science, Computer Science curriculum. Siim Raudsepp. Volumetric Fog Rendering. Bachelor’s thesis (9 ECT). Supervisor: Jaanus Jaggo, MSc. Tartu 2018.

Abstract: The aim of this bachelor’s thesis is to describe the physical behavior of fog in real life and the algorithm for implementing fog in computer graphics applications. An implementation of the volumetric fog algorithm written in the Unity game engine is also provided. The performance of the implementation is evaluated using benchmarks, including an analysis of the results. Additionally, some suggestions are made to improve the volumetric fog rendering in the future. Keywords: Computer graphics, fog, volumetrics, lighting. CERCS: P170: Computer science, numerical analysis, systems, control.

Summary (translated from Estonian; original title: Volumeetrilise udu renderdamine): The aim of this bachelor’s thesis is to describe the physical behavior of fog in nature and to devise an algorithm for implementing fog in computer graphics applications. As part of the thesis, an application that renders volumetric fog was built in the Unity game engine. The thesis evaluates the performance of this application and analyzes the results. It also makes suggestions for improving volumetric fog rendering in the future. Keywords: Computer graphics, fog, volumetrics, lighting.

Table of Contents: Introduction

Techniques for an Artistic Manipulation of Light, Signal and Material

Sarah El-Sherbiny∗, Vienna University of Technology.

Figure 1: Beginning from the left side, the images in the first column show an editing of the lighting. The scene was manipulated by painting with light, with automatic refinements and optimizations made by the system [Pellacini et al. 2007]. A result of volumetric lighting can be seen in the second column. The top image was generated by static emissive curve geometry, while the image at the bottom was created by animated beams that are shaded with volumetric effects [Nowrouzezahrai et al. 2011]. In the third column the shadows were modified. The user can set constraints and manipulate surface effects via drag and drop [Ritschel et al. 2010]. The images in the fourth column illustrate the editing of reflections. The original image was manipulated so that the reflected face is more visible on the ring [Ritschel et al. 2009]. The last images on the right show the editing of materials by making objects transparent and translucent [Khan et al. 2006].

Abstract: This report gives an outline of some methods that were used to enable an artistic editing. It describes the manipulation of indirect lighting, surface signals and materials of a scene. Surface signals include effects such as shadows, caustics, textures, reflections or refractions.

1 Introduction: Global illumination is important for getting more realistic images. It considers direct illumination that occurs when light falls directly from a light source on a surface. Indirect illumination, which occurs when rays get reflected on a surface, is also taken into account. In diffuse reflections the incoming ray gets reflected in many angles while in

Real Time Rendering of Atmospheric Scattering and Volumetric Shadows Venceslas Biri, Didier Arquès, Sylvain Michelin

To cite this version: Venceslas Biri, Didier Arquès, Sylvain Michelin. Real Time Rendering of Atmospheric Scattering and Volumetric Shadows. Journal of WSCG, University of West Bohemia, Czech Republic, 2006, 14 (1), pp.65-72. HAL Id: hal-00681568, https://hal-upec-upem.archives-ouvertes.fr/hal-00681568. Submitted on 21 Mar 2012.

Authors: Venceslas Biri, Didier Arquès, Sylvain Michelin. Charles Cros Institute, 6 bd du Danube, F-77700 Serris, France.

Abstract: Real time rendering of atmospheric light scattering is one of the most difficult lighting effects to achieve in computer graphics. This paper presents a new real time method that renders these effects, including volumetric shadows, and provides a great performance improvement over previous methods. Using an analytical expression of the light transport equation we are able to render directly the contribution of the participating medium on any surface.

Real-Time DVR Illumination Methods for Ultrasound Data

LiU-ITN-TEK-A--10/045--SE. Real-time DVR Illumination Methods for Ultrasound Data. Erik Sundén, 2010-06-07. Department of Science and Technology (Institutionen för teknik och naturvetenskap), Linköping University, SE-601 74 Norrköping, Sweden.

Thesis work carried out in scientific visualization at the Institute of Technology at Linköping University. Supervisor: Patric Ljung. Examiner: Karljohan Lundin Palmerius. Norrköping, 2010-06-07.

Copyright: The publishers will keep this document online on the Internet - or its possible replacement - for a considerable time from the date of publication barring exceptional circumstances. Access to the document implies permission for anyone to read, download, and print single copies for personal use, and to use it unchanged for non-commercial research and for teaching. A later transfer of copyright cannot revoke this permission. All other use of the document requires the author's consent. Technical and administrative measures are in place to guarantee authenticity, security, and accessibility. The author's moral rights include the right to be named as author to the extent required by good practice when the document is used as described above, and protection against the document being altered or presented in a form or context that is offensive to the author's literary or artistic reputation or distinctive character. For further information about Linköping University Electronic Press, see the publisher's home page: http://www.ep.liu.se/

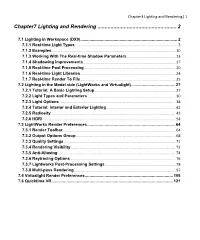

Lighting and Rendering

Chapter 7 Lighting and Rendering
7.1 Lighting in Workspace (DX9)
7.1.1 Real-time Light Types
7.1.2 Examples
7.1.3 Working With The Real-time Shadow Parameters
7.1.4 Shadowing improvements
7.1.5 Real-time Post Processing
7.1.6 Real-time Light Libraries
7.1.7 Real-time Render To File
7.2 Lighting in the Model side (LightWorks and Virtualight)
7.2.1 Tutorial: A Basic Lighting Setup
7.2.2 Light Types and Parameters
7.2.3 Light Options

Volume Rendering Simulation in Real-Time

Degree Project in Technology, Second Cycle, 30 Credits. Stockholm, Sweden 2020. Volume Rendering Simulation in Real-Time. KTH Thesis Report. Enrique Perez Soler. KTH Royal Institute of Technology, Electrical Engineering and Computer Science.

Author: Enrique Perez Soler, Information and Communication Technology, KTH Royal Institute of Technology. Place for Project: Stockholm, Sweden; Fatshark AB. Examiner: Elena Dubrova, KTH Royal Institute of Technology. Supervisor: Huanyu Wang, KTH Royal Institute of Technology. Industrial Supervisor: Axel Kinner, Fatshark AB.

Abstract: Computer graphics is a branch of Computer Science that specializes in the virtual representation of real-life objects on a computer screen. While past advancements in this field were commonly related to entertainment media industries such as video games and movies, they have since found uses in other fields. For instance, they have provided visual aids for medical exploration in healthcare and training simulations for military and defense. Ever since their first applications for data visualization, there has been a growth in the development of new technologies, methods and techniques to improve it. Today, Volumetric or Volume Rendering techniques are among the leading methods. These allow the display of 3-dimensional discretely sampled data in a 2-dimensional projection on the screen. This thesis provides a study on volumetric rendering through the implementation of a real-time simulation. It presents the various steps, prior knowledge and research required to program it. By studying the necessary mathematical concepts, including noise functions, flow maps and vector fields, an interactive effect can be applied to volumetric data.

Deep Volumetric Ambient Occlusion

Deep Volumetric Ambient Occlusion. Dominik Engel and Timo Ropinski. arXiv:2008.08345v2 [cs.CV] 16 Oct 2020. © 2020 IEEE. This is the author’s version of the article that has been published in IEEE Transactions on Visualization and Computer Graphics. The final version of this record is available at: 10.1109/TVCG.2020.3030344

Fig. 1 (panels: Without AO, Full Render with AO, AO only, Ground Truth AO): Volume rendering with volumetric ambient occlusion achieved through Deep Volumetric Ambient Occlusion (DVAO). DVAO uses a 3D convolutional encoder-decoder architecture to predict ambient occlusion volumes for a given combination of volume data and transfer function. While we introduce and compare several representation and injection strategies for capturing the transfer function information, the shown images result from preclassified injection based on an implicit representation.

Abstract: We present a novel deep learning based technique for volumetric ambient occlusion in the context of direct volume rendering. Our proposed Deep Volumetric Ambient Occlusion (DVAO) approach can predict per-voxel ambient occlusion in volumetric data sets, while considering global information provided through the transfer function. The proposed neural network only needs to be executed upon change of this global information, and thus supports real-time volume interaction. Accordingly, we demonstrate DVAO’s ability to predict volumetric ambient occlusion, such that it can be applied interactively within direct volume rendering. To achieve the best possible results, we propose and analyze a variety of transfer function representations and injection strategies for deep neural networks. Based on the obtained results we also give recommendations applicable in similar volume learning scenarios.

3D Animation-3D Lighting & Rendering (Practical)

DMA-04 3D Animation, Block IV: 3D Lighting & Rendering (Practical). Odisha State Open University.

This course has been developed with the support of the Commonwealth of Learning (COL). COL is an intergovernmental organisation created by Commonwealth Heads of Government to promote the development and sharing of open learning and distance education knowledge, resources and technologies. Odisha State Open University, Sambalpur (OSOU) is the first Open and Distance learning institution in the State of Odisha, where students can pursue their studies through Open and Distance Learning (ODL) methodologies. Degrees, Diplomas, or Certificates awarded by OSOU are treated as equivalent to the degrees, diplomas, or certificates awarded by other national universities in India by the University Grants Commission.

© 2018 by the Commonwealth of Learning and Odisha State Open University. Except where otherwise noted, 3D Animation is made available under Creative Commons Attribution-ShareAlike 4.0 International (CC BY-SA 4.0) License: https://creativecommons.org/licenses/by-sa/4.0/legalcode For the avoidance of doubt, by applying this license the Commonwealth of Learning does not waive any privileges or immunities from claims that it may be entitled to assert, nor does the Commonwealth of Learning submit itself to the jurisdiction, courts, legal processes or laws of any jurisdiction. The ideas and opinions expressed in this publication are those of the author/s; they are not necessarily those of Commonwealth of Learning and do not commit the organisation.

Odisha State Open University, G.M. University Campus, Sambalpur, Odisha, India. Fax: +91-0663-252 17 00. Website: www.osou.ac.in

Commonwealth of Learning, 4710 Kingsway, Suite 2500, Burnaby, V5H 4M2, British Columbia, Canada. Fax: +1 604 775 8210. Website: www.col.org

Acknowledgements: The Odisha State Open University and COL, Canada wish to thank the Resource Persons below for their contribution to this DMA-04: Concept / Advisor Dr.