Declare Input Source SteamVR

Total pages: 16; file type: PDF; size: 1020 KB


Recommended publications


009NAG – September 2012

South Africa's leading gaming, computer & technology magazine, Vol. 15, Issue 6. Cover lines: Borderlands 2 competition (stuff you can't buy anywhere!); PC / PlayStation / Xbox / Nintendo; previews of Sleeping Dogs, Beyond: Two Souls, Pikmin 3 and Injustice: Gods Among Us; Enemy Unknown ("Is that a plasma rifle in your pocket, or are you just happy to see me?"); Ultimate gaming lounge (what your lounge should look like).

Staff: editor Michael "RedTide" James; assistant editor Geoff "GeometriX" Burrows; staff writer Dane "Barkskin" Remendes; contributing editor Lauren "Guardi3n" Das Neves; technical writer Neo "ShockG" Sibeko; international correspondent Miktar "Miktar" Dracon; contributors Rodain "Nandrew" Joubert, Walt "Ramjet" Pretorius, Miklós "Mikit0707" Szecsei, Pippa "UnexpectedGirl" Tshabalala, Tarryn "Azimuth" Van Der Byl and Adam "Madman" Liebman; art director Chris "SAVAGE" Savides; photography Chris "SAVAGE" Savides, Dreamstime.com, Fotolia.com.

Contents. Regulars: 10 Ed's Note; 12 Inbox; 16 Bytes. Opinion: 16 I, Gamer; 18 The Game Stalker; 20 The Indie Investigator; 22 Miktar's Meanderings; 83 Hardwired; 98 Game Over. Features: 30 Top 8 holy sh*t moments in gaming: throughout gaming's relatively short history, we've been treated to a number of moments that very nearly made our minds explode out the back of our heads. Find out what those are. 32 The ultimate gaming lounge: tired of your boring, traditional lounge filled with boring, traditional lounge stuff? Then read this! 36 Reader U: the results of our recent reader survey have been tallied and weighed by humans better at mathematics and number-y stuff than we pretend to be! We'd like to share some of the less top-secret results with you. Previews: 44 Sleeping Dogs; 46 Injustice: Gods Among Us; 48 Beyond: Two Souls; 50 Pikmin 3; 52 The Cave. Reviews: 60 Reviews: Introduction; 61 Short Reviews: Death Rally / Deadlight; 62 The Secret World.

Slang: Language Mechanisms for Extensible Real-Time Shading Systems

Slang: language mechanisms for extensible real-time shading systems. Yong He, Carnegie Mellon University; Kayvon Fatahalian, Stanford University; Tim Foley, NVIDIA.

Designers of real-time rendering engines must balance the conflicting goals of maintaining clear, extensible shading systems and achieving high rendering performance. In response, engine architects have established effective design patterns for authoring shading systems, and developed engine-specific code synthesis tools, ranging from preprocessor hacking to domain-specific shading languages, to productively implement these patterns. The problem is that proprietary tools add significant complexity to modern engines, lack advanced language features, and create additional challenges for learning and adoption. We argue that the advantages of engine-specific code generation tools can be achieved using the underlying GPU shading language directly, provided the shading language is extended with a small number of best-practice principles from modern, well-established programming languages. We identify that adding generics with interface constraints, associated types, and interface/structure extensions to existing C-like GPU shading languages enables real-time renderer developers to build shading systems that are …

… GPU efficiently, and minimizing CPU overhead using the new parameter binding model offered by the modern Direct3D 12 and Vulkan graphics APIs. To help navigate the tension between performance and maintainable/extensible code, engine architects have established effective design patterns for authoring shading systems, and developed code synthesis tools, ranging from preprocessor hacking, to metaprogramming, to engine-proprietary domain-specific languages (DSLs) [Tatarchuk and Tchou 2017], for implementing these patterns. For example, the idea of shader components [He et al. 2017] was recently presented as a pattern for achieving both high rendering performance and maintainable code structure when specializing shader code to coarse-grained features such as a surface material pattern or a tessellation effect.
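The abstract's key mechanism, generics with interface constraints, can be illustrated outside a shading language. The sketch below is only an analogy in Python, not Slang syntax, and the `Material` interface, the `Lambertian` and `Tinted` types, and `shade` are hypothetical names. The bound on the type variable plays the role of an interface constraint: a static type checker such as mypy rejects a call to `shade` with a type that lacks `albedo()`. Unlike Slang, Python does not specialize the function per type at compile time, and associated types are not shown.

```python
from dataclasses import dataclass
from typing import Protocol, TypeVar


class Material(Protocol):
    """Interface (hypothetical) that every surface material must provide."""

    def albedo(self) -> float: ...


@dataclass
class Lambertian:
    base_color: float

    def albedo(self) -> float:
        return self.base_color


@dataclass
class Tinted:
    base_color: float
    tint: float

    def albedo(self) -> float:
        return self.base_color * self.tint


# M ranges over any type that conforms to Material (the "interface constraint").
M = TypeVar("M", bound=Material)


def shade(material: M, n_dot_l: float) -> float:
    """Diffuse term written once and reused for every conforming material."""
    return material.albedo() * max(n_dot_l, 0.0)


print(shade(Lambertian(0.8), 0.5))
print(shade(Tinted(0.8, 0.5), 1.0))
```

The point of the pattern is that `shade` is written once against the interface, and new materials plug in without editing it.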

Volumetric Real-Time Smoke and Fog Effects in the Unity Game Engine

A technical report presented to the faculty of the School of Engineering and Applied Science, University of Virginia, by Jeffrey Wang, May 6, 2021. On my honor as a University student, I have neither given nor received unauthorized aid on this assignment as defined by the Honor Guidelines for Thesis-Related Assignments. Jeffrey Wang. Technical advisor: Luther Tychonievich, Department of Computer Science.

Abstract: Real-time smoke and fog volumetric effects were created in the Unity game engine by combining volumetric lighting systems and GPU particle systems. A variety of visual effects were created to demonstrate the features of these effects, which include light scattering, absorption, high particle count, and performant collision detection. The project was implemented by modifying the High Definition Render Pipeline and Visual Effect Graph packages for Unity.

1. Introduction: Digital media is constantly becoming more immersive, and our simulated depictions of reality are constantly becoming more realistic. This is thanks, in large part, to advances in computer graphics. Artists are constantly searching for ways to increase the complexity of their effects, depict more realistic phenomena, and impress their audiences, and they do so by improving the quality and speed of rendering, the algorithms that computers use to transform data into images (Jensen et al. 2010). There are two breeds of rendering: real-time and offline. Offline renders are used for movies and other video media: the rendering is done in advance by the computer, saved as images, and replayed later as a video to the audience.

Create an Endless Running Game in Unity

Zhang Yancan. Create an Endless Running Game in Unity. Bachelor's thesis, Information Technology, May 2016.

Description. Date of the bachelor's thesis: 2/Dec/2016. Author: Zhang Yancan. Degree programme and option: Information Technology. Name of the bachelor's thesis: Create an Endless Running Game in Unity.

The fundamental purpose of the study is to explore how to create a game with the Unity3D game engine. Another aim is to become familiar with the basic processes of making a game. By the end of the study, all the research objectives were achieved. In this study, the researcher first studied the theoretical frameworks of game engines, focusing mainly on the Unity3D game engine, and then applied the theoretical knowledge in practice. The project conducted during the research was an endless running game in which players earn points by continuing to move along the ground and collecting the coins that appear during the game. In addition, players need to dodge enemies and watch out for gaps that emerge in the ground. The outcomes of the study have accomplished the research purposes: the game functions well during gameplay, as the researcher expected, and all functions are displayed in the game.

Subject headings (keywords): Unity3D, endless running game, C#. Pages: 34. Language: English. Tutor: Reijo Vuohelainen. Bachelor's thesis assigned by: Mikkeli University of Applied Sciences.

Contents: 1 Introduction; 2 Theoretical background of game design; 2.1 Game strategy design; 2.2 Game balance …

Experiments on Flow and Learning in Games: Creating Services to Support Efficient Serious Games Development

Citation for published version (APA): Pranantha Dolar, D. (2015). Experiments on flow and learning in games: creating services to support efficient serious games development. Technische Universiteit Eindhoven. https://doi.org/10.6100/IR783192

DOI: 10.6100/IR783192. Document status and date: Published: 01/01/2015. Document version: Publisher's PDF, also known as Version of Record (includes final page, issue and volume numbers).

Please check the document version of this publication:
• A submitted manuscript is the version of the article upon submission and before peer review. There can be important differences between the submitted version and the official published version of record. People interested in the research are advised to contact the author for the final version of the publication, or visit the DOI to the publisher's website.
• The final author version and the galley proof are versions of the publication after peer review.
• The final published version features the final layout of the paper including the volume, issue and page numbers.

General rights: Copyright and moral rights for the publications made accessible in the public portal are retained by the authors and/or other copyright owners and it is a condition of accessing publications that users recognise and abide by the legal requirements associated with these rights.
• Users may download and print one copy of any publication from the public portal for the purpose of private study or research.
• You may not further distribute the material or use it for any profit-making activity or commercial gain.
• You may freely distribute the URL identifying the publication in the public portal.

The Design and Evolution of the UberBake Light Baking System

The design and evolution of the UberBake light baking system. Dario Seyb*, Dartmouth College; Peter-Pike Sloan*, Activision Publishing; Ari Silvennoinen, Activision Publishing; Michał Iwanicki, Activision Publishing; Wojciech Jarosz, Dartmouth College.

Fig. 1 panels: final image (left); no door light and door light only (middle); dynamic light set off and dynamic light set on (right).
Fig. 1. Our system allows for player-driven lighting changes at run-time. Above we show a scene where a door is opened during gameplay. The image on the left shows the final lighting produced by our system as seen in the game. In the middle, we show the scene without the methods described here (top). Our system enables us to efficiently precompute the associated lighting change (bottom). This functionality is built on top of a dynamic light set system, which allows for levels with hundreds of lights whose contribution to global illumination can be controlled individually at run-time (right). ©Activision Publishing, Inc.

We describe the design and evolution of UberBake, a global illumination system developed by Activision, which supports limited lighting changes in response to certain player interactions. Instead of relying on a fully dynamic solution, we use a traditional static light baking pipeline and extend it with a small set of features that allow us to dynamically update the precomputed lighting at run-time with minimal performance and memory overhead. This means that our system works on the complete set of target hardware, ranging from high-end PCs to previous generation gaming consoles, allowing the …

CCS Concepts: • Computing methodologies → Ray tracing; Graphics systems and interfaces.
Additional Key Words and Phrases: global illumination, baked lighting, real time systems.
ACM Reference Format: Dario Seyb, Peter-Pike Sloan, Ari Silvennoinen, Michał Iwanicki, and Wojciech Jarosz. …

Fast Rendering of Irregular Grids

Fast Rendering of Irregular Grids. Cláudio T. Silva, Joseph S. B. Mitchell, Arie E. Kaufman. State University of New York at Stony Brook, Stony Brook, NY 11794.

Abstract: We propose a fast algorithm for rendering general irregular grids. Our method uses a sweep-plane approach to accelerate ray casting, and can handle disconnected and nonconvex (even with holes) unstructured irregular grids with a rendering cost that decreases as the "disconnectedness" decreases. The algorithm is carefully tailored to exploit spatial coherence even if the image resolution differs substantially from the object space resolution. In this paper, we establish the practicality of our method through experimental results based on our implementation, and we also provide theoretical results, both lower and upper bounds, on the complexity of ray casting of irregular grids.

Definitions and Terminology: A polyhedron is a closed subset of ℝ³ whose boundary consists of a finite collection of convex polygons (2-faces, or facets) whose union is a connected 2-manifold. The edges (1-faces) and vertices (0-faces) of a polyhedron are simply the edges and vertices of the polygonal facets. A convex polyhedron is called a polytope. A polytope having exactly four vertices (and four triangular facets) is called a simplex (tetrahedron). A finite set S of polyhedra forms a mesh (or an unstructured, irregular grid) if the intersection of any two polyhedra from S is either empty, a single common edge, a single common vertex, or a single common facet of the two polyhedra; such a set S is said to form a cell complex. The polyhedra of a mesh …
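Under the mesh definition in this excerpt, two tetrahedral cells of a valid cell complex that meet in a facet share exactly that triangle. A common building block for traversing such grids (not necessarily part of the sweep-plane method described here) is a facet-to-cell adjacency map: facets owned by only one cell lie on the boundary, where rays enter or leave a nonconvex or disconnected mesh. The sketch below is purely combinatorial. It assumes cells are given as 4-tuples of vertex indices, does not validate geometry, and `facet_adjacency` and `boundary_facets` are hypothetical helper names.

```python
from collections import defaultdict
from itertools import combinations


def facet_adjacency(tets):
    """Map each triangular facet (sorted vertex triple) to the cells containing it.

    `tets` is a list of 4-tuples of vertex indices. In a valid cell complex
    each facet is owned by one cell (boundary) or two cells (interior).
    """
    owners = defaultdict(list)
    for cell, tet in enumerate(tets):
        # A tetrahedron has four triangular facets: every 3-subset of its vertices.
        for face in combinations(sorted(tet), 3):
            owners[face].append(cell)
    return dict(owners)


def boundary_facets(tets):
    """Facets owned by a single cell, i.e. where rays can enter or leave the mesh."""
    return sorted(f for f, cells in facet_adjacency(tets).items() if len(cells) == 1)


# Two tetrahedra glued along the facet (1, 2, 3).
tets = [(0, 1, 2, 3), (1, 2, 3, 4)]
print(facet_adjacency(tets)[(1, 2, 3)])  # [0, 1]
print(len(boundary_facets(tets)))        # 6
```

A facet owned by three or more cells would indicate that the input violates the cell-complex definition.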

Game Engines

Game Engines. Martin Samuelčík, VIS GRAVIS, s.r.o., http://www.sccg.sk/~samuelcik

Game Engine
• Software framework (set of tools, API)
• Creation of video games, interactive presentations, simulations, … (2D, 3D)
• Combining assets (models, sprites, textures, sounds, …) and programs, scripts
• Rapid-development tools (IDE, editors) vs. coding everything
• Deployment on many platforms: Win, Linux, Mac, Android, iOS, Web, PlayStation, Xbox, …

Game Engine
Assets → modeling, scripting, compiling → running compiled assets + scripts + engine

Game Engine
• Rendering engine
• Scripting engine
• User input engine
• Audio engine
• Networking engine
• AI engine
• Scene engine

Rendering Engine
• Creating the final picture on screen
• Many methods: rasterization, ray tracing, …
• For interactive applications, rendering one picture must take under 33 ms (30 FPS)
• Usually based on low-level APIs: GDI, SDL, OpenGL, DirectX, …
• Accelerated using hardware
• Graphical user interface, HUD

Scripting Engine
• Adding logic to objects in the scene
• Controlling animations, behaviors, artificial intelligence, state changes, graphics effects, GUI, audio execution, …
• Languages: C, C++, C#, Java, JavaScript, Python, Lua, …
• Central control of script executions – game consoles

User Input Engine
• Detecting input from devices
• Detecting actions or gestures
• Mouse, keyboard, multitouch display, gamepads, Kinect
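The rendering-engine rule of thumb "under 33 ms (30 FPS)" is the frame-time budget 1000 ms / 30 ≈ 33.3 ms. A minimal sketch of that arithmetic follows; the function names are hypothetical and the 60 and 90 FPS targets are only illustrative.

```python
def frame_budget_ms(target_fps: float) -> float:
    """Milliseconds available to produce one frame at `target_fps`."""
    if target_fps <= 0:
        raise ValueError("target_fps must be positive")
    return 1000.0 / target_fps


def meets_budget(frame_time_ms: float, target_fps: float) -> bool:
    """True if a measured frame time fits within the budget."""
    return frame_time_ms <= frame_budget_ms(target_fps)


for fps in (30, 60, 90):
    # Prints 33.3, 16.7 and 11.1 ms respectively.
    print(f"{fps} FPS -> {frame_budget_ms(fps):.1f} ms per frame")

print(meets_budget(25.0, 30))  # True: 25 ms < 33.3 ms
print(meets_budget(25.0, 60))  # False: 25 ms > 16.7 ms
```

Doubling the target frame rate halves the time every engine subsystem shares per frame, which is why rendering, scripting and input all compete for the same budget.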

3D Computer Graphics Compiled By: H

animation, Charge-coupled device, Charts on SO(3), chemistry, chirality, chromatic aberration, chrominance, Cinema 4D, cinematography, CinePaint, Circle, circumference, ClanLib, Class of the Titans, clean room design, Clifford algebra, Clip Mapping, Clipping (computer graphics), Clipping_(computer_graphics), Cocoa (API), CODE V, collinear, collision detection, color, color buffer, comic book, Comm. ACM, Command & Conquer: Tiberian series, Commutative operation, Compact disc, Comparison of Direct3D and OpenGL, compiler, Compiz, complement (set theory), complex analysis, complex number, complex polygon, Component Object Model, composite pattern, compositing, Compression artifacts, computation, computational fluid dynamics, computational geometry, Computational_geometry, computed axial tomography, Computed tomography, computer animation, Computer Aided Design, computer and video games, Computer animation, computer cluster, computer display, computer file, computer game, computer games, computer generated image, computer graphics, Computer hardware, Computer History Museum, Computer keyboard, Computer mouse, computer program, Computer programming, computer science, computer software, computer storage, Computer-aided design, Computer-aided design#Capabilities, computer-aided manufacturing, computer-generated imagery, concave, cone (solid), Cone tracing, Conjugacy_class#Conjugacy_as_group_action, consortium, constraints, Constructive solid geometry, continuous function, contrast ratio, conversion between …

Also listed: Reverse …, Catmull-Clark subdivision surface, Cel-shaded, Cg (programming language), Clipmap, COLLADA, Comparison of Direct3D and OpenGL.

Volumetric Fog Rendering Bachelor's Thesis (9 ECT)

University of Tartu, Institute of Computer Science, Computer Science curriculum. Siim Raudsepp. Volumetric Fog Rendering. Bachelor's thesis (9 ECT). Supervisor: Jaanus Jaggo, MSc. Tartu 2018.

Volumetric Fog Rendering. Abstract: The aim of this bachelor's thesis is to describe the physical behavior of fog in real life and the algorithm for implementing fog in computer graphics applications. An implementation of the volumetric fog algorithm written in the Unity game engine is also provided. The performance of the implementation is evaluated using benchmarks, including an analysis of the results. Additionally, some suggestions are made to improve volumetric fog rendering in the future. Keywords: computer graphics, fog, volumetrics, lighting. CERCS: P170: Computer science, numerical methods, systems, control (automatic control theory).

Volumeetrilise udu renderdamine (Volumetric fog rendering). Summary: The aim of this bachelor's thesis is to describe the physical behavior of fog in nature and to compose an algorithm for implementing fog in computer graphics applications. As part of the work, an application that renders volumetric fog was built in the Unity game engine. The thesis evaluates the performance of the application and analyzes the results. It also makes suggestions for improving volumetric fog rendering in the future. Keywords: computer graphics, fog, volumetrics, lighting. CERCS: P170: Computer science, numerical analysis, systems, control.

Table of Contents: Introduction …
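The excerpt does not show the thesis's algorithm, but volumetric fog renderers generally rest on the Beer–Lambert law, T = exp(-σ_t·d), for light attenuated over a distance d in a medium with extinction coefficient σ_t. The sketch below ray-marches one view ray under simplifying assumptions that are not taken from the thesis: constant in-scattered light, a single scattering albedo σ_s/σ_t, and a caller-supplied density function. `march_fog` and its parameters are hypothetical names.

```python
import math
from typing import Callable


def march_fog(
    distance: float,
    density: Callable[[float], float],
    sigma_t: float,
    albedo: float,
    light: float,
    background: float,
    steps: int = 64,
) -> tuple[float, float]:
    """Ray-march a participating medium along one view ray.

    Returns (radiance reaching the eye, transmittance of the whole segment).
    Assumes constant in-scattered light `light`, extinction
    sigma_t * density(t), and scattering albedo = sigma_s / sigma_t.
    """
    dt = distance / steps
    transmittance = 1.0
    radiance = 0.0
    for i in range(steps):
        t = (i + 0.5) * dt                   # sample density at the step midpoint
        extinction = sigma_t * density(t)
        step_t = math.exp(-extinction * dt)  # Beer-Lambert over one step
        # Light scattered toward the eye within this step, attenuated by
        # all the fog between the step and the eye.
        radiance += transmittance * (1.0 - step_t) * albedo * light
        transmittance *= step_t
    radiance += transmittance * background   # surface seen through the fog
    return radiance, transmittance


rad, trans = march_fog(20.0, lambda t: 1.0, sigma_t=0.1, albedo=1.0,
                       light=1.0, background=0.0)
print(rad, trans)
```

For uniform density the loop reproduces the closed form L = L_bg·T + albedo·L_light·(1 − T) with T = exp(-σ_t·d), which makes a convenient sanity check before plugging in a varying density.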

Techniques for an Artistic Manipulation of Light, Signal and Material

Techniques for an artistic manipulation of light, signal and material. Sarah El-Sherbiny*, Vienna University of Technology.

Figure 1: Starting from the left, the images in the first column show the editing of lighting: the scene was manipulated by painting with light, with automatic refinements and optimizations made by the system [Pellacini et al. 2007]. A result of volumetric lighting can be seen in the second column: the top image was generated by static emissive curve geometry, while the bottom image was created by animated beams that are shaded with volumetric effects [Nowrouzezahrai et al. 2011]. In the third column the shadows were modified; the user can set constraints and manipulate surface effects via drag and drop [Ritschel et al. 2010]. The images in the fourth column illustrate the editing of reflections: the original image was manipulated so that the reflected face becomes more visible on the ring [Ritschel et al. 2009]. The images on the far right show the editing of materials by making objects transparent and translucent [Khan et al. 2006].

Abstract: This report gives an outline of some methods that were used to enable artistic editing. It describes the manipulation of indirect lighting, surface signals and materials of a scene. Surface signals include effects such as shadows, caustics, textures, reflections or refractions.

1 Introduction: Global illumination is important for getting more realistic images. It considers direct illumination, which occurs when light falls directly from a light source onto a surface. Indirect illumination is also taken into account, when rays get reflected on a surface. In diffuse reflections the incoming ray gets reflected in many angles, while in …

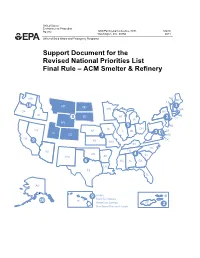

Final Listing Support Document

United States Environmental Protection Agency, Office of Solid Waste and Emergency Response, 1200 Pennsylvania Avenue, N.W., Washington, D.C. 20460. March 2011.

Support Document for the Revised National Priorities List Final Rule – ACM Smelter & Refinery. Site Assessment and Remedy Decisions Branch, Office of Superfund Remediation and Technology Innovation, Office of Solid Waste and Emergency Response, U.S. Environmental Protection Agency, Washington, DC 20460.

Table of Contents: Executive Summary; Introduction; Background of the NPL; Development of the NPL; Hazard Ranking System; Other Mechanisms for Listing; Organization of this Document.