Color Temperature Tuning: Allowing Accurate Post-Capture White-Balance Editing



Recommended publications


Color Theory for Painting Video: Color Perception

Color Theory For Painting Video: Color Perception • http://www.ted.com/talks/lang/eng/beau_lotto_optical_illusions_show_how_we_see.html • Experiment • http://www.youtube.com/watch?v=y8U0YPHxiFQ Intro to color theory • http://www.youtube.com/watch?v=059-0wrJpAU&feature=relmfu Color Theory Principles • The Color Wheel • Color context • Color Schemes • Color Applications and Effects The Color Wheel • A circular diagram displaying the spectrum of visible colors. The Color Wheel: Primary Colors • Primary Colors: Red, yellow and blue • In traditional color theory, primary colors cannot be mixed or formed by any combination of other colors. • All other colors are derived from these 3 hues. The Color Wheel: Secondary Colors • Secondary Colors: Green, orange and purple • These are the colors formed by mixing the primary colors. The Color Wheel: Tertiary Colors • Tertiary Colors: Yellow-orange, red-orange, red-purple, blue-purple, blue-green & yellow-green • These are the colors formed by mixing a primary and a secondary color. • Often have a two-word name, such as blue-green, red-violet, and yellow-orange. Color Context • How color behaves in relation to other colors and shapes is a complex area of color theory. Compare the contrast effects of different color backgrounds for the same red square. Color Context • Does your impression of the center square change based on the surround? Additive Colors • Additive: Mixing colored light Subtractive Colors • Subtractive: Mixing colored pigments Color Schemes • Formulas for creating visual unity [often called color harmony] using colors on the color wheel Basic Schemes • Analogous • Complementary • Triadic • Split complement Analogous: Color formula used to create color harmony through the selection of three related colors which are next to one another on the color wheel. -

Digital Refocusing with Incoherent Holography

Digital Refocusing with Incoherent Holography. Oliver Cossairt (Northwestern University, Evanston, IL, [email protected]), Nathan Matsuda (Northwestern University, Evanston, IL, [email protected]), Mohit Gupta (Columbia University, New York, NY, [email protected]). Abstract: Light field cameras allow us to digitally refocus a photograph after the time of capture. However, recording a light field requires either a significant loss in spatial resolution [10, 20, 9] or a large number of images to be captured [11]. In this paper, we propose incoherent holography for digital refocusing without loss of spatial resolution from only 3 captured images. The main idea is to capture 2D coherent holograms of the scene instead of the 4D light fields. The key properties of coherent light propagation are that the coherent spread function (hologram of a single point source) encodes scene depths and has a broadband spatial frequency response. [Second column, beginning mid-sentence:] ‘adding’ and ‘subtracting’ blur to the point spread functions (PSF) of different scene points, depending on their depth. In a conventional 2D image, while it is possible to add blur to a scene point’s PSF, it is not possible to subtract or remove blur without introducing artifacts in the image. This is because 2D imaging is an inherently lossy process; the angular information in the 4D light field is lost in a 2D image. Thus, typically, if digital refocusing is desired, 4D light fields are captured. Unfortunately, light field cameras sacrifice spatial resolution in order to capture the angular information. The loss in resolution can be significant, up to 1-2 orders of magnitude. While there have been attempts to improve resolution by using compressive sensing techniques [14], -

Thanks for Downloading the Sample Chapters

Thanks for Downloading the Sample Chapters Here are the chapters included. The Quick Start chapter, the first in the book, which identifies five quick ways to up the video and audio quality of your webinars and videoconferences. Chapter 5: Simple Lighting Techniques. The easiest way to significantly improve the quality of video produced by webcams and smartphones is to add lighting. You don’t have to spend a fortune; in fact, my go-to setup cost $30. You can read about this and more in this chapter. Chapter 9: Working With Audio on Android Devices. How to add and control a microphone on Android devices. Chapter 14: Working with Onstream Webinars. Audio and video adjustment controls available in Onstream Webinars. After the chapters, I’ve inserted the introduction to the book, and then the table of contents, so you can see what else is covered in the book. Thanks for having a look. Quick Start: Do This, Don’t Do That Figure a. Check your upload speed well in advance; don’t just pray for the best. This chapter contains highlights from various chapters in the book, both as a quick-start reference and as an introduction to the materials covered in the book. As you can see in Figure a, it’s better to check your outbound bandwidth with a tool called Speedtest than to simply pray that your bandwidth is sufficient. Chapter 1 has tables detailing the recommended bitrate for various conferencing and webinar applications, and other, related tips. Note that Speedtest is available as an app for both iOS and Android platforms, so you can check there as well. -

White Paper the Twilight Zone

White paper: The twilight zone …a journey through the magic realm of color spaces, gray shades, color temperatures, just noticeable differences and more. Goran Stojmenovik, Product Manager, Barco Control Rooms division. The whole story behind display specifications and human vision (2). Abstract: Our world and the displays representing it are not black and white, as someone might think when seeing a display spec consisting only of luminance and contrast. There is much more: there is a whole world of gray shades, color spaces, color temperatures, contrast sensitivities, just noticeable differences. Knowing what “brightness” and “luminance” are and being able to distinguish them, knowing what influences on-screen contrast of a display, and knowing how the eye adapts to changes in luminance levels – these are factors you should have learned from the first part of this white paper. Here, we will go into deeper waters of human vision, to explain when we perceive a display as a good display, and what are the requirements posed by the human visual system to create a good display. Display requirements and human vision. Gamma. [Sidebar: THE GAMMA DETERMINES THE BRIGHTNESS OF THE MID-GRAY SHADES] When we talk about brightness and contrast, we cannot skip the famous “gamma.” For one specified contrast, we know it’s the brightest white and darkest black that matter. But, what about all the in-between? This is the region of the gray shades. They are produced by tilting the liquid crystal molecules with a voltage (in LCD displays), or by modulating the amount of time a certain DMD mirror is on during one frame (in DLP projection displays). -

Kelvin Color Temperature

KELVIN COLOR TEMPERATURE William Thomson, Lord Kelvin, was a 19th century physicist and mathematician who invented a temperature scale that had absolute zero as its low endpoint. In physics, absolute zero is the coldest possible temperature, at which no heat exists and kinetic energy (movement) ceases. On the Celsius scale absolute zero is -273.15 degrees, and on the Fahrenheit scale it is -459.67 degrees. The Kelvin temperature scale is often used for scientific measurements. Color temperatures in kelvins, as the units are now called, are derived from the actual temperature of a black body radiator, which means a black material heated to that temperature. An incandescent filament is very dark, and approaches being a black body radiator, so the actual temperature of an incandescent filament is somewhat close to its color temperature in kelvins. The color temperature of a lamp is very important in the television industry, where the camera must be calibrated for white balance. This is often done by focusing the camera on a white card in the available lighting and adjusting it so that the card reads as true white. All other colors will automatically adjust so that they read properly. This is especially important to reproduce “normal” looking skin tones. In theatre applications, where it is only important for colors to read properly to the human eye, the exact color temperature of lamps is not so important. Incandescent lamps tend to have a color temperature around 3200 K, but this is true only if they are operating at full voltage. Remember that dimmers work by varying the voltage supplied to the lamp. -
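The relationship between the Kelvin, Celsius, and Fahrenheit scales mentioned in this excerpt can be sketched in a few lines of Python. The function names are illustrative and do not come from the excerpt; the offsets are the standard definitions of the scales.

```python
# Minimal sketch: converting a temperature in kelvins to Celsius and Fahrenheit.
# Function names are hypothetical, chosen for this illustration only.

def kelvin_to_celsius(kelvin: float) -> float:
    """Celsius is the Kelvin scale shifted by 273.15."""
    return kelvin - 273.15


def kelvin_to_fahrenheit(kelvin: float) -> float:
    """Convert via Celsius: F = C * 9/5 + 32."""
    return kelvin_to_celsius(kelvin) * 9.0 / 5.0 + 32.0


if __name__ == "__main__":
    # Absolute zero and a typical incandescent color temperature.
    for k in (0.0, 3200.0):
        print(f"{k:7.1f} K = {kelvin_to_celsius(k):9.2f} °C"
              f" = {kelvin_to_fahrenheit(k):9.2f} °F")
```

Note that a color temperature such as 3200 K describes the color of the light, so the conversion is meaningful only to the extent that the source behaves like a heated black body.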

Calculating Correlated Color Temperatures Across the Entire Gamut of Daylight and Skylight Chromaticities

Calculating correlated color temperatures across the entire gamut of daylight and skylight chromaticities. Javier Hernández-Andrés, Raymond L. Lee, Jr., and Javier Romero. Natural outdoor illumination daily undergoes large changes in its correlated color temperature (CCT), yet existing equations for calculating CCT from chromaticity coordinates span only part of this range. To improve both the gamut and accuracy of these CCT calculations, we use chromaticities calculated from our measurements of nearly 7000 daylight and skylight spectra to test an equation that accurately maps CIE 1931 chromaticities x and y into CCT. We extend the work of McCamy [Color Res. Appl. 12, 285–287 (1992)] by using a chromaticity epicenter for CCT and the inverse slope of the line that connects it to x and y. With two epicenters for different CCT ranges, our simple equation is accurate across wide chromaticity and CCT ranges (3000–10⁶ K) spanned by daylight and skylight. © 1999 Optical Society of America. OCIS codes: 010.1290, 330.1710, 330.1730. 1. Introduction: A colorimetric landmark often included in the Commission Internationale de l’Eclairage (CIE) 1931 chromaticity diagram is the locus of chromaticity coordinates defined by blackbody radiators (see Fig. 1, inset). One can calculate this Planckian (or blackbody) locus by colorimetrically integrating the Planck function at many different temperatures, with each temperature specifying a unique pair of 1931 x, y [second column, beginning mid-sentence:] term correlated color temperature (CCT) instead of color temperature to describe its appearance. Suppose that x1, y1 is the chromaticity of such an off-locus light source. By definition, the CCT of x1, y1 is the temperature of the Planckian radiator whose chromaticity is nearest to x1, y1. The colorimetric minimum-distance calculations that determine CCT must be done within the color space of the CIE 1960 uniformity chromaticity scale (UCS) diagram. -
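To make the "epicenter and inverse slope" idea concrete, here is a minimal Python sketch of McCamy's widely used cubic approximation, which this abstract says the authors extend. It is not the authors' two-epicenter equation, whose constants do not appear in the excerpt. The function name is illustrative, and the approximation is commonly quoted as reliable only for ordinary near-white sources, not the full range the paper targets.

```python
# Sketch of McCamy's cubic approximation for correlated color temperature.
# Assumption: the standard published constants (epicenter 0.3320, 0.1858).
# This is NOT the extended equation from the paper excerpted above.

def mccamy_cct(x: float, y: float) -> float:
    """Approximate CCT in kelvins from CIE 1931 chromaticity (x, y)."""
    # n is built from the inverse slope of the line joining the epicenter
    # to (x, y), using McCamy's sign convention.
    n = (x - 0.3320) / (0.1858 - y)
    return 449.0 * n**3 + 3525.0 * n**2 + 6823.3 * n + 5520.33


if __name__ == "__main__":
    # CIE standard illuminants as sanity checks.
    print("D65:", round(mccamy_cct(0.3127, 0.3290)), "K")
    print("A:  ", round(mccamy_cct(0.44757, 0.40745)), "K")
```

For D65 and illuminant A the results land close to their nominal values of roughly 6500 K and 2856 K, which is a quick way to check that the constants were typed correctly.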

Calculating Color Temperature and Illuminance Using the TAOS TCS3414CS Digital Color Sensor Contributed by Joe Smith February 27, 2009 Rev C

TAOS Inc. is now ams AG. The technical content of this TAOS document is still valid. Contact information: Headquarters: ams AG, Tobelbader Strasse 30, 8141 Premstaetten, Austria. Tel: +43 (0) 3136 500 0. e-Mail: [email protected]. Please visit our website at www.ams.com. NUMBER 25, INTELLIGENT OPTO SENSOR DESIGNER’S NOTEBOOK: Calculating Color Temperature and Illuminance using the TAOS TCS3414CS Digital Color Sensor, contributed by Joe Smith, February 27, 2009, Rev C. ABSTRACT: The Color Temperature and Illuminance of a broad band light source can be determined with the TAOS TCS3414CS red, green and blue digital color sensor with an IR blocking filter built into the package. This paper will examine Color Temperature and discuss how to calculate the Color Temperature and Illuminance of a given light source. Color Temperature information could be useful in feedback control and quality control systems. COLOR TEMPERATURE: Color temperature has long been used as a metric to characterize broad band light sources. It is a means to characterize the spectral properties of a near-white light source. Color temperature, measured in kelvins (K), refers to the temperature to which one would have to heat a blackbody (or Planckian) radiator to produce light of a particular color. A blackbody radiator is defined as a theoretical object that is a perfect radiator of visible light. As the blackbody radiator is heated it radiates energy first in the infrared spectrum and then in the visible spectrum as red, orange, white and finally bluish white light. Incandescent lights are good models of blackbody radiators, because most of the light emitted from them is due to the heating of their filaments. -
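The heating sequence described here (infrared first, then red, orange, white, and bluish white) can be illustrated with Wien's displacement law, which gives the wavelength at which an ideal blackbody's emission peaks. This is a hedged sketch rather than anything taken from the TAOS note: the function name is illustrative, and the constant is the standard Wien displacement constant.

```python
# Sketch: peak emission wavelength of an ideal blackbody via Wien's law.
# lambda_max = b / T, with b the Wien displacement constant.

WIEN_B_NM_K = 2.897771955e6  # Wien displacement constant in nm*K


def wien_peak_nm(temperature_k: float) -> float:
    """Wavelength (nm) of peak blackbody emission at the given temperature."""
    if temperature_k <= 0:
        raise ValueError("temperature must be positive (kelvins)")
    return WIEN_B_NM_K / temperature_k


if __name__ == "__main__":
    # As temperature rises, the peak moves from the infrared toward blue.
    for t in (1000.0, 3200.0, 5600.0, 6500.0, 10000.0):
        print(f"{t:8.0f} K -> peak near {wien_peak_nm(t):7.1f} nm")
```

The peak sits in the infrared at incandescent temperatures, even though the filament glows visibly, because the emission spectrum is broad; the peak only indicates where the emission is strongest.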

Color Temperature

Color Temperature. Color temperature is usually used to mean white balance, white point or a means of describing the color of white light. This is a very difficult concept to explain, because: ”Isn’t white always white?” The human brain is incredibly adept at quickly correcting for changes in the color temperature of light; many different kinds of light all seem “white” to us. When moving from a bright daylight environment to a room lit by a candle, all that will appear to change, to the naked eye, is the light level. Yet record these two situations shooting color film, digital photographs or with tape in an unbalanced camcorder and the outside images will have a bluish hue and the inside images will have a heavy orange cast. The brain quickly adjusts to the changes, making what is perceived as white appear white, whereas film, digital images and camcorders are balanced for one particular color and anything that deviates from this will produce a color cast. A GUIDE TO COLOR TEMPERATURE The color of light is measured by the Kelvin scale. This is a scientific temperature scale used to measure the exact temperature of objects. If you heat a carbon rod to 3,200 degrees, it glows orange. At 4,800 degrees it will glow a greenish color and at 5,600 degrees Kelvin it will begin to appear blue. But light itself has no heat; so for photography it is just a measurement of the hue of a specific type of light source. -

Got Good Color? Controlling Color Temperature in Imaging Software

Got Good Color? Controlling Color Temperature in Imaging Software. When you capture an image of a specimen, you want it to look like what you saw through the eyepieces. In this article we will cover the basic concepts of color management and what you can do to make sure what you capture is as close to what you see as possible. Get a Reference: Let’s define what you want to achieve: you want the image you produce on your computer screen or on the printed page to look like what you see through the eyepieces of your microscope. In order to maximize the match between these images, your system must be set up to use the same reference. White light is the primary reference that you have the most control over in your imaging system. It is your responsibility to set it properly at different points in your system. Many Colors of White: Although “White” sounds like a defined color, there are different shades of white. This happens because white light is made up of all wavelengths in the visible spectrum. True white light has an equal intensity at every wavelength. If any wavelength has a higher intensity than the rest, the light takes on a hue related to the dominant wavelength. A simple measure of the hue cast of white light is called “Color Temperature”. This comes from its basis in the glow of heated materials. In general, the lower the temperature (in kelvins, K = °C + 273) the redder the light; the higher the temperature, the bluer the light. One standard white in photography and photomicrography is 5000 K (D50), called daylight neutral white. -

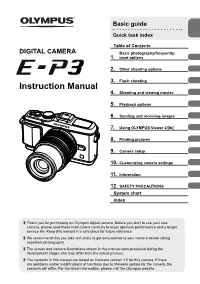

E-P3 Instruction Manual

DIGITAL CAMERA Instruction Manual. Basic guide • Quick task index • Table of Contents • 1. Basic photography/frequently-used options • 2. Other shooting options • 3. Flash shooting • 4. Shooting and viewing movies • 5. Playback options • 6. Sending and receiving images • 7. Using OLYMPUS Viewer 2/[ib] • 8. Printing pictures • 9. Camera setup • 10. Customizing camera settings • 11. Information • 12. SAFETY PRECAUTIONS • System chart • Index. Thank you for purchasing an Olympus digital camera. Before you start to use your new camera, please read these instructions carefully to enjoy optimum performance and a longer service life. Keep this manual in a safe place for future reference. We recommend that you take test shots to get accustomed to your camera before taking important photographs. The screen and camera illustrations shown in this manual were produced during the development stages and may differ from the actual product. The contents in this manual are based on firmware version 1.0 for this camera. If there are additions and/or modifications of functions due to firmware update for the camera, the contents will differ. For the latest information, please visit the Olympus website. Unpack the box contents: The following items are included with the camera. If anything is missing or damaged, contact the dealer from whom you purchased the camera. • Camera • Body cap • Strap • USB cable CB-USB6 • AV cable (Monaural) CB-AVC3 • Computer software CD-ROM • Instruction manual • Warranty card • Camera grip MCG-1 • Lithium ion battery PS-BLS1 (BLS-1) or BLS-5 • Lithium ion charger PS-BCS1 (BCS-1) or BCS-5. Attaching the strap: 1 Thread the strap in the direction of the […] 2 Lastly, pull the strap tight making sure […] Attaching the grip: Use a coin or similar object to tighten the screw. -

Color Control of LED Luminaires by Robert Bell

Color control of LED luminaires BY ROBERT BELL. Why it is not as easy as you might think. A bit about additive color mixing: WITH RECENT MASS ACCEPTANCE of solid-state LED lighting, it’s time for an explanation of this technology’s complexities and ways in which it can be tamed. LED luminaires use the output of multiple sources to achieve different colors and intensities. Additive color mixing is nothing new to our industry. We’ve done it for years on cycloramas with gelled luminaires hitting the same surface, but control can be tricky. The first intelligent luminaire I used was a spotlight that had three MR16 lamps, fitted with red, green, and blue filters. [Second column:] Another description is by hue, saturation, and luminance, HSL. (Some say “intensity” or “lightness” instead of “luminance.”) Equally valid is hue, saturation, and value, HSV. Value is sometimes referred to as brightness and is similar to luminance. However, saturation in HSL and HSV differ dramatically. For simplicity, I define hue as color and saturation as the amount of color. I also try to remember if “L” is set to 100%, that is white, 0% is black, and 50% is pure color when saturation is 100%. As for “V”, 0% is black and 100% is pure color, and the saturation value has to make up the difference. That oversimplifies it, but let’s carry on, as we’re not done yet. [Third column, beginning mid-sentence:] not create every color your eye can see. Below is a hypothetical locus of an RGB system rendered on the entire visible light spectrum. -
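The HSL and HSV rules of thumb in this excerpt can be checked with Python's standard `colorsys` module. This is a small sketch assuming a pure red hue (h = 0); the helper names are illustrative, and note that `colorsys` orders the HSL arguments as hue, lightness, saturation.

```python
# Sketch: checking the HSL vs. HSV rules of thumb with the standard library.
import colorsys


def hsl_to_rgb(h: float, s: float, l: float) -> tuple:
    """HSL to RGB, all components in 0..1 (colorsys uses H, L, S order)."""
    return tuple(round(c, 6) for c in colorsys.hls_to_rgb(h, l, s))


def hsv_to_rgb(h: float, s: float, v: float) -> tuple:
    """HSV to RGB, all components in 0..1."""
    return tuple(round(c, 6) for c in colorsys.hsv_to_rgb(h, s, v))


if __name__ == "__main__":
    red = 0.0  # hue for red
    # HSL at full saturation: L=100% is white, 0% is black, 50% is pure color.
    print("HSL L=1.0:", hsl_to_rgb(red, 1.0, 1.0))
    print("HSL L=0.0:", hsl_to_rgb(red, 1.0, 0.0))
    print("HSL L=0.5:", hsl_to_rgb(red, 1.0, 0.5))
    # HSV: V=0% is black, V=100% is pure color at full saturation,
    # and white is reached only by lowering saturation to 0.
    print("HSV V=0.0:", hsv_to_rgb(red, 1.0, 0.0))
    print("HSV V=1.0:", hsv_to_rgb(red, 1.0, 1.0))
    print("HSV S=0.0:", hsv_to_rgb(red, 0.0, 1.0))
```

The last HSV line shows what "the saturation value has to make up the difference" means in practice: in HSV, white comes from lowering saturation, whereas in HSL it comes from raising lightness.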

PRECISE COLOR COMMUNICATION COLOR CONTROL from PERCEPTION to INSTRUMENTATION Knowing Color

PRECISE COLOR COMMUNICATION: COLOR CONTROL FROM PERCEPTION TO INSTRUMENTATION. Knowing color. Knowing by color. In any environment, color attracts attention. An infinite number of colors surround us in our everyday lives. We all take color pretty much for granted, but it has a wide range of roles in our daily lives: not only does it influence our tastes in food and other purchases, the color of a person’s face can also tell us about that person’s health. Even though colors affect us so much and their importance continues to grow, our knowledge of color and its control is often insufficient, leading to a variety of problems in deciding product color or in business transactions involving color. Since judgement is often performed according to a person’s impression or experience, it is impossible for everyone to visually control color accurately using common, uniform standards. Is there a way in which we can express a given color* accurately, describe that color to another person, and have that person correctly reproduce the color we perceive? How can color communication between all fields of industry and study be performed smoothly? Clearly, we need more information and knowledge about color. *In this booklet, color will be used as referring to the color of an object. Contents, PART I • Why does an apple look red? • Human beings can perceive specific wavelengths as colors. • What color is this apple? • Two red balls. How would you describe the differences between their colors to someone? • Hue. Lightness. Saturation. The world of color is a mixture of these three attributes.