30 Orthogonal Subspaces



Recommended publications

-

The Grassmann Manifold

1. For vector spaces $V$ and $W$ denote by $L(V, W)$ the vector space of linear maps from $V$ to $W$. Thus $L(\mathbb{R}^k, \mathbb{R}^n)$ may be identified with the space $\mathbb{R}^{n \times k}$ of $n \times k$ matrices. An injective linear map $u: \mathbb{R}^k \to V$ is called a $k$-frame in $V$. The set

$GF_{k,n} = \{u \in L(\mathbb{R}^k, \mathbb{R}^n) : \operatorname{rank}(u) = k\}$

of $k$-frames in $\mathbb{R}^n$ is called the Stiefel manifold. Note that the special case $k = n$ is the general linear group:

$GL_k = \{a \in L(\mathbb{R}^k, \mathbb{R}^k) : \det(a) \neq 0\}.$

The set of all $k$-dimensional (vector) subspaces $\lambda \subset \mathbb{R}^n$ is called the Grassmann manifold of $k$-planes in $\mathbb{R}^n$ and denoted by $GR_{k,n}$ or sometimes $GR_{k,n}(\mathbb{R})$ or $GR_k(\mathbb{R}^n)$. Let

$\pi: GF_{k,n} \to GR_{k,n}, \quad \pi(u) = u(\mathbb{R}^k)$

denote the map which assigns to each $k$-frame $u$ the subspace $u(\mathbb{R}^k)$ it spans. For $\lambda \in GR_{k,n}$ the fiber (preimage) $\pi^{-1}(\lambda)$ consists of those $k$-frames which form a basis for the subspace $\lambda$, i.e. for any $u \in \pi^{-1}(\lambda)$ we have

$\pi^{-1}(\lambda) = \{u \circ a : a \in GL_k\}.$

Hence we can (and will) view $GR_{k,n}$ as the orbit space of the group action

$GF_{k,n} \times GL_k \to GF_{k,n} : (u, a) \mapsto u \circ a.$

The exercises below will prove the following

Theorem 2. The Stiefel manifold $GF_{k,n}$ is an open subset of the set $\mathbb{R}^{n \times k}$ of all $n \times k$ matrices. There is a unique differentiable structure on the Grassmann manifold $GR_{k,n}$ such that the map $\pi$ is a submersion.
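A small exact-arithmetic check of the statement that $\pi(u \circ a) = \pi(u)$ for every $a \in GL_k$. This is a sketch under our own choices, not part of the notes: a frame $u$ is stored as an $n \times k$ list of rows, and the hypothetical helper `plane_label` labels the subspace $\pi(u)$ by the reduced row echelon form of $u^T$. The rows of $u^T$ span the same subspace as the columns of $u$, and right-multiplying $u$ by an invertible $a$ only applies invertible row operations to $u^T$, so the label should not change.

```python
from fractions import Fraction


def rref(rows):
    """Reduced row echelon form over the rationals (exact arithmetic)."""
    m = [[Fraction(x) for x in row] for row in rows]
    r = 0  # index of the next pivot row
    for c in range(len(m[0]) if m else 0):
        # Pick a row at or below r with a nonzero entry in column c.
        p = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if p is None:
            continue
        m[r], m[p] = m[p], m[r]
        piv = m[r][c]
        m[r] = [x / piv for x in m[r]]
        # Clear column c in every other row.
        for i in range(len(m)):
            if i != r and m[i][c] != 0:
                f = m[i][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        r += 1
    return m


def transpose(a):
    return [list(col) for col in zip(*a)]


def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def plane_label(u):
    """Canonical label of pi(u) = u(R^k) for a full-rank n x k matrix u:
    the RREF of u^T, whose row space is the column space of u."""
    return rref(transpose(u))


# A 2-frame in R^3 (a 3 x 2 matrix of rank 2) and an element of GL_2 (det = 1).
u = [[1, 0], [0, 1], [1, 1]]
a = [[2, 1], [1, 1]]
print(plane_label(u) == plane_label(matmul(u, a)))  # True: same 2-plane
```

A frame spanning a different plane, such as the columns of `[[1, 0], [0, 1], [0, 0]]`, gets a different label, which is what makes the RREF usable as a coordinate-free name for a point of $GR_{k,n}$.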

2 Hilbert Spaces

• A complex Hilbert space $H$ is a complete normed space over $\mathbb{C}$ whose norm is derived from an inner product. That is, we assume that there is a sesquilinear form $(\cdot, \cdot): H \times H \to \mathbb{C}$, linear in the first variable and conjugate linear in the second, such that

$(f, g) = \overline{(g, f)}, \quad (f, f) \ge 0 \ \forall f \in H, \quad \text{and} \quad (f, f) = 0 \Rightarrow f = 0.$

The norm and inner product are related by $(f, f) = \|f\|^2$. We will always assume that $H$ is separable (has a countable dense subset).

You should have seen some examples last semester. The simplest (finite-dimensional) example is $\mathbb{C}^n$ with its standard inner product. It's worth recalling from linear algebra that if $V$ is an $n$-dimensional (complex) vector space, then from any set of $n$ linearly independent vectors we can manufacture an orthonormal basis $e_1, e_2, \dots, e_n$ using the Gram-Schmidt process. In terms of this basis we can write any $v \in V$ in the form

$v = \sum_i a_i e_i, \quad a_i = (v, e_i),$

which can be derived by taking the inner product of the equation $v = \sum_i a_i e_i$ with $e_i$. We also have

$\|v\|^2 = \sum_{i=1}^n |a_i|^2.$

• Standard infinite-dimensional examples are $l^2(\mathbb{N})$ or $l^2(\mathbb{Z})$, the space of square-summable sequences, and $L^2(\Omega)$ where $\Omega$ is a measurable subset of $\mathbb{R}^n$.
• As usual for a normed space, the distance on $H$ is given by $d(f, g) = \|f - g\| = \sqrt{(f - g, f - g)}$.
• The Cauchy-Schwarz and triangle inequalities,

$|(f, g)| \le \|f\| \, \|g\|, \quad \|f + g\| \le \|f\| + \|g\|,$

can be derived fairly easily from the inner product.

2.1 Orthogonality
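The finite-dimensional recipe recalled in this section (Gram-Schmidt, then $a_i = (v, e_i)$ and $\|v\|^2 = \sum_i |a_i|^2$) can be checked numerically in $\mathbb{C}^3$. This is a minimal Python sketch under our own assumptions: vectors are plain lists of complex numbers, the inner product is the standard one on $\mathbb{C}^n$ (linear in the first slot, conjugate linear in the second, as in the definition above), and the helper names are ours.

```python
import math


def inner(f, g):
    """(f, g) = sum_i f_i * conj(g_i): linear in f, conjugate linear in g."""
    return sum(x * y.conjugate() for x, y in zip(f, g))


def norm(f):
    # (f, f) is real and nonnegative, so take the real part before the root.
    return math.sqrt(inner(f, f).real)


def gram_schmidt(vectors):
    """Orthonormalize linearly independent vectors of C^n."""
    basis = []
    for v in vectors:
        w = list(v)
        for e in basis:
            c = inner(w, e)  # component of w along e
            w = [wi - c * ei for wi, ei in zip(w, e)]
        n = norm(w)
        basis.append([wi / n for wi in w])
    return basis


E = gram_schmidt([[1, 1j, 0], [1, 0, 1], [0, 1, 1]])
v = [2, 1 - 1j, 3j]
a = [inner(v, e) for e in E]  # a_i = (v, e_i)
rebuilt = [sum(ai * e[k] for ai, e in zip(a, E)) for k in range(3)]
print(max(abs(x - y) for x, y in zip(v, rebuilt)))  # ~0: v = sum a_i e_i
print(sum(abs(ai) ** 2 for ai in a), norm(v) ** 2)  # both ~15
```

Results agree only up to floating-point rounding, so comparisons should use a tolerance rather than `==`.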

Orthogonal Complements (Revised Version)

Math 108A: May 19, 2010. John Douglas Moore

1 The dot product

You will recall that the dot product was discussed in earlier calculus courses. If $x = (x_1, \dots, x_n)$ and $y = (y_1, \dots, y_n)$ are elements of $\mathbb{R}^n$, we define their dot product by

$x \cdot y = x_1 y_1 + \cdots + x_n y_n.$

The dot product satisfies several key axioms:
1. it is symmetric: $x \cdot y = y \cdot x$;
2. it is bilinear: $(ax + x') \cdot y = a(x \cdot y) + x' \cdot y$;
3. and it is positive-definite: $x \cdot x \ge 0$ and $x \cdot x = 0$ if and only if $x = 0$.

The dot product is an example of an inner product on the vector space $V = \mathbb{R}^n$ over $\mathbb{R}$; inner products will be treated thoroughly in Chapter 6 of [1]. Recall that the length of an element $x \in \mathbb{R}^n$ is defined by

$|x| = \sqrt{x \cdot x}.$

Note that the length of an element $x \in \mathbb{R}^n$ is always nonnegative.

Cauchy-Schwarz Theorem. If $x \neq 0$ and $y \neq 0$, then

$-1 \le \dfrac{x \cdot y}{|x||y|} \le 1. \qquad (1)$

Sketch of proof: If $v$ is any element of $\mathbb{R}^n$, then $v \cdot v \ge 0$. Hence

$\big(x(y \cdot y) - y(x \cdot y)\big) \cdot \big(x(y \cdot y) - y(x \cdot y)\big) \ge 0.$

Expanding using the axioms for dot product yields

$(x \cdot x)(y \cdot y)^2 - 2(x \cdot y)^2(y \cdot y) + (x \cdot y)^2(y \cdot y) \ge 0$

or

$(x \cdot x)(y \cdot y)^2 \ge (x \cdot y)^2(y \cdot y).$

Dividing by $y \cdot y$, we obtain

$|x|^2|y|^2 \ge (x \cdot y)^2 \quad \text{or} \quad \dfrac{(x \cdot y)^2}{|x|^2|y|^2} \le 1,$

and (1) follows by taking the square root.
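A quick numerical companion to the proof sketch, in Python (our choice; the notes contain no code). With integer vectors everything is exact, so we can check that $v \cdot v$ for $v = x(y \cdot y) - y(x \cdot y)$ equals $(y \cdot y)\big[(x \cdot x)(y \cdot y) - (x \cdot y)^2\big]$, which is the expanded line divided through, and that the quotient in (1) lands in $[-1, 1]$. The function names are hypothetical, and this is a spot check on examples, not a proof.

```python
import math


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def length(x):
    return math.sqrt(dot(x, x))


def cosine(x, y):
    """The quotient in (1); requires x != 0 and y != 0."""
    return dot(x, y) / (length(x) * length(y))


def proof_vector_square(x, y):
    """v . v for v = x(y.y) - y(x.y), the vector used in the proof sketch."""
    yy, xy = dot(y, y), dot(x, y)
    v = [a * yy - b * xy for a, b in zip(x, y)]
    return dot(v, v)


x, y = [1, 2, 3], [4, -5, 6]
print(proof_vector_square(x, y))                             # 71918
print(dot(y, y) * (dot(x, x) * dot(y, y) - dot(x, y) ** 2))  # 71918
print(-1 <= cosine(x, y) <= 1)                               # True
```

For parallel vectors such as `[1, 2]` and `[2, 4]` the vector $v$ is zero, which is the equality case of the inequality.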

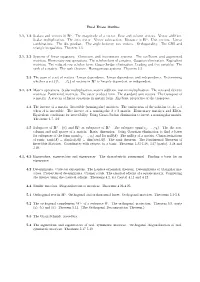

Final Exam Outline

1.1, 1.2 Scalars and vectors in $\mathbb{R}^n$. The magnitude of a vector. Row and column vectors. Vector addition. Scalar multiplication. The zero vector. Vector subtraction. Distance in $\mathbb{R}^n$. Unit vectors. Linear combinations. The dot product. The angle between two vectors. Orthogonality. The CBS and triangle inequalities. Theorem 1.5.
2.1, 2.2 Systems of linear equations. Consistent and inconsistent systems. The coefficient and augmented matrices. Elementary row operations. The echelon form of a matrix. Gaussian elimination. Equivalent matrices. The reduced row echelon form. Gauss-Jordan elimination. Leading and free variables. The rank of a matrix. The rank theorem. Homogeneous systems. Theorem 2.2.
2.3 The span of a set of vectors. Linear dependence. Linear dependence and independence. Determining whether a set $\{\vec v_1, \dots, \vec v_k\}$ of vectors in $\mathbb{R}^n$ is linearly dependent or independent.
3.1, 3.2 Matrix operations. Scalar multiplication, matrix addition, matrix multiplication. The zero and identity matrices. Partitioned matrices. The outer product form. The standard unit vectors. The transpose of a matrix. A system of linear equations in matrix form. Algebraic properties of the transpose.
3.3 The inverse of a matrix. Invertible (nonsingular) matrices. The uniqueness of the solution to $Ax = b$ when $A$ is invertible. The inverse of a nonsingular $2 \times 2$ matrix. Elementary matrices and EROs. Equivalent conditions for invertibility. Using Gauss-Jordan elimination to invert a nonsingular matrix. Theorems 3.7, 3.9.
3.5 Subspaces of $\mathbb{R}^n$. $\{0\}$ and $\mathbb{R}^n$ as subspaces of $\mathbb{R}^n$. The subspace $\operatorname{span}(v_1, \dots, v_k)$. The row, column and null spaces of a matrix.

LINEAR ALGEBRA FALL 2007/08 PROBLEM SET 9 SOLUTIONS

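MATH 110: LINEAR ALGEBRA FALL 2007/08 PROBLEM SET 9 SOLUTIONS

In the following $V$ will denote a finite-dimensional vector space over $\mathbb{R}$ that is also an inner product space with inner product denoted by $\langle \cdot, \cdot \rangle$. The norm induced by $\langle \cdot, \cdot \rangle$ will be denoted $\|\cdot\|$.

1. Let $S$ be a subset (not necessarily a subspace) of $V$. We define the orthogonal annihilator of $S$, denoted $S^\perp$, to be the set

$S^\perp = \{v \in V \mid \langle v, w \rangle = 0 \text{ for all } w \in S\}.$

(a) Show that $S^\perp$ is always a subspace of $V$.
Solution. First, $0 \in S^\perp$ since $\langle 0, w \rangle = 0$ for all $w$, so $S^\perp$ is nonempty. Let $v_1, v_2 \in S^\perp$. Then $\langle v_1, w \rangle = 0$ and $\langle v_2, w \rangle = 0$ for all $w \in S$. So for any $\alpha, \beta \in \mathbb{R}$,

$\langle \alpha v_1 + \beta v_2, w \rangle = \alpha \langle v_1, w \rangle + \beta \langle v_2, w \rangle = 0$

for all $w \in S$. Hence $\alpha v_1 + \beta v_2 \in S^\perp$.

(b) Show that $S \subseteq (S^\perp)^\perp$.
Solution. Let $w \in S$. For any $v \in S^\perp$, we have $\langle v, w \rangle = 0$ by definition of $S^\perp$. Since this is true for all $v \in S^\perp$ and $\langle w, v \rangle = \langle v, w \rangle$, we see that $\langle w, v \rangle = 0$ for all $v \in S^\perp$, i.e. $w \in (S^\perp)^\perp$.

(c) Show that $\operatorname{span}(S) \subseteq (S^\perp)^\perp$.
Solution. Since $(S^\perp)^\perp$ is a subspace by (a) (it is the orthogonal annihilator of $S^\perp$) and $S \subseteq (S^\perp)^\perp$ by (b), and $\operatorname{span}(S)$ is the smallest subspace containing $S$, we have $\operatorname{span}(S) \subseteq (S^\perp)^\perp$.

(d) Show that if $S_1$ and $S_2$ are subsets of $V$ and $S_1 \subseteq S_2$, then $S_2^\perp \subseteq S_1^\perp$.
Solution. Let $v \in S_2^\perp$. Then $\langle v, w \rangle = 0$ for all $w \in S_2$ and so for all $w \in S_1$ (since $S_1 \subseteq S_2$). Hence $v \in S_1^\perp$.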
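In coordinates, $S^\perp$ for a finite set $S \subset \mathbb{R}^n$ is the null space of the matrix whose rows are the vectors of $S$, so it can be computed by row reduction. The sketch below is ours, not part of the solutions: it assumes rational coordinates (so `Fraction` keeps the arithmetic exact) and the standard dot product as the inner product, and `annihilator_basis` is a hypothetical helper name. Applying it twice illustrates (c); in finite dimensions the inclusion in (c) is in fact an equality.

```python
from fractions import Fraction


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def annihilator_basis(S, n):
    """Basis of S^perp = {v in Q^n : <v, w> = 0 for all w in S}.

    S^perp is the null space of the matrix whose rows are the vectors of S,
    so we row-reduce that matrix and read off one basis vector per free column.
    """
    rows = [[Fraction(x) for x in w] for w in S]
    pivots = []  # pivots[i] = pivot column of reduced row i
    r = 0
    for c in range(n):
        p = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if p is None:
            continue
        rows[r], rows[p] = rows[p], rows[r]
        piv = rows[r][c]
        rows[r] = [x / piv for x in rows[r]]
        for i in range(len(rows)):
            if i != r and rows[i][c] != 0:
                f = rows[i][c]
                rows[i] = [a - f * b for a, b in zip(rows[i], rows[r])]
        pivots.append(c)
        r += 1
    basis = []
    for free in (c for c in range(n) if c not in pivots):
        v = [Fraction(0)] * n
        v[free] = Fraction(1)
        for i, pc in enumerate(pivots):
            v[pc] = -rows[i][free]
        basis.append(v)
    return basis


S = [[1, 1, 0]]
perp = annihilator_basis(S, 3)
print(all(dot(v, w) == 0 for v in perp for w in S))  # True
# (S^perp)^perp comes back as span(S):
print(annihilator_basis(perp, 3) == [[1, 1, 0]])     # True
```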

A Guided Tour to the Plane-Based Geometric Algebra PGA

Leo Dorst, University of Amsterdam. Version 1.15 – July 6, 2020.

Planes are the primitive elements for the constructions of objects and operators in Euclidean geometry. Triangulated meshes are built from them, and reflections in multiple planes are a mathematically pure way to construct Euclidean motions. A geometric algebra based on planes is therefore a natural choice to unify objects and operators for Euclidean geometry. The usual claims of 'completeness' of the GA approach lead us to hope that it might contain, in a single framework, all representations ever designed for Euclidean geometry, including normal vectors, directions as points at infinity, Plücker coordinates for lines, quaternions as 3D rotations around the origin, and dual quaternions for rigid body motions; and even spinors. This text provides a guided tour to this algebra of planes PGA. It indeed shows how all such computationally efficient methods are incorporated and related. We will see how the PGA elements naturally group into blocks of four coordinates in an implementation, and how this more complete understanding of the embedding suggests some handy choices to avoid extraneous computations. In the unified PGA framework, one never switches between efficient representations for subtasks, and this obviously saves any time spent on data conversions. Relative to other treatments of PGA, this text is rather light on the mathematics. Where you see careful derivations, they involve the aspects of orientation and magnitude. These features have been neglected by authors focussing on the mathematical beauty of the projective nature of the algebra.
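The claim that reflections in planes generate Euclidean motions can be seen in ordinary coordinates, without any PGA machinery. The sketch below is only that illustration and is not the PGA representation used in the text: a plane is given by a unit normal `n` and offset `d` (the set $n \cdot x = d$), and `reflect` is a hypothetical helper. Reflecting in two parallel planes a distance 1 apart yields a translation by 2 along the normal.

```python
def reflect(p, n, d):
    """Reflect point p in the plane {x : n . x = d}, where n is a unit normal."""
    s = sum(a * b for a, b in zip(n, p)) - d  # signed distance from the plane
    return tuple(pi - 2 * s * ni for pi, ni in zip(p, n))


n = (1.0, 0.0, 0.0)
p = (0.25, 5.0, -1.0)
# Reflect in the plane x = 0, then in the parallel plane x = 1.
q = reflect(reflect(p, n, 0.0), n, 1.0)
print(q)  # (2.25, 5.0, -1.0): a translation by twice the plane separation
```

Two intersecting planes compose in the same way to a rotation about their common line, by twice the angle between them.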

Introduction to MATLAB®

Lecture 2: Vectors and Matrices

Vectors and matrices are used to store values of the same type. A vector can be either a column vector or a row vector. Matrices can be visualized as a table of values with dimensions r × c (r is the number of rows and c is the number of columns).

Creating row vectors: place the values that you want in the vector in square brackets, separated by either spaces or commas, e.g. `>> row_vec = [1 2 3 4 5]` or `>> row_vec = [1,2,3,4,5]`; both display `row_vec = 1 2 3 4 5`.

Creating row vectors with the colon operator: if the values of the vector are regularly spaced, the colon operator can be used to iterate through these values. `(first:last)` produces a vector with all integer entries from first to last, e.g. `>> row_vec = 1:5` displays `row_vec = 1 2 3 4 5`. A step value can also be specified with another colon in the form `(first:step:last)`, e.g. `>> odd_vec = 1:2:9` displays `odd_vec = 1 3 5 7 9`.

Exercise: In using `(first:step:last)`, what happens if adding the step value would go beyond the range specified by last? E.g. `>> v = 1:2:6`.

Exercise: Use `(first:step:last)` to generate the vector v1 = [9 7 5 3 1].

Creating row vectors with the `linspace` function (linearly spaced vector): `>> linspace(x,y,n)` creates a row vector with n values in the inclusive range from x to y.
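To make the two exercises concrete, here is a small Python emulation of the behaviour described on the slides. It is a sketch, not MATLAB's implementation: `colon` and `linspace` are our own helpers, `colon` only handles integer arguments (MATLAB treats non-integer steps with its own round-off rules), and `linspace` assumes `n >= 2`. The key point is that `first:step:last` stops at the last value that does not pass `last`.

```python
def colon(first, step, last):
    """Emulate MATLAB's first:step:last for integer arguments:
    first, first + step, ..., stopping before the values would pass last."""
    if step == 0 or (last - first) * step < 0:
        return []  # no values lie between first and last in the step direction
    count = (last - first) // step + 1
    return [first + k * step for k in range(count)]


def linspace(x, y, n):
    """n evenly spaced values from x to y inclusive (n >= 2 assumed here)."""
    if n < 2:
        raise ValueError("this sketch only handles n >= 2")
    return [x + (y - x) * k / (n - 1) for k in range(n)]


print(colon(1, 2, 6))     # [1, 3, 5]  (the next value, 7, would pass last = 6)
print(colon(9, -2, 1))    # [9, 7, 5, 3, 1]
print(linspace(0, 1, 5))  # [0.0, 0.25, 0.5, 0.75, 1.0]
```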

Math 102 -- Linear Algebra I -- Study Guide

Math 102 Linear Algebra I, Stefan Martynkiw. These notes are adapted from lecture notes taught by Dr. Alan Thompson and from “Elementary Linear Algebra: 10th Edition” by Howard Anton.

Table of Contents
Chapter 3 – Euclidean Vector Spaces
3.1 – Vectors in 2-space, 3-space, and n-space
Theorem 3.1.1 – Algebraic Vector Operations without components
Theorem 3.1.2
3.2 – Norm, Dot Product, and Distance
Definition 1 – Norm of a Vector
Definition 2 – Distance in $\mathbb{R}^n$
Dot Product
Definition 3 – Dot Product
Definition 4 – Dot Product, Component by component

The Orthogonal Projection and the Riesz Representation Theorem

FORMALIZED MATHEMATICS Vol. 23, No. 3, Pages 243–252, 2015. DOI: 10.1515/forma-2015-0020, degruyter.com/view/j/forma

Keiko Narita, Hirosaki-city, Aomori, Japan; Noboru Endou, Gifu National College of Technology, Gifu, Japan; Yasunari Shidama, Shinshu University, Nagano, Japan

Summary. In this article, the orthogonal projection and the Riesz representation theorem are mainly formalized. In the first section, we defined the norm of elements on real Hilbert spaces, and defined the Mizar functor RUSp2RNSp, which regards real Hilbert spaces as real normed spaces. By this definition, we regarded sequences of real Hilbert spaces as sequences of real normed spaces, and proved some properties of real Hilbert spaces. Furthermore, we defined the continuity and the Lipschitz continuity of functionals on real Hilbert spaces. Referring to the article [15], we also introduced some definitions on real Hilbert spaces and proved some theorems for defining dual spaces of real Hilbert spaces. As to the properties of all definitions, we proved that they are equivalent to the corresponding properties of functionals on real normed spaces. In Sec. 2, by the definitions [11], we showed properties of the orthogonal complement. Then we proved theorems on the orthogonal decomposition of elements of real Hilbert spaces; the last two theorems establish its existence and uniqueness. In the third and final section, we defined the kernel of linear functionals on real Hilbert spaces. In the last three theorems, we proved the Riesz representation theorem: existence, uniqueness, and the property of the norm of bounded linear functionals on real Hilbert spaces.
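The two results summarized above have a simple finite-dimensional shadow, sketched here in Python. This is our own illustration on $\mathbb{R}^3$ with the standard inner product, not the Mizar formalization, which treats general real Hilbert spaces. First, $x$ splits as $p + q$ with $p$ the orthogonal projection onto a subspace $M$ and $q \in M^\perp$. Second, a linear functional $f$ is represented by the vector $r$ with $r_i = f(e_i)$, so that $f(x) = \langle x, r \rangle$ and $\|f\| = \|r\|$. The functional `f` and the helper names are hypothetical examples.

```python
import math


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def project(x, onb):
    """Orthogonal projection of x onto span(onb); onb must be orthonormal."""
    p = [0.0] * len(x)
    for e in onb:
        c = dot(x, e)
        p = [pi + c * ei for pi, ei in zip(p, e)]
    return p


# Orthogonal decomposition x = p + q with p in M = span(e) and q in M^perp.
e = [1 / math.sqrt(2), 1 / math.sqrt(2), 0.0]
x = [3.0, 1.0, 2.0]
p = project(x, [e])
q = [xi - pi for xi, pi in zip(x, p)]
print(p, q, dot(q, e))  # p ~ [2, 2, 0], q ~ [1, -1, 2], <q, e> ~ 0


# Riesz representation on R^n: f(v) = <v, r> with r_i = f(e_i).
def f(v):
    return 2 * v[0] - v[1] + 5 * v[2]


n = 3
r = [f([1.0 if j == i else 0.0 for j in range(n)]) for i in range(n)]
print(r, f(x), dot(x, r))  # [2.0, -1.0, 5.0] 15.0 15.0
# |f(v)| / |v| is largest at v = r, where it equals |r| = sqrt(30).
print(abs(f(r)) / math.sqrt(dot(r, r)), math.sqrt(dot(r, r)))
```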

Lesson 1 Introduction to MATLAB

Math 323 Linear Algebra and Matrix Theory I Fall 1999 Dr. Constant J. Goutziers Department of Mathematical Sciences Lesson 1 Introduction to MATLAB MATLAB is an interactive system whose basic data element is an array that does not require dimensioning. This allows easy solution of many technical computing problems, especially those with matrix and vector formulations. For many colleges and universities MATLAB is the Linear Algebra software of choice. The name MATLAB stands for matrix laboratory. 1.1 Row and Column vectors, Formatting In linear algebra we make a clear distinction between row and column vectors. • Example 1.1.1 Entering a row vector. The components of a row vector are entered inside square brackets, separated by blanks. v=[1 2 3] v = 1 2 3 • Example 1.1.2 Entering a column vector. The components of a column vector are also entered inside square brackets, but they are separated by semicolons. w=[4; 5/2; -6] w = 4.0000 2.5000 -6.0000 • Example 1.1.3 Switching between a row and a column vector, the transpose. In MATLAB it is possible to switch between row and column vectors using an apostrophe. The process is called "taking the transpose". To illustrate the process we print v and w and generate the transpose of each of these vectors. Observe that MATLAB commands can be entered on the same line, as long as they are separated by commas. v, vt=v', w, wt=w' v = 1 2 3 vt = 1 2 3 w = 4.0000 2.5000 -6.0000 wt = 4.0000 2.5000 -6.0000 • Example 1.1.4 Formatting.

Linear Algebra I: Vector Spaces A

1 Vector spaces and subspaces

1.1 Let $F$ be a field (in this book, it will always be either the field of reals $\mathbb{R}$ or the field of complex numbers $\mathbb{C}$). A vector space $V = (V, +, o, \alpha(\cdot)\ (\alpha \in F))$ over $F$ is a set $V$ with a binary operation $+$, a constant $o$ and a collection of unary operations (i.e. maps) $\alpha: V \to V$ labelled by the elements of $F$, satisfying
(V1) $(x + y) + z = x + (y + z)$,
(V2) $x + y = y + x$,
(V3) $0 \cdot x = o$,
(V4) $\alpha \cdot (\beta \cdot x) = (\alpha\beta) \cdot x$,
(V5) $1 \cdot x = x$,
(V6) $(\alpha + \beta) \cdot x = \alpha \cdot x + \beta \cdot x$, and
(V7) $\alpha \cdot (x + y) = \alpha \cdot x + \alpha \cdot y$.

Here, we write $\alpha \cdot x$ and we will write also $\alpha x$ for the result $\alpha(x)$ of the unary operation $\alpha$ in $x$. Often, one uses the expression “multiplication of $x$ by $\alpha$”; but it is useful to keep in mind that what we really have is a collection of unary operations (see also 5.1 below). The elements of a vector space are often referred to as vectors. In contrast, the elements of the field $F$ are then often referred to as scalars. In view of this, it is useful to reflect for a moment on the true meaning of the axioms (equalities) above. For instance, (V4), often referred to as the “associative law”, in fact states that the composition of the functions $V \to V$ labelled by $\beta, \alpha$ is labelled by the product $\alpha\beta$ in $F$, the “distributive law” (V6) states that the (pointwise) sum of the mappings labelled by $\alpha$ and $\beta$ is labelled by the sum $\alpha + \beta$ in $F$, and (V7) states that each of the maps $\alpha$ preserves the sum $+$.
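The "collection of unary operations" reading of scalar multiplication can be made literal in code: each scalar $\alpha$ becomes a function $V \to V$, and (V4), (V6), (V7) become statements about composing, adding, and applying those functions. A minimal Python sketch, with assumptions of our own: $V = \mathbb{Q}^2$ with rational scalars (the text uses $\mathbb{R}$ or $\mathbb{C}$; rationals just keep the equalities exact), and `scalar` and `add` are hypothetical helpers. It checks the axioms on sample elements only, so it illustrates rather than proves them.

```python
from fractions import Fraction


def scalar(alpha):
    """The unary operation labelled by alpha: the map x -> alpha . x on Q^2."""
    return lambda x: tuple(alpha * xi for xi in x)


def add(x, y):
    return tuple(a + b for a, b in zip(x, y))


o = (Fraction(0), Fraction(0))  # the constant o
x = (Fraction(1, 2), Fraction(-3))
y = (Fraction(2), Fraction(5, 7))
alpha, beta = Fraction(3, 4), Fraction(-2)

# (V4): applying the map labelled beta, then alpha, is the map labelled alpha*beta.
print(scalar(alpha)(scalar(beta)(x)) == scalar(alpha * beta)(x))  # True
# (V6): the pointwise sum of the maps alpha and beta is the map alpha + beta.
print(add(scalar(alpha)(x), scalar(beta)(x)) == scalar(alpha + beta)(x))  # True
# (V7): each map alpha preserves +.
print(scalar(alpha)(add(x, y)) == add(scalar(alpha)(x), scalar(alpha)(y)))  # True
# (V3) and (V5).
print(scalar(0)(x) == o, scalar(1)(x) == x)  # True True
```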

Week 1 – Vectors and Matrices

Richard Earl, Mathematical Institute, Oxford, OX1 2LB, October 2003

Abstract. Algebra and geometry of vectors. The algebra of matrices. 2x2 matrices. Inverses. Determinants. Simultaneous linear equations. Standard transformations of the plane.

Notation 1. The symbol $\mathbb{R}^2$ denotes the set of ordered pairs $(x, y)$, that is the $xy$-plane. Similarly $\mathbb{R}^3$ denotes the set of ordered triples $(x, y, z)$, that is, three-dimensional space described by three co-ordinates $x$, $y$ and $z$, and $\mathbb{R}^n$ denotes a similar $n$-dimensional space.

1 Vectors

A vector can be thought of in two different ways. Let's for the moment concentrate on vectors in the $xy$-plane.
• From one point of view a vector is just an ordered pair of numbers $(x, y)$. We can associate this vector with the point in $\mathbb{R}^2$ which has co-ordinates $x$ and $y$. We call this vector the position vector of the point.
• From the second point of view a vector is a ‘movement’ or translation. For example, to get from the point $(3, 4)$ to the point $(4, 5)$ we need to move ‘one to the right and one up’; this is the same movement as is required to move from $(-2, -3)$ to $(-1, -2)$ or from $(1, -2)$ to $(2, -1)$. Thinking about vectors from this second point of view, all three of these movements are the same vector, because the same translation ‘one right, one up’ achieves each of them, even though the ‘start’ and ‘finish’ are different in each case. We would write this vector as $(1, 1)$.
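The "movement" point of view amounts to saying that the vector of a movement is finish minus start, independent of where the movement begins. A tiny Python sketch (the helper name `displacement` is ours) applied to the three movements in the example:

```python
def displacement(start, finish):
    """The vector (translation) that moves start to finish: finish - start."""
    return tuple(f - s for s, f in zip(start, finish))


moves = [((3, 4), (4, 5)), ((-2, -3), (-1, -2)), ((1, -2), (2, -1))]
print({displacement(s, f) for s, f in moves})  # {(1, 1)}: one vector, three movements
```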