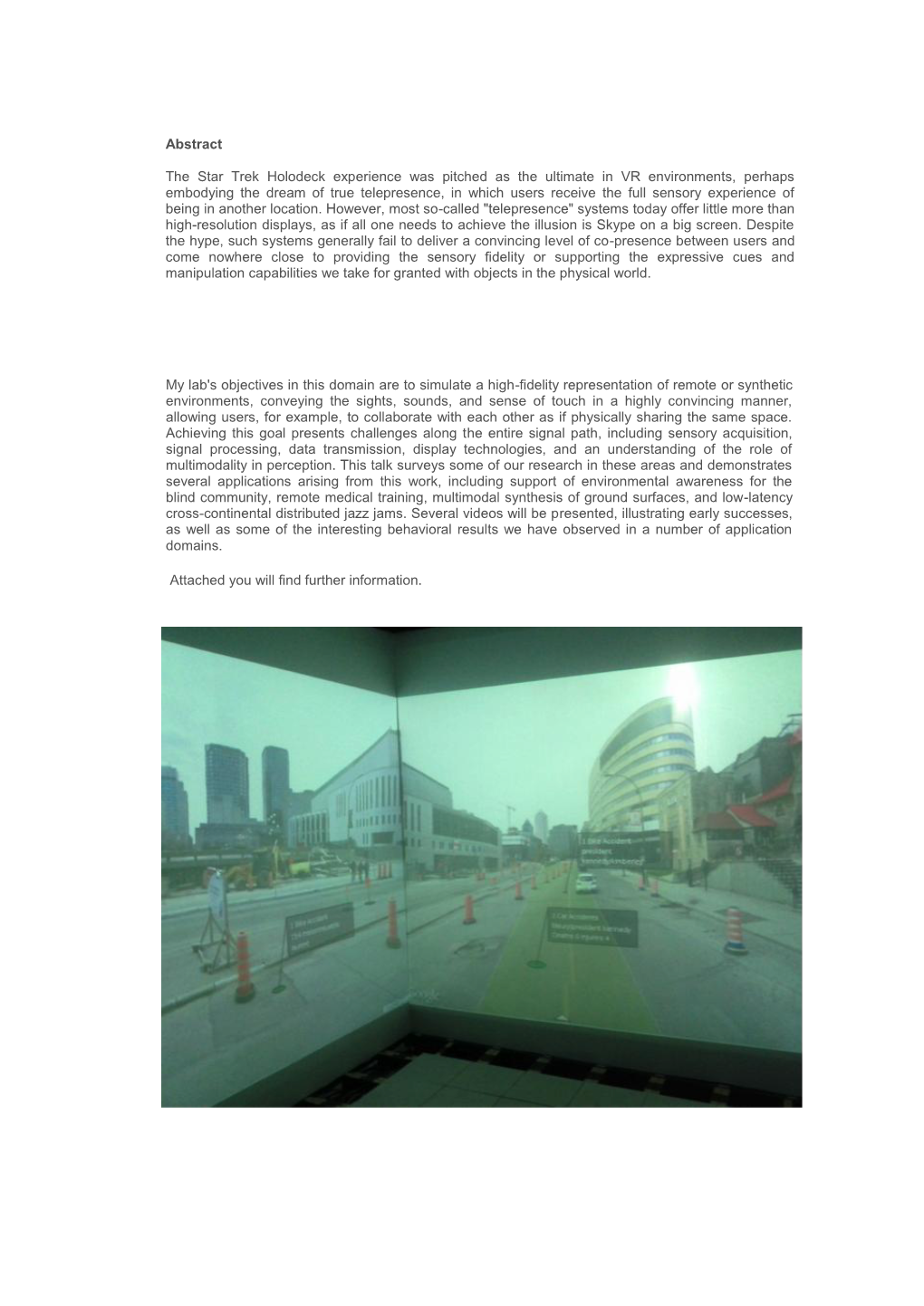

Abstract the Star Trek Holodeck Experience Was Pitched As

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Gene Roddenberry: the Star Trek Philosophy

LIVING TREKISM – Database Gene Roddenberry: The Star Trek Philosophy "I think probably the most often asked question about the show is: ‘Why the Star Trek Phenomenon?’ And it could be an important question because you can ask: ‘How can a simple space opera with blinking lights and zap-guns and a goblin with pointy ears reach out and touch the hearts and minds of literally millions of people and become a cult in some cases?’ Obviously, what this means is, that television has incredible power. They’re saying that if Star Trek can do this, then perhaps another carefully calculated show could move people in other directions – as to keep selfish interest to creating other cults for selfish purposes – industrial cartels, political parties, governments. Ultimate power in this world, as you know, has always been one simple thing: the control and manipulation of minds. Fortunately, in the attempt however to manipulate people through any – so called Star Trek Formula – is doomed to failure, and I’ll tell you why in just a moment. First of all, our show did not reach and affect all these people because it was deep and great literature. Star Trek was not Ibsen or Shakespeare. To get a prime time show – network show – on the air and to keep it there, you must attract and hold a minimum of 18 million people every week. You have to do that in order to move people away from Gomer Pyle, Bonanza, Beverly Hillbillies and so on. And we tried to do this with entertainment, action, adventure, conflict and so on. -

Download the Borg Assimilation

RESISTANCE IS FUTILE… YOU WILL BE ASSIMILATED. Adding the Borg to your games of Star Trek: Ascendancy introduces a new threat to the Galaxy. Where other civilizations may be open to negotiation, the Borg are single-mindedly dedicated to assimilating every civilization they encounter into the Collective. The Borg are not colonists or explorers. They are solely focused on absorbing other civilizations’ technologies. The Borg are not controlled by a player, but are a threat to all the forces in the Galaxy. Adding the Borg also allows you to play games with one or two players. The rules for playing with fewer than three players are on page 11. BORG COMPONENTS • Borg Command Console Card & Cube Card • 5 Borg Cubes & 5 Borg Spires • 15 Borg Assimilation Nodes & 6 Resource Nodes • 20 Borg Exploration Cards • 7 Borg System Discs • 20 Borg Technology Cards • 30 Borg Command Cards • 9 Borg Dice BORG CUBES Monolithic, geometric monstrosities capable of defeating fleets of ships, they are a force to be feared. BORG SPIRES Borg Spires mark Systems under Borg control. Over the course of the game, Borg Spires will build new Borg Cubes. BORG ASSIMILATION NODES Borg Assimilation Nodes are built around Spires. Built Nodes indicate how close the Spire is to completing a new Borg Cube and track that Borg System’s current Shield Modifier. BORG TECH CARDS Players claim Borg Tech Cards when they defeat the Borg in combat. The more Borg technology you acquire, the better you will fare against the Borg. BORG COMMAND CARDS Borg Command Cards direct the Cubes’ movement during the Borg’s turn and designate the type of System each Cube targets. -

Beyond the Final Frontier: Star Trek, the Borg and the Post-Colonial

Beyond the Final Frontier: Star Trek, the Borg and the Post-colonial Lynette Russell and Nathan Wolski Over the last three decades, Star Trek has become, to use Bernardi's term, a "mega-text" (1998: 11). Star Trek's mega-text consists of much more than the various studio-produced television series and films - it also includes (among other things) novels, Internet chat groups, conventions and fanzines. That Star Trek's premise of space exploration is a thinly disguised metaphor for colonialism has been extensively analysed (see Bernardi, 1998; Hastie, 1996; Ono 1996; Richards, 1997). Boyd describes the utopian future presented in Star Trek the Next Generation (STNG) as based on "nineteenth-century essentialist definitions of human nature, building ... on faith in perfection, progress, social evolution, and free will" (1996: 96-97). Exploration, colonisation and assimilation are never far from the surface of the STNG text. Less apparent, however, are aspects of the series which challenge the hegemonic view of this narrative and which present a post-colonial critique. In this paper we will explore a range of post-colonial moments and an emerging self reflexivity in the second generation series, focusing on those episodes of Star Trek: the Next Generation (STNG) and Star Trek: Voyager which feature an alien race known as the Borg. Others in space Much has been written about the role of the alien in science fiction as a means of exploring issues of otherness. As Wolmark notes: "Science fiction provides a rich source of metaphors for the depiction of otherness and the 'alien' is one of the most familiar: it enables difference to be constructed in terms of binary oppositions which reinforce relations of domination and subordination" (1994: 2). -

The History of Star Trek

The History of Star Trek The original Star Trek was the brainchild of Gene Roddenberry (1921-1991), a US TV producer and scriptwriter. His idea was to make a TV series that combined the futuristic possibilities of science fiction with the drama and excitement of TV westerns (his original title for the series was ‘Wagon Train to the Stars’). Star Trek was first aired on American TV in 1966, and ran for three series. Each episode was a self-contained adventure/mystery, but they were all linked together by the premise of a gigantic spaceship, crewed by a diverse range of people, travelling about the galaxy on a five-year mission ‘to explore new life and new civilisations, to boldly go where no man has gone before’. Although not especially successful it attracted a loyal fan-base, partly male fans that liked the technological and special effects elements of the show. But the show also attracted a large number of female fans, many of whom were drawn to the complex interaction and dynamic between the three main characters, the charismatic but impetuous Captain Kirk (William Shatner), the crotchety old doctor McCoy (DeForest Kelley) and the coldly logical Vulcan science officer Spock (Leonard Nimoy). After the show was cancelled in 1969 the fans conducted a lengthy and ultimately successful campaign to resurrect the franchise. Roddenberry enjoyed success with several motion pictures, including Star Trek: The Motion Picture (1979); action-thriller Star Trek II: The Wrath of Khan (1982); Star Trek III: The Search for Spock (1984) and the more comic Star Trek IV: The Voyage Home (1986). -

Star Trek Ascendancy Cardass

GAME CONTENTS This set includes everything you need to add the Cardassians to your games of Star Trek: Ascendancy. The set includes: • 10 New Exploration Cards • 10 New Systems Discs, including Cardassia Prime • 30 Cardassian Starships with 3 Fleet Markers & Cards • 10 Cardassian Control Nodes • 15 Cardassian Advancements • 3 Cardassian Trade Agreements • Cardassian Turn Summary Card • Cardassian Command Console with 2 Sliders • 19 Resource Nodes • 76 Tokens & 27 Space Lanes ADDING CARDASSIANS TO YOUR GAME To integrate the Cardassians into your games of Star Trek: Ascendancy, shuffle the 10 new Exploration Cards into the Exploration Cards from the core set and add the 9 System Discs into the mix of System Discs from the core set. Adding the Cardassians to your game increases the number of possible players by 1. The Cardassian player begins the game with the same starting number of Ships, Control Nodes, starting Resources, etc. Each additional player adds approximately an hour to the game’s duration. EXPLORATION CARDS The 10 new Exploration Cards include 4 Crises, 4 Discoveries and 2 Civilization Discovery Cards. CONFRONTATIONS The 4 new Cardassian Crisis Cards introduce “Confrontations,” where a rival player places one of their Starships in the same System with the Ship that Discovered the System. What happens after that is up to the two players involved in the Confrontation. Will it lead to peaceful trade relations? Or will it spark a hostile diplomatic incident? ARMISTICE ACCORDS When a player Discovers a new System and draws the Cardassian Armistice Accords, they have stumbled into a border dispute that requires them to relinquish Control of one of their Systems in exchange for Control of a Cardassian System. COMMAND CONSOLE Like the three factions included in the core set, the Cardassians have a unique Command Console with two Special Rules that -

Infinite Diversity in Infinite Combinations: Portraits of Individuals with Disabilities in Star Trek

Infinite Diversity in Infinite Combinations: Portraits of Individuals With Disabilities in Star Trek Terry L. Shepherd An Article Published in TEACHING Exceptional Children Plus Volume 3, Issue 6, July 2007 Copyright © 2007 by the author. This work is licensed to the public under the Creative Commons Attribution License. Infinite Diversity in Infinite Combinations: Portraits of Individuals With Disabilities in Star Trek Terry L. Shepherd Abstract Weekly television series have more influence on American society than any other form of media, and with many of these series available on DVDs, television series are readily accessible to most consumers. Studying television series provides a unique perspective on society’s view of individuals with disabilities and influences how teachers and peers view students with disabilities. Special education teachers can use select episodes to differentiate between the fact and fiction of portrayed individuals with disabilities with their students, and discuss acceptance of peers with disabilities. With its philosophy of infinite diversity in infinite combinations, Star Trek has portrayed a number of persons with disabilities over the last forty years. Examples of select episodes and implications for special education teachers for using Star Trek for instructional purposes through guided viewing are discussed. Keywords videotherapy, disabilities, television, bibliotherapy SUGGESTED CITATION: Shepherd, T.L. (2007). Infinite diversity in infinite combinations: Portraits of individuals with disabilities in Star Trek. TEACHING Exceptional Children Plus, 3(6) Article 1. Retrieved [date] from http://escholarship.bc.edu/education/tecplus/vol3/iss6/art1 "Families, societies, cultures -- wouldn't have evolved without compassion and tolerance -- they would have fallen apart without it." -- Kes to the Doctor (Braga, Menosky, & A Reflection of Society For the last fifty years, television has been a reflection of American society, but it also has had a substantial impact on public attitudes. -

DELTA QUADRANT Sample File

FAR FROM HOME "SOMEWHERE ALONG THIS JOURNEY, WE'LL FIND A WAY BACK. MISTER PARIS, SET A COURSE FOR HOME." - CAPTAIN KATHRYN JANEWAY The Delta Quadrant Sourcebook provides Gamemasters and Players with a wealth of information to aid in playing characters or running adventures set within the ever-expanding Star Trek universe. The Delta Quadrant Sourcebook includes: ■ Detailed information about the post-war Federation and U.S.S. Voyager's monumental mission, bringing the Star Trek Adventures timeline up to 2379. ■ Information on many of the species inhabiting the quadrant, including the Kazon Collective, the Vidiian Sodality, the Malon, the Voth, and more. ■ Extensive content on the Borg Collective, including their history, hierarchy, locations, processes, and technology. ■ A dozen new species to choose from during character creation, including Ankari, Ocampa, Talaxians, and even Liberated Borg! ■ A selection of alien starships, including Kazon raiders, Voth city-ships, Hirogen warships, and a devastating collection of new Borg vessels. ■ Guidance to aid the Gamemaster in running missions and continuing voyages in the Delta Quadrant, with a selection of adventure seeds and Non-Player Characters. This book requires the Star Trek Adventures core rulebook to use. MUH051069 Sample file TM & © 2020 CBS Studios Inc. © 2020 Paramount Pictures Corp. STAR TREK and related marks and logos are trademarks of CBS Studios Inc. All Rights Reserved. DELTA QUADRANT MUH051069 Printed in Lithuania © SOURCEBOOK HOMEBOUND PATH OF -

Never Surrender

AirSpace Season 3, Episode 12: Never Surrender Emily: We spent the first like 10 minutes of Galaxy Quest watching the movie being like, "Oh my God, that's the guy from the... That's that guy." Nick: Which one? Emily: The guy who plays Guy. Nick: Oh yeah, yeah. Guy, the guy named Guy. Matt: Sam Rockwell. Nick: Sam Rockwell. Matt: Yeah. Sam Rockwell has been in a ton of stuff. Emily: Sure. Apparently he's in a ton of stuff. Nick: Oh. And they actually based Guy's character on a real person who worked on Star Trek and played multiple roles and never had a name. And that actor's name is Guy. Emily: Stop. Is he really? Nick: 100 percent. Intro music in and under Nick: Welcome to the final episode of AirSpace, season three from the Smithsonian's National Air and Space Museum. I'm Nick. Matt: I'm Matt. Emily: And I'm Emily. Matt: We started our episodes in 2020 with a series of movie minis and we're ending it kind of the same way, diving into one of the weirdest, funniest and most endearing fan films of all time, Galaxy Quest. Nick: The movie came out on December 25th, 1999, and was one of the first widely popular movies that spotlighted science fiction fans as heroes, unlike the documentary Trekkies, which had come out a few years earlier and had sort of derided Star Trek fans as weird and abnormal. Galaxy Quest is much more of a love note to that same fan base. -

Brain and Dualism in Star Trek

Brain and Dualism in Star Trek Victor Grech Introduction IN THE PHILOSOPHIES that deal with the mind, dualism is the precept that mental phenomena are, to some degree, non-physical and not completely dependent on the physical body, which includes the organic brain. René Descartes (1596-1650) popularised this concept, maintaining that the mind is an immaterial and non-physical essence that gives rise to self-awareness and consciousness. Dualism can be extended to include the notion that more broadly asserts that the universe contains two types of substances, on the one hand, the impalpable mind and consciousness and, on the other hand, common matter. This is in contrast with other world-views, such as monism, which asserts that all objects contained in the universe are reducible to one reality, and pluralism which asserts that the number of truly fundamental reali- […] nature of human experience” (Hayles 245) and ignores “the importance of embodiment” (20). Moreover, this essay will show that in the vast majority of cases, these interactions constitute one of two events: a dybbuk, which, in Jewish mythology, is defined as the possession of the body by a malevolent spirit, usually that of a dead person, or outright possession by beings with superhuman powers. The narratives then focus on countermeasures that need to be undertaken in order to restore the original personality into its former body, thereby emulating a morality play, with good mastering evil. Narratives For the purposes of this essay, only sources that are canonical to the ST gesamtkunstwerk are considered, i.e. the television series and the movies. Mind resides in brain The certain knowledge that consciousness somehow resides within the physical brain is acknowledged in Daniel’s “Spock’s Brain.” In this episode, the Enterprise’s Vulcan first officer has his brain forcibly removed surgically. -

Toward the Holodeck: Integrating Graphics, Sound, Character and Story

Toward the Holodeck: Integrating Graphics, Sound, Character and Story W. Swartout,1 R. Hill,1 J. Gratch,1 W.L. Johnson,2 C. Kyriakakis,3 C. LaBore,2 R. Lindheim,1 S. Marsella,2 D. Miraglia,1 B. Moore,1 J. Morie,1 J. Rickel,2 M. Thiébaux,2 L. Tuch,1 R. Whitney2 and J. Douglas1 1USC Institute for Creative Technologies, 13274 Fiji Way, Suite 600, Marina del Rey, CA, 90292 2USC Information Sciences Institute, 4676 Admiralty Way, Suite 1001, Marina del Rey, CA 90292 3USC Integrated Media Systems Center, 3740 McClintock Avenue, EEB 432, Los Angeles, CA, 90089 http://ict.usc.edu, http://www.isi.edu, http://imsc.usc.edu ABSTRACT We describe an initial prototype of a holodeck-like environment that we have created for the Mission Rehearsal Exercise Project. The goal of the project is to create an experience learning system where the participants are immersed in an environment where they can encounter the sights, sounds, and circumstances of real-world scenarios. Virtual humans act as characters and coaches in an interactive story with pedagogical goals. […] he split his forces or keep them together? If he splits them, how should they be organized? If not, which crisis takes priority? The pressure of the decision grows as a crowd of local civilians begins to form around the accident site. A TV cameraman shows up and begins to film the scene. This is the sort of dilemma that daily confronts young Army decision-makers in a growing number of peacekeeping and disaster relief missions around the world. -

Gene Roddenberry's Birthplace for Serious Trekkie Collectors

Gene Roddenberry's birthplace For serious Trekkie collectors 40th anniversary of Star Trek creation and phenomena Ideal location for Roddenberry Museum and Trekkie Café On web site http://www.startrek.com/startrek/view/news/article/26555.html for more information Email to: [email protected] Star Trek creator - Gene Roddenberry’s birthplace 1907 E. Yandell Dr., El Paso, TX • Location is close to major commerce streets – Montana and Cotton • Property is cinderblock, steel, and brick, built in 1969 and easily remodeled for Museum & Café • $699,000 starting bid for 1907/1905 • Option to buy adjacent property (made up of 3 separate offices) @ 1909 E. Yandell to successful bidder of 1907/1905 E. Yandell • Air Conditioned office space of approx. 2612 sq. ft. • Parking lot in rear (gravel), total lot property of approx. 6750 sq. ft. Could serve as a gaming center adjacent to café or other possibilities abound • Option of $399,000 for adjacent 1909 property • Birthplace location and option to buy adjacent property – ideal for long term investors looking to memorialize this site in honor of Gene Roddenberry. Its long term historical value could be similar to that witnessed by Ernest Hemingway’s property in Key West Florida • Birthplace certified by City of El Paso, plaque provided • Documented on CBS Paramount web site: http://www.startrek.com/startrek/view/news/article/26555.html • Property (includes two separate offices) can be easily converted to a Star Trek Café, souvenir shop, and Roddenberry Museum • Air Conditioned office space of approx. 2035 sq. ft. • Parking lot in rear (gravel), total lot property of approx. -

1 Open Ninth: Conversations Beyond the Courtroom Live

OPEN NINTH: CONVERSATIONS BEYOND THE COURTROOM LIVE LONG AND PROSPER EPISODE 6 SEPTEMBER 25, 2016 HOSTED BY: FREDERICK J. LAUTEN (Music.) >> Welcome to Episode 6 of "Open Ninth: Conversations Beyond the Courtroom" in the Ninth Judicial Circuit Court of Florida. And now here's your host, Chief Judge Frederick J. Lauten. (Music.) >> JUDGE LAUTEN: Hello. I'm here today with Orange County Judge Faye Allen. Judge Allen's been on the bench in Orange County as an Orange County judge since 2005. She received her bachelor of arts degree in criminology from Florida A & M and her Juris Doctorate from Florida State University. Judge Allen, first of all, welcome. >> JUDGE ALLEN: Thank you. >> JUDGE LAUTEN: I understand that you consider yourself a Trekkie. For those listeners who don't know what a Trekkie is, think Star Trek. Think fan. Think the phenomena of a Star Trek fan and its multiple fans. And I think the definition is an avid fan of Star Trek science fiction, television shows, and films or, by extension, a person interested in space travel. Before we talk a little bit about your love of Star Trek, Gene Roddenberry, Captain Kirk, Spock, McCoy, and everything else that is the Star Trek franchise -- and it's truly a franchise -- I'd like to talk a little bit about your background. So why don't you tell me where you're from originally, and why you decided to become a judge. >> JUDGE ALLEN: Fred, I'm originally from Quincy, Florida. It's a few miles west of Tallahassee, the capital.