6585 P LTRC 2010.Indd

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Does Washback Exist?

DOCUMENT RESUME FL 020 178 ED 345 513 AUTHOR Alderson, J. Charles; Wall, Dianne TITLE Does Washback Exist? PUB DATE Feb 92 NOTE 23p.; Paper presented at a Symposium on the Educational and Social Impacts of Language Tests, Language Testing Research Colloquium (February 1992). For a related document, see FL 020 177. PUB TYPE Reports - Evaluative/Feasibility (142) Speeches/Conference Papers (150) EDRS PRICE MF01/PC01 Plus Postage. DESCRIPTORS *Classroom Techniques; Educational Environment; Educational Research; Educational Theories; Foreign Countries; Language Research; *Language Tests; *Learning Processes; Literature Reviews; Research Needs; Second Language Instruction; *Second Languages; *Testing IDENTIFIERS Nepal; Netherlands; *Teaching to the Test; Turkey ABSTRACT The concept of washback, or backwash, defined as the influence of testing on instruction, is discussed with relation to second language teaching and testing. While the literature of language testing suggests that tests are commonly considered to be powerful determiners of what happens in the classroom, the concept of washback is not well defined. The first part of the discussion focuses on the concept, including several different interpretations of the phenomenon. It is found to be a far more complex topic than suggested by the basic washback hypothesis, which is also discussed and outlined. The literature on education in general is then reviewed for additional information on the issues involved. Very little research was found that directly related to the subject, but several studies are highlighted. Following this, empirical research on language testing is consulted for further insight. Studies in Turkey, the Netherlands, and Nepal are discussed. Finally, areas for additional research are proposed, including further definition of washback, motivation and performance, the role of educational setting, research methodology, learner perceptions, and explanatory factors. A 39-item bibliography is appended.

Issues in Language Testing. ELT Documents 111

DOCUMENT RESUME ED 258 440 FL 014 475 AUTHOR Alderson, J. Charles, Ed.; Hughes, Arthur, Ed. TITLE Issues in Language Testing. ELT Documents 111. INSTITUTION British Council, London (England). English Language and Literature Div. REPORT NO ISBN-0-901618-51-9 PUB DATE 81 NOTE 211p. PUB TYPE Collected Works - General (020) -- Reports - Descriptive (141) EDRS PRICE MF01/PC09 Plus Postage. DESCRIPTORS *Communicative Competence (Languages); Conference Proceedings; *English (Second Language); *English for Special Purposes; *Language Proficiency; *Language Tests; Second Language Instruction; Test Validity ABSTRACT A symposium focusing on problems in the assessment of foreign or second language learning brought seven applied linguists together to discuss three areas of debate: communicative language testing, testing of English for specific purposes, and general language proficiency assessment. In each of these areas, the participants reviewed selected papers on the topic, reacted to them on paper, and discussed them as a group. The collected papers, reactions, and discussion reports on communicative language testing include the following: "Communicative Language Testing: Revolution or Evolution" (Keith Morrow) and responses by Cyril J. Weir, Alan Moller, and J. Charles Alderson. The next section, on testing of English for specific purposes, includes: "Specifications for an English Language Testing Service" (Brendan J. Carroll) and responses by Caroline M. Clapham, Clive Criper, and Ian Seaton. The final section, on general language proficiency, includes: "Basic Concerns in Test Validation" (Adrian S. Palmer and Lyle F. Bachman) and "Why Are We Interested in General Language Proficiency?" (Helmut J. Vollmer), reactions of Arthur Hughes and Alan Davies, and the subsequent response of Helmut J. Vollmer.

Aurora Tsai's Review of the Diagnosis of Reading in a Second Or Foreign

Reading in a Foreign Language April 2015, Volume 27, No. 1 ISSN 1539-0578 pp. 117–121 Reviewed work: The Diagnosis of Reading in a Second or Foreign Language. (2015). Alderson, C., Haapakangas, E., Huhta, A., Nieminen, L., & Ullakonoja, R. New York and London: Routledge. Pp. 265. ISBN 978-0-415-66290-1 (paperback). $49.95 Reviewed by Aurora Tsai Carnegie Mellon University United States http://www.amazon.com In The Diagnosis of Reading in a Second or Foreign Language, Charles Alderson, Eeva-Leena Haapakangas, Ari Huhta, Lea Nieminen, and Riikka Ullakonoja discuss prominent theories concerning the diagnosis of reading in a second or foreign language (SFL). Until now, the area of diagnosis in SFL reading has received little attention, despite its increasing importance as the number of second language learners continues to grow across the world. As the authors point out, researchers have not yet established a theory of how SFL diagnosis works, which makes it difficult to establish reliable procedures for accurately diagnosing and helping students in need. In this important contribution to reading and diagnostic research, Alderson et al. illustrate the challenges involved in carrying out diagnostic procedures, conceptualize a theory of diagnosis, provide an overview of SFL reading, and highlight important factors to consider in diagnostic assessment. Moreover, they provide detailed examples of tests developed specifically for diagnosis, most of which arise from their most current research projects. Because this book covers a wide variety of SFL reading and diagnostic topics, researchers in applied linguistics, second language acquisition, language assessment, education, and even governmental organizations or military departments would consider it an enormously helpful and enlightening resource on SFL reading. -

Language and Linguistics 2009

Language and Linguistics 2009. www.cambridge.org/linguistics. Visit us at: www.cambridge.org/asia
ASIA SALES CONTACTS
Cambridge University Press Asia: 79 Anson Road #06-04, Singapore 079906. Phone (65) 6323 2701. Fax (65) 6323 2370. Email [email protected]
China – Beijing Office: Room 1208–09, Tower B, Chengjian Plaza, No. 18, Beitaipingzhuang Road, Haidian District, Beijing 100088, China. Phone (86) 10 8227 4100. Fax (86) 10 8227 4105. Email [email protected]
China – Shanghai Office: Room N, Floor 19, Zhiyuan Building, 768, XieTu Road, Shanghai 200023, China. Phone (86) 21 5301 4700. Fax (86) 21 5301 4710. Email [email protected]
China – Guangzhou Office: RM 1501, East Tower, Dong Shan Plaza, 69 Xian Lie Zhong Lu, Guangzhou 510095, China. Phone (86) 20 8732 6913. Fax (86) 20 8732 6693. Email [email protected]
China – Hong Kong and other areas: Unit 1015–1016, Tower 1, Millennium City 1, 388 Kwun Tong Road, Kwun Tong, Kowloon, Hong Kong, SAR. Phone (852) 2997 7500. Fax (852) 2997 6230. Email [email protected]
India: Cambridge University Press India Pvt. Ltd., Cambridge House, 4381/4, Ansari Road, Daryaganj, New Delhi – 110002, India. Phone (91) 11 2327 4196 / 2328 8533. Fax (91) 11 2328 8534. Email [email protected]
Japan: Sakura Building, 1F, 1-10-1 Kanda Nishiki-cho, Chiyoda-ku, Tokyo – 101-0054, Japan. Phone, ELT (81) 3 3295 5875. Phone, Academic (81) 3 3291 4068. Email [email protected]
Malaysia: Suite 9.01, 9th Floor, Amcorp Tower, Amcorp Trade Centre, 18 Persiaran Barat, 46050 Petaling Jaya, Malaysia. Phone (603) 7954 4043. Fax (603) 7954 4127. Email [email protected]
Philippines: 4th Floor, Port Royal Place, 118 Rada Street, Legaspi Village, Makati City, Philippines. Phone (632) 8405734/35. Fax (632) 8405734. [email protected]
South Korea: 2FL, Jeonglim Building, 254-27, Nonhyun-dong, Gangnam-gu, Seoul 135-010, South Korea. Phone (82) 2 2547 2890. Fax (82) 2 2547 4411. Email [email protected]
Taiwan: 11F-2, No.

A Systematic Review of the Research Evidence

This is a repository copy of Do New Technologies Facilitate the Acquisition of Reading Skills? : A Systematic Review of the Research Evidence. White Rose Research Online URL for this paper: https://eprints.whiterose.ac.uk/75113/ Version: Published Version Conference or Workshop Item: Handley, Zoe Louise orcid.org/0000-0002-4732-3443 and Walter, Catherine (2010) Do New Technologies Facilitate the Acquisition of Reading Skills? : A Systematic Review of the Research Evidence. In: British Association of Applied Linguistics, 09-11 Sep 2010. Reuse Items deposited in White Rose Research Online are protected by copyright, with all rights reserved unless indicated otherwise. They may be downloaded and/or printed for private study, or other acts as permitted by national copyright laws. The publisher or other rights holders may allow further reproduction and re-use of the full text version. This is indicated by the licence information on the White Rose Research Online record for the item. Takedown If you consider content in White Rose Research Online to be in breach of UK law, please notify us by emailing [email protected] including the URL of the record and the reason for the withdrawal request. 
[email protected] https://eprints.whiterose.ac.uk/ Applied Linguistics, Global and Local: Proceedings of the 43rd Annual Meeting of the British Association for Applied Linguistics, 9-11 September 2010, University of Aberdeen. Edited by Robert McColl Millar & Mercedes Durham. Published by Scitsiugnil Press, 1 Maiden Road, London, UK, and produced in the UK. Copyright © 2011. Copyright subsists with the individual contributors severally in their own contributions.

13EUROSLA 249-252.Qxd

About the authors. Riikka Alanen is a professor of applied linguistics at the Centre for Applied Language Studies of the University of Jyväskylä, Finland. Her research interests include L2 learning and teaching as task-mediated activity and the role of consciousness and agency in the learning process. She has written articles in Finnish and English about children’s L2 learning process as well as their beliefs about English as a Foreign Language. She is also the co-editor of the volume Language in Action: Vygotsky and Leontievian legacy today (2007). J. Charles Alderson is professor of Linguistics and English Language Education at Lancaster University. He is a specialist in language testing and the author of numerous books, book chapters and academic articles on the topic, as well as on reading in a second or foreign language. He is a former co-editor of the journal Language Testing, co-editor of the Cambridge Language Assessment Series and a recipient of the Lifetime Achievement Award of the International Language Testing Association (ILTA). See also http://www.ling.lancs.ac.uk/profiles/J-Charles-Alderson/ Inge Bartning is a professor of French at Stockholm University. She has taught and published in the domain of French syntax, semantics and pragmatics. In the last two decades her main interest has been in French L2 acquisition, in particular the domain of developmental stages, advanced learners and ultimate attainment of morpho-syntax, discourse and information structure. She is currently participating in a joint project ‘High Level Proficiency in Second Language Use’ with three other departments at Stockholm University (see www.biling.su.se/~AAA).

Curriculum and Syllabus Design in ELT. Report on the Dunford House Seminar (England, United Kingdom, July 16-26, 1984)

DOCUMENT RESUME ED 358 706 FL 021 249 AUTHOR Toney, Terry, Ed. TITLE Curriculum and Syllabus Design in ELT. Report on the Dunford House Seminar (England, United Kingdom, July 16-26, 1984). INSTITUTION British Council, London (England). PUB DATE 85 NOTE 152p.; For other Dunford House Seminar proceedings, see FL 021 247-257. AVAILABLE FROM English Language Division, The British Council, Medlock Street, Manchester M15 4AA, England, United Kingdom. PUB TYPE Collected Works - Conference Proceedings (021) EDRS PRICE MF01/PC07 Plus Postage. DESCRIPTORS *Behavioral Objectives; Case Studies; Communicative Competence (Languages); *Curriculum Design; Curriculum Development; Curriculum Evaluation; Developing Nations; Educational Technology; Elementary Secondary Education; *English (Second Language); Foreign Countries; Higher Education; *Language Teachers; *Language Tests; Modern Languages; Research Methodology; Second Language Instruction; *Teacher Education; Teaching Methods IDENTIFIERS Egypt; Hong Kong; Yemen ABSTRACT Proceedings of a seminar on curriculum and syllabus design for training English-as-a-Second-Language teachers are presented in the form of papers, presentations, and summary narrative. They include: "Curriculum and Syllabus Design in ELT"; "Key Issues in Curriculum and Syllabus Design for ELT" (Christopher Brumfit); "Approaches to Curriculum Design" (Janet Maw); "Graded Objectives in Modern Languages" (Sheila Rowell); "Performance Objectives in the Hong Kong DTEO" (Richard Cauldwell); "The Relationship between Teaching and Learning"

Intersections Between SLA and Language Testing Research

EUROSLA MONOGRAPHS SERIES 1. COMMUNICATIVE PROFICIENCY AND LINGUISTIC DEVELOPMENT: intersections between SLA and language testing research. EDITED BY INGE BARTNING (University of Stockholm), MAISA MARTIN (University of Jyväskylä), INEKE VEDDER (University of Amsterdam). EUROPEAN SECOND LANGUAGE ASSOCIATION 2010. Eurosla monographs: Eurosla publishes a monographs series, available open access on the association’s website. The series includes both single-author and edited volumes on any aspect of second language acquisition. Manuscripts are evaluated with a double blind peer review system to ensure that they meet the highest qualitative standards. Editors: Gabriele Pallotti (Series editor), University of Modena and Reggio Emilia; Fabiana Rosi (Assistant editor), University of Modena and Reggio Emilia. © The Authors 2010. Published under the Creative Commons "Attribution Non-Commercial No Derivatives 3.0" license. ISBN 978-1-4466-6993-8. First published by Eurosla, 2010. Graphic design and layout: Pia ’t Lam. An online version of this volume can be downloaded from eurosla.org. Table of contents: Foreword; Developmental stages in second-language acquisition and levels of second-language proficiency: Are there links between them? (Jan H. Hulstijn, J. Charles Alderson and Rob Schoonen); Designing and assessing L2 writing tasks across CEFR proficiency levels (Riikka Alanen, Ari Huhta and Mirja Tarnanen); On Becoming an Independent User (Maisa Martin, Sanna Mustonen, Nina Reiman and Marja Seilonen); Communicative Adequacy and Linguistic Complexity in L2 writing

J. Charles Alderson Is Professor of Linguistics and English Language Education at Lancaster University

Contributors. J. Charles Alderson is Professor of Linguistics and English Language Education at Lancaster University. He was Director of the Revision Project that produced the IELTS test; Scientific Coordinator of DIALANG (www.dialang.org); Academic Adviser to the British Council’s Hungarian English Examination Reform Project; and is former co-editor of the international journal Language Testing and the Cambridge Language Assessment Series (Cambridge University Press). He has taught and lectured in over 50 countries worldwide, been consultant to numerous language education projects, and is internationally well-known for his teaching, research and publications in language testing and assessment, programme and course evaluation, reading in a foreign language and teacher training. Alan Davies is Emeritus Professor of Applied Linguistics in the University of Edinburgh where he has been attached since the mid-1960s. Over the years he has spent long periods working in Kenya, Nepal, Australia and Hong Kong. He has served as Editor of the journals Applied Linguistics and Language Testing and includes among his recent publications: The Native Speaker: Myth and Reality (2003), A Glossary of Applied Linguistics (2005), An Introduction to Applied Linguistics (2nd edn, 2007), and the edited volume The Handbook of Applied Linguistics (2004). His book Academic English Testing: 1950–1989 was published in 2008. Tom Hunter has had over 35 years’ experience in ELT. In most recent years this has largely been in international aid development contexts where, as a trainer-trainer, curriculum expert, project manager and policy specialist, he has honed his skills. He has worked on both long and short term consultancies in a number of countries, including Bangladesh, Kenya, Ethiopia, Mexico, Iran, Sri Lanka, Afghanistan and Uzbekistan.

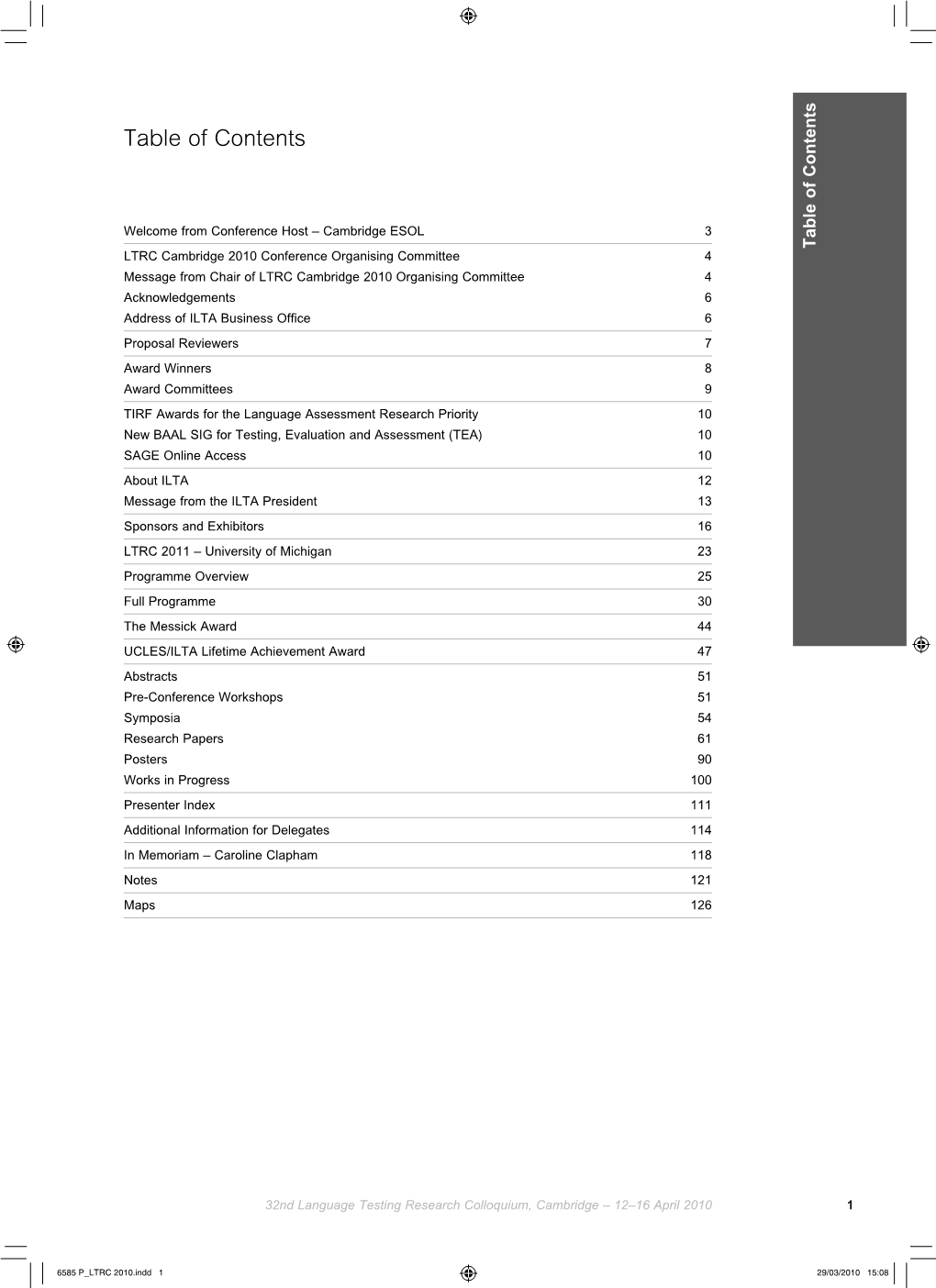

Table of Contents

EUROSLA MONOGRAPHS SERIES 1. COMMUNICATIVE PROFICIENCY AND LINGUISTIC DEVELOPMENT: intersections between SLA and language testing research. EDITED BY INGE BARTNING (University of Stockholm), MAISA MARTIN (University of Jyväskylä), INEKE VEDDER (University of Amsterdam). EUROPEAN SECOND LANGUAGE ASSOCIATION 2010. Eurosla monographs: Eurosla publishes a monographs series, available open access on the association’s website. The series includes both single-author and edited volumes on any aspect of second language acquisition. Manuscripts are evaluated with a double blind peer review system to ensure that they meet the highest qualitative standards. Editors: Gabriele Pallotti (Series editor), University of Modena and Reggio Emilia; Fabiana Rosi (Assistant editor), University of Modena and Reggio Emilia. © The Authors 2010. Published under the Creative Commons "Attribution Non-Commercial No Derivatives 3.0" license. First published by Eurosla, 2010. An online version of this volume can be downloaded from eurosla.org. Graphic design and layout: Pia ’t Lam. First edition (August 2010) printed by Edisegno srl, Rome, Italy. Table of contents: Foreword; Developmental stages in second-language acquisition and levels of second-language proficiency: Are there links between them? (Jan H. Hulstijn, J. Charles Alderson and Rob Schoonen); Designing and assessing L2 writing tasks across CEFR proficiency levels (Riikka Alanen, Ari Huhta and Mirja Tarnanen); On Becoming an Independent User (Maisa Martin, Sanna Mustonen, Nina Reiman and Marja Seilonen); Communicative Adequacy

Studies in Language Testing 24

Studies in Language Testing 24. Impact Theory and Practice: Studies of the IELTS test and Progetto Lingue 2000. Roger Hawkey. Language teaching and testing programmes have long been considered to exert a powerful influence on a wide range of stakeholders, including learners and test takers, teachers and textbook writers, test developers and institutions. However, the actual nature of this influence and the extent to which it may be positive or negative have only recently been subject to empirical investigation through research studies of impact. This book describes two recent case studies to investigate impact in specific educational contexts. One analyses the impact of the International English Language Testing System (IELTS) – a high-stakes English language proficiency test used worldwide among international students; the second focuses on the Progetto Lingue 2000 (Year 2000 Languages Project) – a major national language teaching reform programme introduced by the Ministry of Education in Italy. Key features of the volume include: • an up-to-date review of the relevant literature on impact, including clarification of the concept of impact and related terms such as washback • a detailed explication of the process of impact study using actual cases as examples • practical guidance on matters such as questionnaire design, interviews, permissions and confidentiality, data collection, management and analysis • a comprehensive discussion of washback and impact issues in relation to language teaching reform as well as language testing. With its combination of theoretical overview and practical advice, this volume is a useful manual on how to conduct impact studies and will be of particular interest to both language test researchers and students of language testing.

A Lifetime of Language Testing: an Interview with J. Charles Alderson

A Lifetime of Language Testing: An Interview with J. Charles Alderson. Tineke Brunfaut, Lancaster University, UK. Brunfaut, T. (2014). A lifetime of language testing: An interview with J. Charles Alderson. Language Assessment Quarterly, 11 (1), 103-119. Professor J. Charles Alderson grew up in the town of Burnley, in the North-West of England and is still based in the North West, but in the ancient city of Lancaster. From Burnley to Lancaster, however, lies a journey and a career which took him all around the world to share his knowledge, skills, and experience in language testing, and to learn from others on language test development and research projects. Charles has worked with, and advised, language testing teams in countries as diverse as Austria, Brazil, the Baltic States, China, Ethiopia, Finland, Hungary, Hong Kong, Malaysia, Slovenia, Spain, Sri Lanka, Tanzania and the United Kingdom – to name just a few. He has been a consultant to, for example, the British Council, the Council of Europe (CoE), the European Commission, the International Civil Aviation Organization (ICAO), the UK’s Department for International Development (DfID – formerly known as the Overseas Development Agency, or ODA) and the Programme for International Student Assessment (PISA) of the Organisation for Economic Co-operation and Development (OECD). For over 30 years, however, Lancaster University in the UK has been his home institution, where he has taught courses on, for example, language assessment, language acquisition, curriculum design, research methodology, statistics, and applied linguistics. The list of post-graduate students supervised by Charles Alderson is several pages long, and has resulted in a worldwide language testing alumni network.