Installing and Configuring Sun Java System Calendar Server 6.3 with Sun Cluster 3.1 Software

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

OpenOffice.org News Highlights Table of Contents October 2004

OpenOffice.org News Highlights. Table of Contents. October 2004: Replacing FrameMaker with OOo Writer; Germans claim Linux lowers costs; Open approach offers Mindef more choice; Ballmer calls for horse-based attack on Star Office; Open for Business - The 2004 OfB Choice Awards. September 2004: OpenOffice.org reveals marketing ambitions; Nonprofit brings Linux and open source to Hawaii; UK charity builds Linux network on a shoestring; NSW opens door to Linux offers; Leading Edge Forum Report 2004 - Open Source: Open for Business.

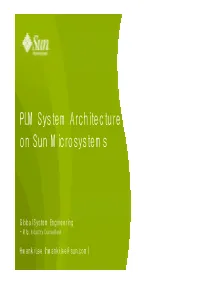

PLM System Architecture on Sun Microsystems

PLM System Architecture on Sun Microsystems. Global System Engineering - Mfg. Industry Consultant Hwanki Lee ([email protected]). Agenda: PLM on Sun; PLM System Architecture; Sun's Solutions. PLM on Sun. Sun Microsystems on PLM Market: hardware, infrastructure software, architecture and network computing expertise; PLM software and services including systems implementation, integration, consulting and outsourcing services. PLM solution components: Industry Specific Design and Collaboration Tools, Custom-specific EAI, Generic EAI Tools, PLM Software, Middleware, Web Server, WAS Server, Infrastructure, Sun Java System Solution, Database, MySQL, NAS / SAN Storage / Backup, StorageTek product family, OS / Server Platform, Solaris, SPARC Enterprise Server product family. Sun Java Systems: Sun Java Systems and J2EE Technologies, Service Consultants & Integration Services. Ensures scalability, availability, and security in PLM environments that run Web, networking, Java, XML, J2EE, JSPs, and Servlets, and provides a 24x7 operating environment. Solutions provided by Sun: Developer Environment, Database / Storage Platform, Application Infrastructure, Virtualization, xVM, Ops Center, Grid Engine, Operating System, Servers, Systems, Storage, Backup, Microprocessor. Reference Architecture: UGS TeamCenter User configuration; MatrixOne Engineering Central Sizing Study; PTC Windchill User configuration. Architecture for the R&D Datacenter: High Performance HPC / CAD Farm, Throughput Computing PLM Server, Visual Workstation, Virtualization on X86, 1Socket/8Core/64 Thread, Virtualization on Solaris 10, Domain C, Domain B

Sun Java System Application Server 8 Developer's Guide

Sun Java™ System Application Server 8 Developer’s Guide Sun Microsystems, Inc. 4150 Network Circle Santa Clara, CA 95054 U.S.A. Part No: 817-6087 Copyright © 2004 Sun Microsystems, Inc., 4150 Network Circle, Santa Clara, California 95054, U.S.A. All rights reserved. Sun Microsystems, Inc. has intellectual property rights relating to technology embodied in the product that is described in this document. In particular, and without limitation, these intellectual property rights may include one or more of the U.S. patents listed at http://www.sun.com/patents and one or more additional patents or pending patent applications in the U.S. and in other countries. THIS PRODUCT CONTAINS CONFIDENTIAL INFORMATION AND TRADE SECRETS OF SUN MICROSYSTEMS, INC. USE, DISCLOSURE OR REPRODUCTION IS PROHIBITED WITHOUT THE PRIOR EXPRESS WRITTEN PERMISSION OF SUN MICROSYSTEMS, INC. U.S. Government Rights - Commercial software. Government users are subject to the Sun Microsystems, Inc. standard license agreement and applicable provisions of the FAR and its supplements. Use is subject to license terms. This distribution may include materials developed by third parties. Sun, Sun Microsystems, the Sun logo, Java, and the Java Coffee Cup logo are trademarks or registered trademarks of Sun Microsystems, Inc. in the U.S. and other countries. This product is covered and controlled by U.S. Export Control laws and may be subject to the export or import laws in other countries. Nuclear, missile, chemical biological weapons or nuclear maritime end uses or end users, whether direct or indirect, are strictly prohibited. Export or reexport to countries subject to U.S. -

Tiered Storage and the Sun Java System Messaging Server

Tiered Storage and Sun Java™ System Messaging Server. White Paper, June 2009. Abstract: Service providers face unrelenting pressure to increase storage for user mailboxes. This paper explains how using production products, such as Sun Communications Suite software, the Solaris ZFS™ file system, and a Sun Storage array, can cut storage costs by half, while performance (measured by messages per second) can be increased by more than 50 percent in environments with millions of users. Extensive load testing has shown that this single-rack solution provides a cost-effective message store for two million users. It is easily replicated and highly modular. Sun Microsystems, Inc. Table of Contents: Introduction: The Market Environment; Goals and Requirements (Performance, Scalability, Cost, Backup, Availability); Java System Messaging Server Message Store.

System Virtualization Support in Sun Java System Products

System Virtualization Support in Sun Java System Products, October 2009. This document is maintained by the Sun Java System team. Software Products Covered by this Statement: This document summarizes Sun support for Sun Java System products when used in conjunction with system virtualization products and features. It applies to Sun products contained in the following Sun Java System suites: ■ Sun GlassFish Portfolio ■ Sun Java Application Platform Suite ■ Sun Java Identity Management Suite ■ Sun Java Composite Application Platform Suite ■ Sun B2B Suite ■ Sun ESB Suite ■ Sun MDM Suite ■ Sun Java Web Infrastructure Suite ■ Sun Java Communications Suite. Refer to the Sun Java Enterprise System (Java ES) and Communications Suite product pages for more information on these suites. The Sun Java Availability Suite and Solaris Cluster are not addressed in this support statement. Refer to the Solaris Cluster product information for further details on Solaris Cluster's support for operating system virtualization. Introduction: A core capability of system virtualization offerings is the ability to execute multiple operating system (OS) instances on shared hardware. Functionally, an application deployed to an OS hosted in a virtualized environment is generally unaware that the underlying platform has been virtualized. Sun performs testing of its Sun Java System products on select system virtualization and OS combinations to help validate that the Sun Java System products continue to function on properly sized and configured virtualized environments as they do on non-virtualized systems. System Resource Sizing: The combination of being able to deploy multiple OS instances and applications on a single system and the ease by which system resources can be allocated to OS instances increases the likelihood of realizing undersized environments for your applications.

ASA-2009-033: A Security Vulnerability in Sun Java System Application Server May Expose an Application's WEB-INF and META-INF Content (Sun 245446)

A Security Vulnerability in Sun Java System Application Server May Expose an Application's WEB-INF and META-INF Content (Sun 245446) Original Release Date: January 28, 2009 Last Revised: January 28, 2009 Number: ASA-2009-033 Risk Level: None Advisory Version: 1.0 Advisory Status: Final 1. Overview: A new Sun Alert Notification from Sun Microsystems has been issued and is described below. Additional information about this issue may be found on the sunsolve.sun.com website, although a maintenance contract with Sun may be required to view the information. 245446 A Security Vulnerability in Sun Java System Application Server May Expose an Application's WEB-INF and META-INF Content Product: Sun Java System Application Server 8.1, Sun Java System Application Server 8.2 Category: Security Date Released: 21-Jan-2009 http://sunsolve.sun.com/search/document.do?assetkey=1-66-245446-1 The Common Vulnerabilities and Exposures project (cve.mitre.org) has assigned the name CVE-2009-0278 to this issue. 2. Avaya System Products using the Sun Java System Application Server on a Sun Microsystems Operating System: None 3. Additional Information: Additional information may also be available via the Avaya support website and through your Avaya account representative. Please contact your Avaya product support representative, or dial 1-800-242-2121, with any questions. 4. Disclaimer: ALL INFORMATION IS BELIEVED TO BE CORRECT AT THE TIME OF PUBLICATION AND IS PROVIDED "AS IS". AVAYA INC., ON BEHALF ITSELF AND ITS SUBSIDIARIES AND AFFILIATES (HEREINAFTER COLLECTIVELY REFERRED TO AS "AVAYA"), DISCLAIMS ALL WARRANTIES, EITHER EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE AND FURTHERMORE, AVAYA MAKES NO REPRESENTATIONS OR WARRANTIES THAT THE STEPS RECOMMENDED WILL ELIMINATE SECURITY OR VIRUS THREATS TO CUSTOMERS' SYSTEMS. -

Sun Java System Directory Server Enterprise Edition 6.3.1 Release Notes

Sun Java System Directory Server Enterprise Edition 6.3.1 Release Notes. Part No: 820–5817, May 2010. Copyright © 2010, Oracle and/or its affiliates. All rights reserved. This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. If this is software or related software documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, the following notice is applicable: U.S. GOVERNMENT RIGHTS Programs, software, databases, and related documentation and technical data delivered to U.S. Government customers are “commercial computer software” or “commercial technical data” pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. As such, the use, duplication, disclosure, modification, and adaptation shall be subject to the restrictions and license terms set forth in the applicable Government contract, and, to the extent applicable by the terms of the Government contract, the additional rights set forth in FAR 52.227-19, Commercial Computer Software License (December 2007). Oracle America, Inc., 500 Oracle Parkway, Redwood City, CA 94065.

Sunlook Template for Openoffice.Org and Staroffice

US Sun Role Manager 4.1 Manual Installation Guide. Sun Microsystems, Inc. 4150 Network Circle Santa Clara, CA 95054 U.S.A. Part No: 820-5794, September 2008. ©2008 Sun Microsystems, Inc. 4150 Network Circle Santa Clara, CA 95054 U.S.A. Sun Microsystems, Inc. has intellectual property rights relating to technology embodied in the product that is described in this document. In particular, and without limitation, these intellectual property rights may include one or more U.S. patents or pending patent applications in the U.S. and in other countries. U.S. Government Rights – Commercial software. Government users are subject to the Sun Microsystems, Inc. standard license agreement and applicable provisions of the FAR and its supplements. This distribution may include materials developed by third parties. Parts of the product may be derived from Berkeley BSD systems, licensed from the University of California. UNIX is a registered trademark in the U.S. and other countries, exclusively licensed through X/Open Company, Ltd. Sun, Sun Microsystems, the Sun logo, the Solaris logo, the Java Coffee Cup logo, docs.sun.com, Java, Solaris, J2EE, JDBC, JDE and JVM are trademarks or registered trademarks of Sun Microsystems, Inc. in the U.S. and other countries. All SPARC trademarks are used under license and are trademarks or registered trademarks of SPARC International, Inc. in the U.S. and other countries. Products bearing SPARC trademarks are based upon an architecture developed by Sun Microsystems, Inc. ORACLE is a registered trademark of ORACLE CORPORATION. The OPEN LOOK and Sun™ Graphical User Interface was developed by Sun Microsystems, Inc.

We Listen. We Strategize. We Execute

We Listen. We Strategize. We Execute. Enterprise Integration Solutions and Consulting Services. The XTS Group is an ERP solutions provider. We can help maximize the efficiency and productivity of our clients' business processes through best practices and outstanding innovations. Our strategic global model combines the best of on-shore and off-shore software design and development to deliver best-in-class solutions to our clients. XTS Group provides expertise in implementation, integration, and optimization. We provide: 1. Architecture planning 2. Implementation services 3. Custom development 4. Systems integration 5. Operations planning 6. Optimization services.

CONTACT A RECRUITER TODAY: [email protected] [email protected] Corporate Headquarters: 1304 W Walnut Hill Ln., Suite 220, Irving, TX 75038 USA. US Offices: (972) 714 – 9987 ext 146, (972) 580 – 0987 ext 146. www.xtsgroup.com. India: 545, Road# 27, Jubilee Hills, Hyderabad, AP 500 033, India. United Kingdom: 95 Wilton Road, Suite 3, London, SW1V 1BZ, United Kingdom. Singapore: No.1 North Bridge Road, High Street Center # 22-07, Singapore 179094.

SAP, an acronym for Systems, Applications and Products in Data Processing is an enterprise resource planning (ERP) software product capable of integrating multiple business applications, with each

NetWeaver is an application builder from SAP for integrating business processes and databases from a number of sources while exploiting the leading Web services technologies.

SAP Exchange Infrastructure (SAP XI) is SAP's Enterprise Application Integration (EAI) software, a component of the NetWeaver

The application is designed to be installed while minimizing disruptions to the daily flow of business. The goal is that customers will use MDM as a

Controlling provides you with information for management decision-making. It facilitates coordination, monitoring and optimization of

Lifetime Support Policy: Oracle and Sun Systems Software

Oracle and Sun System Software and Operating Systems. Oracle Lifetime Support Policy. September 21, 2021 | Version 1.00. Copyright © 2021, Oracle and/or its affiliates. This brochure provides lifecycle timeframes for system software that is typically sold standalone, but used in conjunction with specific Oracle or Sun products. Product areas include server and storage management, Sun third-party, communication and collaboration and Sun Ray. Additional Sun software is located in the Oracle Fusion Middleware, Oracle Technology and Oracle Applications Lifetime Support Policy brochures located on www.oracle.com. Please see the Oracle Software Technical Support Policies document located on www.oracle.com for additional terms and descriptions. TABLE OF CONTENTS: Oracle Lifetime Support; Oracle Communications Session Border Controller (Formerly Acme Packet Net-Net); Oracle Communications Tunneled Session Controller (Formerly Acme Packet Net-Net); Oracle Communications Application Session Controller (Formerly Acme Packet Net-Net); Oracle Communications Unified Session Manager (Formerly Acme Packet Net-Net); Oracle Communications Subscriber Aware Load Balancer (Formerly Acme Packet Net-Net); Oracle Communications Border Gateway (Formerly Acme Packet Net-Net Border Gateway); Oracle Communications Core Session Manager; Oracle Communications Session Router (Formerly Acme Packet Net-Net Session Router); Oracle Communications Security Gateway (Formerly Acme Packet Net-Net Security Gateway); Oracle Communications Session Monitor Family of

Sun Java System Directory Server 5.2 Administration Guide 2005Q1

Sun Java™ System Directory Server 5.2 Administration Guide 2005Q1 Sun Microsystems, Inc. 4150 Network Circle Santa Clara, CA 95054 U.S.A. Part No: 817-7613-10 Copyright © 2005 Sun Microsystems, Inc., 4150 Network Circle, Santa Clara, California 95054, U.S.A. All rights reserved. Sun Microsystems, Inc. has intellectual property rights relating to technology embodied in the product that is described in this document. In particular, and without limitation, these intellectual property rights may include one or more of the U.S. patents listed at http://www.sun.com/patents and one or more additional patents or pending patent applications in the U.S. and in other countries. THIS PRODUCT CONTAINS CONFIDENTIAL INFORMATION AND TRADE SECRETS OF SUN MICROSYSTEMS, INC. USE, DISCLOSURE OR REPRODUCTION IS PROHIBITED WITHOUT THE PRIOR EXPRESS WRITTEN PERMISSION OF SUN MICROSYSTEMS, INC. U.S. Government Rights - Commercial software. Government users are subject to the Sun Microsystems, Inc. standard license agreement and applicable provisions of the FAR and its supplements. This distribution may include materials developed by third parties. Parts of the product may be derived from Berkeley BSD systems, licensed from the University of California. UNIX is a registered trademark in the U.S. and in other countries, exclusively licensed through X/Open Company, Ltd. Sun, Sun Microsystems, the Sun logo, Java, Solaris, JDK, Java Naming and Directory Interface, JavaMail, JavaHelp, J2SE, iPlanet, the Duke logo, the Java Coffee Cup logo, the Solaris logo, the SunTone Certified logo and the Sun ONE logo are trademarks or registered trademarks of Sun Microsystems, Inc. in the U.S. -

Installation Guide

Installation Guide. Please select your version: iPlanet 6.x Server; Sun Java System Web Server 6.x. Installation Instructions for iPlanet 6.x Server. Step 1: Download Symantec Root and Intermediate CA Certificate 1. Download the Root CA from here. 2. Save the file as Root.txt 3. Download the Intermediate CA certificate from here. Select the appropriate Intermediate CA certificate for your SSL Certificate type. Copy the Intermediate CA certificate and paste it into Notepad. 4. Save the file as intermediate.txt Step 2: Install Symantec Root and Intermediate CA Certificate 1. Select the server instance to manage, click Manage, and make sure to choose https-yourdomain 2. Click Security 3. Click Install Certificate 4. Check the Trusted Certificate Authority option and enter the key pair password 5. Enter a Certificate Name. For example: Symantec Root CA 6. Select Server Certificate Chain. 7. Enter the key pair file password. 8. Enter a Certificate Name. For example: Symantec Primary Intermediate. 9. Select Message text (with headers). 10. Open intermediate.txt and copy the entire certificate 11. Paste the text from the Intermediate certificate into the box and click OK. NOTE: Do not shut down and restart the server. Proceed to the instructions below first. Step 3: Manually set both server / client trust for the Symantec Intermediate CA Certificate NOTE: By default, iPlanet 6 does not automatically trust the Symantec Intermediate CA certificate once it has been added to the trusted CA store. The Symantec Intermediate CA certificate has to be manually trusted. 1. Select the server instance to manage and click Manage.