Enlightening the I/O Path: a Holistic Approach for Application Performance

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Low Level Tracing for Latency Analysis

Low Level Tracing for Latency Analysis: From Baremetal to Hardware Tracing Blocks. Suchakrapani Datt Sharma & Thomas Bertauld, Oct 12, 2016, École Polytechnique de Montréal, Laboratoire DORSAL.

whoami: Suchakra ● PhD student, Computer Engineering (Prof Michel Dagenais), DORSAL Lab, École Polytechnique de Montréal – UdeM ● Works on debugging, tracing and trace aggregation (LTTng, eBPF), hardware tracing and VMs ● Loves poutine, samosas and bikes

POLYTECHNIQUE MONTREAL – Suchakrapani Datt Sharma

whoami: Thomas ● MSc student, Computer Engineering (Prof Michel Dagenais), DORSAL Lab, École Polytechnique de Montréal – UdeM ● Worked on embedded systems tracing, baremetal systems, trace analysis and now in financial-tech domain ● Loves computer games

Agenda: Latency ● Introduction ● Tools and techniques. Hardware Tracing ● Intel Processor Trace ● ARM CoreSight ● Hardware trace based analysis. Baremetal Tracing ● Heterogeneous system challenges ● Low level traces with barectf

Latency [figure sequence: "Super Complex Stuff"; hardware interrupt; delay between interrupt and do_IRQ(); interrupt handler; interrupt handler delay]

Oracle Solaris 11 Express What's

ORACLE SOLARIS 11 EXPRESS 2010.11 | WHAT’S NEW

Oracle Solaris is the industry leading operating system for the enterprise. Oracle Solaris 11 Express 2010.11 raises the bar on the innovation introduced in Oracle Solaris 10, with a unique feature set that few other operating systems can offer. Oracle Solaris 11 Express 2010.11 has been tested and optimized for Oracle hardware and software, and is an integral part of Oracle's combined hardware and software portfolio.

Oracle Solaris 11 Express 2010.11 provides customers with the latest access to Oracle Solaris 11 technology, allowing developers, architects and administrators to test and deploy within enterprise environments and greatly simplify their day to day operations. Oracle Solaris 11 Express 2010.11 has the reliability, availability, and serviceability that you'd expect from a leading enterprise operating system.

With new features such as network based package management tools to greatly decrease planned system downtime and provide for a completely safe system upgrade, built-in network virtualization and resource control management for an unprecedented level of flexibility for application consolidation, and on-disk ZFS encryption to provide the highest levels of security in your data center, Oracle Solaris 11 Express 2010.11 is the most exciting release of the Oracle Solaris platform to date.

NEW FEATURE HIGHLIGHTS
• Oracle Solaris 10 Zones for business investment protection
• Built-in network virtualization and resource management for enhanced server workload consolidation
• Greatly reduced planned and unplanned downtime with new package management tools with safe system upgrade
• New optimizations and features designed to deliver proven scalability and reliability as an integrated component of Oracle's Exadata and Exalogic systems

Yocto Project and Embedded OS

Yocto Project and Embedded OS. Presenters: Jeffrey Osier-Mixon, Kevin McGrath. July 28th 2015, 11:00 PDT (GMT -7).

Topics:
• What is the Yocto Project and why is it important?
• Working with an open source collaborative project & community
• Yocto Project concepts in a nutshell: environment, metadata, tools
• Using Yocto cross-compiler
• Running kernel via qemu
• Module installation, virtio, etc.
• Lessons learned, capabilities

Our guests

Jeffrey Osier-Mixon: Jeff "Jefro" Osier-Mixon works for Intel Corporation in Intel's Open Source Technology Center, where his current role is community manager for the Yocto Project. Jefro also works as a community architect and consultant for a number of open source projects and speaks regularly at open source conferences worldwide. He has been deeply involved with open source since the early 1990s.

Kevin McGrath: Instructor at Oregon State University. I primarily teach the operating systems sequence and the senior capstone project sequence, but have taught architecture, assembly programming, introductory programming classes, and just about anything else that needs someone to teach it. While my background is in network security and high performance computing (computational physics), today I mostly live in the embedded space, leading to the “ECE wannabe” title in my department.

Oleg Verge (Moderator): Technical Program Manager Intel Higher Education, System Engineer MCSE, CCNA, VCP

Intel® IoT Developer Kit v1.0 [figure: kit components]
• Hardware components
• Software image: helpful Linux* tools (GCC tool chain, perf, oProfile, etc.), required drivers (Wi-Fi*, Bluetooth®, etc.), useful API libraries, and daemons like LighttPD and Node.js
• Intel XDK
• Support for various IDEs: for C/C++; for java, node.js, html5; for Arduino* sketches; for visual programming
• Cloud services: Intel IoT Analytics includes capabilities for data collection, storage, visualization, and analysis of sensor data

Rootfs Made Easy with Buildroot

Kernel Recipes 2013. Rootfs made easy with Buildroot: How kernel developers can finally solve the rootfs problem. Thomas Petazzoni, Bootlin, [email protected]

Kernel, drivers and embedded Linux - Development, consulting, training and support - https://bootlin.com

Thomas Petazzoni
• CTO and embedded Linux engineer at Bootlin
• Embedded Linux development: kernel and driver development, system integration, boot time and power consumption optimization, consulting, etc.
• Embedded Linux training, Linux driver development training and Android system development training, with materials freely available under a Creative Commons license.
• We're hiring!
• http://bootlin.com
• Contributing the kernel support for the new Armada 370 and Armada XP ARM SoCs from Marvell (widely used in NAS devices).
• Major contributor to Buildroot, an open-source, simple and fast embedded Linux build system
• Living in Toulouse, south west of France

Doing kernel development is awesome, but...

A kernel without a root filesystem is kind of useless

input: ImExPS/2 Generic Explorer Mouse as /devices/fpga:07/serio1/input/input1
Root-NFS: no NFS server address
VFS: Unable to mount root fs via NFS, trying floppy.
VFS: Cannot open root device "(null)" or unknown-block(2,0)
Please append a correct "root=" boot option; here are the available partitions:
Kernel panic - not syncing: VFS: Unable to mount root fs on unknown-block(2,0)

Solutions often used by kernel dev
• A complete Linux distribution
+ Readily available
- Large (can hardly be used as an initramfs)
- Not available for all architectures
- Not easy to customize.

Microprocessor

A microprocessor is a computer processor that incorporates the functions of a central processing unit on a single integrated circuit (IC), or at most a few integrated circuits. The microprocessor is a multipurpose, clock driven, register based, digital-integrated circuit that accepts binary data as input, processes it according to instructions stored in its memory, and provides results as output. Microprocessors contain both combinational logic and sequential digital logic. Microprocessors operate on numbers and symbols represented in the binary numeral system. The integration of a whole CPU onto a single chip or on a few chips greatly reduced the cost of processing power, increasing efficiency. Integrated circuit processors are produced in large numbers by highly automated processes, resulting in a low per-unit cost. Single-chip processors increase reliability because there are many fewer electrical connections to fail. As microprocessor designs improve, the cost of manufacturing a chip (with smaller components built on a semiconductor chip the same size) generally stays the same. Before microprocessors, small computers had been built using racks of circuit boards with many medium- and small-scale integrated circuits. Microprocessors combined this into one or a few large-scale ICs. Continued increases in microprocessor capacity have since rendered other forms of computers almost completely obsolete (see history of computing hardware), with one or more microprocessors used in everything from the smallest embedded systems

Yocto Project Application Developer's Guide Is for the 1.4.3 Release of the Yocto Project

Jessica Zhang, Intel Corporation <[email protected]>. Copyright © 2010-2014 Linux Foundation. Permission is granted to copy, distribute and/or modify this document under the terms of the Creative Commons Attribution-Share Alike 2.0 UK: England & Wales [http://creativecommons.org/licenses/by-sa/2.0/uk/] as published by Creative Commons.

Manual Notes
• This version of the Yocto Project Application Developer's Guide is for the 1.4.3 release of the Yocto Project. To be sure you have the latest version of the manual for this release, go to the Yocto Project documentation page [http://www.yoctoproject.org/documentation] and select the manual from that site. Manuals from the site are more up-to-date than manuals derived from the Yocto Project released TAR files.
• If you located this manual through a web search, the version of the manual might not be the one you want (e.g. the search might have returned a manual much older than the Yocto Project version with which you are working). You can see all Yocto Project major releases by visiting the Releases [https://wiki.yoctoproject.org/wiki/Releases] page. If you need a version of this manual for a different Yocto Project release, visit the Yocto Project documentation page [http://www.yoctoproject.org/documentation] and select the manual set by using the "ACTIVE RELEASES DOCUMENTATION" or "DOCUMENTS ARCHIVE" pull-down menus.
• To report any inaccuracies or problems with this manual, send an email to the Yocto Project discussion group at [email protected] or log into the freenode #yocto channel.

Template for Documents at AI

OSS Disclosure Document. Date: 27-Apr-2018. CM-AI/PJ-CC. OSS Licenses used in Suzuki Project.

Table of contents
1 Overview
2 OSS Licenses used in the project
3 Package details for OSS Licenses usage
3.1 7 Zip - LZMA SDK
3.2 ACL 2.2.51
3.3 Alsa Libraries 1.0.27.2
3.4 AES - Advanced Encryption Standard 1.0
3.5 Alsa Plugins 1.0.26
3.6 Alsa Utils 1.0.27.2
3.7 APMD 3.2.2
3.8 Atomic_ops
3.9 Attr 2.4.46

LKP-Tests Linux Kernel Performance Test Tool What Is LKP-Tests

LKP-tests: Linux kernel performance test tool

What is LKP-tests
● Framework to run benchmarks
  ○ Fetch/install the benchmarks
  ○ Prepare test environment
    ■ For example, format disk
  ○ Run benchmark with various parameters
    ■ Collect performance statistics
  ○ Benchmark/performance statistics parsing
● Framework for performance analysis
  ○ Result compare
● Used for benchmark running and reproducing regression captured by 0-Day

Highlights
● Rich benchmarks support
● Capture performance statistics (monitors) in addition to benchmark score
  ○ For example, perf stat, perf profile, vmstat, etc.
● Compare/analyze all performance statistics data (benchmark and monitors)
  ○ Unified data model

Benchmarks
● Integrated ~50 benchmarks
  ○ Fetch/install benchmarks
  ○ Run benchmarks with various parameters
  ○ Parse result of benchmarks
● Mostly micro-benchmarks, several macro-benchmarks
● Mostly server workload

Benchmark List - 1
● Scheduler
  ○ Hackbench, perf-bench-sched-pipe, etc.
● File system/IO
  ○ Fio, fsmark, iozone, dbench, dd, fileio, postmark, etc.
● Network
  ○ Netperf, iperf, apachebench, ku-latency, nepim, netpipe, nuttcp, qperf, siege, sockperf, stutter, tbench, thrulay, etc.
● Scalability
  ○ Reaim, aim7, will-it-scale, etc.
● Memory management
  ○ vm-scalability, chromeswap, exit_free, pft, perf-bench-numa-mem, pmbench, swapin, tlbflush, etc.

Benchmark List - 2
● Database
  ○ Pgbench, oltp, etc.
● Noise
  ○ Ftq, fwq, etc.
● HPC
  ○ Hpcc, linpack
● Workload emulation
  ○ Blogbench, ebizzy (web application server), kbuild, pbzip2, pigz, pixz, plzip, pxz, etc.
● Others

List of Applications Updated in ARL #2581

| Application Name | Publisher |
| --- | --- |
| .NET Core Runtime 3.0 Preview | Microsoft |
| .NET Core Toolset 3.1 Preview | Microsoft |
| .NET Framework 4.5 | Microsoft |
| .NET Framework 4.6 | Microsoft |
| .NET Framework Developer Pack 4.7 | Microsoft |
| .NET Framework Multi-Targeting Pack for Windows Store Apps 4.5 RC | Microsoft |
| .NET Framework SDK 4.8 | Microsoft |
| _connect.BRAIN 4.8 | Bizerba |
| 2200 TapeStation Software 3.1 | Agilent Technologies |
| 2200 TapeStation Software 3.2 | Agilent Technologies |
| 24x7 Automation Suite 3.6 | SoftTree Technologies |
| 3500 Rack Configuration Software 6.0 | Bently Nevada |
| 365 16.0 | Microsoft |
| 3D Sprint 2.10 | 3D Systems |
| 3D Sprint 2.11 | 3D Systems |
| 3D Sprint 2.12 | 3D Systems |
| 3D Viewer | Microsoft |
| 3PAR Host Explorer VMware 4.0 | HP |
| 4059 Extended Edition Attendant Console 2.1 | ALE International |
| 4uKey 1.4 | Tenorshare |
| 4uKey 1.6 | Tenorshare |
| 4uKey 2.2 | Tenorshare |
| 50 Accounts 21.0 | Sage Group |
| 50 Accounts 25.1 | Sage Group |
| 793 Controller Software 5.8 | MTS Systems |
| 793 Controller Software 5.9 | MTS Systems |
| 793 Controller Software 6.1 | MTS Systems |
| 7-Zip 19.00 | Igor Pavlov |
| ABAQUS 2018 Student | Dassault Systemes |
| ABAQUS 2019 Student | Dassault Systemes |
| Abstract 73.0 | Elastic Projects |
| ABU Service 14.10 | Teradata |
| Access Client 3.5 | Barracuda Networks |
| Access Client 3.7 | Barracuda Networks |
| Access Client 4.1 | Barracuda Networks |
| Access Module for Azure 15.1 | Teradata |
| Access Security Gateway (ASG) Soft Key | Avaya |
| AccuNest 10.3 | Gerber Technology |
| AccuNest 11.0 | Gerber Technology |
| ACDSee 2.3 Free | ACD Systems |
| ACDSee 2.4 Free | ACD Systems |
| ACDSee Photo Studio 2019 Professional | ACD Systems |

Systemtap Times

Systemtap times. April 2009. Frank Ch. Eigler <[email protected]>, systemtap lead.

why trace/probe ● to monitor future – background monitoring, flight recording – programmed response ● to debug present – symbolic, source-level exploration – unforeseen problems ● to analyze past – collect traces – analyze dumps

rich capabilities ● system-wide (kernel + userspace) programmable tracing/probing ● compatible with a wide range of kernels, distributions ● operates on live system, no patch/reconfigure/recompile/reboot ● measure time, access any data, explore control flow, correlate events, inject faults ● integrated access to multiple tracing facilities

consider alternatives ● ftrace – hard-coded, kernel-only, single-user – we share instrumentation hooks, some infrastructure ● ksplice – unprotected, kernel-only, x86 – maybe let's share code recompilation process ● dtrace – not available on linux – we share ambitions

examples ● http://sourceware.org/systemtap/examples/ ● http://sourceware.org/systemtap/wiki/WarStories ● ordinary – log events, filtered + correlated + summarized – call graphs with variables – measure times/values, indexed by anything – graph cpu/net/disk utilization, act upon thresholds ● esoteric – kernel-enforced file naming policy filters – security bug band-aids

operation part 1 ● compile probe script foo.stp: – parse script – combine it with tapset (library of scripts by experts) – combine it with debugging information, probe catalogues, event source metadata – generate C code with safety checks – compile into kernel module with kbuild – result: vanilla kernel module

operation part 2 ● run probe module foo.ko: – load into kernel – detach (flight-recorder mode) or consume trace live – unload ● probe module may be cached, reused, shared with other machines running same kernel ● sysadmins can authorize others to run precompiled modules

the “upstream” question ● but it already works on your machine – not a driver; not a filesystem – uses vanilla module APIs – a little like X.org or glibc or kgdb – or even latencytop ..

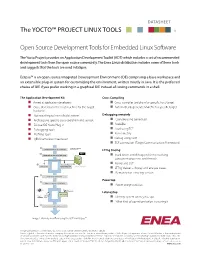

Open Source Development Tools for Embedded Linux Software The

DATASHEET: The YOCTO™ PROJECT LINUX TOOLS

Open Source Development Tools for Embedded Linux Software

The Yocto Project provides an Application Development Toolkit (ADT) which includes a set of recommended development tools from the open source community. The Enea Linux distribution includes some of these tools and suggests that the tools are used in Eclipse. Eclipse™ is an open source Integrated Development Environment (IDE) comprising a base workspace and an extensible plug-in system for customizing the environment, written mostly in Java. It is the preferred choice of IDE if you prefer working in a graphical IDE instead of issuing commands in a shell.

The Application Development Kit
• Aimed at application developers
• Not requiring to learn a build system
• Architecture specific cross-toolchain and sysroot
• Eclipse IDE Yocto Plug-in
• Debugging tools
• Profiling tools
• QEMU emulator integration
• TCF connection (Target Communication Framework)

Cross Compiling
• Cross-compiler toolchain for specific host/target
• Cross-develop on the host machine for the target
• Automatically generate Makefile for specific target hardware

Debugging remotely
• Compile using generated Makefile
• Load using TCF
• Run remotely
• Debug using GDB

LTTng tracing
• Track down and debug problems involving concurrent processes and threads.
• Kernel and UST
• LTTng Viewer – display and analyze traces
• Remotely run a tracing session

Powertop
• Power usage statistics

Latencytop
• Identify system latency hiccups
• What kind of operation/action is causing it

Image 1: Provides workflow using the ADT and Eclipse™

The Linux trademark is owned by Linus Torvalds and administered by the Linux Mark Institute.

The Linux Kernel: Configuring the Kernel Part 22

In this article, we will continue to configure the kernel hacks and then we will configure the whole security system. The next feature to configure is needed by Alpha and s390 processors (Force weak percpu definitions). This feature offers a fix for an addressing issue commonly seen in such processors. Other processors do not need this feature enabled. Kernel dumps can be tested with this special debugging tool (Linux Kernel Dump Test Tool Module). This software will allow a kernel developer to trigger a fake error that will cause a kernel dump. The kernel developers can then ensure that the dumps complete successfully. The kernel offers some different error injection modules that allow kernel developers to test the notifiers (CPU notifier error injection module), (PM notifier error injection module), and (Memory hotplug notifier error injection module). A notifier informs the system that the hardware is present, which is important for hotplugging. These error injection modules trigger an error in this notification system so developers can test the notification system's error handling abilities. The "Fault-injection framework" driver offers various tools for testing fault handling. The "Latency measuring infrastructure" driver provides the LatencyTOP tool used to find the userspace object that is blocking/interfering with a kernel execution/task. Next, we have a submenu titled "Tracers" that contains a list of various tracers. A tracer is a piece of code that watches various kernel functions. Every time a particular function starts, a tracer will be called to watch the function.
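
The idea of a tracer being called each time a watched function starts can be illustrated with a small user-space analogy. The sketch below is not kernel code and does not use any kernel tracer; it relies on Python's `sys.setprofile` hook, and the names `make_tracer`, `read_block`, `write_block`, and `run_traced` are hypothetical, introduced only for this illustration.

```python
import sys


def make_tracer(watched, log):
    """Return a profile hook that records each entry into a watched function."""
    def tracer(frame, event, arg):
        # The "call" event fires every time a Python function starts executing.
        if event == "call" and frame.f_code.co_name in watched:
            log.append(frame.f_code.co_name)
    return tracer


def read_block():
    # Hypothetical function that the tracer watches.
    return 42


def write_block():
    # Hypothetical function that the tracer ignores.
    return 0


def run_traced():
    """Run a few calls with the hook installed and return what it recorded."""
    log = []
    sys.setprofile(make_tracer({"read_block"}, log))
    try:
        read_block()
        write_block()
        read_block()
    finally:
        sys.setprofile(None)  # always detach the hook again
    return log


if __name__ == "__main__":
    print(run_traced())
```

Only entries into the watched function are recorded, which mirrors the description above: the hook runs at function start and decides whether that function is one it watches.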