Liff: Light Field Features in Scale and Depth

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Multi-Perspective Stereoscopy from Light Fields

Multi-Perspective stereoscopy from light fields The MIT Faculty has made this article openly available. Please share how this access benefits you. Your story matters. Citation Changil Kim, Alexander Hornung, Simon Heinzle, Wojciech Matusik, and Markus Gross. 2011. Multi-perspective stereoscopy from light fields. ACM Trans. Graph. 30, 6, Article 190 (December 2011), 10 pages. As Published http://dx.doi.org/10.1145/2070781.2024224 Publisher Association for Computing Machinery (ACM) Version Author's final manuscript Citable link http://hdl.handle.net/1721.1/73503 Terms of Use Creative Commons Attribution-Noncommercial-Share Alike 3.0 Detailed Terms http://creativecommons.org/licenses/by-nc-sa/3.0/ Multi-Perspective Stereoscopy from Light Fields Changil Kim1,2 Alexander Hornung2 Simon Heinzle2 Wojciech Matusik2,3 Markus Gross1,2 1ETH Zurich 2Disney Research Zurich 3MIT CSAIL v u s c Disney Enterprises, Inc. Input Images 3D Light Field Multi-perspective Cuts Stereoscopic Output Figure 1: We propose a framework for flexible stereoscopic disparity manipulation and content post-production. Our method computes multi-perspective stereoscopic output images from a 3D light field that satisfy arbitrary prescribed disparity constraints. We achieve this by computing piecewise continuous cuts (shown in red) through the light field that enable per-pixel disparity control. In this particular example we employed gradient domain processing to emphasize the depth of the airplane while suppressing disparities in the rest of the scene. Abstract tions of autostereoscopic and multi-view autostereoscopic displays even glasses-free solutions become available to the consumer. This paper addresses stereoscopic view generation from a light field. We present a framework that allows for the generation However, the task of creating convincing yet perceptually pleasing of stereoscopic image pairs with per-pixel control over disparity, stereoscopic content remains difficult. -

Light Field Saliency Detection with Deep Convolutional Networks Jun Zhang, Yamei Liu, Shengping Zhang, Ronald Poppe, and Meng Wang

1 Light Field Saliency Detection with Deep Convolutional Networks Jun Zhang, Yamei Liu, Shengping Zhang, Ronald Poppe, and Meng Wang Abstract—Light field imaging presents an attractive alternative � � � �, �, �∗, �∗ … to RGB imaging because of the recording of the direction of the � incoming light. The detection of salient regions in a light field �� �, �, �1, �1 �� �, �, �1, �540 … image benefits from the additional modeling of angular patterns. … For RGB imaging, methods using CNNs have achieved excellent � � results on a range of tasks, including saliency detection. However, �� �, �, ��, �� Micro-lens … it is not trivial to use CNN-based methods for saliency detection Main lens Micro-lens Photosensor image on light field images because these methods are not specifically array �� �, �, �375, �1 �� �, �, �375, �540 (a) (b) designed for processing light field inputs. In addition, current � � ∗ ∗ light field datasets are not sufficiently large to train CNNs. To �� � , � , �, � … overcome these issues, we present a new Lytro Illum dataset, �� �−4, �−4, �, � �� �−4, �4, �, � … which contains 640 light fields and their corresponding ground- … truth saliency maps. Compared to current light field saliency � � � � , � , �, � datasets [1], [2], our new dataset is larger, of higher quality, � 0 0 … contains more variation and more types of light field inputs. Sub-aperture Main lens Micro-lens Photosensor image This makes our dataset suitable for training deeper networks array �� �4, �−4, �, � �� �4, �4, �, � and benchmarking. Furthermore, we propose a novel end-to- (c) (d) end CNN-based framework for light field saliency detection. Specifically, we propose three novel MAC (Model Angular Fig. 1. Illustrations of light field representations. (a) Micro-lens image Changes) blocks to process light field micro-lens images. -

Convolutional Networks for Shape from Light Field

Convolutional Networks for Shape from Light Field Stefan Heber1 Thomas Pock1,2 1Graz University of Technology 2Austrian Institute of Technology [email protected] [email protected] Abstract x η x η y y y y Convolutional Neural Networks (CNNs) have recently been successfully applied to various Computer Vision (CV) applications. In this paper we utilize CNNs to predict depth information for given Light Field (LF) data. The proposed method learns an end-to-end mapping between the 4D light x x field and a representation of the corresponding 4D depth ξ ξ field in terms of 2D hyperplane orientations. The obtained (a) LF (b) depth field prediction is then further refined in a post processing step by applying a higher-order regularization. Figure 1. Illustration of LF data. (a) shows a sub-aperture im- Existing LF datasets are not sufficient for the purpose of age with vertical and horizontal EPIs. The EPIs correspond to the training scheme tackled in this paper. This is mainly due the positions indicated with dashed lines in the sub-aperture im- to the fact that the ground truth depth of existing datasets is age. (b) shows the corresponding depth field, where red regions inaccurate and/or the datasets are limited to a small num- are close to the camera and blue regions are further away. In the EPIs a set of 2D hyperplanes is indicated with yellow lines, where ber of LFs. This made it necessary to generate a new syn- corresponding scene points are highlighted with the same color in thetic LF dataset, which is based on the raytracing software the sub-aperture representation in (b). -

Head Mounted Display Optics II

Head Mounted Display Optics II Gordon Wetzstein Stanford University EE 267 Virtual Reality Lecture 8 stanford.edu/class/ee267/ Lecture Overview • focus cues & the vergence-accommodation conflict • advanced optics for VR with focus cues: • gaze-contingent varifocal displays • volumetric and multi-plane displays • near-eye light field displays • Maxwellian-type displays • AR displays Magnified Display • big challenge: virtual image appears at fixed focal plane! d • no focus cues d’ f 1 1 1 + = d d ' f Importance of Focus Cues Decreases with Age - Presbyopia 0D (∞cm) 4D (25cm) 8D (12.5cm) 12D (8cm) Nearest focus distance focus Nearest 16D (6cm) 8 16 24 32 40 48 56 64 72 Age (years) Duane, 1912 Cutting & Vishton, 1995 Relative Importance of Depth Cues The Vergence-Accommodation Conflict (VAC) Real World: Vergence & Accommodation Match! Current VR Displays: Vergence & Accommodation Mismatch Accommodation and Retinal Blur Blur Gradient Driven Accommodation Blur Gradient Driven Accommodation Blur Gradient Driven Accommodation Blur Gradient Driven Accommodation Blur Gradient Driven Accommodation Blur Gradient Driven Accommodation Top View Real World: Vergence & Accommodation Match! Top View Screen Stereo Displays Today (including HMDs): Vergence-Accommodation Mismatch! Consequences of Vergence-Accommodation Conflict • Visual discomfort (eye tiredness & eyestrain) after ~20 minutes of stereoscopic depth judgments (Hoffman et al. 2008; Shibata et al. 2011) • Degrades visual performance in terms of reaction times and acuity for stereoscopic vision -

Light Field Features in Scale and Depth

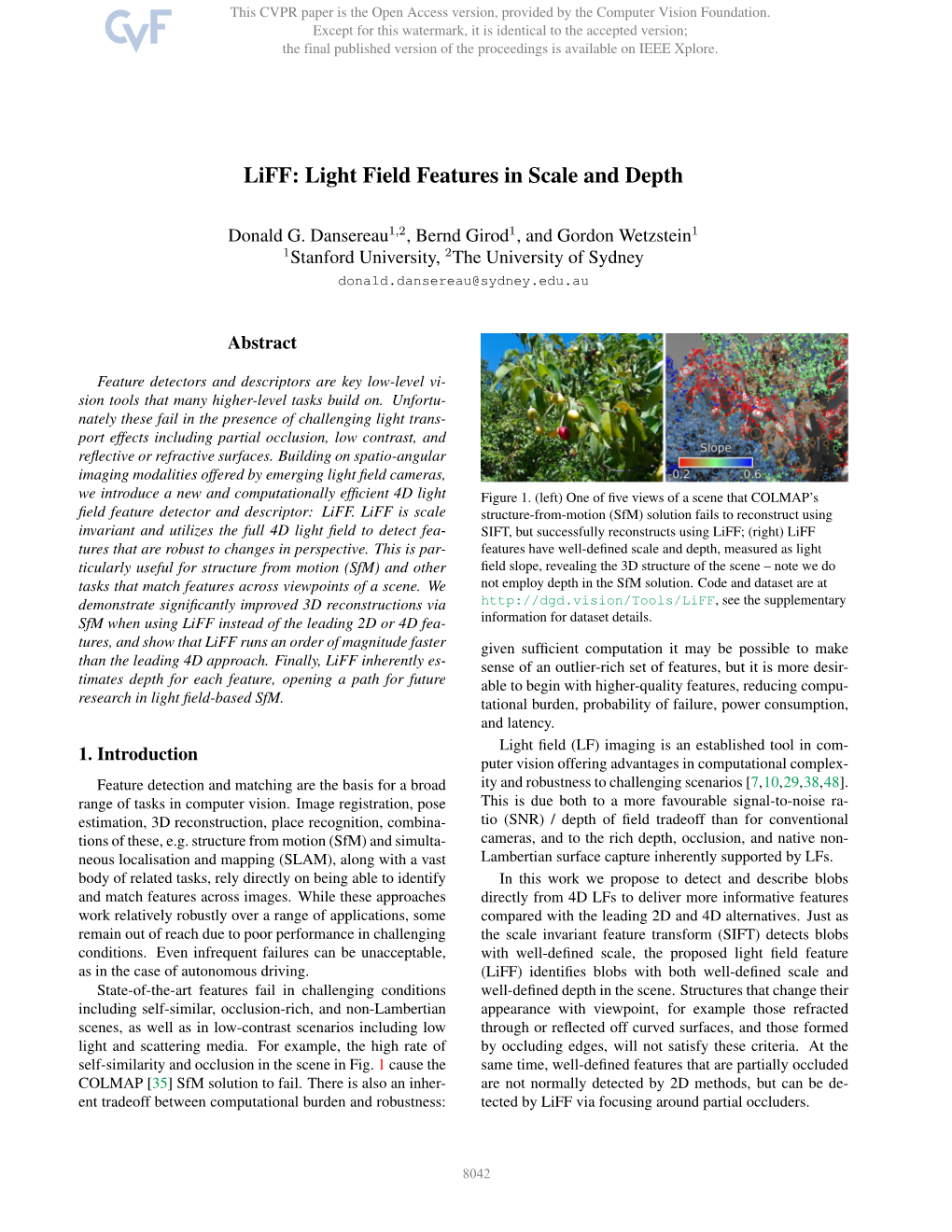

LiFF: Light Field Features in Scale and Depth Donald G. Dansereau1;2, Bernd Girod1, and Gordon Wetzstein1 1Stanford University, 2The University of Sydney Abstract—Feature detectors and descriptors are key low-level vision tools that many higher-level tasks build on. Unfortunately these fail in the presence of challenging light transport effects including partial occlusion, low contrast, and reflective or re- fractive surfaces. Building on spatio-angular imaging modalities offered by emerging light field cameras, we introduce a new and computationally efficient 4D light field feature detector and descriptor: LiFF. LiFF is scale invariant and utilizes the full 4D light field to detect features that are robust to changes in perspective. This is particularly useful for structure from motion (SfM) and other tasks that match features across viewpoints of a scene. We demonstrate significantly improved 3D reconstructions Fig. 1. (left) One of five views of a scene that COLMAP’s structure-from- via SfM when using LiFF instead of the leading 2D or 4D features, motion (SfM) solution fails to reconstruct using SIFT, but successfully and show that LiFF runs an order of magnitude faster than the reconstructs using LiFF; (right) LiFF features have well-defined scale leading 4D approach. Finally, LiFF inherently estimates depth and depth, measured as light field slope, revealing the 3D structure of for each feature, opening a path for future research in light the scene – note we do not employ depth in the SfM solution. Code field-based SfM. and dataset are at http://dgd.vision/Tools/LiFF, see the supplementary information for dataset details. -

Light Field Rendering Marc Levo Y and Pat Hanrahan Computer Science Department Stanford University

Proc. ACM SIGGRAPH ’96. (with corrections, July,1996) Light Field Rendering Marc Levo y and Pat Hanrahan Computer Science Department Stanford University Abstract •The display algorithms for image-based rendering require Anumber of techniques have been proposed for flying modest computational resources and are thus suitable for real- through scenes by redisplaying previously rendered or digitized time implementation on workstations and personal computers. views. Techniques have also been proposed for interpolating •The cost of interactively viewing the scene is independent of between views by warping input images, using depth information scene complexity. or correspondences between multiple images. In this paper,we •The source of the pre-acquired images can be from a real or describe a simple and robust method for generating newviews virtual environment, i.e. from digitized photographs or from from arbitrary camera positions without depth information or fea- rendered models. In fact, the twocan be mixed together. ture matching, simply by combining and resampling the available The forerunner to these techniques is the use of environ- images. The keytothis technique lies in interpreting the input ment maps to capture the incoming light in a texture map images as 2D slices of a 4D function - the light field. This func- [Blinn76, Greene86]. An environment map records the incident tion completely characterizes the flowoflight through unob- light arriving from all directions at a point. The original use of structed space in a static scene with fixed illumination. environment maps was to efficiently approximate reflections of We describe a sampled representation for light fields that the environment on a surface. -

Learning a Deep Convolutional Network for Light-Field Image Super-Resolution

Learning a Deep Convolutional Network for Light-Field Image Super-Resolution Youngjin Yoon Hae-Gon Jeon Donggeun Yoo Joon-Young Lee In So Kweon [email protected] [email protected] [email protected] [email protected] [email protected] Robotics and Computer Vision Lab., KAIST Abstract trade-off between a spatial and an angular resolution in a restricted sensor resolution. A micro-lens array, placed be- Commercial Light-Field cameras provide spatial and an- tween a sensor and a main lens, is used to encode angular gular information, but its limited resolution becomes an im- information of light rays. Therefore, enhancing LF images portant problem in practical use. In this paper, we present resolution is crucial to take full advantage of LF imaging. a novel method for Light-Field image super-resolution (SR) For image super-resolution (SR), most conventional way via a deep convolutional neural network. Rather than the is to perform optimizations with prior information [16, 26]. conventional optimization framework, we adopt a data- The optimization-based approach shows many promising driven learning method to simultaneously up-sample the results, however it generally requires parameter tuning to angular resolution as well as the spatial resolution of a adjust the weight between a data fidelity term and a prior Light-Field image. We first augment the spatial resolution of term (e.g., local patch sizes, color consistency parame- each sub-aperture image to enhance details by a spatial SR ters, etc.). Away from the optimization paradigm, recently network. Then, novel views between the sub-aperture im- data-driven learning methods based on deep neural network ages are generated by an angular super-resolution network. -

Improving Resolution and Depth-Of-Field of Light Field Cameras Using a Hybrid Imaging System

Improving Resolution and Depth-of-Field of Light Field Cameras Using a Hybrid Imaging System Vivek Boominathan, Kaushik Mitra, Ashok Veeraraghavan Rice University 6100 Main St, Houston, TX 77005 [vivekb, Kaushik.Mitra, vashok] @rice.edu Abstract Lytro Hybrid Imager camera (our system) Current light field (LF) cameras provide low spatial res- olution and limited depth-of-field (DOF) control when com- 11 megapixels 0.1 megapixels 9 X 9 angular pared to traditional digital SLR (DSLR) cameras. We show 9 X 9 angular resolution. that a hybrid imaging system consisting of a standard LF resolution. camera and a high-resolution (HR) standard camera en- ables (a) achieve high-resolution digital refocusing, (b) bet- Fundamental Angular Angular Resolution resolution trade-off ter DOF control than LF cameras, and (c) render grace- 11 megapixels DSLR no angular ful high-resolution viewpoint variations, all of which were camera previously unachievable. We propose a simple patch-based information. algorithm to super-resolve the low-resolution (LR) views of Spatial Resolution the light field using the high-resolution patches captured us- Figure 1: Fundamental resolution trade-off in light-field ing a HR SLR camera. The algorithm does not require the imaging: Given a fixed resolution sensor there is an inverse LF camera and the DSLR to be co-located or for any cali- relationship between spatial resolution and angular resolu- bration information regarding the two imaging systems. We tion that can be captured. By using a hybrid imaging system build an example prototype using a Lytro camera (380×380 containing two sensors, one a high spatial resolution camera pixel spatial resolution) and a 18 megapixel (MP) Canon and another a light-field camera, one can reconstruct a high DSLR camera to generate a light field with 11 MP reso- resolution light field. -

The Fresnel Zone Light Field Spectral Imager

Air Force Institute of Technology AFIT Scholar Theses and Dissertations Student Graduate Works 3-23-2017 The rF esnel Zone Light Field Spectral Imager Francis D. Hallada Follow this and additional works at: https://scholar.afit.edu/etd Part of the Optics Commons Recommended Citation Hallada, Francis D., "The rF esnel Zone Light Field Spectral Imager" (2017). Theses and Dissertations. 786. https://scholar.afit.edu/etd/786 This Thesis is brought to you for free and open access by the Student Graduate Works at AFIT Scholar. It has been accepted for inclusion in Theses and Dissertations by an authorized administrator of AFIT Scholar. For more information, please contact [email protected]. The Fresnel Zone Light Field Spectral Imager THESIS Francis D. Hallada, Maj, USAF AFIT-ENP-MS-17-M-095 DEPARTMENT OF THE AIR FORCE AIR UNIVERSITY AIR FORCE INSTITUTE OF TECHNOLOGY Wright-Patterson Air Force Base, Ohio DISTRIBUTION STATEMENT A APPROVED FOR PUBLIC RELEASE; DISTRIBUTION UNLIMITED The views expressed in this document are those of the author and do not reflect the official policy or position of the United States Air Force, the United States Department of Defense or the United States Government. This material is declared a work of the U.S. Government and is not subject to copyright protection in the United States. AFIT-ENP-MS-17-M-095 THE FRESNEL ZONE LIGHT FIELD SPECTRAL IMAGER THESIS Presented to the Faculty Department of Engineering Physics Graduate School of Engineering and Management Air Force Institute of Technology Air University Air Education and Training Command in Partial Fulfillment of the Requirements for the Degree of Master of Science Francis D. -

Suitability Analysis of Holographic Vs Light Field and 2D Displays

SUBMITTED TO THE JOURNAL OF IEEE TRANSACTIONS ON MULTIMEDIA,20 SEPTEMBER 2019 1 Suitability Analysis of Holographic vs Light Field and 2D Displays for Subjective Quality Assessment of Fourier Holograms Ayyoub Ahar, Member, IEEE, Maksymilian Chlipala, Tobias Birnbaum, Member, IEEE, Weronika Zaperty, Athanasia Symeonidou, Member, IEEE, Tomasz Kozacki, Malgorzata Kujawinska, and Peter Schelkens, Member, IEEE Abstract—Visual quality assessment of digital holograms is via a complete pipeline for high-quality dynamic holography facing many challenges. Main difficulties are related to the with full-parallax and wide field of view (FoV) [2]. limited spatial resolution and angular field of view of holographic In this regard, one of the core challenges is modeling the displays in combination with the complexity of steering and operating them for such tasks. Alternatively, non-holographic perceived visual quality of the rendered holograms, which displays – and in particular light-field displays – can be utilized has a vital impact on steering the other components of the to visualize the numerically reconstructed content of a digital holographic imaging pipeline. While the design of highly hologram. However, their suitability as alternative for holo- efficient numerical methods in Computer-Generated Holog- graphic displays has not been validated. In this research, we have raphy (CGH) [3], [4], [5], [6], [7] and efficient encoders investigated this issue via a set of comprehensive experiments. We used Fourier holographic principle to acquire a diverse set for holographic content [2], [8], [9], [10], [11] is gaining of holograms, which were either computer-generated from point momentum, Visual Quality Assessment (VQA) of holograms clouds or optically recorded from real macroscopic objects. -

Deep Sparse Light Field Refocusing

STUDENT, PROF, COLLABORATOR: BMVC AUTHOR GUIDELINES 1 Deep Sparse Light Field Refocusing Shachar Ben Dayan School of Electrical Engineering [email protected] The Iby and Aladar Fleischman Faculty David Mendlovic of Engineering [email protected] Tel Aviv University Tel Aviv, Israel Raja Giryes [email protected] Abstract Light field photography enables to record 4D images, containing angular informa- tion alongside spatial information of the scene. One of the important applications of light field imaging is post-capture refocusing. Current methods require for this purpose a dense field of angle views; those can be acquired with a micro-lens system or with a compressive system. Both techniques have major drawbacks to consider, including bulky structures and angular-spatial resolution trade-off. We present a novel implemen- tation of digital refocusing based on sparse angular information using neural networks. This allows recording high spatial resolution in favor of the angular resolution, thus, enabling to design compact and simple devices with improved hardware as well as bet- ter performance of compressive systems. We use a novel convolutional neural network whose relatively small structure enables fast reconstruction with low memory consump- tion. Moreover, it allows handling without re-training various refocusing ranges and noise levels. Results show major improvement compared to existing methods. 1 Introduction Light field photography has attracted significant attention in recent years due to its unique capability to extract depth without active components [10, 12, 13]. While 2D cameras only capture the total amount of light at each pixel on the sensor, namely, the projection of the light in the scene, light field cameras also record the direction of each ray intersecting with the sensor in a single capture. -

An Equivalent F-Number for Light Field Systems: Light Efficiency, Signal-To-Noise Ratio, and Depth of Field Ivo Ihrke

An Equivalent F-Number for Light Field Systems: Light Efficiency, Signal-to-Noise Ratio, and Depth of Field Ivo Ihrke To cite this version: Ivo Ihrke. An Equivalent F-Number for Light Field Systems: Light Efficiency, Signal-to-Noise Ratio, and Depth of Field. QCAV, 2019, Mulhouse, France. hal-02144991 HAL Id: hal-02144991 https://hal.inria.fr/hal-02144991 Submitted on 3 Jun 2019 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. An Equivalent F-Number for Light Field Systems: Light Efficiency, Signal-to-Noise Ratio, and Depth of Field Ivo Ihrke independent scientist ABSTRACT The paper discusses the light efficiency and SNR of light field imaging systems in comparison to classical 2D imaging. In order to achieve this goal, I define the \equivalent f-number" as a concept of capturing the light gathering ability of the light field system. Since the f-number, in classical imaging systems, is conceptually also linked with the depth-of-field, I discuss an appropriate depth-of-field interpretation for light field systems. Keywords: light field imaging, light efficiency, f-number, equivalent f-number 1. INTRODUCTION Even though the F/# is a simple concept, it captures major important features of imaging systems and is therefore in popular use.