Performance, Security, and Safety Requirements Testing for Smart Systems Through Systematic Software Analysis

Recommended publications

Intel and Mobileye Autonomous Driving Solutions

PRODUCT BRIEF Autonomous Driving Intel and Mobileye Autonomous Driving Solutions Together, Mobileye and Intel deliver scalable and versatile solutions using purpose-built software and efficient, powerful compute that will make autonomous driving an economic reality. Total Technology and Resources Making the self-driving car a reality requires an unprecedented integration of technology and expertise. Together, Mobileye and Intel provide comprehensive, scalable solutions to enable autonomous driving engineered for safety and affordability. With a combination of industry-leading computer vision, unique algorithms, crowdsourced mapping, efficient driving policy, a mathematical model designed for safety, and high-performing, low-power system designs, we deliver a versatile road map that scales to millions, not thousands, of vehicles. Purpose-built software and hardware Mobileye’s portfolio of innovative software across all pillars of autonomous driving (the “eyes”) complements Intel’s high-performance computing and connectivity expertise (the “brains”). This winning package supports complex and computationally intense vision processing with low power consumption, creating powerful and smart autonomous driving solutions from bumper to cloud. We start with revolutionary software from Mobileye that includes: • Low-cost, 360-degree surround-view computer vision • Crowdsourced Road Experience Management™ (REM™) mapping and localization • Sensor fusion, integrating surround vision and mapping with a multimodal sensor system • Efficient, semantic-based artificial intelligence (AI) for driving policy (decision-making) • A formal safety software layer via our Responsibility Sensitive Safety (RSS) model This advanced software calls for a powerful compute platform. 
We use a combination of proven Mobileye EyeQ® SoCs for computer vision, localization, sensor fusion, trajectory planning, and our RSS model designed for safety, as well as Intel® processors for translation of the planned trajectory into commands that are issued to the vehicle’s actuation system. -

The Top 10 Open Source Music Players Scores of Music Players Are Available in the Open Source World, and Each One Has Something That Is Unique

For U & Me | Overview. The Top 10 Open Source Music Players. Scores of music players are available in the open source world, and each one has something that is unique. Here are the top 10 music players for you to check out. Everybody likes to use a music player that is hassle-free and easy to operate, besides having plenty of features to enhance the music experience. The open source community has developed many music players. This article lists the features of the ten best open source music players, which will help you to select the player most suited to your musical tastes. The article also helps those who wish to explore the features and capabilities of open source music players. Amarok: Amarok is a part of the KDE project and is the default music player in Kubuntu. Mark Kretschmann started this project. The Amarok experience can be enhanced with custom scripts or by using scripts contributed by other developers. Its first release was on June 23, 2003. Amarok has been developed in C++ using Qt (the toolkit for cross-platform application development). Its tagline, ‘Rediscover your Music’, is indeed true, considering its long list of features. 98 | FEBRUARY 2014 | OPEN SOURCE FOR YoU | www.LinuxForU.com Table 1: Features at a glance iPod sync Track info Smart/ Name/ Fade/ gapless and USB Radio and Remotely Last.fm Playback and lyrics dynamic Feature playback device podcasts controlled integration resume lookup playlist support Amarok Crossfade Both Yes Both Yes Both Yes Yes (Xine), Gapless (Gstreamer) aTunes Fade only -

Safe and Secure Model-Driven Design for Embedded Systems Letitia Li

Safe and secure model-driven design for embedded systems. Letitia Li. To cite this version: Letitia Li. Safe and secure model-driven design for embedded systems. Embedded Systems. Université Paris-Saclay, 2018. English. NNT : 2018SACLT002. tel-01894734. HAL Id: tel-01894734 https://pastel.archives-ouvertes.fr/tel-01894734 Submitted on 12 Oct 2018. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Approche Orientée Modèles pour la Sûreté et la Sécurité des Systèmes Embarqués. Doctoral thesis of Université Paris-Saclay, prepared at Telecom ParisTech. Doctoral school no. 580 (STIC). NNT: 2018SACLT002. Doctoral specialty: Computer Science. Thesis presented and defended at Biot on 3 September 2018 by LETITIA W. LI. Jury: Prof. Philippe Collet, Professor, Université Côte d’Azur, President; Prof. Guy Gogniat, Professor, Université de Bretagne Sud, Reviewer; Prof. Maritta Heisel, Professor, University Duisburg-Essen, Reviewer; Prof. Jean-Luc Danger, Professor, Telecom ParisTech, Examiner; Dr. Patricia Guitton -

Beets Documentation Release 1.5.1

beets Documentation, Release 1.5.1. Adrian Sampson. Oct 01, 2021. Contents: 1.1 Guides; 1.2 Reference; 1.3 Plugins; 1.4 FAQ; 1.5 Contributing; 1.6 For Developers; 1.7 Changelog; Index. Welcome to the documentation for beets, the media library management system for obsessive music geeks. If you’re new to beets, begin with the Getting Started guide. That guide walks you through installing beets, setting it up how you like it, and starting to build your music library. Then you can get a more detailed look at beets’ features in the Command-Line Interface and Configuration references. You might also be interested in exploring the plugins. If you still need help, you can drop by the #beets IRC channel on Libera.Chat, drop by the discussion board, send email to the mailing list, or file a bug in the issue tracker. Please let us know where you think this documentation can be improved. 1.1 Guides: This section contains a couple of walkthroughs that will help you get familiar with beets. If you’re new to beets, you’ll want to begin with the Getting Started guide. 1.1.1 Getting Started: Welcome to beets! This guide will help you begin using it to make your music collection better. Installing: You will need Python. Beets works on Python 3.6 or later. • macOS 11 (Big Sur) includes Python 3.8 out of the box. -

Navigating Automotive LIDAR Technology

Navigating Automotive LIDAR Technology. Mial Warren, VP of Technology. October 22, 2019. Outline: • Introduction to ADAS and LIDAR for automotive use • Brief history of LIDAR for autonomous driving • Why LIDAR? • LIDAR requirements for (personal) automotive use • LIDAR technologies • VCSEL arrays for LIDAR applications • Conclusions. What is the big deal? • “The automotive industry is the largest industry in the world” (~$1 Trillion) • “The automotive industry is > 100 years old, the supply chains are very mature” • “The advent of autonomy has opened the automotive supply chain to new players” (electronics, optoelectronics, high performance computing, artificial intelligence) (Quotations from 2015 by LIDAR program manager at a major European Tier 1 supplier.) [Figure: LIDAR System Revenue] The Automotive Supply Chain: OEMs (car companies); Tier 1 Suppliers (subsystems); Tier 2 Suppliers (components). ADAS (Advanced Driver Assistance Systems) Levels, SAE and NHTSA: • Level 0: No automation, manual control by the driver • Level 1: One automatic control (for example: acceleration & braking) • Level 2: Automated steering and acceleration capabilities (driver is still in control) • Level 3: Environment detection, capable of automatic operation (driver expected to intervene) • Level 4: No human interaction required, still capable of manual override by driver • Level 5: Completely autonomous, no driver required. Level 3 and up need the full range of sensors. The adoption of advanced sensors (incl. LIDAR) will not wait for Level 5 or full autonomy! The Automotive LIDAR Market (image courtesy of Autonomous Stuff): Emerging US $6 Billion LIDAR Market by 2024 (Source: Yole), ~70% automotive. Note: Current market is >$300M for software test vehicles only!
Sensor Fusion Approach to ADAS and Autonomous Vehicles: Much of the ADAS development is driven by NHTSA regulation. [Figure: vehicle sensor coverage diagram with zones labeled LIDAR, Vision & Radar, Radar, and Vision] Each technology has weaknesses and the combination of sensors provides high confidence. -

How Do Developers Use Wake Locks in Android Applications? a Large-Scale Empirical Study

How Do Developers Use Wake Locks in Android Applications? A Large-Scale Empirical Study. Technical Report HKUST-CS15-04. Online date: November 13, 2015. Abstract: Wake locks are commonly used in Android apps to protect critical computations from being disrupted by device sleeping. However, inappropriate use of wake locks often causes various issues that can seriously impact app user experience. Unfortunately, people have limited understanding of how Android developers use wake locks in practice and the potential issues that can arise from wake lock misuses. To bridge this gap, we conducted a large-scale empirical study on 1.1 million commercial and 31 open-source Android apps. By automated program analysis of commercial apps and manual investigation of the bug reports and source code revisions of open-source apps, we made several important findings. For example, we found that developers often use wake locks to protect 15 types of computational tasks that can bring users observable or perceptible benefits. We also identified eight types of wake lock misuses that commonly cause functional or non-functional issues, only two of which had been studied by existing work. Such empirical findings can provide guidance to developers on how to appropriately use wake locks and shed light on future research on designing effective techniques to avoid, detect, and debug wake lock issues. Authors: Yepang Liu (Hong Kong University of Science and Technology), Chang Xu (Nanjing University), Shing-Chi Cheung (Hong Kong University of Science and Technology), Valerio Terragni (Hong Kong University of Science and Technology). How Do Developers Use Wake Locks in Android Apps? A Large-scale Empirical Study. Yepang Liu§, Chang Xu‡, Shing-Chi Cheung§, and Valerio Terragni§. §Dept. -

LIDAR – a New (Self-Driving) Vehicle for Introducing Optics to Broader Engineering and Non-Engineering Audiences Corneliu Rablau, Kettering University, Dept

LIDAR – A new (self-driving) vehicle for introducing optics to broader engineering and non-engineering audiences. Corneliu Rablau, Kettering University, Dept. of Physics, 1700 University Ave., Flint, MI USA 48504. ABSTRACT Since Stanley, the self-driven Stanford car equipped with five SICK LIDAR sensors, won the 2005 DARPA Challenge, the race to develop and deploy fully autonomous, self-driving vehicles has come into full swing. By now, it has engulfed all major automotive companies and suppliers, major trucking and taxi companies, not to mention companies like Google (Waymo), Apple and Tesla. With the notable exception of the Tesla self-driving cars, a LIDAR (Light Detection and Ranging) unit is a key component of the suite of sensors that allow autonomous vehicles to see and navigate the world. The market space for lidar units is by now downright crowded, with a number of companies and their respective technologies jockeying for long-run leading positions in the field. Major lidar technologies for autonomous driving include mechanical scanning (spinning) lidar, MEMS micro-mirror lidar, optical-phased array lidar, flash lidar, frequency-modulated continuous-wave (FMCW) lidar and others. A major technical specification of any lidar is the operating wavelength. Many existing systems use 905 nm diode lasers, a wavelength compatible with CMOS-technology detectors. But other wavelengths (like 850 nm, 940 nm and 1550 nm) are also being investigated and, in the long run, the telecom near-infrared range (1550 nm) is expected to experience significant growth because it offers a larger detecting distance range (200-300 meters) within eye safety laser power limits while also offering potentially better performance in bad weather conditions. -

Autonomous Vehicle Technology: a Guide for Policymakers

Autonomous Vehicle Technology: A Guide for Policymakers. James M. Anderson, Nidhi Kalra, Karlyn D. Stanley, Paul Sorensen, Constantine Samaras, Oluwatobi A. Oluwatola. CORPORATION. For more information on this publication, visit www.rand.org/t/rr443-2 This revised edition incorporates minor editorial changes. Library of Congress Cataloging-in-Publication Data is available for this publication. ISBN: 978-0-8330-8398-2 Published by the RAND Corporation, Santa Monica, Calif. © Copyright 2016 RAND Corporation R® is a registered trademark. Cover image: Advertisement from 1957 for “America’s Independent Electric Light and Power Companies” (art by H. Miller). Text with original: “ELECTRICITY MAY BE THE DRIVER. One day your car may speed along an electric super-highway, its speed and steering automatically controlled by electronic devices embedded in the road. Highways will be made safe—by electricity! No traffic jams…no collisions…no driver fatigue.” Limited Print and Electronic Distribution Rights This document and trademark(s) contained herein are protected by law. This representation of RAND intellectual property is provided for noncommercial use only. Unauthorized posting of this publication online is prohibited. Permission is given to duplicate this document for personal use only, as long as it is unaltered and complete. Permission is required from RAND to reproduce, or reuse in another form, any of its research documents for commercial use. For information on reprint and linking permissions, please visit www.rand.org/pubs/permissions.html. The RAND Corporation is a research organization that develops solutions to public policy challenges to help make communities throughout the world safer and more secure, healthier and more prosperous. -

Certain Vision-Based Driver Assistance System Cameras, Components Thereof, and Products Containing the Same

In the Matter of CERTAIN VISION-BASED DRIVER ASSISTANCE SYSTEM CAMERAS, COMPONENTS THEREOF, AND PRODUCTS CONTAINING THE SAME. Investigation No. 337-TA-907. Publication 4866, February 2019. U.S. International Trade Commission, Washington, DC 20436, www.usitc.gov. COMMISSIONERS: Meredith Broadbent, Chairman; Dean Pinkert, Vice Chairman; Irving Williamson, Commissioner; David Johanson, Commissioner; Rhonda Schmidtlein, Commissioner. Address all communications to Secretary to the Commission, United States International Trade Commission, Washington, DC 20436. NOTICE OF THE COMMISSION'S DETERMINATION FINDING NO VIOLATION OF SECTION 337; TERMINATION OF THE INVESTIGATION. AGENCY: U.S. International Trade Commission. ACTION: Notice. SUMMARY: Notice is hereby given that the U.S. International Trade Commission has found no violation of section 337 of the Tariff Act of 1930, 19 U.S.C. § 1337, in the above-captioned investigation, and has terminated the investigation. FOR FURTHER INFORMATION CONTACT: Amanda P. Fisherow, Office of the General Counsel, U.S. International Trade Commission, 500 E Street, S.W., Washington, D.C. 20436, telephone (202) 205-2737. The public version of the complaint can be accessed on the Commission's electronic docket (EDIS) at http://edis.usitc.gov, and will be available for inspection during official business hours. -

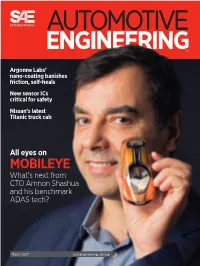

MOBILEYE: What’s Next from CTO Amnon Shashua and His Benchmark ADAS Tech?

AUTOMOTIVE ENGINEERING. Argonne Labs’ nano-coating banishes friction, self-heals; New sensor ICs critical for safety; Nissan’s latest Titanic truck cab. All eyes on MOBILEYE: What’s next from CTO Amnon Shashua and his benchmark ADAS tech? March 2017, autoengineering.sae.org. New eye on the road: One of the industry’s hottest tech suppliers is blazing the autonomy trail by crowd-sourcing safe routes and using AI to learn to negotiate the road. Mobileye’s co-founder and CTO explains. By Steven Ashley. With 15 million ADAS-equipped vehicles worldwide carrying Mobileye EyeQ vision units, Amnon Shashua’s company has been called “the benchmark for applied image processing in the [mobility] community.” Amnon Shashua, co-founder and Chief Technology Officer of Israel-based Mobileye, tells a story about when, in the year 2000, he first began approaching global carmakers with his vision: that cameras, processor chips and software-smarts could lead to affordable advanced driver-assistance systems (ADAS) and eventually to self-driving cars. “I would go around to meet OEM customers to try to push the idea that a monocular camera and chip could deliver what would be needed in a front-facing ... image-processing ‘system-on-a-chip’ would be enough to reliably accomplish the true sensing task at hand, thank you very much. And, it would do so more easily and inexpensively than the favored alternative to radar ranging: stereo cameras that find depth using visual parallax. zFAS and furious: Seventeen years later, some 15 million ADAS-equipped vehicles worldwide carry Mobileye EyeQ vision units that use a monocular camera. The company is now as much a part of the ADAS lexicon as are Tier-1 heavyweights Delphi and Continental. -

Google Announces Lidar for Autonomous Cars

NEWS Google Announces Lidar for Autonomous Cars Waymo wants to do everything itself. The self-driving automobile division of Google’s parent company Alphabet has announced that it is building all of its self-driving automobile hardware in-house. That means Waymo is manufacturing its own lidar. This shouldn’t come as a surprise. Two years ago the project director for Google’s self-driving car project started dropping hints that the tech company would be building its own lidar sensors. At the time, their self-driving prototype used a $70,000 Velodyne lidar sensor, and Google felt the price was too high to justify mass-production. Rather than wait for the market to produce a cheaper sensor, Google started developing its own. In the intervening time automotive lidar has become a red-hot market segment. It seems like every month brings a new company announcing a low-cost sensor. But it turns out that Google was not deterred: At the Detroit Auto show last Sunday, Waymo’s CEO announced that the company had produced its own lidar, and brought the technology’s price down “by more than 90%.” A quick bit of napkin math puts the final number at about US$7,500. Attentive readers will note that the unit’s cost is notably higher than solid-state offerings from Quanergy, Innoviz, and a number of other competitors. However, many of those sensors are not currently available on the market, despite lots of press attention and big PR pushes. Waymo’s sensors, on the other hand, are available right now–at least to Waymo. -

Semiconductor Industry Merger and Acquisition Activity from an Intellectual Property and Technology Maturity Perspective

Semiconductor Industry Merger and Acquisition Activity from an Intellectual Property and Technology Maturity Perspective by James T. Pennington B.S. Mechanical Engineering (2011) University of Pittsburgh Submitted to the System Design and Management Program in Partial Fulfillment of the Requirements for the Degree of Master of Science in Engineering and Management at the Massachusetts Institute of Technology September 2020 © 2020 James T. Pennington All rights reserved The author hereby grants to MIT permission to reproduce and to distribute publicly paper and electronic copies of this thesis document in whole or in part in any medium now known or hereafter created. Signature of Author: System Design and Management Program, August 7, 2020. Certified by: Bruce G. Cameron, Thesis Supervisor, System Architecture Group Director in System Design and Management. Accepted by: Joan Rubin, Executive Director, System Design & Management Program. ABSTRACT A major method of acquiring the rights to technology is through the procurement of intellectual property (IP), which allows companies to both extend their technological advantage while denying it to others. Public databases such as the United States Patent and Trademark Office (USPTO) track this exchange of technology rights. Thus, IP can be used as a public measure of value accumulation in the form of technology rights.