Lean Six Sigma Terminology

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

-

Lean Process Improvement Assessment

LEAN PROCESS IMPROVEMENT ASSESSMENT

INSTRUCTIONS: Read each item, then check a box on the 1-to-5 scale to show how often this occurs in your workplace: 1 = never, 2 = rarely, 3 = sometimes, 4 = often, 5 = very often.

1. In my work area, we spend too much time looking for the items we need to do our jobs: files, materials, equipment, and so on. If the physical work area was better organized, we’d be more productive and less frustrated. (1 2 3 4 5)
If you checked "often" or "very often" then consider: 5S+. 5S+ stands for sort, straighten, shine, standardize, sustain, and safety.

2. When we get inputs from customers or from other sources (from paper forms or online web forms, for example), we often find that the incoming information is incomplete or inaccurate. We have to circle back to people to get the information we need. If we received complete information from them the first time around, we’d be a lot more efficient. (1 2 3 4 5)
If you checked "often" or "very often" then consider: Poka-Yoke. From the Japanese term for mistake-proofing, poka-yoke is all about putting measures in place to prevent errors from occurring as a process unfolds.

3. We find ourselves wondering what our customers are thinking. Are we meeting their needs and expectations? Are they happy with our work? Are there things we can do to increase their satisfaction? If we had answers to these questions, we could serve (1 2 3 4 5)
If you checked "often" or "very often" then consider: Voice of the Customer. When it comes to improvement, understanding the customer’s spoken and unspoken requirements is essential. -

Using the DMAIC Process to Direct the Sequencing of Lean/Six Sigma Tools for Successful Project Outcomes

Using the DMAIC Process to Direct the Sequencing of Lean/Six Sigma Tools for Successful Project Outcomes
Jim Ellis, Certified Master Black Belt, J&J Process Excellence Director – US Sales Strategic Accounts [email protected]

Intent: Using the DMAIC Process to Direct the Sequencing of Lean/Six Sigma Tools for Successful Project Outcomes. The purpose of this presentation is to share with attendees my experience over the past 5 years of using the DMAIC process to determine which Lean and/or 6 Sigma tools are best to use, and when, to assure successful project outcomes.

Learning Objectives:
1.) Clear understanding of the DMAIC process
2.) Clear understanding of which common Lean and 6 Sigma tools are best used in which stage of DMAIC
3.) Clear understanding of how to assess success for a project and know whether you have achieved it

After attending, attendees will:
1.) Know what the DMAIC process is and how it is applied in project planning
2.) Understand which Lean and 6 Sigma tools are used in what sequence to assure successful project outcomes

© 2008 Ortho Clinical Diagnostics

[Slide: The Driving Forces of Change. Today’s Healthcare Delivery Challenges… Declining Resources: Space, Capital Funds, Skilled Labor, Medicare/Medicaid Reimbursement ($29.9 billion shortfall). Vacancy Rates: Laboratory Techs 5.9%, Imaging Techs 5.9%, Pharmacists 8.1%, RNs 8.1%. Increasing Pressures: Inpatient Admissions, ED Visits, Volume, New Technology, Market Pressures.]

Why are labs changing? “I need to transform my lab!” Why? • 2X Volume • ½ the -

Download Complete Curriculum

LEAN SIX SIGMA GREEN BELT COURSE TOPICS

Copyright ©2019 by Pyzdek Institute, LLC.

| LESSON | TOPIC |
| --- | --- |
| Overview | A top-level overview of the topics covered in this course |
| What is Six Sigma? | A complete overview of Six Sigma |
| Lean Overview 1 | Waste and Value |
| Lean Overview 2 | Value Streams, Flow and Pull |
| Lean Overview 3 | Perfection |
| Recognizing an Opportunity | Linking your Green Belt activities to the organization’s vision and goals |
| Choosing the Project-Pareto Analysis | How to pick a winning project using Pareto Analysis |
| Assessing Lean Six Sigma Project Candidates | How to carefully assess Lean Six Sigma project candidates to assure success |
| Develop the Project Plan 1 | Team selection and dynamics; brainstorming; consensus decision making; nominal group technique |
| Develop the Project Plan 2 | Stakeholder analysis, communication and planning, cross functional collaboration, and Force Field Analysis |
| Develop the Project Plan 3 | Obtain a charter for your project |
| Develop the Project Plan 4 | Work breakdown structures, DMAIC tasks, network diagrams |
| Develop the Project Plan 5 | Project schedule management; project budget management |
| Develop the Project Plan 6 | Obstacle avoidance tactics and management support strategies |
| High Level Maps 1 | L-Maps, linking project charter Ys to L-Map processes |
| High Level Maps 2 | Mapping the process from supplier to customer (SIPOC) |
| High Level Maps 3 | Product family matrix |
| Voice of the Customer (VOC) 1 | Kano Model, getting the voice of the customer using the critical incident technique |
| VOC 2-CTQ Specification | Link the voice of the customer to the CTQs that drive it |
| Principles of Variation 1 | How will I measure success? Are my measurements trustworthy? Scales of measurement, data types, measurement error principles. - |

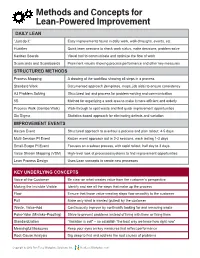

Methods and Concepts for Lean-Powered Improvement

Methods and Concepts for Lean-Powered Improvement

DAILY LEAN
“Just do it”: Easy improvements found in daily work, walk-throughs, events, etc.
Huddles: Quick team sessions to check work status, make decisions, problem solve
Kanban Boards: Visual tool to communicate and optimize the flow of work
Scorecards and Scoreboards: Prominent visuals showing process performance and other key measures

STRUCTURED METHODS
Process Mapping: A drawing of the workflow showing all steps in a process
Standard Work: Documented approach (templates, maps, job aids) to ensure consistency
A3 Problem Solving: Structured tool and process for problem-solving and communication
5S: Method for organizing a work area to make it more efficient and orderly
Process Walk (Gemba Walk): Walk-through to spot waste and find quick improvement opportunities
Six Sigma: Statistics-based approach for eliminating defects and variation

IMPROVEMENT EVENTS
Kaizen Event: Structured approach to overhaul a process and plan rollout; 4-5 days
Multi-Session PI Event: Kaizen event approach but in 2-3 sessions, each lasting 1-3 days
Small-Scope PI Event: Focuses on a subset process, with rapid rollout; half day to 3 days
Value Stream Mapping (VSM): High-level look at processes/systems to find improvement opportunities
Lean Process Design: Uses Lean concepts to create new processes

KEY UNDERLYING CONCEPTS
Voice of the Customer: Be clear on what creates value from the customer’s perspective
Making the Invisible Visible: Identify and see all the steps that make up the process
Flow: Ensure that those value-creating -

Using DMAIC in Improvement of Customer Satisfaction and Facilities Provided at Commuter Stations

International Journal of Academic Research in Business and Social Sciences, 2016, Vol. 6, No. 12, ISSN: 2222-6990

Using DMAIC in Improvement of Customer Satisfaction and Facilities Provided at Commuter Stations

Roslina Mustapha¹, Nurul Fadly Habidin²
¹Department of Business Management, Faculty of Management and Economics, Universiti Pendidikan Sultan Idris, 35900 Tanjung Malim, Perak, Malaysia
²Department of Management and Leadership, Faculty of Management and Economics, Universiti Pendidikan Sultan Idris, 35900 Tanjong Malim, Perak, Malaysia

DOI: 10.6007/IJARBSS/6-i12/2564 URL: http://dx.doi.org/10.6007/IJARBSS/v6-i12/2564

Abstract: This paper discusses the use of the Six Sigma approach to improve customer service and facilities at commuter stations. The management of commuter stations should be sensitive to the needs and desires of passengers. In this study, the DMAIC approach takes into account the views of the customer (VOC) regarding dissatisfaction with commuter services and the facilities available at commuter stations.

Keywords: Six sigma, DMAIC, customer satisfaction, business management

1. Introduction. The performance of a business, whether in the private or public sector, depends on customer satisfaction. Although commuter service has been present in Malaysia for almost 20 years, there are still some problems, especially for people with disabilities (PWDs) who want to use the commuter service. Using the Six Sigma approach is one way to solve the problem of service quality. Problem solving is an activity to change what is actually happening to what is supposed to happen (Evans & Lindsay, 2007). People with disabilities are not left behind in commuter services, as it is a convenient, safe, and cheap form of public transport. -

Metrics That Trigger Actionable Discussions: Prioritize Process Improvements Using Gauge R&R and SPC Capability

Metrics That Trigger Actionable Discussions: Prioritize Process Improvements Using Gauge R&R and SPC Capability by Govindarajan (Govind) Ramu If a typical process engineering review meeting at your organization involves engineers staring at scores of presentation slides and feeling overwhelmed, you may want to consider the metrics you’re using. High-level metrics like throughput yield and scrap may seem like logical choices for regular product reviews, and they are useful at a business level; however, they tend not to trigger actionable improvement discussion. In contrast, the same powerful tools that engineers use in their everyday product development and process management applications can have enhanced benefits when put to work for broader decision making about improvements. Integrating tools like failure mode and effects analysis (FMEA), gauge repeatability and reproducibility (GR&R), and statistical process control (SPC) provides a quality improvement framework that organizations can use to monitor process health, prioritize improvements, and assign resources to actions that warrant most attention. Figure 1 shows how FMEA, GR&R, and SPC can be analyzed in the context of relationships between critical to quality (CTQ) and critical to process (CTP) parameters, leading to creation of a “process health card.” In eight steps, you can build your own process health card and begin using it at your engineering review meetings. With your focus strongly on metrics of practical interest to product and process engineers—GR&R results, SPC stability status, and the potential risk to the organization of rejecting good parts or accepting bad parts (misclassification) which impacts the organization’s cost of poor quality—you should find it easier to engage in the actionable discussions that the meeting should foster. -
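The excerpt above names SPC capability as one input to a process health card but does not show a calculation. As a minimal sketch, assuming the standard capability indices Cp = (USL - LSL) / 6σ and Cpk = min(USL - μ, μ - LSL) / 3σ, a capability check could look like the following. The function name, the sample measurements, and the specification limits are hypothetical and do not come from the article.

```python
from statistics import mean, stdev


def process_capability(samples, lsl, usl):
    """Return (Cp, Cpk) for measurements against lower/upper spec limits.

    Uses the textbook definitions with the overall sample standard
    deviation; this is an illustrative assumption, not the article's method.
    """
    if usl <= lsl:
        raise ValueError("usl must be greater than lsl")
    mu = mean(samples)
    sigma = stdev(samples)  # sample standard deviation (n - 1 denominator)
    if sigma == 0:
        raise ValueError("samples show no variation; indices are undefined")
    cp = (usl - lsl) / (6 * sigma)               # potential capability
    cpk = min(usl - mu, mu - lsl) / (3 * sigma)  # penalizes an off-center mean
    return cp, cpk


if __name__ == "__main__":
    # Hypothetical measurements and spec limits, for illustration only.
    measurements = [9.8, 10.1, 10.0, 9.9, 10.2, 10.0, 9.9, 10.1]
    cp, cpk = process_capability(measurements, lsl=9.4, usl=10.6)
    print(f"Cp={cp:.2f}, Cpk={cpk:.2f}")
```

Cpk never exceeds Cp, so a large gap between the two suggests the process is off-center rather than too variable, which is the kind of distinction a review meeting can act on.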

Chapter 3 Study Guide Tools and Techniques for Quality Design and Control

CHAPTER 3 STUDY GUIDE: TOOLS AND TECHNIQUES FOR QUALITY DESIGN AND CONTROL

MULTIPLE CHOICE QUESTIONS

Design for Six Sigma consists of four principal activities. Which of the following is not one of them?
a. Concept development
b. Design development
c. Design maximization
d. Design verification
Answer: C AACSB: Reflective Thinking Page: 102

In the set of matrices used to relate the voice of the customer to technical features and production planning and control requirements, the symbols • and Δ are used to denote _____ and _____ respectively.
a. a weak relationship; a strong relationship
b. a very strong relationship; a weak relationship
c. a strong relationship; a weak relationship
d. a very strong relationship; a strong relationship
Answer: B AACSB: Analytic Page: 107

Which of the following is not a type of house of quality?
a. Technical features deployment matrix
b. Process plan and quality control charts
c. BCG matrix
d. Operating instructions
Answer: C AACSB: Analytic Page: 109

DFMEA stands for:
a. design failure mode and effects analysis.
b. design function mode and effects analysis
c. design failure mode and efficiency analysis
d. design feature methods and efficiency analysis
Answer: A AACSB: Analytic Page: 109

The purpose of DFMEA is all of the following except:
a. to recommend corrective design actions.
b. to estimate the effect and seriousness of the failure.
c. to identify all the ways in which a failure can occur.
d. to act as the “voice of the customer” in product design.
Answer: D AACSB: Reflective Thinking Page: 109

Which of the following statements is true about various service components: degree of customer contact and interaction, the degree of labor intensity, and the degree of customization?
a. -

Operationalizing Voice of the Customer: How Top Performers Create Actionable Insights

Benchmarks & Galaxy™ Vendor Evaluations Series. Operationalizing Voice of the Customer: How Top Performers Create Actionable Insights. ©2012 Hypatia Research, LLC. All Rights Reserved. www.hypatiaresearch.com 781-862-5106

NOTICE: Information contained in this publication has been sourced in good faith from primary, secondary and end-user research and is believed to be reliable based upon our research methodology and analyst’s judgment. Ultimate responsibility for all decisions, use and interpretation of Hypatia research, reports or publications remains with the reader, subscriber or user thereof.

HYPATIA RESEARCH GROUP LLC. hereby grants and assigns to IBM and all business units, divisions and subsidiaries thereof, worldwide syndication rights to a report entitled “OPERATIONALIZING ENTERPRISE VOICE OF THE CUSTOMER SOFTWARE SOLUTIONS.” This license and sales agreement entitles the purchaser, IBM, to all distribution, quote, syndication, translation, and publication rights. Hypatia Research Group, LLC must be credited with proper attribution [©2014 Hypatia Research Group, LLC] in each and every instance of content usage. IBM agrees to take good faith steps to safeguard the Intellectual Property contained in this research study. The trademarked Product Matrix™ chart or Galaxy™ chart may be utilized solely for business development presentations and/or full translations of this study and for report distribution to prospects and/or clients, but may NOT be utilized as part of a press release, slide-share, or any type of public, digital, online or general distribution. -

Seven Basic Tools of Quality Control: the Appropriate Techniques for Solving Quality Problems in the Organizations

UC Santa Barbara Previously Published Works
Title: Seven Basic Tools of Quality Control: The Appropriate Techniques for Solving Quality Problems in the Organizations
Permalink: https://escholarship.org/uc/item/2kt3x0th
Author: Neyestani, Behnam
Publication Date: 2017-01-03
eScholarship.org, powered by the California Digital Library, University of California

Seven Basic Tools of Quality Control: The Appropriate Techniques for Solving Quality Problems in the Organizations
Behnam Neyestani [email protected]

Abstract: Dr. Kaoru Ishikawa was the first total quality management guru to be associated with the development and advocacy of the seven quality control (QC) tools for problem solving and process improvement in organizations. The seven old quality control tools are a set of QC tools that can be used to improve the performance of production processes, from the first step of producing a product or service to the last stage of production. The general purpose of this paper is to introduce these 7 QC tools. This study found that these tools play significant roles in monitoring, obtaining, and analyzing data to detect and solve problems in production processes, in order to facilitate the achievement of performance excellence in organizations.

Keywords: Seven QC Tools; Check Sheet; Histogram; Pareto Analysis; Fishbone Diagram; Scatter Diagram; Flowcharts; Control Charts.

INTRODUCTION: There are seven basic quality tools, which can assist an organization with problem solving and process improvement. The first guru to propose the seven basic tools was Dr. Kaoru Ishikawa, in 1968, by publishing a book entitled “Gemba no QC Shuho” that was concerned with managing quality through techniques and practices for Japanese firms. -

Super Simple Lean Six Sigma Glossary

SUPER SIMPLE LEAN SIX SIGMA GLOSSARY

The web is overflowing with Lean Six Sigma resources. Our glossary provides clear, straightforward language, organized for quick access so you can easily find and understand the terms you’re searching for. For a better understanding of these terms and an advanced understanding of Lean Six Sigma, please check out our Green Belt and Black Belt Training & Certification courses. View the online Lean Six Sigma Glossary, including visuals & infographics, at: https://goleansixsigma.com/glossary/

P300-10 COPYRIGHT 2019 GOLEANSIXSIGMA.COM. ALL RIGHTS RESERVED.

5S: 5S is a workplace organization technique composed of five primary phases: Sort, Set In Order, Shine, Standardize, and Sustain.

5 Whys: 5 Whys is a simple but effective method of analyzing and solving problems by asking “why” five times, or as many times as needed, in order to move past symptoms and determine root cause. This approach is used in tandem with Cause-and-Effect or Fishbone diagrams.

8 Wastes (aka Muda): The 8 Wastes refer to a list of issues that get in the way of process flow and cause stagnation. The list consists of Defects, Overproduction, Waiting, Non-Utilized Talent, Transportation, Inventory, Motion, and Extra-Processing. The idea of process improvement is to identify and remove all forms of waste from a process in order to increase efficiency, reduce cost and provide customer value.

A3: On a literal level, A3 refers to a ledger size (11x17) piece of paper. But in the Lean Six Sigma world, it is a tool to help see the thinking behind the problem-solving. -

Quality Control Tools for Continuous Improvement

QUALITY CONTROL TOOLS FOR CONTINUOUS IMPROVEMENT

Yogesh K. More¹, Prof. J. N. Yadav²
¹Mechanical Engineering Department, SITRC, Sandip Foundation, Nashik, (India)
²Assist. Prof., Mechanical Engineering Department, SITRC, Sandip Foundation, Nashik, (India)

ABSTRACT: The main aim of this paper is to understand the basic concept of quality and the Quality Control Tools used to improve and maintain quality. Quality Control Tools are very important tools, and they are widely used in various industries such as Automobile, Production, etc. The seven main quality control tools generally used to improve quality are the Check Sheet, Pareto Diagram, Histogram, Cause-and-effect diagram, Control Chart, Run-Chart, and Scatter-Diagram. Used together, these tools can improve processes and analysis in ways that are very helpful for quality improvement. These tools make the quality improvement process easier and simple to implement.

Keyword: Cause-and-effect diagram, Check Sheet, Control Chart, Histogram, Pareto Diagram, Run-Chart, Scatter-Diagram, Quality, Quality Control Tools.

I. INTRODUCTION: Quality improvement is a very essential topic in today’s life. It can be expressed in many ways. Quality is the set of features and characteristics of a product or service that fulfill the customer’s requirements or needs. This can be achieved by using Quality Control Tools. The seven quality control tools are simple tools used for solving problems related to quality control. These tools were either developed in Japan or introduced to Japan by quality gurus such as Deming and Juran. Kaoru Ishikawa of Japan suggested seven simple tools that can be used for documentation, analysis and organization of the data needed for quality control. -
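To make one of the seven tools listed above concrete, here is a minimal sketch of the tabulation behind a Pareto Diagram: count occurrences per category, sort from most to least frequent, and accumulate percentages. The function name and the defect labels are hypothetical and are not taken from the paper.

```python
from collections import Counter


def pareto_table(observations):
    """Return (category, count, cumulative_percent) rows, largest count first."""
    counts = Counter(observations)
    total = sum(counts.values())
    rows = []
    cumulative = 0
    # most_common() sorts by descending count; ties keep first-seen order.
    for category, count in counts.most_common():
        cumulative += count
        rows.append((category, count, 100.0 * cumulative / total))
    return rows


if __name__ == "__main__":
    # Hypothetical check-sheet tallies, for illustration only.
    defects = ["scratch", "dent", "scratch", "misalignment",
               "scratch", "dent", "scratch", "stain"]
    for category, count, cum_pct in pareto_table(defects):
        print(f"{category:<14}{count:>3}{cum_pct:>8.1f}%")
```

Reading the cumulative column from the top shows how few categories account for most of the observations, which is where a Pareto Diagram suggests starting improvement work.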

Seven Quality Concepts 1

Running head: SEVEN QUALITY CONCEPTS

Seven Quality Concepts
Benjamin Srock
Embry-Riddle Aeronautical University Worldwide Campus
Planning, Directing, and Controlling Projects PMGT-614
Jimmie Flores, Ph.D.
July 2, 2017

Table of Contents
History
Cause and Effect Diagrams
Check Sheet
Control Charts
Histograms
Pareto Charts
Scatter Plots
Flow Charts
References