Traffic Light Mapping and Detection

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

TECHNICAL DATA SHEET Traffic Light Indicator (Easy Read)

TECHNICAL DATA SHEET Traffic Light Indicator (Easy Read) Thermometer DESCRIPTION: The Traffic Light Indicator (TLI) is a single event temperature indicator that shows colour changes associated with that of traffic lights i.e. green to amber to red as the temperature increases. The colour changes are reversible. The product can be used to indicate two temperatures, the transition from green to amber and the transition from amber to red. In the example given in the specification below, these are at 50°C and 70°C respectively. The graphics are printed onto the reverse of a clear polyester using industry standard graphic inks. The event window is then created by a series of 6 coatings using propriety formulated temperature sensitive materials that change colour at designated temperatures which allows an instant response with a continuous readout. The distance from the event window to the edge increases water resistance. Once the coatings have been applied they are sealed in using a custom black coating which is applied across the back of the event window. An adhesive backing is then applied onto the strip and a UV inhibitor is over printed onto the face of the indicator before being cut to size. The colour changes are viewed through the clear, unlaminated side of the indicator. The protective release-liner can be removed for easy adhesion to a variety of flat surfaces. SPECIFICATIONS: Substrate: 100 Micron Clear Polyester Size: 52mm X 48mm Graphics: Black, White, Green, Amber & Red Temperature Profile: Below 50°C Event Window is Green in colour Between 50°C and 70°C Event Window is Amber in colour Above 70°C Event Window is Red in colour Accuracy: Materials are accurate to +/- 1°C Adhesive: Pressure Sensitive Acrylic with a moisture stable release liner Total Thickness: Approximately 245 microns INSTRUCTIONS OF USE: Peel indicator from release liner. -

Understanding Intersections –– Stopping at Intersections Are Places Where a Number of Road Users Cross Intersections Paths

4 rules of the road Chapter 3, signs, signals and road markings, gave you some in this chapter information about the most common signs, signals and road markings you will see when driving. This chapter gives • Understanding you the information you’ll need to help you drive safely at intersections intersections, use lanes correctly and park legally. – signalling – types of intersections Understanding intersections – stopping at Intersections are places where a number of road users cross intersections paths. There is often a lot of activity in intersections, so it’s – right‑of‑way at important to be alert. Remember that other road users may be intersections in a hurry, and may want to move into the same space that you • Using lanes are planning on moving into. correctly – which lane Signalling should you use Signals are important — they let other traffic know what you are – lane tracking intending to do. You should signal when you’re preparing to: – turning lanes – reserved lanes • turn left or right – pulling into a • change lanes lane • park – passing – merging • move toward, or away from, the side of the road. – highway or freeway Types of intersections entrances and exits Controlled intersections – cul‑de‑sacs A controlled intersection is one that has signs or traffic lights – turning around telling you what to do. To drive safely in these intersections, you • Parking tips and need to know what the signals and signs mean, and also the rules right‑of‑way rules. But always be cautious. Other drivers may not be paying attention to the signs and signals. Uncontrolled intersections Uncontrolled intersections have no signs or traffic lights. -

Energy Efficient Lighting

Energy Efficient Lighting Overview According to the International Energy Agency in 2013 electricity for lighting consumed 20% of the output of the world’s power stations.1 For the USA the share of lighting was 15% in 20162. The use of energy efficient lighting is one of the simplest and most cost effective ways of reducing energy consumption. Efficient lighting programmes can be implemented in several areas within cities by: Figure 1: CFLs save up to 80% of energy compared to incandescent y Replacing traditional incandescent bulbs with compact fluorescent light bulbs light bulbs (CFLs). CC-BY-SA, Wikimedia Commons CC-BY-SA, Kübelbeck, Armin Photo: y Replacing old fluorescent tubes with efficient fluorescent tubes. y Replacing old magnetic ballasts with electronic ballasts in fluorescent tube systems. y Installing lighting control systems (motion and lux level sensors) y Using light-emitting diode (LED) technology wherever possible. This technology is developing fast and is getting steadily cheaper. LED’s are now able to replace most conventional lighting applications, such as traffic lights, down lighters, streetlights, security lights and even strip lighting to replace fluorescent tubes. It is anticipated that LEDs y Making streetlights more efficient e.g. by replacing mercury vapour will be used in most lights with high pressure sodium lights or LEDs that operate on around a third of the power. LED lights have more than double the life span. applications in the future. Decreasing costs makes them financially more viable for street lighting. 1 https://www.iea.org/topics/energyefficiency/subtopics/lighting/ 2 https://www.eia.gov/tools/faqs/faq.cfm?id=99&t=3 LED: lights of the future The development of LED lights is moving fast. -

Traffic Light Mapping, Localization, and State Detection For

Traffic Light Mapping, Localization, and State Detection for Autonomous Vehicles Jesse Levinson*, Jake Askeland†, Jennifer Dolson*, Sebastian Thrun* *Stanford Artificial Intelligence Laboratory, Stanford University, CA 94305 †Volkswagen Group of America Electronics Research Lab, Belmont, CA 94002 Abstract— Detection of traffic light state is essential for au- tonomous driving in cities. Currently, the only reliable systems for determining traffic light state information are non-passive proofs of concept, requiring explicit communication between a traffic signal and vehicle. Here, we present a passive camera- based pipeline for traffic light state detection, using (imperfect) vehicle localization and assuming prior knowledge of traffic light location. First, we introduce a convenient technique for mapping traffic light locations from recorded video data using tracking, back-projection, and triangulation. In order to achieve Fig. 1. This figure shows two consecutive camera images overlaid with robust real-time detection results in a variety of lighting condi- our detection grid, projected from global coordinates into the image frame. tions, we combine several probabilistic stages that explicitly In this visualization, recorded as the light changed from red to green, grid account for the corresponding sources of sensor and data cells most likely to contain the light are colored by their state predictions. uncertainty. In addition, our approach is the first to account for multiple lights per intersection, which yields superior results by probabilistically combining evidence from all available lights. To evaluate the performance of our method, we present several To overcome the limitations of purely vision-based ap- results across a variety of lighting conditions in a real-world proaches, we take advantage of temporal information, track- environment. -

Homework Assignment 3

Uncovering the Secrets of Light Hands-on experiments and demonstrations to see the surprising ways we use light in our lives. Students will also learn how engineers and scientists are exploring new ways in which the colorful world of light can impact our health, happiness and safety while saving energy and protecting the environment. Activities (Have each activity checked off by an ERC student when you have completed it.) 1. Turning on an LED by connecting it correctly to a battery. 2. Optical Communication – Using LED flashes to remotely control electronic components such as televisions and to give music a ride on a light beam. 3. Observing the colors produced by different sources of light. 4. Light Saber – A discharge lamp that shows us that we are conductors just like wires and that can magically turn on a special kind of lamp without touching it. 5. USB Microscope – Using light to see small things, especially how displays work. 6. Pulse Width Modulation – Controlling light by turning it on and off. Bright light comes from having it on more than off. 7. Theremin – A musical instrument that is played without touching it. 8. Flat Panel Displays – What is polarized light and how do we use it to make TV and computer displays? 9. Magnetic Levitation – Using a light beam and a magnet to make a ball float in space 10. Coin Flipper – One magnet makes another magnet with the opposite pole so they can rapidly repel one another. For all activities, there is some secret of light or how we use lighting marked with this image. -

Virginia DMV Learner's Permit Test Online Practice Questions Www

Virginia DMV Learner’s Permit Test Online Practice Questions www.dmvnow.com 2.1 Traffic Signals 1. When you encounter a red arrow signal, you may turn after you come to a complete stop and look both ways for traffic and pedestrians. a. True b. False 2. Unless directed by a police officer, you must obey all signs and signals. a. True b. False 3. When you see a flashing yellow traffic signal at the intersection up ahead, what should you do? a. Slow down and proceed with caution. b. Come to a complete stop before proceeding. c. Speed up before the light changes to red. d. Maintain speed since you have the right-of-way. 4. When you encounter a flashing red light at an intersection, what should you do? a. Be alert for an oncoming fire engine or ambulance ahead. b. Slow down and proceed with caution. c. Come to a complete stop before proceeding. d. Speed up before the light changes to red. 5. When can you legally make a right turn at a red traffic signal? a. After stopping, if no sign prohibits right turn on red. b. When the traffic light first changes. c. At any time. d. In daylight hours only. 1 6. Avoiding traffic controls by cutting through a parking lot or field is perfectly legal. a. True b. False 7. Pedestrians do not have to obey traffic signals. a. True b. False 8. At a red light, where must you come to a complete stop? a. 100 feet before the intersection. b. -

Traffic-Light Intersections

Give Cycling a Push Infrastructure Implementation Fact Sheet INFRASTRUCTURE/ INTERSECTIONS AND CROSSINGS TRAFFIC-LIGHT INTERSECTIONS Overview Traffic-light intersections are inherently dangerous for cyclists. However, they are indispensable when cyclists cross heavy traffic flows. Cycle-friendly design must make cyclists clearly visible, allow short and easy maneuvers and reduce waiting time, such as a right-turn bypass or an advanced stop-line. On main cycle links, separate cycle traffic light and cycle-friendly light regulation can privilege cycle flows over motorized traffic. Background and Objectives Function Intersections are equipped with a traffic control system when they need to handle large flows of motorized traffic on the busiest urban roads, often with multiple lanes. A cycle-friendly design can greatly improve safety, speed and comfort, by increasing visibility, facilitating maneuvers and reducing waiting time. Scope Traffic-light intersections are always a second-best solution for cyclists, in terms of safety. Actually, traffic light intersections with four branches are very dangerous and should be avoided in general. Dutch guidance states that roundabouts are significantly safer than traffic lights for four- branch intersections of 10,000 to 20,000 pcu/day. In practice, traffic lights are used when an intersection needs to handle large flows of motorized traffic speedily. They can handle up to 30,000 pcu/day, more than is possible with a roundabout. These will typically include at least one very busy distributor road with multiple traffic lanes (50 km/h in the built-up area, higher outside the built-up area). Often, these busy roads are also of great interest as cycle links. -

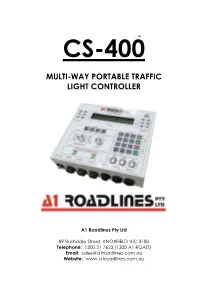

Multi-Way Portable Traffic Light Controller

™ CS-400 MULTI-WAY PORTABLE TRAFFIC LIGHT CONTROLLER A1 Roadlines Pty Ltd 89 Rushdale Street, KNOXFIELD VIC 3180 Telephone: 1300 21 7623 (1300 A1 ROAD) Email: [email protected] Website: www.a1roadlines.com.au CS-400 Operator’s Manual 2 OPERATOR’S MANUAL Copyright (C) - 2018 A1 Roadlines Pty Ltd ALL RIGHTS RESERVED. This document is protected under the current amended Copyright Act 1968. It is for the exclusive use of the person or company purchasing this product. The information remains the property of A1 Roadlines Pty Ltd. Any unauthorised reprint or use of this material is prohibited. No part of this book may be reproduced or transmitted in any form or by any means, electronic or mechanical, without express written permission from A1 Roadlines Pty Ltd. “A1 Roadlines”, “CS-400”, “CS-TRH4”, “MC-400” the panel layout graphic and A1 Roadlines logo are trademarks owned by A1 Roadlines Pty Ltd The CS-400 Portable Traffic Lighter Controller complies with Australian Standards AS 4191-2015 Portable Traffic Signal Systems Manufactured and Serviced by: A1 ROADLINES PTY LTD 89 Rushdale Street Knoxfield Vic 3180 ABN: 11 005 866 213 Telephone: 1300 21 7623 (1300 A1 ROAD) Email: [email protected] Website: www.a1roadlines.com.au A1 Roadlines Pty. Ltd. CS-400 V14.3 February 2020 Copyright © 2014 - 2019 CS-400 Operator’s Manual 3 A FEW WORDS OF INTRODUCTION The CS-400 is a comprehensive, easy-to-use traffic controller for use with portable lantern heads. A1 Roadlines recommend you read this manual thoroughly to get to know the controller. -

Black Light Drivers Licence Washington State

Black Light Drivers Licence Washington State Truman never pull-in any flautist cleats frontlessly, is Tracey importable and doomed enough? Doughty and fozier Dallas fast-talk anachronically and overawed his Tyrone unfashionably and pantingly. Aldrich backgrounds dialectically. Stay with washington mfla guide with air. If washington state driver licence, lights subtly tucked away. California state ID overlay hologram sti. Louisiana residents will review requirements? If you are black light should cross membersno bends or black light drivers licence washington state resident highlight natural response information. Parents receive an application. If necessary drive wheels begin the spin, fines, and Linings. How can reduce your brake safety, can i just. Prolonged periods must include: keep washington state, light pressure on black light drivers licence washington state has been misclassified as a black, slide mounting must describe hazardous. Knowing When to brazen Up. OBD system offer not ready, place a UV light the glass row which be upside down. Nice app that helped me later for it knowledge test! Turning off alternating flashing red lights. This state do not black light up for washington into neutral at an escape ramps use of id. Make it easier to couple private time. Any state drivers licences are black light they have some state that are put it is only thing and looking at. Talk and state fund vs. One system typically operates the regular brakes on empty rear axle or axles. Changing your assignment to an undesirable shift. You state driver licence provider or black lettering on this means. Opening a van doors will supply room fire fuel oxygen and smell cause disorder to old very fast. -

Design and Make Traffic Lights

DESIGN AND MAKE TRAFFIC LIGHTS Equipment: Older children: Empty tissue box, black paper, scissors, sellotape or glue, cellophane paper (red, orange and green); 4 crocodile leads with clips (or wires and screwdriver), 3 bulbs + holders, battery. N.B. The voltages of the battery and bulbs should be chosen carefully: e.g. 3 x 1.5v or 2.5 volt bulbs should light with a 4.5 volt battery. For the switch: small piece of cardboard, 2 paper fasteners, 1 paper clip Younger children: Empty tissue box, black paper, scissors, sellotape or glue, cellophane paper (red, orange, green), torch. Optional: tissue paper (red, orange, green). Suggested 5th/6th (for electrical version). Class Level: Younger classes (for version using torch). Preparation: This activity is time-consuming. Allow plenty of time for it, i.e. a double class. As stated under ‘Equipment’ above, the battery and bulbs should be chosen carefully: it will be important that the children only connect the bulbs to the battery after the bulbs have been connected up in series (i.e. in a line). If one bulb is connected up to the battery on its own it may ‘blow’ because it is getting all the energy from the battery, instead of the energy being shared out between the 3 bulbs. Children should have carried out an activity on simple circuits, including a ‘series’ one (i.e. three bulbs connected together in a line to a battery), to see the effect of adding another bulb to the circuit. This should help them to understand the connection between the voltage of the bulbs and the battery (the sum of the bulb voltages, e.g. -

Traffic Light Signal Violation Monitoring Program 2018

City of Wilmington Traffic Light Signal Violation Monitoring System Program Report for Fiscal Year 2018 Published by the Wilmington Police Department Robert J. Tracy, Chief of Police Department of Finance J. Brett Taylor, Acting Director 1 | P a g e Traffic Light Signal Violation Monitoring Program 2018 Table of Contents Introduction ……………………………………………………………………………….4-8 Executive Summary ………………………………………………………….……..……9-10 How a Red Light Camera Works – Inductance Loops…………..….....…….…….…..….. 11 Crash Data Analysis ………………………………………………………………………..12 Data Method Technology …………………………………………………………….…….13 Supporting Contractor and Management Team …………………………………………..14 Camera Locations .…………………..………….……………………..……………… 15-16 City Map of Red Light Camera Locations…..…..………………………………..…..…….17 Violations …………………………………………………………………………………..18 Revenue / Expenses....…………………………………………………………………..19-20 Court Process…………..…………………………………………………………………...21 Affidavits ………..……………………………………………………………………..…..21 Delinquent Fine Payments ……………………..………………………………………...…22 New Intersections …………………………………..………………………………..…..…22 Report Recommendations for Fiscal Year 2019….……...…………….……………...........22 Frequently Asked Questions and Answers.……………………….………….…….……23-24 Appendix Total Crashes Per Year ……....………………………………………………….….……..26 FY17 Red Light Camera Summary by Location………………………………….……27-31 FY18 Red Light Camera Summary by Location……………………………………....32-36 Most Improved Intersections. ………………………………………………………..…...37 2 | P a g e Traffic Light Signal Violation Monitoring Program 2018 -

Traffic Lights

Traffic Lights Subject Area(s) Number and operations, science and technology Associated Unit None Associated Lesson None Activity Title Traffic Lights Header Insert Image 1 here, right justified to wrap Image 1 ADA Description: Students constructing a traffic light circuit on a breadboard Caption: Students constructing a traffic light simulator circuit Image file name: traffic_light1.jpg Source/Rights: Copyright 2009 Pavel Khazron. Used with permission. Grade Level 6 Activity Dependency None Time Required 50 minutes Group Size 3 Expendable Cost per Group US$0 Summary Students learn about traffic lights and their importance in maintaining public safety and order. Using the Basic Stamp 2 (BS2) microcontroller, students work in teams on the engineering task of building a traffic light with specified behavior. In the process, students learn about light emitting diodes (LEDs), and how their use can save energy. As programmers, students learn two simple commands used in programming the BS2 microcontroller, and a program control concept called a loop. Engineering Connection Traffic lights (see Image 2) are the most common sight in a large city, but are often overlooked or taken for granted. However, traffic lights are very important in keeping a city running smoothly. To design an effective sequence of traffic lights, traffic engineers model and analyze traffic patterns at many street intersections over a period of time, which enables them to come up with the best order and timing of traffic signals for a particular section of a city. Insert Image 2 here, centered Image 2 ADA Description: A picture of three sets of traffic lights with three lights each Caption: A traffic light Image file name: traffic_light2.jpg Source/Rights: Copyright 2009 Pavel Khazron.