Lex & Yacc for Compiler Writing

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Wait, I Don't Want to Be the Linux Administrator for SAS VA

SESUG Paper 88-2017 Wait, I don’t want to be the Linux Administrator for SAS VA Jonathan Boase; Zencos Consulting ABSTRACT Whether you are a new SAS administrator or switching to a Linux environment, you have a complex mission. This job becomes even more formidable when you are working with a system like SAS Visual Analytics that requires multiple users loading data daily. Eventually a user will have data issues or create a disruption that causes the system to malfunction. When that happens, what do you do next? In this paper, we will go through the basics of a SAS Visual Analytics Linux environment and how to troubleshoot the system when issues arise. INTRODUCTION Many companies choose to implement SAS Visual Analytics in a Linux environment. With a distributed deployment, it’s the only choice but many chose this operating system because it reduces operating costs. If you are the newly chosen SAS platform administrator, you might be more versed in a Windows environment and feel intimidated by Linux. This paper introduces using basic Linux commands and methods for troubleshooting a SAS Visual Analytics environment. The paper assumes that SAS Visual Analytics is installed on a SAS 9.4 platform for Linux and that the reader has some familiarity with other operating systems, such as Windows. PLATFORM ADMINISTRATION 101 SAS platform administrators work with three main product areas. Each area provides a different functionality based on the task the administrator needs to perform. The following figure defines each area and provides a general overview of its purpose. 
Figure 1 Platform Administrator Tools

Operating System
• Contains installed software
• Contains logs used for troubleshooting
• Administer host system users

SAS Management Console
• Access and manage the metadata
• Control database connections
• Manage user accounts
• Manage the LASR server

SAS Environment Manager
• Monitor the environment
• Configure custom alerts

With any operating system, there is always a lot to learn.

Drilling Network Stacks with Packetdrill

Drilling Network Stacks with packetdrill
NEAL CARDWELL AND BARATH RAGHAVAN

Testing and troubleshooting network protocols and stacks can be painstaking. To ease this process, our team built packetdrill, a tool that lets you write precise scripts to test entire network stacks, from the system call layer down to the NIC hardware. packetdrill scripts use a familiar syntax and run in seconds, making them easy to use during development, debugging, and regression testing, and for learning and investigation.

Have you ever had the experience of staring at a long network trace, trying to figure out what on earth went wrong? When a network protocol is not working right, how might you find the problem and fix it? Although tools like tcpdump allow us to peek under the hood, and tools like netperf help measure networks end-to-end, reproducing behavior is still hard, and knowing when an issue has been fixed is even harder.

These are the exact problems that our team used to encounter on a regular basis during kernel network stack development. Here we describe packetdrill, which we built to enable scriptable network stack testing.

Neal Cardwell received an M.S. in Computer Science from the University of Washington, with research focused on TCP and Web performance. He joined Google in 2002. Since then he has worked on networking software for google.com, the Googlebot web crawler, the network stack in the Linux kernel, and TCP performance and testing. [email protected]

Barath Raghavan received a Ph.D. in Computer Science from UC San Diego and a B.S. from UC Berkeley. He joined Google in 2012 and was previously a

Project1: Build a Small Scanner/Parser

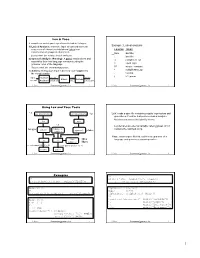

Project1: Build A Small Scanner/Parser
Introducing Lex, Yacc, and POET (cs5363)

Project1: Building A Scanner/Parser
Parse a subset of the C language
  Support two types of atomic values: int, float
  Support one type of compound values: arrays
  Support a basic set of language concepts
    Variable declarations (int, float, and array variables)
    Expressions (arithmetic and boolean operations)
    Statements (assignments, conditionals, and loops)
You can choose a different but equivalent language
  Need to make your own test cases
Options of implementation (links available at class web site)
  Manual in C/C++/Java (or whatever other language)
  Lex and Yacc (together with C/C++)
  POET: a scripting compiler writing language
  Or any other approach you choose; you must document how to download/use any tools involved

This is just starting…
There will be two other sub-projects
  Type checking: check the types of expressions in the input program
  Optimization/analysis/translation: do something with the input code, output the result
The starting project is important because it determines which language you can use for the other projects
  Lex+Yacc ===> can work only with C/C++
  POET ==> work with POET
  Manual ==> stick to whatever language you pick
This class: introduce Lex/Yacc/POET to you

Using Lex to build scanners
[Figure: MyLex.l -> lex/flex -> lex.yy.c -> gcc/cc -> a.out; input stream -> a.out -> tokens]
Write a lex specification
  Save it in a file (MyLex.l)
Compile the lex specification file by invoking lex/flex
  lex MyLex.l
  A lex.yy.c file is generated
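To make the scanner half of the project concrete, here is a minimal sketch in Python of what a generated scanner does for a small C subset. It is written by hand rather than produced by lex/flex, and the token names and patterns in RULES are illustrative assumptions, not taken from the course material. It follows the usual lex convention: at each position the longest match wins, and ties go to the rule listed first.

```python
import re

# Hypothetical token rules for a small C subset (names and patterns are
# assumptions made for this sketch). Order matters only for breaking ties.
RULES = [
    ("KEYWORD", r"int|float|if|else|while"),
    ("FLOAT",   r"[0-9]+\.[0-9]+"),
    ("INT",     r"[0-9]+"),
    ("ID",      r"[A-Za-z_][A-Za-z0-9_]*"),
    ("OP",      r"==|<=|>=|!=|&&|\|\||[-+*/<>=!]"),
    ("PUNCT",   r"[()\[\]{};,]"),
    ("SKIP",    r"[ \t\n]+"),
]
COMPILED = [(name, re.compile(pattern)) for name, pattern in RULES]


def scan(text):
    """Return a list of (token_name, lexeme) pairs for the input text."""
    tokens = []
    pos = 0
    while pos < len(text):
        best_name, best_end = None, pos
        # Try every rule at the current position and keep the longest match.
        # The strict '>' means an earlier rule wins when lengths are equal.
        for name, regex in COMPILED:
            match = regex.match(text, pos)
            if match and match.end() > best_end:
                best_name, best_end = name, match.end()
        if best_name is None:
            raise ValueError(f"unexpected character {text[pos]!r} at offset {pos}")
        if best_name != "SKIP":  # whitespace is matched but not reported
            tokens.append((best_name, text[pos:best_end]))
        pos = best_end
    return tokens


if __name__ == "__main__":
    for token in scan("float a[10];"):
        print(token)
```

Because the longest match wins, an input such as "integer" comes out as one ID token rather than the keyword int followed by "eger", while a bare "int" is still reported as a KEYWORD because that rule is listed before ID.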

GNU M4, Version 1.4.7 a Powerful Macro Processor Edition 1.4.7, 23 September 2006

GNU M4, version 1.4.7
A powerful macro processor
Edition 1.4.7, 23 September 2006
by René Seindal

This manual is for GNU M4 (version 1.4.7, 23 September 2006), a package containing an implementation of the m4 macro language. Copyright © 1989, 1990, 1991, 1992, 1993, 1994, 2004, 2005, 2006 Free Software Foundation, Inc. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or any later version published by the Free Software Foundation; with no Invariant Sections, no Front-Cover Texts, and no Back-Cover Texts. A copy of the license is included in the section entitled "GNU Free Documentation License."

Table of Contents
1 Introduction and preliminaries
1.1 Introduction to m4
1.2 Historical references
1.3 Invoking m4
1.4 Problems and bugs
1.5 Using this manual
2 Lexical and syntactic conventions
2.1 Macro names
2.2 Quoting input to m4
2.3 Comments in m4 input
2.4 Other kinds of input tokens
2.5 How m4 copies input to output
3 How to invoke macros

UNIX X Command Tips and Tricks David B

SESUG Paper 122-2019
UNIX X Command Tips and Tricks
David B. Horvath, MS, CCP

ABSTRACT
SAS® provides the ability to execute operating system level commands from within your SAS code, generically known as the "X Command". This session explores the various commands, the advantages and disadvantages of each, and their alternatives. The focus is on UNIX/Linux, but much of the same applies to Windows as well. Under SAS EG, any issued commands execute on the SAS engine, not necessarily on the PC.
X
%sysexec
Call system
Systask command
Filename pipe
&SYSRC
Waitfor
Alternatives will also be addressed: how to handle when NOXCMD is the default for your installation, saving results, and error checking.

INTRODUCTION
In this paper I will be covering some of the basics of the functionality within SAS that allows you to execute operating system commands from within your program. There are multiple ways you can do so: external to data steps, within data steps, and within macros. All of these, along with error checking, will be covered.

RELEVANT OPTIONS
Execution of any of the SAS System command execution commands depends on one option's setting:
XCMD  Enables the X command in SAS.
Which can only be set at startup:
    options xcmd;
            ____
            30
    WARNING 30-12: SAS option XCMD is valid only at startup of the SAS System. The SAS option is ignored.
Unfortunately, if NOXCMD is set at startup time, you're out of luck. Sorry! You might want to have a conversation with your system administrators to determine why and if you can get it changed.

GNU M4, Version 1.4.19 a Powerful Macro Processor Edition 1.4.19, 28 May 2021

GNU M4, version 1.4.19
A powerful macro processor
Edition 1.4.19, 28 May 2021
by René Seindal, François Pinard, Gary V. Vaughan, and Eric Blake ([email protected])

This manual (28 May 2021) is for GNU M4 (version 1.4.19), a package containing an implementation of the m4 macro language. Copyright © 1989–1994, 2004–2014, 2016–2017, 2020–2021 Free Software Foundation, Inc. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.3 or any later version published by the Free Software Foundation; with no Invariant Sections, no Front-Cover Texts, and no Back-Cover Texts. A copy of the license is included in the section entitled "GNU Free Documentation License."

Table of Contents
1 Introduction and preliminaries
1.1 Introduction to m4
1.2 Historical references
1.3 Problems and bugs
1.4 Using this manual
2 Invoking m4
2.1 Command line options for operation modes
2.2 Command line options for preprocessor features
2.3 Command line options for limits control
2.4 Command line options for frozen state
2.5 Command line options for debugging
2.6 Specifying input files on the command line

An AWK to C++ Translator

An AWK to C++ Translator
Brian W. Kernighan
Bell Laboratories, Murray Hill, NJ 07974
[email protected]

ABSTRACT
This paper describes an experiment to produce an AWK to C++ translator and an AWK class definition and supporting library. The intent is to generate efficient and readable C++, so that the generated code will run faster than interpreted AWK and can also be modified and extended where an AWK program could not be. The AWK class and library can also be used independently of the translator to access AWK facilities from C++ programs.

1. Introduction
An AWK program [1] is a sequence of pattern-action statements:

    pattern { action }
    pattern { action }
    ...

A pattern is a regular expression, numeric expression, string expression, or combination of these; an action is executable code similar to C. The operation of an AWK program is

    for each input line
        for each pattern
            if the pattern matches the input line
                do the action

Variables in an AWK program contain string or numeric values or both according to context; there are built-in variables for useful values such as the input filename and record number. Operators work on strings or numbers, coercing the types of their operands as necessary. Arrays have arbitrary subscripts ("associative arrays"). Input is read and input lines are split into fields, called $1, $2, etc. Control flow statements are like those of C: if-else, while, for, do. There are user-defined and built-in functions for arithmetic, string, regular expression pattern-matching, and input and output to/from arbitrary files and processes.
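The "for each input line, for each pattern" loop described in the introduction can be sketched in a few lines of Python. This is only an illustration of the control flow, not the paper's translator or its AWK class; the function name run_awk_like, the representation of rules as pairs of callables, and the sample rules are all assumptions made for the example.

```python
import re


def run_awk_like(rules, lines):
    """Apply every (pattern, action) rule to every input line, in order.

    pattern and action are callables taking (line, fields, record_number);
    fields[0] plays the role of AWK's $1, fields[1] of $2, and so on.
    """
    output = []
    for record_number, line in enumerate(lines, start=1):
        fields = line.split()
        for pattern, action in rules:
            if pattern(line, fields, record_number):
                output.append(action(line, fields, record_number))
    return output


# Two hypothetical rules: a regular-expression pattern and a numeric one.
RULES = [
    (lambda line, f, nr: re.search(r"error", line) is not None,
     lambda line, f, nr: f"{nr}: {line}"),
    (lambda line, f, nr: len(f) >= 2 and f[1].isdigit() and int(f[1]) > 100,
     lambda line, f, nr: f"big {f[0]}"),
]

if __name__ == "__main__":
    for result in run_awk_like(RULES, ["disk 250", "error 7", "net 12"]):
        print(result)
```

Every rule is tried on every line, so a single line can trigger several actions; that matches the nested loop in the pseudocode, where the inner loop does not stop at the first matching pattern.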

Make a Jump Start Together with Software Ag

Software AG Fast Startup
FAST STARTUP PROGRAM
MAKE A JUMP START TOGETHER WITH SOFTWARE AG

You have great ideas, a clear vision and the drive to build something really cool. You are smart, you are fast, you are innovative and you are determined to become a rock star of the startup community. Need some free software to build your solution on? Here’s the secret. When it comes to software partnership, the key differentiators are: technology leadership, ease of use, total cost of ownership and, most importantly, reliability.

Why Software AG?
The key to your success is a brilliant business idea, a sustainable business model, openhanded investors and finally paying customers. It’s a strong partnership that can be the differentiator in the success of your business. That’s why you should partner with Software AG. Building on 45 years of customer-centric innovation, we are ranked as a leader in key market categories, year after year. Our solutions are faster to implement and easier to use than anything else on the market.

Why not just freeware?
For a homegrown, non-mission-critical application, there is nothing wrong with choosing freeware. But if you need best-in-class functionality and reliability to build your business on, you should think twice about the software you use. What is your innovation? What do you require as the foundation for your intellectual property? Why should you spend time re-inventing what professional developers invented and optimized over many years for their paying customers? Do you want to grow your business or just your development team? How reliable are

Lex and Yacc

Lex and Yacc: A Quick Tour

HW8: Use Lex/Yacc to Turn this:

Here's a list:
  * This is item one of a list
  * This is item two. Lists should be
    indented four spaces, with each item
    marked by a "*" two spaces left of
    four-space margin. Lists may contain
    nested lists, like this:
      * Hi, I'm item one of an inner list.
      * Me two.
      * Item 3, inner.
  * Item 3, outer list.
This is outside both lists; should be back
to no indent.

Final suggestions

Into this:

<P> Here's a list:
<UL>
<LI> This is item one of a list
<LI>This is item two. Lists should be
indented four spaces, with each item
marked by a "*" two spaces left of four-
space margin. Lists may contain
nested lists, like this:<UL><LI> Hi, I'm
item one of an inner list. <LI>Me two.
<LI> Item 3, inner. </UL><LI> Item 3,
outer list.</UL>
This is outside both lists; should be
back to no indent.
<P><P> Final suggestions:

[Figure: the char stream "if myVar == 6.02e23**2 then f( ..." passes through LEX to become a token stream; the token stream passes through YACC to become a parse tree with nodes if-stmt, ==, fun call, var, **, Arg 1, Arg 2, float-lit, int-lit]

Lex / Yacc History
Origin: early 1970’s at Bell Labs
Many versions & many similar tools
  Lex, flex, jflex, posix, …
  Yacc, bison, byacc, CUP, posix, …
Targets C, C++, C#, Python, Ruby, ML, …
We’ll use jflex & byacc/j, targeting java (but for simplicity, I usually just say lex/yacc)

Uses
“Front end” of many real compilers
  E.g., gcc
“Little languages”:
  Many special purpose utilities evolve some clumsy, ad hoc, syntax
  Often easier, simpler, cleaner and more flexible to use lex/yacc or similar tools from the start

Lex: A Lexical Analyzer Generator
[Figure: my.flex -> jflex -> yylex.java]
Input:
  Regular exprs defining "tokens"
  Fragments of declarations & code
Output:
  A java program “yylex.java”
Use:
  Compile & link with your main()
  Calls to yylex() read chars & return successive tokens.
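The two stages in the slide's pipeline (char stream to token stream, token stream to parse tree) can be sketched for the condition "myVar == 6.02e23**2". The sketch below is in Python rather than jflex and byacc/j, and the parser is a small hand-written recursive-descent one, not the table-driven parser that yacc generates. The grammar, the token names, and the tuple-based tree shape are assumptions chosen to mirror the node labels in the slide (var, float-lit, int-lit, **, ==).

```python
import re

# One named group per token class; leading whitespace is skipped.
TOKEN_RE = re.compile(
    r"\s*(?:(?P<FLOAT>\d+\.\d+(?:[eE][+-]?\d+)?)"
    r"|(?P<INT>\d+)"
    r"|(?P<ID>[A-Za-z_]\w*)"
    r"|(?P<OP>\*\*|==))"
)


def tokenize(text):
    """Stage 1: turn the character stream into (kind, lexeme) tokens."""
    text = text.rstrip()
    tokens, pos = [], 0
    while pos < len(text):
        match = TOKEN_RE.match(text, pos)
        if match is None:
            raise ValueError(f"cannot tokenize at offset {pos}")
        tokens.append((match.lastgroup, match.group(match.lastgroup)))
        pos = match.end()
    return tokens


def parse(tokens):
    """Stage 2: build a tree for  comparison := power ('==' power)?
                                  power      := atom ('**' power)?   """
    pos = 0

    def peek():
        return tokens[pos] if pos < len(tokens) else (None, None)

    def advance():
        nonlocal pos
        pos += 1

    def atom():
        kind, lexeme = peek()
        labels = {"FLOAT": "float-lit", "INT": "int-lit", "ID": "var"}
        if kind not in labels:
            raise SyntaxError(f"unexpected token {lexeme!r}")
        advance()
        return (labels[kind], lexeme)

    def power():
        left = atom()
        if peek() == ("OP", "**"):
            advance()
            return ("**", left, power())  # recursion makes ** right-associative
        return left

    def comparison():
        left = power()
        if peek() == ("OP", "=="):
            advance()
            return ("==", left, power())
        return left

    tree = comparison()
    if pos != len(tokens):
        raise SyntaxError("trailing tokens after expression")
    return tree


if __name__ == "__main__":
    print(parse(tokenize("myVar == 6.02e23**2")))
```

Keeping the two functions separate mirrors the division of labor in the slides: the scanner knows only about regular expressions, and the parser sees only token kinds, never raw characters.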

The Cool Reference Manual∗

The Cool Reference Manual∗

∗Copyright © 1995-2000 by Alex Aiken. All rights reserved.

Contents
1 Introduction
2 Getting Started
3 Classes
3.1 Features
3.2 Inheritance
4 Types
4.1 SELF_TYPE
4.2 Type Checking
5 Attributes
5.1 Void
6 Methods
7 Expressions
7.1 Constants
7.2 Identifiers
7.3 Assignment
7.4 Dispatch
7.5 Conditionals
7.6 Loops
7.7 Blocks
7.8 Let
7.9 Case
7.10 New
7.11 Isvoid
7.12 Arithmetic and Comparison Operations
8 Basic Classes
8.1 Object
8.2 IO
8.3 Int
8.4 String
8.5 Bool
9 Main Class
10 Lexical Structure
10.1 Integers, Identifiers, and Special Notation
10.2 Strings
10.3 Comments
10.4 Keywords
10.5 White Space
11 Cool Syntax
11.1 Precedence
12 Type Rules
12.1 Type Environments
12.2 Type Checking Rules
13 Operational Semantics
13.1 Environment and the Store
13.2 Syntax for Cool Objects
13.3 Class definitions
13.4 Operational Rules
14 Acknowledgements

1 Introduction
This manual describes the programming language Cool: the Classroom Object-Oriented Language. Cool is a small language that can be implemented with reasonable effort in a one semester course. Still, Cool retains many of the features of modern programming languages including objects, static typing, and automatic memory management.

Usability Improvements for Products That Mandate Use of Command-Line Interface: Best Practices

Usability improvements for products that mandate use of command-line interface: Best Practices
Samrat Dutta
M.Tech, International Institute of Information Technology, Electronics City, Bangalore
Software Engineer, IBM Storage Labs, Pune
[email protected]

ABSTRACT
This paper presents a few methods to improve the usability of products that mandate the use of a command-line interface. At present, many products make command-line interfaces compulsory for performing some operations. In such environments, usability of the product becomes the link that binds the users to the product. This paper describes a few mechanisms, such as a consolidated hierarchical help structure for the complete product, auto-complete command-line features, and intelligent command suggestions. These can be formalized as a pattern and embedded by software companies into their products' command-line interfaces, to simplify usability and provide a better experience, so that users can adapt to the product much faster.

INTRODUCTION
Products that are designed around a command-line interface (CLI) often struggle with usability issues. A blank prompt with a blinking cursor, waiting for input, does not provide much information about the functions and possibilities available. With no clickable options and no hover-over facility to view snippets, some users feel lost. Because all inputs are commands, learning and gaining expertise in all of them takes time. Learning a single letter for each command (often the first letter of the command is used instead of the complete command to reduce stress) is not that difficult, but this is of little use when the command itself is not known.
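Two of the mechanisms named in the abstract, auto-completion and command suggestions, can be sketched with the Python standard library. This is an illustration only and not the paper's design: the command list is hypothetical, complete() does plain prefix matching, and suggest() relies on difflib.get_close_matches, which ranks candidates by string similarity.

```python
import difflib

# Hypothetical command set, chosen only for this illustration.
COMMANDS = ["list", "load", "login", "logout", "status", "start", "stop"]


def complete(prefix, commands=COMMANDS):
    """Auto-complete: every known command that starts with the typed prefix."""
    return sorted(c for c in commands if c.startswith(prefix))


def suggest(word, commands=COMMANDS, limit=3):
    """Suggestion: known commands that look similar to a mistyped word."""
    return difflib.get_close_matches(word, commands, n=limit, cutoff=0.6)


if __name__ == "__main__":
    print(complete("lo"))
    print(suggest("statsu"))
```

A CLI could call complete() when the user presses a completion key and suggest() after rejecting an unknown command, so that an error message can end with a concrete "did you mean" hint instead of a bare failure.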

Lexical Analysis Lexeme Token Using Lex and Yacc Tools Examples

Lex & Yacc
K. Dincer, Programming Languages - Lex

A compiler or an interpreter performs its task in 3 stages:
1) Lexical Analysis: scans the input stream and converts sequences of characters into tokens (a token is a classification of groups of characters). Lex is a tool for writing lexical analyzers.
2) Syntactic Analysis (Parsing): a parser reads tokens and assembles them into language constructs using the grammar rules of the language. Yacc is a tool for constructing parsers.
3) Actions: acting upon input is done by code supplied by the compiler writer.

[Figure: input stream -> Lexical Analyzer -> stream of tokens -> Parser -> parse tree -> Actions -> output executable]

Example: Lexical analysis

    Lexeme    Token
    Sum       identifier
    i         identifier
    :=        assignment_op
    =         equal_sign
    57        integer_constant
    *         multiplication_op
    ,         comma
    (         left_paran

Using Lex and Yacc Tools
Lex: reads a spec file containing regular expressions and generates a C routine that performs lexical analysis. Matches sequences that identify tokens. Lex declares an external variable called yytext which contains the matched string.
Yacc: reads a spec file that codifies the grammar of a language and generates a parsing routine.

[Figure: a Lex specification (*.l) is processed by lex into lex.yy.c, which defines yylex(); a Yacc specification (*.y) is processed by yacc into y.tab.c, which defines yyparse(). These are compiled with cc together with custom C routines (*.c) and the Unix libraries libl.a and liby.a]

    cc -o scanner lex.yy.c -ll
    cc -o parser y.tab.c -ly -ll

Examples

    %%
    [A-Z]+[ \t\n]    printf("%s", yytext);
    .                ; /* no action specified */

    %%
    [+-]?[0-9]*(\.)?[0-9]+    printf("FLOAT");

    digit [0-9]
    %%
    [+-]?{digit}*(\.)?{digit}+    printf("FLOAT");

    digit [0-9]
    alphabetic [A-Za-z]
    alphanumeric ({alphabetic}|{digit})
    %%
    {alphabetic}{alphanumeric}*    printf("variable");
    \,      printf("Comma");
    \{      printf("Left brace");
    \:\=    printf("Assignment");

    digit [0-9]
    sign [+-]
    %%
        float val;
    {sign}?{digit}*(\.)?{digit}+    {sscanf(yytext, "%f", &val); printf(">%f<", val);
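The regular expressions in the Examples slide can be tried out without running lex. The sketch below copies the FLOAT and variable patterns into Python's re module, which accepts the same syntax for these particular expressions; the classify helper and its return labels are assumptions made for the demonstration, and fullmatch is used to test a whole lexeme rather than to scan an input stream as yylex() would.

```python
import re

# Patterns copied from the slide, with {digit} and {alphabetic} expanded.
FLOAT = re.compile(r"[+-]?[0-9]*(\.)?[0-9]+")
VARIABLE = re.compile(r"[A-Za-z]([A-Za-z]|[0-9])*")

# Fixed lexemes from the slide's rules for \, \{ and \:\=
FIXED = {",": "Comma", "{": "Left brace", ":=": "Assignment"}


def classify(lexeme):
    """Return the label the slide's rules would print for a whole lexeme."""
    if FLOAT.fullmatch(lexeme):
        return "FLOAT"
    if VARIABLE.fullmatch(lexeme):
        return "variable"
    return FIXED.get(lexeme, "unmatched")


if __name__ == "__main__":
    for sample in ["-3.14", ".5", "57", "3.", "Sum", ":="]:
        print(repr(sample), classify(sample))
```

Two properties of the FLOAT pattern are worth noticing when experimenting: because the decimal point is optional, a plain integer such as 57 is also labelled FLOAT, and because at least one digit must follow the point, an input such as "3." is not accepted as a whole.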