Exploiting Parallelism in Geometry Processing with General Purpose Processors and Floating-Point SIMD Instructions

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Chapter 1: Computer Abstractions and Technology 1.6 – 1.7: Performance and Power

Chapter 1: Computer Abstractions and Technology 1.6 – 1.7: Performance and power ITSC 3181 Introduction to Computer Architecture https://passlaB.githuB.io/ITSC3181/ Department of Computer Science Yonghong Yan [email protected] https://passlab.github.io/yanyh/ Lectures for Chapter 1 and C Basics Computer Abstractions and Technology • Lecture 01: Chapter 1 – 1.1 – 1.4: Introduction, great ideas, Moore’s law, aBstraction, computer components, and program execution • Lecture 02: C Basics; Memory and Binary Systems • Lecture 03: Number System, Compilation, Assembly, Linking and Program Execution ☛• Lecture 04: Chapter 1 – 1.6 – 1.7: Performance, power and technology trends • Lecture 05: – 1.8 - 1.9: Multiprocessing and Benchmarking 2 § 1.6 Performance 1.6 Defining Performance • Which airplane has the best performance? Boeing 777 Boeing 777 Boeing 747 Boeing 747 BAC/Sud BAC/Sud Concorde Concorde Douglas Douglas DC- DC-8-50 8-50 0 100 200 300 400 500 0 2000 4000 6000 8000 10000 Passenger Capacity Cruising Range (miles) Boeing 777 Boeing 777 Boeing 747 Boeing 747 BAC/Sud BAC/Sud Concorde Concorde Douglas Douglas DC- DC-8-50 8-50 0 500 1000 1500 0 100000 200000 300000 400000 Cruising Speed (mph) Passengers x mph 3 Response Time and Throughput • Response time çè Latency – How long it takes to do a task • Throughput çè Bandwidth – Total work done per unit time • e.g., tasks/transactions/… per hour • How are response time and throughput affected by – Replacing the processor with a faster version? – Adding more processors? • We’ll focus on response time for now… 4 Relative Performance • Define Performance = 1/Execution Time • “X is n time faster than Y”, i.e. -

GPU Implementation Over IPTV Software Defined Networking

Esmeralda Hysenbelliu. Int. Journal of Engineering Research and Application www.ijera.com ISSN : 2248-9622, Vol. 7, Issue 8, ( Part -1) August 2017, pp.41-45 RESEARCH ARTICLE OPEN ACCESS GPU Implementation over IPTV Software Defined Networking Esmeralda Hysenbelliu* Information Technology Faculty, Polytechnic University of Tirana, Sheshi “Nënë Tereza”, Nr.4, Tirana, Albania Corresponding author: Esmeralda Hysenbelliu ABSTRACT One of the most important issue in IPTV Software defined Network is Bandwidth Issue and Quality of Service at the client side. Decidedly, it is required high level quality of images in low bandwidth and for this reason it is needed different transcoding standards (Compression of image as much as it is possible without destroying the quality of it) as H.264, H265, VP8 and VP9. During a test performed in SMC IPTV SDN Cloud Network, it was observed that with a server HP ProLiant DL380 g6 with two physical processors there was not possible to transcode in format H.264 more than 30 channels simultaneously because CPU’s achieved 100%. This is the reason why it was immediately needed to use Graphic Processing Units called GPU’s which offer high level images processing. After GPU superscalar processor was integrated and done functional via module NVENC of FFEMPEG Program, number of channels transcoded simultaneously was tremendous increased (more than 100 channels). The aim of this paper is to real implement GPU superscalar processors in IPTV Cloud Networks by achieving improvement of performance to more than 60%. Keywords - GPU superscalar processor, Performance Improvement, NVENC, CUDA --------------------------------------------------------------------------------------------------------------------------------------- Date of Submission: 01 -05-2017 Date of acceptance: 19-08-2017 --------------------------------------------------------------------------------------------------------------------------------------- I. -

Computer Hardware Architecture Lecture 4

Computer Hardware Architecture Lecture 4 Manfred Liebmann Technische Universit¨atM¨unchen Chair of Optimal Control Center for Mathematical Sciences, M17 [email protected] November 10, 2015 Manfred Liebmann November 10, 2015 Reading List • Pacheco - An Introduction to Parallel Programming (Chapter 1 - 2) { Introduction to computer hardware architecture from the parallel programming angle • Hennessy-Patterson - Computer Architecture - A Quantitative Approach { Reference book for computer hardware architecture All books are available on the Moodle platform! Computer Hardware Architecture 1 Manfred Liebmann November 10, 2015 UMA Architecture Figure 1: A uniform memory access (UMA) multicore system Access times to main memory is the same for all cores in the system! Computer Hardware Architecture 2 Manfred Liebmann November 10, 2015 NUMA Architecture Figure 2: A nonuniform memory access (UMA) multicore system Access times to main memory differs form core to core depending on the proximity of the main memory. This architecture is often used in dual and quad socket servers, due to improved memory bandwidth. Computer Hardware Architecture 3 Manfred Liebmann November 10, 2015 Cache Coherence Figure 3: A shared memory system with two cores and two caches What happens if the same data element z1 is manipulated in two different caches? The hardware enforces cache coherence, i.e. consistency between the caches. Expensive! Computer Hardware Architecture 4 Manfred Liebmann November 10, 2015 False Sharing The cache coherence protocol works on the granularity of a cache line. If two threads manipulate different element within a single cache line, the cache coherency protocol is activated to ensure consistency, even if every thread is only manipulating its own data. -

Superscalar Fall 2020

CS232 Superscalar Fall 2020 Superscalar What is superscalar - A superscalar processor has more than one set of functional units and executes multiple independent instructions during a clock cycle by simultaneously dispatching multiple instructions to different functional units in the processor. - You can think of a superscalar processor as there are more than one washer, dryer, and person who can fold. So, it allows more throughput. - The order of instruction execution is usually assisted by the compiler. The hardware and the compiler assure that parallel execution does not violate the intent of the program. - Example: • Ordinary pipeline: four stages (Fetch, Decode, Execute, Write back), one clock cycle per stage. Executing 6 instructions take 9 clock cycles. I0: F D E W I1: F D E W I2: F D E W I3: F D E W I4: F D E W I5: F D E W cc: 1 2 3 4 5 6 7 8 9 • 2-degree superscalar: attempts to process 2 instructions simultaneously. Executing 6 instructions take 6 clock cycles. I0: F D E W I1: F D E W I2: F D E W I3: F D E W I4: F D E W I5: F D E W cc: 1 2 3 4 5 6 Limitations of Superscalar - The above example assumes that the instructions are independent of each other. So, it’s easily to push them into the pipeline and superscalar. However, instructions are usually relevant to each other. Just like the hazards in pipeline, superscalar has limitations too. - There are several fundamental limitations the system must cope, which are true data dependency, procedural dependency, resource conflict, output dependency, and anti- dependency. -

Clock Rate Improves Roughly Proportional to Improvement in L • Number of Transistors Improves Proportional to L2 (Or Faster)

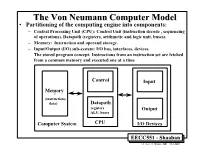

TheThe VonVon NeumannNeumann ComputerComputer ModelModel • Partitioning of the computing engine into components: – Central Processing Unit (CPU): Control Unit (instruction decode , sequencing of operations), Datapath (registers, arithmetic and logic unit, buses). – Memory: Instruction and operand storage. – Input/Output (I/O) sub-system: I/O bus, interfaces, devices. – The stored program concept: Instructions from an instruction set are fetched from a common memory and executed one at a time Control Input Memory - (instructions, data) Datapath registers Output ALU, buses Computer System CPU I/O Devices EECC551 - Shaaban #1 Lec # 1 Winter 2001 12-3-2001 Generic CPU Machine Instruction Execution Steps Instruction Obtain instruction from program storage Fetch Instruction Determine required actions and instruction size Decode Operand Locate and obtain operand data Fetch Execute Compute result value or status Result Deposit results in storage for later use Store Next Determine successor or next instruction Instruction EECC551 - Shaaban #2 Lec # 1 Winter 2001 12-3-2001 HardwareHardware ComponentsComponents ofof AnyAny ComputerComputer Five classic components of all computers: 1. Control Unit; 2. Datapath; 3. Memory; 4. Input; 5. Output } Processor Computer Keyboard, Mouse, etc. Processor Memory Devices (active) (passive) Control Input (where Unit programs, data Disk Datapath live when Output running) Display, Printer, etc. EECC551 - Shaaban #3 Lec # 1 Winter 2001 12-3-2001 CPUCPU OrganizationOrganization • Datapath Design: – Capabilities & performance characteristics of principal Functional Units (FUs): • (e.g., Registers, ALU, Shifters, Logic Units, ...) – Ways in which these components are interconnected (buses connections, multiplexors, etc.). – How information flows between components. • Control Unit Design: – Logic and means by which such information flow is controlled. – Control and coordination of FUs operation to realize the targeted Instruction Set Architecture to be implemented (can either be implemented using a finite state machine or a microprogram). -

The Multiscalar Architecture

THE MULTISCALAR ARCHITECTURE by MANOJ FRANKLIN A thesis submitted in partial ful®llment of the requirements for the degree of DOCTOR OF PHILOSOPHY (Computer Sciences) at the UNIVERSITY OF WISCONSIN Ð MADISON 1993 THE MULTISCALAR ARCHITECTURE Manoj Franklin Under the supervision of Associate Professor Gurindar S. Sohi at the University of Wisconsin-Madison ABSTRACT The centerpiece of this thesis is a new processing paradigm for exploiting instruction level parallelism. This paradigm, called the multiscalar paradigm, splits the program into many smaller tasks, and exploits ®ne-grain parallelism by executing multiple, possibly (control and/or data) depen- dent tasks in parallel using multiple processing elements. Splitting the instruction stream at statically determined boundaries allows the compiler to pass substantial information about the tasks to the hardware. The processing paradigm can be viewed as extensions of the superscalar and multiprocess- ing paradigms, and shares a number of properties of the sequential processing model and the data¯ow processing model. The multiscalar paradigm is easily realizable, and we describe an implementation of the multis- calar paradigm, called the multiscalar processor. The central idea here is to connect multiple sequen- tial processors, in a decoupled and decentralized manner, to achieve overall multiple issue. The mul- tiscalar processor supports speculative execution, allows arbitrary dynamic code motion (facilitated by an ef®cient hardware memory disambiguation mechanism), exploits communication localities, and does all of these with hardware that is fairly straightforward to build. Other desirable aspects of the implementation include decentralization of the critical resources, absence of wide associative searches, and absence of wide interconnection/data paths. -

Atmega165p Datasheet

Features • High Performance, Low Power Atmel® AVR® 8-Bit Microcontroller • Advanced RISC Architecture – 130 Powerful Instructions – Most Single Clock Cycle Execution – 32 × 8 General Purpose Working Registers – Fully Static Operation – Up to 16 MIPS Throughput at 16 MHz – On-Chip 2-cycle Multiplier • High Endurance Non-volatile Memory segments – 16 Kbytes of In-System Self-programmable Flash program memory – 512 Bytes EEPROM – 1 Kbytes Internal SRAM 8-bit – Write/Erase cyles: 10,000 Flash/100,000 EEPROM(1)(3) – Data retention: 20 years at 85°C/100 years at 25°C(2)(3) Microcontroller – Optional Boot Code Section with Independent Lock Bits In-System Programming by On-chip Boot Program with 16K Bytes True Read-While-Write Operation – Programming Lock for Software Security In-System • JTAG (IEEE std. 1149.1 compliant) Interface – Boundary-scan Capabilities According to the JTAG Standard Programmable – Extensive On-chip Debug Support – Programming of Flash, EEPROM, Fuses, and Lock Bits through the JTAG Interface Flash • Peripheral Features – Two 8-bit Timer/Counters with Separate Prescaler and Compare Mode – One 16-bit Timer/Counter with Separate Prescaler, Compare Mode, and Capture Mode – Real Time Counter with Separate Oscillator –Four PWM Channels ATmega165P – 8-channel, 10-bit ADC – Programmable Serial USART ATmega165PV – Master/Slave SPI Serial Interface – Universal Serial Interface with Start Condition Detector – Programmable Watchdog Timer with Separate On-chip Oscillator – On-chip Analog Comparator Preliminary – Interrupt and Wake-up -

Performance of a Computer (Chapter 4) Vishwani D

ELEC 5200-001/6200-001 Computer Architecture and Design Fall 2013 Performance of a Computer (Chapter 4) Vishwani D. Agrawal & Victor P. Nelson epartment of Electrical and Computer Engineering Auburn University, Auburn, AL 36849 ELEC 5200-001/6200-001 Performance Fall 2013 . Lecture 1 What is Performance? Response time: the time between the start and completion of a task. Throughput: the total amount of work done in a given time. Some performance measures: MIPS (million instructions per second). MFLOPS (million floating point operations per second), also GFLOPS, TFLOPS (1012), etc. SPEC (System Performance Evaluation Corporation) benchmarks. LINPACK benchmarks, floating point computing, used for supercomputers. Synthetic benchmarks. ELEC 5200-001/6200-001 Performance Fall 2013 . Lecture 2 Small and Large Numbers Small Large 10-3 milli m 103 kilo k 10-6 micro μ 106 mega M 10-9 nano n 109 giga G 10-12 pico p 1012 tera T 10-15 femto f 1015 peta P 10-18 atto 1018 exa 10-21 zepto 1021 zetta 10-24 yocto 1024 yotta ELEC 5200-001/6200-001 Performance Fall 2013 . Lecture 3 Computer Memory Size Number bits bytes 210 1,024 K Kb KB 220 1,048,576 M Mb MB 230 1,073,741,824 G Gb GB 240 1,099,511,627,776 T Tb TB ELEC 5200-001/6200-001 Performance Fall 2013 . Lecture 4 Units for Measuring Performance Time in seconds (s), microseconds (μs), nanoseconds (ns), or picoseconds (ps). Clock cycle Period of the hardware clock Example: one clock cycle means 1 nanosecond for a 1GHz clock frequency (or 1GHz clock rate) CPU time = (CPU clock cycles)/(clock rate) Cycles per instruction (CPI): average number of clock cycles used to execute a computer instruction. -

Chap01: Computer Abstractions and Technology

CHAPTER 1 Computer Abstractions and Technology 1.1 Introduction 3 1.2 Eight Great Ideas in Computer Architecture 11 1.3 Below Your Program 13 1.4 Under the Covers 16 1.5 Technologies for Building Processors and Memory 24 1.6 Performance 28 1.7 The Power Wall 40 1.8 The Sea Change: The Switch from Uniprocessors to Multiprocessors 43 1.9 Real Stuff: Benchmarking the Intel Core i7 46 1.10 Fallacies and Pitfalls 49 1.11 Concluding Remarks 52 1.12 Historical Perspective and Further Reading 54 1.13 Exercises 54 CMPS290 Class Notes (Chap01) Page 1 / 24 by Kuo-pao Yang 1.1 Introduction 3 Modern computer technology requires professionals of every computing specialty to understand both hardware and software. Classes of Computing Applications and Their Characteristics Personal computers o A computer designed for use by an individual, usually incorporating a graphics display, a keyboard, and a mouse. o Personal computers emphasize delivery of good performance to single users at low cost and usually execute third-party software. o This class of computing drove the evolution of many computing technologies, which is only about 35 years old! Server computers o A computer used for running larger programs for multiple users, often simultaneously, and typically accessed only via a network. o Servers are built from the same basic technology as desktop computers, but provide for greater computing, storage, and input/output capacity. Supercomputers o A class of computers with the highest performance and cost o Supercomputers consist of tens of thousands of processors and many terabytes of memory, and cost tens to hundreds of millions of dollars. -

RAMP: Research Accelerator for Multiple Processors

Technical Report UCB//CSD-05-1412, September 2005 RAMP: Research Accelerator for Multiple Processors - A Community Vision for a Shared Experimental Parallel HW/SW Platform Arvind (MIT), Krste Asanovic´ (MIT), Derek Chiou (UT Austin), James C. Hoe (CMU), Christoforos Kozyrakis (Stanford), Shih-Lien Lu (Intel), Mark Oskin (U Washington), David Patterson (UC Berkeley), Jan Rabaey (UC Berkeley), and John Wawrzynek (UC Berkeley) Project Summary Desktop processor architectures have crossed a critical threshold. Manufactures have given up attempting to extract ever more performance from a single core and instead have turned to multi-core designs. While straightforward approaches to the architecture of multi-core processors are sufficient for small designs (2–4 cores), little is really known how to build, program, or manage systems of 64 to 1024 processors. Unfortunately, the computer architecture community lacks the basic infrastructure tools required to carry out this research. While simulation has been adequate for single-processor research, significant use of simplified modeling and statistical sampling is required to work in the 2–16 processing core space. Invention is required for architecture research at the level of 64–1024 cores. Fortunately, Moore’s law has not only enabled these dense multi-core chips, it has also enabled extremely dense FPGAs. Today, for a few hundred dollars, undergraduates can work with an FPGA prototype board with almost as many gates as a Pentium. Given the right support, the research community can capitalize on this opportunity too. Today, one to two dozen cores can be programmed into a single FPGA. With multiple FPGAs on a board and multiple boards in a system, large complex architectures can be explored. -

Trends in Processor Architecture

A. González Trends in Processor Architecture Trends in Processor Architecture Antonio González Universitat Politècnica de Catalunya, Barcelona, Spain 1. Past Trends Processors have undergone a tremendous evolution throughout their history. A key milestone in this evolution was the introduction of the microprocessor, term that refers to a processor that is implemented in a single chip. The first microprocessor was introduced by Intel under the name of Intel 4004 in 1971. It contained about 2,300 transistors, was clocked at 740 KHz and delivered 92,000 instructions per second while dissipating around 0.5 watts. Since then, practically every year we have witnessed the launch of a new microprocessor, delivering significant performance improvements over previous ones. Some studies have estimated this growth to be exponential, in the order of about 50% per year, which results in a cumulative growth of over three orders of magnitude in a time span of two decades [12]. These improvements have been fueled by advances in the manufacturing process and innovations in processor architecture. According to several studies [4][6], both aspects contributed in a similar amount to the global gains. The manufacturing process technology has tried to follow the scaling recipe laid down by Robert N. Dennard in the early 1970s [7]. The basics of this technology scaling consists of reducing transistor dimensions by a factor of 30% every generation (typically 2 years) while keeping electric fields constant. The 30% scaling in the dimensions results in doubling the transistor density (doubling transistor density every two years was predicted in 1975 by Gordon Moore and is normally referred to as Moore’s Law [21][22]). -

Intro Evolution of Superscalar Processor

Superscalar Processors Superscalar Processors vs. VLIW • 7.1 Introduction • 7.2 Parallel decoding • 7.3 Superscalar instruction issue • 7.4 Shelving • 7.5 Register renaming • 7.6 Parallel execution • 7.7 Preserving the sequential consistency of instruction execution • 7.8 Preserving the sequential consistency of exception processing • 7.9 Implementation of superscalar CISC processors using a superscalar RISC core • 7.10 Case studies of superscalar processors TECH Computer Science CH01 Superscalar Processor: Intro Emergence and spread of superscalar processors • Parallel Issue • Parallel Execution • {Hardware} Dynamic Instruction Scheduling • Currently the predominant class of processors 4Pentium 4PowerPC 4UltraSparc 4AMD K5- 4HP PA7100- 4DEC α Evolution of superscalar processor Specific tasks of superscalar processing Parallel decoding {and Dependencies check} Decoding and Pre-decoding • What need to be done • Superscalar processors tend to use 2 and sometimes even 3 or more pipeline cycles for decoding and issuing instructions • >> Pre-decoding: 4shifts a part of the decode task up into loading phase 4resulting of pre-decoding f the instruction class f the type of resources required for the execution f in some processor (e.g. UltraSparc), branch target addresses calculation as well 4the results are stored by attaching 4-7 bits • + shortens the overall cycle time or reduces the number of cycles needed The principle of perdecoding Number of perdecode bits used Specific tasks of superscalar processing: Issue 7.3 Superscalar instruction issue • How and when to send the instruction(s) to EU(s) Instruction issue policies of superscalar processors: Issue policies ---Performance, tread-----Æ Issue rate {How many instructions/cycle} Issue policies: Handing Issue Blockages • CISC about 2 • RISC: Issue stopped by True dependency Issue order of instructions • True dependency Æ (Blocked: need to wait) Aligned vs.