Hacking Web Performance: Moving Beyond the Basics of Web Performance Optimization

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Data Compression: Dictionary-Based Coding

Dictionary-based Coding. [Figure: sliding window split at the cursor into an already-coded search buffer (N symbols) and a not-yet-coded look-ahead buffer (L symbols).] We know the past but cannot control it. We control the future but... Last Lecture: Predictive Lossless Coding. Predictive lossless coding is a simple and effective way to exploit dependencies between neighboring symbols or samples. Optimal predictor: the conditional mean (requires storage of large tables). Affine and linear prediction: simple structure, low-complexity implementation possible; the optimal prediction parameters are given by the solution of the Yule-Walker equations; works very well for real signals (e.g., audio, images, ...). Efficient lossless coding for real-world signals: affine/linear prediction (often with a block-adaptive choice of prediction parameters); entropy coding of the prediction errors (e.g., arithmetic coding); using the marginal pmf often already yields good results; this can be improved by using conditional pmfs (with simple conditions). Heiko Schwarz (Freie Universität Berlin), Data Compression: Dictionary-based Coding, slide 2 / 37. Coding of Text Files: very high amount of dependencies; affine prediction does not work (it requires linear dependencies); higher-order conditional coding should work well, but is way too complex (memory). Alternative: do not code single characters, but words or phrases. Example: English texts. The Oxford English Dictionary lists fewer than 230 000 words (including obsolete words). On average, a word contains about 6 characters. Average codeword length per character would be limited by 1 -

A New Way of Accelerating Web by Compressing Data with Back Reference-Prefer Geflochtener

442 The International Arab Journal of Information Technology, Vol. 14, No. 4, July 2017. A New Way of Accelerating Web by Compressing Data with Back Reference-Prefer Geflochtener. Kushwaha Singh (1), Challa Krishna (2), and Saini Kumar (1). (1) Department of Computer Science and Engineering, Rajasthan Technical University, India; (2) Department of Computer Science and Engineering, Panjab University, India. Abstract: This research focused on the synthesis of an iterative approach to improve speed of the web and also learning the new methodology to compress the large data with enhanced backward reference preference. In addition, observations on the outcomes obtained from experimentation, group-benchmarks compressions, and time splays for transmissions, the proposed system have been analysed. This resulted in improving the compression of textual data in the Web pages and with this it also gains an interest in hardening the cryptanalysis of the data by maximum reducing the redundancies. This removes unnecessary redundancies with 70% efficiency and compress pages with the 23.75-35% compression ratio. Keywords: Backward references, shortest path technique, HTTP, iterative compression, web, LZSS and LZ77. Received April 25, 2014; accepted August 13, 2014.
1. Introduction: Compression is the reduction in data size of information to save space and bandwidth. This can be done on Web data or the entire page including the header. Data compression is a technique of removing white spaces, inserting a single repeater for the repeated bytes and replace smaller bits for frequent characters. Data Compression (DC) is not only the cost effective technique due to its small size for data storage but it also increases the data transfer rate in data communication [2].
Algorithm 1: LZ77 Compression: if a sufficient length is matched or it may correlate better with next input. While (! empty lookaheadBuffer) { get a remission (position, length) to longer match from search buffer; if (length>0) { Output (position, length, nextsymbol); transpose the window length+1 position along; } -
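The LZ77 loop quoted in this excerpt (find the longest match in the search buffer, output a (position, length, nextsymbol) triple, then move the window length+1 positions along) can be sketched in Python as follows. This is a minimal illustration and not the paper's implementation: the function names, the buffer sizes, and the choice to emit a (0, 0, symbol) triple when no match is found are all assumptions made here, since the excerpt is cut off before its else branch.

```python
from typing import List, Tuple

# (offset back from the cursor, match length, next literal symbol)
Token = Tuple[int, int, str]


def lz77_compress(data: str, search_size: int = 16,
                  lookahead_size: int = 8) -> List[Token]:
    """Greedy LZ77 sketch; buffer sizes are illustrative assumptions."""
    tokens: List[Token] = []
    cursor = 0
    while cursor < len(data):  # "while the look-ahead buffer is not empty"
        best_offset, best_length = 0, 0
        window_start = max(0, cursor - search_size)
        # Leave one symbol unmatched so every token can carry a literal.
        max_length = min(lookahead_size, len(data) - cursor - 1)
        for start in range(window_start, cursor):
            length = 0
            # A match may run past the cursor (overlapping copy).
            while (length < max_length
                   and data[start + length] == data[cursor + length]):
                length += 1
            if length > best_length:
                best_offset, best_length = cursor - start, length
        next_symbol = data[cursor + best_length]
        # Assumption: with no match we still emit (0, 0, symbol).
        tokens.append((best_offset, best_length, next_symbol))
        cursor += best_length + 1  # move the window length+1 positions
    return tokens


def lz77_decompress(tokens: List[Token]) -> str:
    """Rebuild the text by replaying each back-reference and literal."""
    out: List[str] = []
    for offset, length, symbol in tokens:
        for _ in range(length):
            out.append(out[-offset])  # copy one symbol at a time
        out.append(symbol)
    return "".join(out)
```

Calling `lz77_decompress(lz77_compress(text))` should return `text` unchanged. Production formats such as DEFLATE combine this kind of back-reference search with entropy coding and far larger windows, which this sketch leaves out.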

Let's Encrypt: An Automated Certificate Authority to Encrypt the Entire Web

Let’s Encrypt: An Automated Certificate Authority to Encrypt the Entire Web. Josh Aas∗ (Let’s Encrypt), Richard Barnes∗ (Cisco), Benton Case (Stanford University), Zakir Durumeric (Stanford University), Peter Eckersley∗ (Electronic Frontier Foundation), Alan Flores-López (Stanford University), J. Alex Halderman∗† (University of Michigan), Jacob Hoffman-Andrews∗ (Electronic Frontier Foundation), James Kasten∗ (University of Michigan), Eric Rescorla∗ (Mozilla), Seth Schoen∗ (Electronic Frontier Foundation), Brad Warren∗ (Electronic Frontier Foundation). ABSTRACT: Let’s Encrypt is a free, open, and automated HTTPS certificate authority (CA) created to advance HTTPS adoption to the entire Web. Since its launch in late 2015, Let’s Encrypt has grown to become the world’s largest HTTPS CA, accounting for more currently valid certificates than all other browser-trusted CAs combined. By January 2019, it had issued over 538 million certificates for 223 million domain names. We describe how we built Let’s Encrypt, including the architecture of the CA software system (Boulder) and the structure of the organization that operates it (ISRG), and we discuss lessons learned from the experience. 1 INTRODUCTION: HTTPS [78] is the cryptographic foundation of the Web, providing an encrypted and authenticated form of HTTP over the TLS transport [79]. When HTTPS was introduced by Netscape twenty-five years ago [51], the primary use cases were protecting financial transactions and login credentials, but users today face a growing range of threats from hostile networks (including mass surveillance and censorship by governments [99, 106], consumer profiling and ad injection by ISPs [30, 95], and insertion of malicious code by network devices [68]) which make HTTPS important for prac- -

Daftar Pustaka.Pdf

Copyright and reuse: This license lets you remix, tweak, and build upon work non-commercially, as long as you credit the origin creator and license it on your new creations under the identical terms. Team project ©2017 Dony Pratidana S. Hum | Bima Agus Setyawan S. IIP. REFERENCES. Adhitama, G. 2009. Perbandingan Algoritma Huffman Dengan Algoritma Shannon-Fano. Institut Teknologi Bandung. Alakuijala, H., Kliuchnikov, E., Szabadka, Z., & Vandevenne, L. 2015. Comparison of Brotli, Deflate, Zopfli, LZMA, LZHAM and Bzip2 Compression Algorithm. Alakuijala, J., & Szabadka, Z. 2016. Internet Engineering Task Force (IETF). Available at: https://www.ietf.org/rfc/rfc7932.txt. [Accessed 11 November 2017] Anggriani, M. 2011. Perbandingan Metode Kompresi Huffman dan Dynamic Markov Compression (DMC). Bell, C. A. 2007. Expert MySQL. New York: Apress. Boedi, D., Rustamaji, H. C., & Nugraha, M. A. 2009. Aplikasi Kompresi SMS Berbasis JAVA ME Dengan Metode Kompresi LZW-Huffman. UPN "Veteran" Yogyakarta. In Seminar Nasional Informatika. Darmawan, D. 2014. Pengembangan E-Learning Teori dan Desain. Eka, N. 2016. Pengembangan E-learning Dengan Schoology Pada Materi Dinamika Benda Tegar. Universitas Lampung. Salomon, D. 2007. Data Compression The Complete Reference Fourth Edition, p. 2. Fransisca, C. 2014. Implementasi Algoritma Kompresi Deflate Pada Website Berbasis PHP dan Basis Data Mysql. Google. 2015. Github. Brotli. Available at: https://github.com/google/brotli. [Accessed 25 May 2017] Harahap, E. M., Rachmawati, D., & Herriyance. -

Colorado College Is Hiring!

Colorado College is hiring! Early Childhood Teacher (multiple positions available), Children’s Center: Under minimal supervision while in the classroom, provide nurturing early care and educational experience to benefit the children and families of the CC Community. Design and implement age-appropriate individualized curriculum to foster growth and development socially, emotionally, physically and cognitively for each child. Be flexible, adaptive, reliable, and a confidential resource for families in an assigned classroom (infant, toddler or preschool). Full position description: https://employment.coloradocollege.edu/postings/4697 OC (on call) Educational Assistant, Children’s Center: Hours may vary Monday through Friday between 7:30 and 5:30, up to 1000 hours per fiscal year. Under general supervision, assists with providing on-site early childhood education and supervision for infants, toddlers and preschool children when teachers are sick and/or on vacation, teacher daily breaks, teacher daily planning times, daily kitchen duties, and working in the afterschool care program. Full position description: https://employment.coloradocollege.edu/postings/4607 Counselor (Occasional): Provide professional mental health services to the students of Colorado College. Position Type: On Call. Full position description: https://employment.coloradocollege.edu/postings/4746 Driver/Automotive Technician: Performs maintenance and repair of college vehicles and maintenance equipment; drives fleet vehicles, including highway buses. Position Type: Full-time -

Combinatorial Optimization Problems in Internet Applications

Poznań University of Technology, Institute of Computing Science. Combinatorial optimization problems in Internet applications. Doctoral thesis. Jakub Marszałkowski. Supervisor: prof. dr hab. inż. Maciej Drozdowski. Poznań, 2017. Contents: 1 Introduction; 1.1 Motivation; 1.2 Scope and Purpose; 1.3 Methodology; 1.4 Common webpage-related factors; 1.5 Outline of the Thesis; 2 Layout Partitioning for Advertisements Fit; 2.1 Website’s Layouts and Ad Placement; 2.2 Problem Formulation; 2.3 Objective Functions; 2.3.1 Max Ad Number Function; 2.3.2 Max Most Difficult to Pack Ad Unit Function; 2.3.3 Min Single Ad Waste; 2.4 Solution Method; 2.4.1 Combining Ad Units; 2.4.2 Valid Column Widths List; 2.4.3 Browsing Layouts; 2.4.4 Selecting Final Results; 2.4.5 Example For a Small Instance; 2.5 Benchmarks; 2.5.1 Data Sets; 2.5.2 Webmaster Survey; 2.6 Computational Experiments; 2.6.1 Input Parameters; 2.6.2 Execution Times; 2.6.3 Layout Partitioning Results and Discussion; 2.7 Conclusions; 3 Tag Cloud Construction; 3.1 Tag Clouds; 3.2 Problem Analysis and Related Work Survey; 3.2.1 Tag cloud taxonomy; 3.2.2 Related work; 3.2.3 Tag Cloud Usability Studies; 3.2.4 Tag Clouds for the Web; 3.2.5 Client Side; 3.2.6 Analysis of Packing Problem Properties -

Product End User License Agreement

End User License Agreement If you have another valid, signed agreement with Licensor or a Licensor authorized reseller which applies to the specific products or services you are downloading, accessing, or otherwise receiving, that other agreement controls; otherwise, by using, downloading, installing, copying, or accessing Software, Maintenance, or Consulting Services, or by clicking on "I accept" on or adjacent to the screen where these Master Terms may be displayed, you hereby agree to be bound by and accept these Master Terms. These Master Terms also apply to any Maintenance or Consulting Services you later acquire from Licensor relating to the Software. You may place orders under these Master Terms by submitting separate Order Form(s). Capitalized terms used in the Agreement and not otherwise defined herein are defined at https://terms.tibco.com/posts/845635-definitions. 1. Applicability. These Master Terms represent one component of the Agreement for Licensor's products, services, and partner programs and apply to the commercial arrangements between Licensor and Customer (or Partner) listed below. Additional terms referenced below shall apply. a. Products: i. Subscription, Perpetual, or Term license Software ii. Cloud Service (Subject to the Cloud Service Terms found at https://terms.tibco.com/?types%5B%5D=post&feed=recent#cloud-services) iii. Equipment (Subject to the Equipment Terms found at https://terms.tibco.com/?types%5B%5D=post&feed=recent#equipment-terms) b. Services: i. Maintenance (Subject to the Maintenance terms found at https://terms.tibco.com/?types%5B%5D=post&feed=recent#october-maintenance) ii. Consulting Services (Subject to the Consulting terms found at https://terms.tibco.com/?types%5B%5D=post&feed=recent#supplemental-terms) iii. -

Learning HTTP 2.Pdf

Learning HTTP/2: A Practical Guide for Beginners. Stephen Ludin and Javier Garza. Beijing, Boston, Farnham, Sebastopol, Tokyo. Learning HTTP/2 by Stephen Ludin and Javier Garza. Copyright © 2017 Stephen Ludin, Javier Garza. All rights reserved. Printed in the United States of America. Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472. O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (http://oreilly.com/safari). For more information, contact our corporate/institutional sales department: 800-998-9938 or [email protected]. Acquisitions Editor: Brian Anderson; Indexer: Wendy Catalano; Editors: Virginia Wilson and Dawn Schanafelt; Interior Designer: David Futato; Production Editor: Shiny Kalapurakkel; Cover Designer: Karen Montgomery; Copyeditor: Kim Cofer; Illustrator: Rebecca Demarest; Proofreader: Sonia Saruba. June 2017: First Edition. Revision History for the First Edition: 2017-05-14: First Release; 2017-10-27: Second Release. See http://oreilly.com/catalog/errata.csp?isbn=9781491962442 for release details. The O’Reilly logo is a registered trademark of O’Reilly Media, Inc. Learning HTTP/2, the cover image, and related trade dress are trademarks of O’Reilly Media, Inc. While the publisher and the authors have used good faith efforts to ensure that the information and instructions contained in this work are accurate, the publisher and the authors disclaim all responsibility for errors or omissions, including without limitation responsibility for damages resulting from the use of or reliance on this work. -

2018-12 Internet/Email SIG Notes

12/6/2018 2018-12 Internet/Email SIG Notes - Google Docs. IT Guy Shares The Funniest Requests He Got That Made Him Laugh Out Loud: Sebastian from Germany shares his worst work stories, and they’re so ridiculous, they make grandmas look like hackers. From incompetent managers to clueless users, Sebastian has seen it all. After all, he’s been working in IT for 15 years. Now, he’s an IT administrator for multiple global companies. “If there is one thing that you can count on, then it’s the stupidity of users,” Sebastian told Bored Panda. “Unfortunately, [it’s evident] every day… not kidding!” Why is the Computer Club’s Website marked as “not secure”: Security has been one of Chrome’s core principles since the beginning; we’re constantly working to keep you safe as you browse the web. Nearly two years ago, we announced that Chrome would eventually mark all sites that are not encrypted with HTTPS as “not secure”. This makes it easier to know whether your personal information is safe as it travels across the web, whether you’re checking your bank account or buying concert tickets. Starting today (July 24, 2018), we’re rolling out these changes to all Chrome users. Chrome’s new password manager stops you from using the same password for every website: Google is releasing an entire new design for Chrome today with new features and tweaks to the browser’s overall appearance. -

Caddy and Traefik: Serious Competitors to nginx and HAProxy? Mickaël Masquelin | A Few Words About the Laboratory …

Caddy and Traefik: serious competitors to nginx and HAProxy? Mickaël Masquelin | A few words about the laboratory … • Joint Research Unit (Unité Mixte de Recherche) • 5 supervising institutions: • CNRS • Université de Lille • Université de Polytechnique HdF • École Centrale • The YNCREA - ISEN group • 6 geographically separate sites • About 500 people (researchers, engineers, administrative staff, students, …) Mickaël Masquelin, IEMN, 10/03/2018, thematic day “Retours d’expériences” (lessons from experience). Objectives. FEEDBACK ON THESE NEW WEB APPLICATIONS THAT MAKE LIFE EASIER: The idea is to share experience with these new web applications that are just starting to appear and that are shaking up the IT landscape somewhat … TRYING TO GIVE YOU A FEW LEADS: I hope you will leave this thematic day with plenty of ideas and a desire to change the way you work and the way you deploy your applications or services in your respective contexts … -

Introduction

HTTP Request Smuggling in 2020 – New Variants, New Defenses and New Challenges Amit Klein SafeBreach Labs Introduction HTTP Request Smuggling (AKA HTTP Desyncing) is an attack technique that exploits different interpretations of a stream of non-standard HTTP requests among various HTTP devices between the client (attacker) and the server (including the server itself). Specifically, the attacker manipulates the way various HTTP devices split the stream into individual HTTP requests. By doing this, the attacker can “smuggle” a malicious HTTP request through an HTTP device to the server abusing the discrepancy in the interpretation of the stream of requests and desyncing between the server’s view of the HTTP request (and response) stream and the intermediary HTTP device’s view of these streams. In this way, for example, the malicious HTTP request can be "smuggled" as a part of the previous HTTP request. HTTP Request Smuggling was invented in 2005, and recently, additional research cropped up. This research field is still not fully explored, especially when considering open source defense systems such as mod_security’s community rule-set (CRS). These HTTP Request Smuggling defenses are rudimentary and not always effective. My Contribution My contribution is three-fold. I explore new attacks and defense mechanisms, and I provide some “challenges”. 1. New attacks: I provide some new HTTP Request Smuggling variants and show how they work against various proxy-server (or proxy-proxy) combinations. I also found a bypass for mod_security CRS (assuming HTTP Request Smuggling is possible without it). An attack demonstration script implementing my payloads is available in SafeBreach Labs’ GitHub repository (https://github.com/SafeBreach-Labs/HRS). -

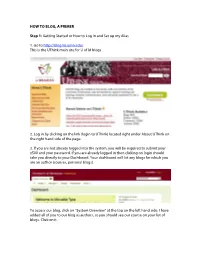

How to Blog, a Primer

HOW TO BLOG, A PRIMER Step 1: Getting Started or How to Log In and Set up my Alias 1. Go to http://blog.lib.umn.edu/ This is the UThink main site for U of M blogs. 2. Log in by clicking on the link (login to UThink) located right under About UThink on the right-hand side of the page. 3. If you are not already logged into the system, you will be required to submit your x500 and your password. If you are already logged in, then clicking on login should take you directly to your Dashboard. Your dashboard will list any blogs for which you are an author (courses, personal blogs). To access our blog, click on “System Overview” at the top on the left-hand side. I have added all of you to our blog as authors, so you should see our course on your list of blogs. Click on it. 4. Now you should be on the author page for our blog. This is where you can create entries, upload files, and edit entries. 5. For those of you who haven’t used UThink before: You can set up your own alias for posting. This means that when you post an entry or make a comment, only your alias will show (not your email address or your name). As the blog administrator, I will be the only person who knows that it is you posting. If you are a little nervous about posting, this is a good way to stay somewhat anonymous. To set up your alias, click on the link in the upper right-hand corner of the screen that says, Hi x500 number (in the image above, the link says Hi puot0002).