Logic Programming

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

CSC 242: Artificial Intelligence

CSC 242: Artificial Intelligence Course website: www.cs.rochester.edu/u/kyros/courses/csc242 Instructor: Kyros Kutulakos Office: 623 CSB Extension: x5-5860 Email: [email protected] Hours: TR 3:30-4:30 (or by appointment) TA: Joel Tetreault Email: [email protected] Hours: M 1:00-2:00 Recitations: TBA Textbooks: Russell & Norvig, Artificial Intelligence Wilensky, Common LISPcraft Another very good LISP textbook: Winston & Horn, LISP (Addison-Wesley) Common Lisp • LISP is one of the most common “AI programming languages” • LISP (=LISt Processing) is a language whose main power is in manipulating lists of symbols: (a b c (d e f (g h))) arithmetic operations are also included (sqrt (+ (* 3 3) (* 4 4))) • Lisp is a general-purpose, interpreter-based language • All computation consists of expression evaluations: lisp-prompt>(sqrt (+ (* 3 3) (* 4 4))) 5.0 • Since data are list structures and programs are list structures, we can manipulate programs just like data • Lisp is the second-oldest high-level programming language (after Fortran) Getting Started • Command-line invocation unix-prompt>cl system responds with loading & initialization messages followed by a Lisp prompt USER(1): whenever you have balanced parentheses & hit return, the value of the expression (or an error message) is returned USER(1): (+ 1 2) 3 saying “hello” USER(2): 'hello HELLO USER(3): "Hello" "Hello" • Exiting Lisp: USER(2):(exit) unix-prompt> Getting Started (cont.) • Reading a file f that is in the same directory from which you are running Lisp: -

Redis in Action

IN ACTION Josiah L. Carlson FOREWORD BY Salvatore Sanfilippo MANNING Redis in Action Redis in Action JOSIAH L. CARLSON MANNING Shelter Island For online information and ordering of this and other Manning books, please visit www.manning.com. The publisher offers discounts on this book when ordered in quantity. For more information, please contact Special Sales Department Manning Publications Co. 20 Baldwin Road PO Box 261 Shelter Island, NY 11964 Email: [email protected] ©2013 by Manning Publications Co. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by means electronic, mechanical, photocopying, or otherwise, without prior written permission of the publisher. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in the book, and Manning Publications was aware of a trademark claim, the designations have been printed in initial caps or all caps. Recognizing the importance of preserving what has been written, it is Manning’s policy to have the books we publish printed on acid-free paper, and we exert our best efforts to that end. Recognizing also our responsibility to conserve the resources of our planet, Manning books are printed on paper that is at least 15 percent recycled and processed without the use of elemental chlorine. Manning Publications Co. Development editor: Elizabeth Lexleigh 20 Baldwin Road Technical proofreaders: James Philips, Kevin Chang, PO Box 261 and Nicholas Lindgren Shelter Island, NY 11964 Java translator: Eric Van Dewoestine Copyeditor: Benjamin Berg Proofreader: Katie Tennant Typesetter: Gordan Salinovic Cover designer: Marija Tudor ISBN 9781935182054 Printed in the United States of America 1 2 3 4 5 6 7 8 9 10 – MAL – 18 17 16 15 14 13 To my dear wife, See Luan, and to our baby girl, Mikela brief contents PART 1 GETTING STARTED . -

Lists in Lisp and Scheme

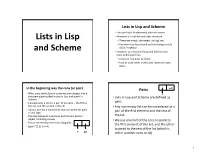

Lists in Lisp and Scheme • Lists are Lisp’s fundamental data structures • However, it is not the only data structure Lists in Lisp – There are arrays, characters, strings, etc. – Common Lisp has moved on from being merely a LISt Processor and Scheme • However, to understand Lisp and Scheme you must understand lists – common functions on them – how to build other useful data structures with them In the beginning was the cons (or pair) Pairs nil • What cons really does is combine two objects into a two-part object called a cons in Lisp and a pair in • Lists in Lisp and Scheme are defined as Scheme • Conceptually, a cons is a pair of pointers -- the first is pairs the car, and the second is the cdr • Any non empty list can be considered as a • Conses provide a convenient representation for pairs pair of the first element and the rest of of any type • The two halves of a cons can point to any kind of the list object, including conses • We use one half of the cons to point to • This is the mechanism for building lists the first element of the list, and the other • (pair? '(1 2) ) => #t to point to the rest of the list (which is a nil either another cons or nil) 1 Box notation Z What sort of list is this? null Where null = '( ) null a a d A one element list (a) null null b c a b c > (set! z (list 'a (list 'b 'c) 'd)) (car (cdr z)) A list of 3 elements (a b c) (A (B C) D) ?? Pair? Equality • Each time you call cons, Scheme allocates a new memory with room for two pointers • The function pair? returns true if its • If we call cons twice with the -
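The cons-cell picture in the excerpt above can be sketched outside Lisp as well. The following is a minimal Python model, not Scheme itself: a 2-tuple stands in for a cons cell and None stands in for nil / '( ). The helper names cons, car, cdr and make_list are illustrative assumptions, chosen to mirror the Lisp names.

```python
# A cons cell is modelled as a 2-tuple (car, cdr); None plays the role of nil.
NIL = None

def cons(a, d):
    """Combine two objects into one two-part object."""
    return (a, d)

def car(pair):
    """First half of a cons cell."""
    return pair[0]

def cdr(pair):
    """Second half of a cons cell."""
    return pair[1]

def make_list(*items):
    """Build a proper list: a chain of cons cells whose last cdr is NIL."""
    result = NIL
    for item in reversed(items):
        result = cons(item, result)
    return result

# Counterpart of (list 'a (list 'b 'c) 'd) from the excerpt.
z = make_list("a", make_list("b", "c"), "d")

# Counterpart of (car (cdr z)): the second element, itself a list.
second = car(cdr(z))
```

In this model, second is the two-element chain holding "b" and "c", which corresponds to the sublist (B C) in the excerpt's example.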

Lisp Class Level 2 Day 2

Allegro CL Certification Program Lisp Programming Series Level 2 1 Goals for Level 2 • Build on Level 1 – Assumes you can write functions, use the debugger, understand lists and list processing • Detailed Exposure To – Functions – Macros – Object-oriented programming 2 Format of the Course • One 2-hour presentation each week – Lecture – Question and answer – Sample code • Lecture notes available online (http://www.franz.com/lab/) • Homework • One-on-one help via email 3 Session 1 (today) • Advanced features of Lisp functions • Structures, Hash Tables, Bits and Bytes • Macros • Closures 4 Session 2 Common Lisp Object System (CLOS) • Top Ten things to do in CLOS • Classes, instances, and inheritance • Methods • Class precedence list • Programming style 5 Session 3 • Performance considerations with CLOS • The garbage collector • Error conditions • Using the IDE to make Windows™ windows 6 Homework • Lisp is best learnt by hands-on experience • Many exercises for each session • Will dedicate class time to reviewing exercises • Email instructor for one-on-one assistance doing the homework or any other questions relevant to the class – [email protected] 7 Getting Allegro Common Lisp • This class is based on version 6.2. • Trial version should be sufficient for this module – Download free from http://www.franz.com/ – Works for 60 days • I will be using ACL on Windows • You can use ACL on UNIX, but the development environment is different, and I won't be using it. 8 Allegro CL Certification Program Lisp Programming Series Level 2 Session 2.1.1 -

Impatient Perl

Impatient Perl version: 9 September 2010 Copyright 2004-2010 Greg London Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.3 or any later version published by the Free Software Foundation; with no Invariant Sections, no Front-Cover Texts, and no Back-Cover Texts. A copy of the license is included in the section entitled "GNU Free Documentation License". Cover Art (Front and Back) on the paperback version of Impatient Perl is excluded from this license. Cover Art is Copyright Greg London 2004, All Rights Reserved. For latest version of this work go to: http://www.greglondon.com 1 Table of Contents 1 The Impatient Introduction to Perl..........................................................................................................7 1.1 The history of perl in 100 words or less..........................................................................................7 1.2 Basic Formatting for this Document...............................................................................................7 1.3 Do You Have Perl Installed.............................................................................................................8 1.4 Your First Perl Script, EVER..........................................................................................................9 1.5 Default Script Header......................................................................................................................9 1.6 Free Reference Material................................................................................................................10 -

Programming Languages Scheme Part 4 and Other Functional 2020

Programming Languages Scheme part 4 and other functional 2020 1 Instructor: Odelia Schwartz Lots of equalities! Summary: § eq? for symbolic atoms, not numeric (eq? ‘a ‘b) § = for numeric, not symbolic (= 5 7) § eqv? for numeric and symbolic 2 equal versus equalsimp (define (equalsimp lis1 lis2) (define (equal lis1 lis2) (cond (cond ((null? lis1) (null? lis2)) ((not (list? lis1)) (eq? lis1 ((null? lis2) #f) lis2)) ((eq? (car lis1) (car lis2)) ((not (list? lis2)) #f) (equalsimp (cdr lis1) ((null? lis1) (null? lis2)) (cdr lis2))) ((null? lis2) #f) (else #f) ((equal (car lis1) (car ) lis2)) ) (equal (cdr lis1) (cdr lis2))) (else #f) ) ) 3 append (define (append lis1 lis2) (cond ((null? lis1) lis2) (else (cons (car lis1) (append (cdr lis1) lis2))) )) § Reminding ourselves of cons (run it on csi): (cons ‘(a b) ‘(c d)) returns ((a b) c d) (cons ‘((a b) c) ‘(d (e f))) returns (((a b) c) d (e f)) 4 Adding a list of numbers § This works: (+ 3 7 10 2) § This doesn’t work: (+ (3 7 10 2)) § Why? 5 Adding a list of numbers § This works: (+ 3 7 10 2) § This doesn’t work: (+ (3 7 10 2)) How would we achieve the second option? 6 Adding a list of numbers § This works: (+ 3 7 10 2) § This doesn’t work: (+ (3 7 10 2)) How would we achieve the second option? Breakout groups 7 Adding a list of numbers § We want: (+ (3 7 10 2)) (define (adder a_list) (cond ((null? a_list) 0) (else (eval(cons '+ a_list))) ) ) 8 Adding a list of numbers § We want: (+ (3 7 10 2)) (define (adder a_list) (cond ((null? a_list) 0) (else (eval(cons '+ a_list))) ) ) We’ll do a little -
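The slides above sum a list by building the expression (+ 3 7 10 2) with cons and passing it to eval. As a point of comparison, here is a small Python sketch of two other ways to get the same result: a fold over the list and plain recursion on the head and tail. This is a swapped-in technique, not the eval-based approach from the slides, and the name adder_recursive is an assumption made for illustration.

```python
from functools import reduce
import operator

def adder(a_list):
    """Sum a list by folding + over it; an empty list sums to 0."""
    return reduce(operator.add, a_list, 0)

def adder_recursive(a_list):
    """Same result by recursion: head plus the sum of the tail."""
    if not a_list:
        return 0
    return a_list[0] + adder_recursive(a_list[1:])

total = adder([3, 7, 10, 2])
```

Both versions treat the list as data throughout, so nothing is evaluated as code; that is the main design difference from consing '+ onto the list and calling eval.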

COMMON LISP: a Gentle Introduction to Symbolic Computation

COMMON LISP: A Gentle Introduction to Symbolic Computation COMMON LISP: A Gentle Introduction to Symbolic Computation David S. Touretzky Carnegie Mellon University The Benjamin/Cummings Publishing Company, Inc. Redwood City, California • Fort Collins, Colorado • Menlo Park, California Reading, Massachusetts • New York • Don Mill, Ontario • Workingham, U.K. Amsterdam • Bonn • Sydney • Singapore • Tokyo • Madrid • San Juan Sponsoring Editor: Alan Apt Developmental Editor: Mark McCormick Production Coordinator: John Walker Copy Editor: Steven Sorenson Text and Cover Designer: Michael Rogondino Cover image selected by David S. Touretzky Cover: La Grande Vitesse, sculpture by Alexander Calder Copyright (c) 1990 by Symbolic Technology, Ltd. Published by The Benjamin/Cummings Publishing Company, Inc. This document may be redistributed in hardcopy form only, and only for educational purposes at no charge to the recipient. Redistribution in electronic form, such as on a web page or CD-ROM disk, is prohibited. All other rights are reserved. Any other use of this material is prohibited without the written permission of the copyright holder. The programs presented in this book have been included for their instructional value. They have been tested with care but are not guaranteed for any particular purpose. The publisher does not offer any warranties or representations, nor does it accept any liabilities with respect to the programs. Library of Congress Cataloging-in-Publication Data Touretzky, David S. Common LISP : a gentle introduction to symbolic computation / David S. Touretzky p. cm. Includes index. ISBN 0-8053-0492-4 1. COMMON LISP (Computer program language) I. Title. QA76.73.C28T68 1989 005.13'3--dc20 89-15180 CIP ISBN 0-8053-0492-4 ABCDEFGHIJK - DO - 8932109 The Benjamin/Cummings Publishing Company, Inc. -

CS 314 Principles of Programming Languages Lecture 16: Functional Programming

CS 314 Principles of Programming Languages Lecture 16: Functional Programming Zheng (Eddy) Zhang Rutgers University April 2, 2018 Review: Computation Paradigms Functional: Composition of operations on data. • No named memory locations • Value binding through parameter passing • Key operations: Function application and Function abstraction • Basis in lambda calculus 2 Review: Pure Functional Languages Fundamental concept: application of (mathematical) functions to values 1. Referential transparency: the value of a function application is independent of the context in which it occurs • value of foo(a, b, c) depends only on the values of foo, a, b and c • it does not depend on the global state of the computation ⇒ all vars in function must be local (or parameters) 2. The concept of assignment is NOT part of functional programming • no explicit assignment statements • variables bound to values only through the association of actual parameters to formal parameters in function calls • thus no need to consider global states 3 Review: Pure Functional Languages 3. Control flow is governed by function calls and conditional expressions ⇒ no loop ⇒ recursion is widely used 4. All storage management is implicit • needs garbage collection 5. Functions are First Class Values • can be returned from a subroutine • can be passed as a parameter • can be bound to a variable 4 Review: Pure Functional Languages A program includes: 1. A set of function definitions 2. An expression to be evaluated E.g. in scheme, > (define length (lambda (x) (if (null? x) 0 (+ 1 (length (rest x)))))) > (length '(A LIST OF 7 THINGS)) 5 5 LISP • Functional language developed by John McCarthy in the late 1950s • Semantics based on Lambda Calculus • All functions operate on lists or symbols called: “S-expression” • Only five basic functions: list functions cons, car, cdr, equal, atom, & one conditional construct: cond • Useful for list-processing (LISP) applications • Program and data have the same syntactic form “S-expression” • Originally used in Artificial Intelligence 6 SCHEME • Developed in 1975 by Gerald J. -
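The recursive length definition in the excerpt above uses no assignment and no loop: control flow is a conditional plus a function call. A rough Python counterpart, offered as a sketch rather than a translation of the lecture's code, looks like this. A Python list stands in for the Scheme list and slicing with xs[1:] stands in for taking the rest of the list.

```python
def length(xs):
    """Count elements by recursion: 0 for the empty list, else 1 + length of the rest."""
    if not xs:                   # counterpart of (null? x)
        return 0
    return 1 + length(xs[1:])    # counterpart of (+ 1 (length (rest x)))

n = length(["A", "LIST", "OF", 7, "THINGS"])
```

The result depends only on the argument, which is the referential transparency property listed in the review slides.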

Python Tutorial Release 3.7.0

Python Tutorial Release 3.7.0 Guido van Rossum and the Python development team September 02, 2018 Python Software Foundation Email: [email protected] CONTENTS 1 Whetting Your Appetite 3 2 Using the Python Interpreter 5 2.1 Invoking the Interpreter ....................................... 5 2.2 The Interpreter and Its Environment ............................... 6 3 An Informal Introduction to Python 9 3.1 Using Python as a Calculator .................................... 9 3.2 First Steps Towards Programming ................................. 16 4 More Control Flow Tools 19 4.1 if Statements ............................................ 19 4.2 for Statements ............................................ 19 4.3 The range() Function ....................................... 20 4.4 break and continue Statements, and else Clauses on Loops .................. 21 4.5 pass Statements ........................................... 22 4.6 Defining Functions .......................................... 22 4.7 More on Defining Functions ..................................... 24 4.8 Intermezzo: Coding Style ...................................... 29 5 Data Structures 31 5.1 More on Lists ............................................ 31 5.2 The del statement .......................................... 35 5.3 Tuples and Sequences ........................................ 36 5.4 Sets .................................................. 37 5.5 Dictionaries .............................................. 38 5.6 Looping Techniques ......................................... 39 5.7 -

Lists in Lisp and Scheme

Lists in Lisp and Scheme Lisp Lists • Lists are Lisp’s fundamental data structures, • Lists in Lisp and its descendants are very but there are others Lists in Lisp simple linked lists – Arrays, characters, strings, etc. – Represented as a linear chain of nodes – Common Lisp has moved on from being • Each node has a (pointer to) a value (car of merely a LISt Processor and Scheme list) and a pointer to the next node (cdr of list) • However, to understand Lisp and Scheme – Last node’s cdr pointer is to null you must understand lists • Lists are immutable in Scheme – common functions on them • Typical access pattern is to traverse the list a – how to build other useful data structures from its head processing each node with them In the beginning was the cons (or pair) Pairs Box and pointer notation • What cons really does is combine two objects into a Common notation: two-part object called a cons in Lisp and a pair in use diagonal line in • Lists in Lisp and Scheme are defined as (a) cdr part of a cons Scheme cell for a pointer to • Conceptually, a cons is a pair of pointers -- the first is pairs null a a null the car, and the second is the cdr • Any non empty list can be considered as a • Conses provide a convenient representation for pairs A one element list (a) of any type pair of the first element and the rest of • the list The two halves of a cons can point to any kind of (a b c) object, including conses • We use one half of a cons cell to point to • This is the mechanism for building lists the first element of the list, and the -

A Short Reference Manual for LISP CMSC 420 Hanan Samet

Fall 2008 A Short Reference Manual for LISP CMSC 420 Hanan Samet This guide details a few important functions of FRANZ LISP and COMMON LISP. Note that all predefined (i.e., built-in) function and symbol names in FRANZ LISP are in lower case. That includes nil and t. In COMMON LISP, however, they are defined in upper case. The expression reader converts all symbols to upper case, so that you may use either upper or lower case in your programs. Intrinsic Functions These are the functions which come predefined, and are available at sign-on to LISP. This is not a complete list. Functions that are specific to FRANZ LISP are marked with [F], whereas functions that are specific to COMMON LISP are marked with [C]. 1 S-expression manipulation (car arg) Returns the first element in list arg, or the left pointer of an s-expression (cons node). (cdr arg) Return the rest of list arg, or the right pointer of an s-expression (cons node). (cons a1 a2) Allocate and return a cons node that points to a1 and a2. (list a1 a2 ...an ) Return a list of all the arguments. (append a1 a2) Return the merge of the two lists a1 and a2. (reverse arg) Reverses the list arg. (subst new old sexp) Return a copy of sexp with all old’s converted to new’s. 2 S-expression predicates (null arg) Return t if arg is nil; nil otherwise. (atom arg) Return nil if arg is a cons node; t otherwise. (eq a1 a2) Return t if a1 and a2 are the same pointer; nil otherwise. -
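To make the behaviour of a few of these functions concrete, here is a hedged Python sketch that approximates append, reverse, subst, null and atom. It assumes nested Python lists in place of cons structures and the empty list in place of nil, so it only approximates the Lisp semantics (for example, it cannot represent dotted pairs, and it compares by value rather than by pointer).

```python
def append(a1, a2):
    """Return a new list: the elements of a1 followed by those of a2."""
    return a1 + a2

def reverse(arg):
    """Return a new list with the top-level elements of arg in reverse order."""
    return arg[::-1]

def subst(new, old, sexp):
    """Return a copy of sexp with every occurrence of old replaced by new."""
    if sexp == old:
        return new
    if isinstance(sexp, list):
        return [subst(new, old, item) for item in sexp]
    return sexp

def null(arg):
    """True only for the empty list, which stands in for nil."""
    return arg == []

def atom(arg):
    """False for a non-empty list (a cons node in Lisp); True otherwise."""
    return not isinstance(arg, list) or arg == []

replaced = subst("x", "b", ["a", ["b", "c"], "b"])
```

Note that atom returns True for the empty list, matching the manual's rule that only cons nodes are non-atoms.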

CH 12: Lists and Recursive Search

12 Lists and Recursive Search Chapter Objectives Lisp processing of arbitrary symbol structures Building blocks for data structures Designing accessors The symbol list as building block car cdr cons Recursion as basis of list processing cdr recursion car-cdr recursion The tree as representative of the structure of a list Chapter Contents 12.1 Functions, Lists, and Symbolic Computing 12.2 Lists as Recursive Structures 12.3 Nested Lists, Structure, and car/cdr Recursion 12.1 Functions, Lists, and Symbolic Computing Symbolic Computing Although Chapter 11 introduced Lisp syntax and demonstrated a few useful Lisp functions, it did so in the context of simple arithmetic examples. The real power of Lisp is in symbolic computing and is based on the use of lists to construct arbitrarily complex data structures of symbolic and numeric atoms, along with the forms needed for manipulating them. We illustrate the ease with which Lisp handles symbolic data structures, as well as the naturalness of data abstraction techniques in Lisp, with a simple database example. Our database application requires the manipulation of employee records containing name, salary, and employee number fields. These records are represented as lists, with the name, salary, and number fields as the first, second, and third elements of a list. Using nth, it is possible to define access functions for the various fields of a data record. For example: (defun name-field (record) (nth 0 record)) will have the behavior: > (name-field '((Ada Lovelace) 45000.00 38519)) (Ada Lovelace) Similarly, the functions salary-field and number-field may be defined to access the appropriate fields of a data record.
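The accessor idea from the excerpt carries over directly to other languages. Below is a minimal Python sketch, assuming a record is a list whose first, second and third elements are the name, salary and employee number. The function names follow the excerpt's name-field, salary-field and number-field; the bodies of the last two are an assumption, since the excerpt only says they may be defined similarly.

```python
def name_field(record):
    """Name is the first element, like (nth 0 record)."""
    return record[0]

def salary_field(record):
    """Salary is the second element, like (nth 1 record)."""
    return record[1]

def number_field(record):
    """Employee number is the third element, like (nth 2 record)."""
    return record[2]

# Counterpart of '((Ada Lovelace) 45000.00 38519)
record = [["Ada", "Lovelace"], 45000.00, 38519]
name = name_field(record)
```

Code that goes through these accessors does not depend on the position of each field, so the record layout can change later without touching the callers.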