Time-Causal Decomposition of Geomagnetic Time Series Into Secular Variation, Solar Quiet, and Disturbance Signals


Recommended publications

-

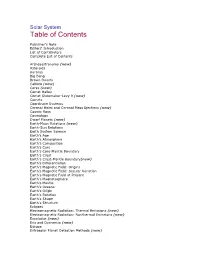

Table of Contents

Solar System Table of Contents Publisher's Note Editors' Introduction List of Contributors Complete List of Contents Archaeoastronomy (new) Asteroids Auroras Big Bang Brown Dwarfs Callisto (new) Ceres (new) Comet Halley Comet Shoemaker-Levy 9 (new) Comets Coordinate Systems Coronal Holes and Coronal Mass Ejections (new) Cosmic Rays Cosmology Dwarf Planets (new) Earth-Moon Relations (new) Earth-Sun Relations Earth System Science Earth's Age Earth's Atmosphere Earth's Composition Earth's Core Earth's Core-Mantle Boundary Earth's Crust Earth's Crust-Mantle Boundary (new) Earth's Differentiation Earth's Magnetic Field: Origins Earth's Magnetic Field: Secular Variation Earth's Magnetic Field at Present Earth's Magnetosphere Earth's Mantle Earth's Oceans Earth's Origin Earth's Rotation Earth's Shape Earth's Structure Eclipses Electromagnetic Radiation: Thermal Emissions (new) Electromagnetic Radiation: Nonthermal Emissions (new) Enceladus (new) Eris and Dysnomia (new) Europa Extrasolar Planet Detection Methods (new) Extrasolar Planetary Systems Extraterrestrial Life in the Solar System Gamma-Ray Bursters Ganymede (new) General Relativity Gravity Measurement Greenhouse Effect Habitable Zones (new) Hertzsprung-Russell Diagram Iapetus (new) Impact Cratering Infrared Astronomy Interstellar Clouds and the Interstellar Medium Interplanetary Environment (new) Io Jovian Planets Jupiter's Atmosphere Jupiter's Great Red Spot Jupiter's Interior (new) Jupiter's Magnetic Field and Radiation Belts Jupiter's Ring System (new) Jupiter's Satellites Kuiper Belt

Four Centuries of Geomagnetic Secular Variation from Historical Records

Four centuries of geomagnetic secular variation from historical records. By Andrew Jackson 1, Art R. T. Jonkers 2 and Matthew R. Walker 1. 1 School of Earth Sciences, Leeds University, Leeds LS2 9JT, UK; 2 Department of History, Vrije Universiteit, Amsterdam, The Netherlands. We present a new model of the magnetic field at the core–mantle boundary for the interval 1590–1990. The model, called gufm1, is based on a massive new compilation of historical observations of the magnetic field. The greater part of the new dataset originates from unpublished observations taken by mariners engaged in merchant and naval shipping. Considerable attention is given to both correction of data for possible mislocation (originating from poor knowledge of longitude) and to proper allocation of error in the data. We adopt a stochastic model for uncorrected positional errors that properly accounts for the nature of the noise process based on a Brownian motion model. The variability of navigational errors as a function of the duration of the voyages that we have analysed is consistent with this model. For the period before 1800, more than 83 000 individual observations of magnetic declination were recorded at more than 64 000 locations; more than 8000 new observations are for the 17th century alone. The time-dependent field model that we construct from the dataset is parametrized spatially in terms of spherical harmonics and temporally in B-splines, using a total of 36 512 parameters. The model has improved the resolution of the core field, and represents the longest continuous model of the field available. However, full exploitation of the database may demand a new modelling methodology. Keywords: Earth's core; geomagnetic secular variation; magnetic field; maritime history. 1. Introduction. The Earth has possessed a magnetic field for more than 4 billion years, generated in the fluid core.

Magnetic Power Spectrum, with Application to Planetary Dynamo Radii

Earth and Planetary Science Letters 401 (2014) 347–358. A new model for the (geo)magnetic power spectrum, with application to planetary dynamo radii. Benoit Langlais a,∗, Hagay Amit a, Hugo Larnier a,b, Erwan Thébault c, Antoine Mocquet a. a Laboratoire de Planétologie et Géodynamique, CNRS UMR 6112, Université de Nantes, 44322 Nantes cedex 3, France; b Institut de Physique du Globe de Strasbourg, UdS–CNRS UMR7516, EOST – Université de Strasbourg, 5 rue René Descartes, 67084 Strasbourg Cedex, France; c Équipe de Géomagnétisme, Institut de Physique du Globe de Paris, CNRS UMR 7154, 75252 Paris cedex 5, France. Article history: Received 6 November 2013; received in revised form 23 April 2014; accepted 10 May 2014; available online 9 July 2014. Editor: C. Sotin. Keywords: magnetic power spectrum. Abstract: We propose two new analytical expressions to fit the Mauersberger–Lowes geomagnetic field spectrum at the core–mantle boundary. These can be used to estimate the radius of the outer liquid core where the geodynamo operates, or more generally the radius of the planetary dynamo regions. We show that two sub-families of the geomagnetic field are independent of spherical harmonics degree n at the core–mantle boundary and exhibit flat spectra. The first is the non-zonal field, i.e., for spherical harmonics order m different from zero. The second is the quadrupole family, i.e., n + m even. The flatness of their spectra is motivated by the nearly axisymmetric time-average paleomagnetic field (for the non-zonal field) and the dominance of rotational effects in core dynamics (for the quadrupole family).
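
The Mauersberger–Lowes spectrum named in this abstract has a standard textbook form, $R_n(r) = (n+1)\,(a/r)^{2n+4} \sum_{m=0}^{n} \left[(g_n^m)^2 + (h_n^m)^2\right]$, where $a$ is the reference radius and $g_n^m$, $h_n^m$ are the Gauss coefficients. The Python sketch below evaluates it at the surface and at the core–mantle boundary. It is only an illustration: the coefficient values are made-up numbers of a plausible magnitude (not taken from the paper or from any specific field model), the two radii are assumed round values, and the name `lowes_spectrum` is hypothetical.

```python
# Sketch of the Mauersberger-Lowes spectrum R_n(r).
# All numerical values below are illustrative assumptions.
A_KM = 6371.2  # geomagnetic reference radius in km (assumed)
C_KM = 3480.0  # approximate core-mantle boundary radius in km (assumed)

# Hypothetical Gauss coefficients in nT: (degree n, order m) -> (g, h).
GAUSS = {
    (1, 0): (-29400.0, 0.0),
    (1, 1): (-1450.0, 4650.0),
    (2, 0): (-2500.0, 0.0),
    (2, 1): (2980.0, -2990.0),
    (2, 2): (1680.0, -730.0),
}


def lowes_spectrum(gauss, radius_km, ref_radius_km=A_KM):
    """Return {n: R_n} in nT^2 at the given radius."""
    ratio = ref_radius_km / radius_km
    spectrum = {}
    for (n, _m), (g, h) in gauss.items():
        # Each (n, m) pair contributes (n + 1) * (a/r)^(2n + 4) * (g^2 + h^2).
        term = (n + 1) * ratio ** (2 * n + 4) * (g * g + h * h)
        spectrum[n] = spectrum.get(n, 0.0) + term
    return spectrum


surface = lowes_spectrum(GAUSS, A_KM)
cmb = lowes_spectrum(GAUSS, C_KM)
for n in sorted(surface):
    print(f"n={n}: surface {surface[n]:.3e} nT^2, CMB {cmb[n]:.3e} nT^2")
```

Because the factor $(a/r)^{2n+4}$ grows with degree when $r < a$, downward continuation amplifies higher degrees more strongly, which is why the shape of the spectrum at the core–mantle boundary is sensitive to the assumed source radius.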

Losing the Geomagnetic Shield: A Critical Issue for Space Settlement

Losing the Geomagnetic Shield: A Critical Issue for Space Settlement. Philip K. Chapman, February 3, 2017. Abstract. The geomagnetic field seems to be collapsing. This has happened many times in the deep past, but never since civilization began. One implication is that the cost of space settlement will increase substantially if we do not expedite deployment of initial facilities in low Earth orbit. Another implication, less certain but much more damaging, is that the collapse may lead to catastrophic global cooling before the end of this century. We must establish self-sufficient communities off Earth before that happens. 1. The ELEO Shelter. As Al Globus et al. have pointed out, the annual radiation dose in equatorial low Earth orbit (ELEO, below 600 km altitude) is less than the limit for occupational exposure that is recommended by the International Commission on Radiological Protection. The first extraterrestrial habitats and assembly plants will need little if any radiation shielding if they are located in such an orbit, reducing the mass that must be launched from Earth by orders of magnitude. These fortunate conditions create a haven in ELEO where we can develop the infrastructure for space settlement at relatively low cost. This is a major opportunity to shrink the technical and economic barriers to growth of a true interplanetary society. The most important functions of early facilities in ELEO include: 1. Assembling and launching missions to obtain radiation shielding materials at low cost (probably water or slag from a near-Earth asteroid). 2. Developing experience concerning the long-term physiological requirements for living and working in space (with or without frequent transfers to and from free fall), in order to specify acceptable rotation rates and centrifugal pseudo-gravity levels.

Mercury's Resonant Rotation from Secular Orbital Elements

Mercury's resonant rotation from secular orbital elements. Alexander Stark a, Jürgen Oberst a,b, Hauke Hussmann a. a German Aerospace Center, Institute of Planetary Research, D-12489 Berlin, Germany; b Moscow State University for Geodesy and Cartography, RU-105064 Moscow, Russia. The final publication is available at Springer via http://dx.doi.org/10.1007/s10569-015-9633-4. Abstract. We used recently produced Solar System ephemerides, which incorporate two years of ranging observations to the MESSENGER spacecraft, to extract the secular orbital elements for Mercury and associated uncertainties. As Mercury is in a stable 3:2 spin-orbit resonance these values constitute an important reference for the planet's measured rotational parameters, which in turn strongly bear on physical interpretation of Mercury's interior structure. In particular, we derive a mean orbital period of (87.96934962 ± 0.00000037) days and (assuming a perfect resonance) a spin rate of (6.138506839 ± 0.000000028) °/day. The difference between this rotation rate and the currently adopted rotation rate (Archinal et al., 2011) corresponds to a longitudinal displacement of approx. 67 m per year at the equator. Moreover, we present a basic approach for the calculation of the orientation of the instantaneous Laplace and Cassini planes of Mercury. The analysis allows us to assess the uncertainties in physical parameters of the planet, when derived from observations of Mercury's rotation. 1 Introduction. Mercury's orbit is not inertially stable but exposed to various perturbations which over long time scales lead to a chaotic motion (Laskar, 1989).
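
The quoted displacement of roughly 67 m per year can be checked with simple arithmetic: a difference in rotation rate, accumulated over one year and converted to radians, multiplied by the equatorial radius. The Python sketch below does this under two assumptions that do not appear in the excerpt: an adopted rotation rate of 6.1385025 °/day standing in for the Archinal et al. (2011) value, and an equatorial radius of 2439.7 km. The function name is hypothetical.

```python
import math

SPIN_RATE_NEW = 6.138506839     # deg/day, as quoted in the abstract
SPIN_RATE_ADOPTED = 6.1385025   # deg/day, ASSUMED previously adopted value
MERCURY_RADIUS_M = 2_439_700.0  # equatorial radius in metres, ASSUMED
DAYS_PER_YEAR = 365.25          # Julian year


def equatorial_drift_m_per_year(rate_a, rate_b, radius_m):
    """Arc length at the equator accumulated in one year by a rate difference."""
    delta_deg_per_year = (rate_a - rate_b) * DAYS_PER_YEAR
    return math.radians(delta_deg_per_year) * radius_m


drift = equatorial_drift_m_per_year(
    SPIN_RATE_NEW, SPIN_RATE_ADOPTED, MERCURY_RADIUS_M
)
print(f"Equatorial displacement: {drift:.1f} m per year")
```

With these assumed inputs the result lands in the high 60s of metres per year, in line with the figure quoted in the abstract; a different adopted rate or radius would shift it accordingly.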

Solar Activity, Earth's Rotation Rate and Climate Variations in the Secular And

Physics and Chemistry of the Earth 31 (2006) 99–108, www.elsevier.com/locate/pce. Solar activity, Earth's rotation rate and climate variations in the secular and semi-secular time scales. S. Duhau *, Facultad de Ciencias Exactas, Departamento de Física, Universidad de Buenos Aires, Argentina. Received 17 January 2005; accepted 18 March 2005; available online 29 March 2006. Abstract. By applying the wavelet formalism to sudden storm commencements and aa geomagnetic indices and solar total irradiation, as a proxy data for solar sources of climate-forcing, we have searched the signatures of those variables on the Northern Hemisphere surface temperature. We have found that cyclical behaviour in surface temperature is not clearly related to none of these variables, so we have suggested that besides them surface temperature might be related to Earth's rotation rate variations. Also it has been suggested that in the long-term Earth's rotation rate variations might be excited by geomagnetic storm time variations which, in turn, depends on solar activity. The study of these phenomena and its relationships is addressed in the present paper. With this purpose we perform an analysis of the evolution during the last 350 years of the signals that conform the 11-year sunspot cycle maxima envelope for different time scales. The result is applied to analyze the relationship between long-term variations in sunspot number and excess of length of day; and to unearth the signature in surface temperature of this last from that of sudden storm commencement and aa geomagnetic indices and total solar irradiation. As solar dynamo experiments transient processes in all the time scales from seconds to centuries, Fourier analysis and its requirement for the process to be stationary is likely to produce spurious periodicities.

Analysis of the Secular Variations of Longitudes of the Sun, Mercury and Venus from Optical Observations

Highlights of Astronomy, Vol. 12, International Astronomical Union, 2002, H. Rickman, ed. Analysis of the Secular Variations of Longitudes of the Sun, Mercury and Venus from Optical Observations. Y.B. Kolesnik, Institute of Astronomy of the Russian Academy of Sciences, Piatnitskaya str. 48, 109017 Moscow, Russia. Abstract. About 240 000 optical observations of the Sun, Mercury and Venus, accumulated during the era of classical astrometry from Bradley up to our days, are incorporated to analyse the secular variation of the longitudes of innermost planets. A significant discrepancy between modern ephemerides and optical observations is discovered. The possible sources of discrepancy are discussed. The tidal acceleration of the Moon has been revised to conform the lunar theory with the ephemerides of the planets. The offset and residual rotation of Hipparcos-based system with respect to the dynamical equinox is determined. Interpretation of this rotation is given. 1. Observations and method of analysis. A mass of 244960 observations of the Sun, Mercury and Venus accumulated during historical period of astronomy from 1750 to 2000 have been incorporated. In the transformation procedure a set of corrections were formed by direct comparison of standard star catalogue with ICRS-based catalogue rotated from J2000 to the respective epoch by use of modern precession constant and Hipparcos based proper motions. The systematic differences are interpolated onto observed positions of planets and applied. Other corrections account for differences in modern and historical astronomical constants. The N70E catalogue (Kolesnik 1997) rigidly rotated onto Hipparcos frame was used as a reference catalogue. Observations were compared with DE405 ephemeris.

Improvement of the Secular Variation Curve of the Geomagnetic Field In

LETTER, Earth Planets Space, 51, 1325–1329, 1999. Improvement of the secular variation curve of the geomagnetic field in Egypt during the last 6000 years. Hatem Odah∗, Institute for Study of the Earth's Interior, Okayama University, Misasa, Tottori-ken 682-0193, Japan (Received July 14, 1999; Revised October 28, 1999; Accepted November 1, 1999). A total of 115 ceramic specimens out of 41 samples from 9 archeological sites in Giza, Fayoum, Benisuef, El Minia, Malawy, and Sohag were collected. They represent 14 well-determined ages covering the last 6000 years. Rock magnetic properties such as Curie temperature and hysteresis loops have been measured for these samples to identify the magnetic carrier; it is found to be fine-grained magnetite. All specimens were investigated using the classic Thellier double heating technique (Thellier and Thellier, 1959) and the modification after Odah et al. (1995) to obtain the paleointensity data. These paleointensities and the previous results of Odah et al. (1995) were used to improve the secular variation curve of the geomagnetic field in Egypt for the last 6000 years. This curve shows a maximum of 72.8 μT at about 250 AD and a minimum of 33 μT at about 3500 BC. It also shows a general decrease of the magnetic moment during the last 2000 years. 1. Introduction. Egypt, with its long and well-documented history, provides well-dated archeological materials suitable for pale- ... the samples were collected, have been thoroughly studied by many egyptologists and ceramicists (e.g., Boak, 1933; Bresciani, 1968; O'Connor, 1997 and others).

AAS 16-495 Nonlinear Analytical Equations Of

AAS 16-495. NONLINEAR ANALYTICAL EQUATIONS OF RELATIVE MOTION ON J2-PERTURBED ECCENTRIC ORBITS. Bradley Kuiack∗ and Steve Ulrich†. Future spacecraft formation flying missions will require accurate autonomous guidance systems to calculate a reference, or desired, relative motion during reconfiguration maneuvers. However, the efficiency in terms of propellant used for such maneuvers depends on the fidelity of the dynamics model used for calculating the reference relative motion. Therefore, an efficient method for calculating relative motion should have an analytical solution, be applicable to an eccentric orbit, and should take into account the J2 perturbation. This paper accomplishes this through an exact analytical solution of the relative motion between two spacecraft based on the orbital elements of each spacecraft. Specifically, by propagating the J2-perturbed osculating orbital elements forward in time and solving the exact solution at each time step, an accurate representation of the true spacecraft relative motion is obtained. When compared to a numerical simulator, the proposed analytical solution is shown to accurately model the relative motion, with bounded errors on the order of meters over a wide range of eccentricity values. INTRODUCTION. Formation flying of multiple spacecraft is a key enabling technology for many future scientific missions such as enhanced stellar optical interferometers and virtual platforms for Earth or Sun observations. However, formation flying involves several considerations beyond those of a single spacecraft mission. This is particularly true for the guidance system, which is responsible for calculating a reference, or desired, relative motion to be actively tracked during reconfiguration maneuvers by the on-board control system of a follower spacecraft.

Observed Auroral Ovals Secular Variation Inferred from Auroral Boundary Data

geosciences, Article. Observed Auroral Ovals Secular Variation Inferred from Auroral Boundary Data. Bruno Zossi 1,2, Hagay Amit 3, Mariano Fagre 4,5 and Ana G. Elias 1,2. 1 Laboratorio de Ionosfera, Atmósfera Neutra y Magnetosfera (LIANM), Departamento de Física, Facultad de Ciencias Exactas y Tecnología, Universidad Nacional de Tucumán, Tucuman 4000, Argentina; 2 INFINOA (CONICET-UNT), Tucuman 4000, Argentina; 3 Laboratoire de Planétologie et de Géodynamique, CNRS, Université de Nantes, Nantes Atlantiques Universités, CEDEX 3, 44322 Nantes, France; 4 Consejo Nacional de Investigaciones Científicas y Técnicas (CONICET), Tucuman 4000, Argentina; 5 Laboratorio de Telecomunicaciones, Departamento de Electricidad, Electrónica y Computación, Facultad de Ciencias Exactas y Tecnología, Universidad Nacional de Tucumán, Tucuman 4000, Argentina. Citation: Zossi, B.; Amit, H.; Fagre, M.; Elias, A.G. Abstract: We analyze the auroral boundary corrected geomagnetic latitude provided by the Auroral Boundary Index (ABI) database to estimate long-term changes of core origin in the area enclosed by this boundary during 1983–2016. We design a four-step filtering process to minimize the solar contribution to the auroral boundary temporal variation for the northern and southern hemispheres. This process includes filtering geomagnetic and solar activity effects, removal of high-frequency signal, and additional removal of a ~20–30-year dominant solar periodicity. Comparison of our results with the secular change of auroral plus polar cap areas obtained using a simple model of the magnetosphere and a geomagnetic core field model reveals a decent agreement, with area

BearWorks: Cyclostratigraphic Trends of δ13C in Upper Cambrian Strata

BearWorks, MSU Graduate Theses, Spring 2017. Cyclostratigraphic Trends of δ13C in Upper Cambrian Strata, Great Basin, USA: Implications for Astronomical Forcing. Wesley Donald Weichert, Missouri State University. As with any intellectual project, the content and views expressed in this thesis may be considered objectionable by some readers. However, this student-scholar's work has been judged to have academic value by the student's thesis committee members trained in the discipline. The content and views expressed in this thesis are those of the student-scholar and are not endorsed by Missouri State University, its Graduate College, or its employees. Follow this and additional works at: https://bearworks.missouristate.edu/theses. Part of the Geochemistry Commons, and the Sedimentology Commons. Recommended Citation: Weichert, Wesley Donald, "Cyclostratigraphic Trends of δ13C in Upper Cambrian Strata, Great Basin, USA: Implications for Astronomical Forcing" (2017). MSU Graduate Theses. 3143. https://bearworks.missouristate.edu/theses/3143. This article or document was made available through BearWorks, the institutional repository of Missouri State University. The work contained in it may be protected by copyright and require permission of the copyright holder for reuse or redistribution. CYCLOSTRATIGRAPHIC TRENDS OF δ13C IN UPPER CAMBRIAN STRATA, GREAT BASIN, USA: IMPLICATIONS FOR ASTRONOMICAL FORCING. A Masters Thesis Presented to The Graduate College of Missouri State University

The Secular Variation in the Geomagnetic Field and Other Geophysical Phenomena

THE SECULAR VARIATION IN THE GEOMAGNETIC FIELD AND OTHER GEOPHYSICAL PHENOMENA. With 7 figures. By Gy. Barta, Roland Eötvös Geophysical Institute, Budapest. Received 28. 9. 1963. SUMMARY. The author makes a comparison between the pulsation, with a period of about 50 years, recognizable in the secular variation of the geomagnetic field and similar periods observable in the speed of the Earth's rotation, in the amplitude of the variation of the polar altitude, and in the variation of sea level. From the conformity of the periods he draws the conclusion that the secular variation of the geomagnetic field is connected with a large-scale movement of masses in the interior of the Earth. Supposing that the eccentricity of about 350-400 km of the Earth's magnetic centre moves together with the Earth's inner core, the eccentricity of the masses in that dimension produces, with respect to direction and size, the triaxiality of the Earth known from geodesy, i.e. the equatorial ellipticity. It is possible that the secular variation of the geomagnetic field can be attributed to tidal forces acting on the inner core and, in consequence, to displacement of the core. It is well known that the magnetic field of the Earth is slowly changing. Long series of observations have proved that the total period of a variation amounts to about 500 years. This period is long in the history of magnetic measurements, but very short as compared with the periods of geological ages. It is, therefore, a great problem whether a slow variation bound to the interior of the Earth, i.