Protecting the Vote

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

The Fourteen-Billion-Dollar Election Emerging Campaign Finance Trends and Their Impact on the 2020 Presidential Race and Beyond

The Fourteen-Billion-Dollar Election Emerging Campaign Finance Trends and their Impact on the 2020 Presidential Race and Beyond Michael E. Toner and Karen E. Trainer The 2020 presidential and congressional election was the most expensive election in American history, shattering previous fundraising and spending records. Total spending on the 2020 election totaled an estimated $14 billion, which was more than double the amount spent during the 2016 cycle and more than any previous election in U.S. history.1 The historic 2020 spending tally was more than was spent in the previous two election cycles combined.2 Moreover, former Vice President Joseph Biden and Senator Kamala Harris made fundraising history in 2020 as their presidential campaign became the first campaign in history to raise over $1 billion in a single election cycle, with a total of $1.1 billion.3 For their part, President Trump and Vice President Pence raised in excess of $700 million for their presidential campaign, more than double the amount that they raised in 2016.4 The record amount of money expended on the 2020 election was also fueled by a significant increase in spending by outside groups such as Super PACs as well as enhanced congressional candidate fundraising. Political party expenditures increased in 2020, but constituted a smaller share of total electoral spending. Of the $14 billion total, approximately $6.6 billion was spent in connection with the presidential race and $7.2 billion was expended at the congressional level.5 To put those spending amounts into perspective, the $7.2 billion tally at the congressional level nearly equals the GDP of Monaco.6 More than $1 billion of the $14 billion was spent for online advertising on platforms such as Facebook and Google.7

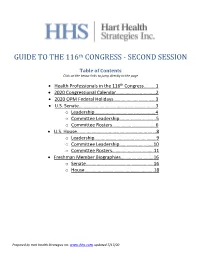

GUIDE to the 116th CONGRESS

GUIDE TO THE 116th CONGRESS - SECOND SESSION Table of Contents: Health Professionals in the 116th Congress; 2020 Congressional Calendar; 2020 OPM Federal Holidays; U.S. Senate (Leadership, Committee Leadership, Committee Rosters); U.S. House (Leadership, Committee Leadership, Committee Rosters); Freshman Member Biographies (Senate, House). Prepared by Hart Health Strategies Inc. www.hhs.com, updated 7/17/20 Health Professionals Serving in the 116th Congress The number of healthcare professionals serving in Congress increased for the 116th Congress. Below is a list of Members of Congress and their area of health care. UNITED STATES SENATE: Sen. John Barrasso, MD (R-WY), Orthopaedic Surgeon; Sen. John Boozman, OD (R-AR), Optometrist; Sen. Bill Cassidy, MD (R-LA), Gastroenterologist/Hepatologist; Sen. Rand Paul, MD (R-KY), Ophthalmologist. HOUSE OF REPRESENTATIVES: Rep. Ralph Abraham, MD (R-LA-05)†, Family Physician/Veterinarian; Rep. Brian Babin, DDS (R-TX-36), Dentist; Rep. Karen Bass, PA, MSW (D-CA-37), Nurse/Physician Assistant; Rep. Ami Bera, MD (D-CA-07), Internal Medicine Physician; Rep. Larry Bucshon, MD (R-IN-08), Cardiothoracic Surgeon; Rep. Michael Burgess, MD (R-TX-26), Obstetrician; Rep. Buddy Carter, BSPharm (R-GA-01), Pharmacist; Rep. Scott DesJarlais, MD (R-TN-04), General Medicine; Rep. Neal Dunn, MD (R-FL-02), Urologist; Rep. Drew Ferguson, IV, DMD, PC (R-GA-03), Dentist; Rep. Paul Gosar, DDS (R-AZ-04), Dentist; Rep. -

Baltimore County Voters' Guide 2020 Election

Baltimore County Voters’ Guide 2020 General Election About this Voters’ Guide This Voters’ Guide is published by the League of Women Voters. The League has a long tradition of publishing the verbatim responses of candidates to questions important to voters. The League offers this Voters’ Guide to assist citizens in their decision-making process as they prepare for participation in the general election. The League of Women Voters does not support or oppose any candidate or political party. All candidates were asked to provide biographical information and to respond to a nonpartisan questionnaire. Candidates running for the same office were asked identical questions. Responses from candidates who will appear on the ballot are printed exactly as submitted to the LWV. We did not edit for content, spelling, or grammar. Presidential candidates’ responses were limited to 750 characters. All other candidates’ responses were limited to 400 characters and any additional material was cut off at that point. If a candidate did not respond by the print deadline, "No response received by deadline" is printed. Additional information on the candidates is available at www.VOTE411.org, which has any updates received after the deadline. Candidate websites provide additional biographical and policy information. If the candidate submitted a campaign website, it is listed under her/his name. The League assumes no responsibility for errors and/or omissions.

Statement of GBC President & CEO

Statement of GBC President & CEO Donald Fry on Death of George Floyd Like many nationwide, the Greater Baltimore Committee and I are deeply saddened and dismayed by the brutal and senseless murder of George Floyd while in the custody of Minneapolis police. His murder was an act of cruel injustice - plain and simple. The GBC stands with all in the Greater Baltimore community and throughout the state and nation who demand justice for Mr. Floyd and his family. We mourn the tragic loss of George Floyd, Ahmaud Arbery, Breonna Taylor and so many others, like Freddie Gray, who have died as a result of misconduct and prejudice. The protests and demonstrations in Baltimore and across the country are filled with frustration, trauma, anger and tears. These emotions are an understandable reaction to the individual and systemic racism that is regrettably pervasive in American society. Every person deserves civil, humane treatment from police and from each other, and it is imperative that we take decisive action to eradicate racism from our society. During this deeply painful and unsettling time in our nation's history, it is our responsibility to ensure that this injustice drives positive action to address centuries of failures. This will require real, meaningful changes of attitude and behavior in our personal and professional lives. The GBC and I are committed to devote our time and energy to build bridges for racial unity, work towards solutions to address systemic societal inequities, demand just and responsible policing in Baltimore and beyond and build more equitable and inclusive workplaces. Most importantly, we commit to listen. -

The Mid-South Tribune “Where There Is No Vision, the People Will Perish

The Mid-South Tribune “Where there is no vision, the people will perish.” Aug. 28-Sept. 4, 2020 Special Edition, Memphis, TN www.blackinformationhighway.com The Mid-South GOSPEL Tribune, Black Information Highway.com, BIHMST on YouTube, The Mid-South Tribune and Black Information Highway blog Celebrating our 25th Anniversary

Trump’s policies at convention include 10 million jobs in 10 months WASHINGTON, D.C. - “President Trump continues to build on a tremendously successful first term with clear, achievable and responsive priorities for the Great American Comeback in his second term. Black Americans can vote assured that Donald J. Trump has kept his promises to us and has a plan to do even more for our country because he will continue to fight for us,” said Paris Dennard, Republican National Committee Senior Com -

What the hell do you have to lose? Time to tell Dems ‘No’! Why we are endorsing President Donald J. Trump By Ms. Arelya J. Mitchell, Publisher/Editor-in-Chief, The Mid-South Tribune “What the hell do you have to lose?” If ever there was a crossroads moment it was when President Donald J. Trump asked this rhetorical question of the black community. We say ‘nothing’ as we did in 2016. We, again, en -

African Americans should reject the Democrat Party’s paved gold roads to Marxism, Socialism, and Communism – under the guise of Democrat Socialism. The Biden-Harris-Sanders-AOC-Congressional Black Caucus-Nancy Pelosi-Black Lives Matter-Antifa ticket will take the black community and America into economic genocide. -

At-A-Glance 080720

THE COOK POLITICAL REPORT 2020 House At-A-Glance, August 7, 2020

SUMMARY: 232 Democrats, 199 Republicans, 4 Vacant Seats

CANDIDATES: This list of potential candidates for the 2020 elections is highly speculative and contains names that have been mentioned as either publicly or privately considering candidacies, or worthy of consideration as candidates or recruiting prospects by the parties or interest groups. The numerical key indicating our assessment of each person’s likelihood of running is obviously important, given the “long and dirty” nature of this list, which will be updated each week. (1) Announced candidacy or certain to run (2) Likely to run (3) Maybe (4) Mentioned but unlikely

DISTRICT DESCRIPTION: The CityLab Congressional Density Index (CDI) classifies every congressional district by the density of its neighborhoods using a machine-learning algorithm.

- Pure rural: A mix of very rural areas and small cities with some suburban areas.
- Rural-suburban mix: Significant suburban and rural populations with almost no dense urban areas.
- Sparse suburban: Predominantly suburban, with a mixture of sprawling exurb-style neighborhoods and denser neighborhoods typical of inner-ring suburbs. Often contains a small rural population and a small urban core.
- Dense suburban: Predominantly suburban, especially denser inner-ring suburbs. Also significant urban populations.
- Urban-suburban mix: A mix of urban areas and inner-ring suburbs.
- Pure urban: Almost entirely dense urban neighborhoods.

Alabama. Filing Deadline: November 8, 2019 | Primary Date: March 3, 2020 | Runoff Date: July 14, 2020

| DIST | DESCRIPTION | PVI | CANDIDATES | RATING |
| --- | --- | --- | --- | --- |
| AL-01 | Southwest corner: Mobile. Rural-suburban mix | R+15 | OPEN (Byrne) (R). Democrats: James Averhart, non-profit CEO (1). Republicans: Jerry Carl, Mobile County Commissioner (1) | Solid R |
| AL-02 | Southeast corner: Wiregrass, part of Montgomery. Pure rural | R+16 | OPEN (Roby) (R). Democrats: Phyllis Harvey-Hall, (1). Republicans: Barry Moore, frmr. - | Solid R |

Presidential and Congressional Election

STATISTICS OF THE PRESIDENTIAL AND CONGRESSIONAL ELECTION FROM OFFICIAL SOURCES FOR THE ELECTION OF NOVEMBER 3, 2020 SHOWING THE HIGHEST VOTE FOR PRESIDENTIAL ELECTORS, AND THE VOTE CAST FOR EACH NOMINEE FOR UNITED STATES SENATOR, REPRESENTATIVE, RESIDENT COMMISSIONER, AND DELEGATE TO THE ONE HUNDRED SEVENTEENTH CONGRESS, TOGETHER WITH A RECAPITULATION THEREOF, INCLUDING THE ELECTORAL VOTE COMPILED BY THE OFFICE OF THE CLERK U.S. HOUSE OF REPRESENTATIVES CHERYL L. JOHNSON http://clerk.house.gov (Published on FEBRUARY 26, 2021) WASHINGTON : 2021 STATISTICS OF THE PRESIDENTIAL AND CONGRESSIONAL ELECTION OF NOVEMBER 3, 2020 (Number which precedes name of candidate designates Congressional District. Since party names for Presidential Electors for the same candidate vary from State to State, the most commonly used name is listed in parentheses.) ALABAMA FOR PRESIDENTIAL ELECTORS: Democratic, 849,624; Republican, 1,441,170; Independent, 25,176; Write-in ... -

Administration of Donald J. Trump, 2020 Digest of Other White House

Administration of Donald J. Trump, 2020 Digest of Other White House Announcements December 31, 2020 The following list includes the President's public schedule and other items of general interest announced by the Office of the Press Secretary and not included elsewhere in this Compilation.

January 1: In the morning, the President traveled to the Trump International Golf Club in West Palm Beach, FL. In the afternoon, the President returned to his private residence at the Mar-a-Lago Club in Palm Beach, FL, where he remained overnight. The President announced the designation of the following individuals as members of a Presidential delegation to attend the World Economic Forum in Davos-Klosters, Switzerland, from January 20 through January: Steven T. Mnuchin (head of delegation); Wilbur L. Ross, Jr.; Eugene Scalia; Elaine L. Chao; Robert E. Lighthizer; Keith J. Krach; Ivanka M. Trump; Jared C. Kushner; and Christopher P. Liddell.

January 2: In the morning, the President traveled to the Trump International Golf Club in West Palm Beach, FL. In the afternoon, the President returned to his private residence at the Mar-a-Lago Club in Palm Beach, FL, where he remained overnight. During the day, the President had a telephone conversation with President Recep Tayyip Erdogan of Turkey to discuss bilateral and regional issues, including the situation in Libya and the need for deescalation of the conflict in Idlib, Syria, in order to protect civilians.

January 3: In the morning, the President was notified of the successful U.S. strike in Baghdad, Iraq, that killed Maj. Gen. Qasem Soleimani of the Islamic Revolutionary Guard Corps of Iran, commander of the Quds Force. -

Returning Roosevelt

Stars and Stripes, stripes.com. Volume 79, No. 9 ©SS 2020 THURSDAY, APRIL 30, 2020 50¢/Free to Deployed Areas. Online: Get the latest news on the virus outbreak » stripes.com/coronavirus

NASCAR: NC governor OKs Charlotte race in May (Back page). VIRUS OUTBREAK: US economy shrank 4.8% last quarter, with worst to come (Page 8). FACES: Comics stick with stand-up, even as clubs are closed (Page 16).

Esper defers projects to fund US border wall BY JOHN VANDIVER Stars and Stripes STUTTGART, Germany - Military projects primarily aimed at countering Russia, including upgrades to a U.S. Navy base in Spain, are among those being put on the backburner as the Pentagon shifts funds to pay for a border wall. Defense Secretary Mark Esper this week ordered $545 million in construction projects to be redirected to fund a wall along the U.S.’s southern border with -

Returning to the Roosevelt: Sailors begin moving back onto carrier hit by virus BY CAITLIN DOORNBOS Stars and Stripes YOKOSUKA NAVAL BASE, Japan - The Navy began moving sailors back aboard the aircraft carrier USS Theodore Roosevelt, sidelined in Guam after nearly a fourth of its 4,000-member crew tested positive for coronavirus. The sailors returning to the ship Wednesday had been restricted to off-base hotel rooms for nearly a month as officials tested all crew members for the coronavirus and sanitized the carrier, according to a statement Wednesday from the Joint Region Marianas Public Affairs Office in Guam. Sailors who tested negative for coronavirus in three separate tests were allowed to embark the Roosevelt. -

A Newsletter for Conservative Republicans

A Newsletter for Conservative Republicans FLYING HIGH…AND DIGGING AND BORING TO KEEP BREVARD COUNTY RED AND GET CONSERVATIVES ELECTED Editor and Publisher: Stuart Gorin Designer and Assistant Publisher: Frank Montelione Number 130 September 2020

PRESIDENT TRUMP RE-NOMINATED AT REPUBLICAN NATIONAL CONVENTION The joyous Republican National Convention gathering in Charlotte, North Carolina, and Washington, DC, August 24-27 re-nominated President Donald Trump and Vice President Mike Pence and was a far cry from the doom-and-gloom Democrat event held one week earlier in Milwaukee, Wisconsin. That convention featured videos of a bevy of critical anti-Trump so-called entertainers, while the Republican one focused on everyday Americans telling how the President has made their lives better. Republican National Committee Chair Ronna McDaniel -

FROM THE EDITOR’S DESK: MY TWO CENTS By Stuart Gorin Columnist Star Parker, who founded the non-profit think tank CURE (Center for Urban Renewal and Education), placed billboards in Milwaukee that read: “Tired of poverty? Finish School, Take Any Job, Get Married, Save & Invest, Give Back To Your Neighborhood.” An inspiring message…except to the city’s social media morons, who challenged the billboards as being “racist and sexist.” -0- The federal government has “Tomahawk” cruise missiles and “Apache,” “Blackhawk,” “Kiowa” and “Lakota” helicopters. Its code name in the attack that killed Osama bin Laden was “Geronimo.” All good. So why is the name Washington “Redskins” offensive? -0- What is a “statesman”? According to the Fake News media, it is every RINO Republican who agrees with Democrats on all big issues – especially those that sabotage the President. -

American Nephrology Nurses Association

American Nephrology Nurses Association Daily Capitol Hill Update – Wednesday, April 29, 2020 (The following information comes from Bloomberg Government Website)

Schedules: White House and Congress

WHITE HOUSE
- 10am: President Trump participates in phone call with industry leaders from food, agriculture
- 11am: Trump meets with Louisiana Gov. John Bel Edwards
- 12:30pm: Trump has lunch with Sec. of State Mike Pompeo
- 4pm: Trump participates in roundtable with industry execs on reopening economy

CONGRESS
- Senate to return next week even as the House scrapped plans to reconvene

Congressional, Health Policy, and Political News

FEMA, HHS Acknowledges Shortages to Panel: House Oversight and Reform Chairwoman Carolyn Maloney (D-N.Y.) said yesterday in a statement that officials from the Federal Emergency Management Agency and Department of Health and Human Services had told lawmakers in briefings that states face shortages of testing supplies as well as personal protective equipment, such as masks and medical gowns.
- The acknowledgment comes after weeks of President Donald Trump stating governors have sufficient testing and equipment.
- Trump said in a Twitter post last night that “the only reason the U.S. has reported one million cases of CoronaVirus is that our Testing is sooo much better than any other country in the World.”

Doctors Want Medicare Program Reinstated: The nation’s doctors want the Trump administration to restart a Medicare loan payment program that offers over $40 billion to help them weather losses in revenue during the coronavirus crisis. More than 24,000 loans had been approved for doctors, other caregivers, and suppliers of durable medical equipment since March 28 under Medicare’s Advance Payment Program. -

Biden Wins Primary Delayed by Virus COLUMBUS, Ohio (AP) — Ballots

The World. Serving Oregon’s South Coast Since 1878 | WEDNESDAY, APRIL 29, 2020 | theworldlink.com | $2. Baseball changes? MLB might use regional divisions, B1. COVID-19 crisis: Shutter Creek employees speak out, A3. CLOUDY, RAIN 58 • 50, FORECAST A9.

Proposal to limit council JILLIAN WARD The World NORTH BEND - As ballots go out to voters this week, North Bend residents are being asked if they want to take some power back from their city council. Measure 6-176 asks voters, “Shall the power to add or increase fees be removed from

the ballot measure, a “yes” vote will amend the city charter to require that any decision to raise or add North Bend taxes, fees or other revenue-generating mechanisms be decided by popular vote during either a May or November election. It would allow for exceptions in “charges otherwise subject to voter approval or subject to stan-

not exceed recent Social Security cost-of-living increases. Measure 6-176 - as well as Measure 6-177, regarding the public safety fee - was brought to the ballot by North Bend Citizens for Good Faith Government. The chairman for the local grassroots group, John Briggs, said he had grown concerned over how the city council was

increase in a municipal public safety fee. But last May, his wife showed him their water bill and “the (public safety fee) was almost half of the total bill.” That sent him marching to North Bend City Hall to ask where the council got its legal authority to make this decision regardless of a popular vote against it.

said Briggs. “Therefore, it is permitted. “I turned around and didn’t get more than three steps before I thought to myself, ‘This is an open checkbook from my pocket and everyone else in the city. They don’t even have to ask me, because it’s not prohibited for them to set up a fee any time