Interactive Internet TV Architecture Based on Scalable Video Coding

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Capabilities of the Horchow Auditorium and the Orientation

Performance Capabilities of Horchow Auditorium and Atrium at the Dallas Museum of Art

Horchow Auditorium Capacity and Stage: The auditorium seats 333 people; an optional 12 removable chairs in the back bring the maximum capacity to 345. The stage is 45’ x 18’ and the screen is 27’ x 14’. A height-adjustable podium, microphone, podium clock and light are available as standard equipment.

Installed/Available Equipment

Sound: 24-channel sound board; 4 stage monitors (with up to 4 mixes); 6 hardwired microphones; 4 wireless lavaliere microphones; 2 handheld wireless microphones (with headphone option); 9-foot Steinway Concert Grand Piano; 3 Bose towers (these have been requested by acoustic performers before and work very well); music stands.

Lighting: 24 fixed lights; 5 movers (these give a wide array of lighting looks).

Projection: Panasonic PTRQ32 4K 20,000 Lumen Laser Projector.

Preferred Video Formats in Horchow: Blu Ray; DVD; Apple ProRes 4:2:2 Standard in a .mov wrapper; H.264 in a .mov wrapper.

Formats we can use, but are not optimal: MPEG-1/2; Dirac / VC-2; DivX® (1/2/3/4/5/6); MJPEG (A/B); MPEG-4 ASP; WMV 1/2; XviD; WMV 3 / WMV-9 / VC-1; 3ivX D4; Sorenson 1/3; H.261/H.263 / H.263i; DV; H.264 / MPEG-4 AVC; On2 VP3/VP5/VP6; Cinepak; Indeo Video v3 (IV32); Theora; Real Video (1/2/3/4).

Atrium Capacity and Stage: The Atrium seats up to 500 people (chair rental required). The stage available to be installed in the Atrium is 16’ x 12’ x 1’.

(A/V Codecs) REDCODE RAW (.R3D) ARRIRAW

What is a Codec?

Codec is a portmanteau of either "Compressor-Decompressor" or "Coder-Decoder," which describes a device or program capable of performing transformations on a data stream or signal. Codecs encode a stream or signal for transmission, storage or encryption and decode it for viewing or editing. Codecs are often used in videoconferencing and streaming media solutions. A video codec converts analog video signals from a video camera into digital signals for transmission. It then converts the digital signals back to analog for display. An audio codec converts analog audio signals from a microphone into digital signals for transmission. It then converts the digital signals back to analog for playing.

The raw encoded form of audio and video data is often called essence, to distinguish it from the metadata information that together make up the information content of the stream and any "wrapper" data that is then added to aid access to or improve the robustness of the stream. Most codecs are lossy, in order to get a reasonably small file size. There are lossless codecs as well, but for most purposes the almost imperceptible increase in quality is not worth the considerable increase in data size. The main exception is if the data will undergo more processing in the future, in which case the repeated lossy encoding would damage the eventual quality too much.

Many multimedia data streams need to contain both audio and video data, and often some form of metadata that permits synchronization of the audio and video. Each of these three streams may be handled by different programs, processes, or hardware; but for the multimedia data stream to be useful in stored or transmitted form, they must be encapsulated together in a container format.
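The encapsulation step described above, where separately produced audio and video streams are combined into one container, can be illustrated with a toy sketch. Everything here is hypothetical and chosen for illustration: the `Packet` type, the `mux` and `demux` helpers, and the timestamps do not correspond to any real container format such as MP4 or Matroska, which carry far more structure (headers, indexes, codec parameters).

```python
from dataclasses import dataclass
from heapq import merge


@dataclass(frozen=True)
class Packet:
    stream: str        # hypothetical stream label, e.g. "video" or "audio"
    timestamp_ms: int  # presentation time used for synchronization
    payload: bytes     # the encoded "essence" produced by a codec


def mux(*streams):
    """Interleave already time-ordered packet lists into one container list."""
    return list(merge(*streams, key=lambda p: p.timestamp_ms))


def demux(container):
    """Split a container list back into per-stream packet lists."""
    streams = {}
    for packet in container:
        streams.setdefault(packet.stream, []).append(packet)
    return streams


# Illustrative data: video every 40 ms, audio every 20 ms.
video = [Packet("video", t, b"v") for t in (0, 40, 80)]
audio = [Packet("audio", t, b"a") for t in (0, 20, 40, 60)]
container = mux(video, audio)
```

Interleaving by timestamp means a player reading the container sequentially meets the audio and video that belong together at roughly the same point, which is the synchronization role the text assigns to the metadata.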

Mcgraw-Hill New York Chicago San Francisco Lisbon London Madrid Mexico City Milan New Delhi San Juan Seoul Singapore Sydney Toronto Mcgraw-Hill Abc

Streaming Media Demystified. Michael Topic. McGraw-Hill: New York, Chicago, San Francisco, Lisbon, London, Madrid, Mexico City, Milan, New Delhi, San Juan, Seoul, Singapore, Sydney, Toronto.

Copyright © 2002 by The McGraw-Hill Companies, Inc. All rights reserved. Manufactured in the United States of America. Except as permitted under the United States Copyright Act of 1976, no part of this publication may be reproduced or distributed in any form or by any means, or stored in a database or retrieval system, without the prior written permission of the publisher. 0-07-140962-9. The material in this eBook also appears in the print version of this title: 0-07-138877-X.

All trademarks are trademarks of their respective owners. Rather than put a trademark symbol after every occurrence of a trademarked name, we use names in an editorial fashion only, and to the benefit of the trademark owner, with no intention of infringement of the trademark. Where such designations appear in this book, they have been printed with initial caps. McGraw-Hill eBooks are available at special quantity discounts to use as premiums and sales promotions, or for use in corporate training programs. For more information, please contact George Hoare, Special Sales, at george_hoare@mcgraw-hill.com or (212) 904-4069.

TERMS OF USE: This is a copyrighted work and The McGraw-Hill Companies, Inc. (“McGraw-Hill”) and its licensors reserve all rights in and to the work. Use of this work is subject to these terms.

For Immediate Release Press Contact Wendy Espey Phone: +61 2 9635 4881 [email protected]

For Immediate Release. Press Contact: Wendy Espey, Phone: +61 2 9635 4881, [email protected]

3ivx MPEG-4 5.0 Global Release

Sydney, Australia - June 6, 2007 - 3ivx Technologies Pty. Ltd., the MPEG-4 Video & Audio specialist, announces the immediate worldwide availability of version 5.0 of the 3ivx MPEG-4 compression suite for Windows, Mac OS X & Linux. This release is the culmination of 7 years of research and development. 3ivx’s compression technology enables the transmission and storage of video which would otherwise be too large to store or transmit. 3ivx MPEG-4 5.0 consists of separate MPEG-4 Audio, MPEG-4 Video and MP4 File Format components for authoring and playback of MPEG-4 media.

Technical Details: 3ivx MPEG-4 5.0 is an MPEG-4 Part 2 Advanced Simple Profile implementation for Mac OS X, Windows Vista and Linux. 3ivx MPEG-4 5.0 now includes support for B-Frames, Interlaced Decoding and Global Motion Compensation. MPEG, H.263 and Custom Quantization Matrices, Pixel Aspect Ratios, NVop Suppression, Variable Framerate Encoding and Quarter Pixel or Half Pixel Motion Interpolation with 8x8 or 16x16 Motion Vector functionality have also been added. New CPU Complexity Controls enable realtime video encoding by allowing Speed vs Quality trade-offs and, combined with VBR, CBR and ABR Single Pass modes, provide enhanced Live Streaming capabilities. Video Archiving and Offline Production benefit from Dual Pass modes with Fast First Pass and Credit Suppression. Error Detection, Recovery and Concealment aid in the playback of error-prone streams, while the MP4 Media Tools can be used to recondition and repair malformed pre-existing MPEG-4 streams to enable broader interoperability.

Hi3716m V430 Hi3716m V430 Cable HD Chip Brief Data Sheet

Hi3716M V430 Cable HD Chip Brief Data Sheet

Key Specifications

CPU
High-performance ARM Cortex-A7 processor
Built-in I-cache, D-cache, and L2 cache
Hardware Java acceleration
Floating-point coprocessor

Memory Control Interfaces
DDR3/DDR3L interface
− Maximum capacity of 512 MB for an external DDR SDRAM or of 128 MB/256 MB for a built-in DDR SDRAM
− 16-bit data width
A SPI NOR flash/SPI NAND flash, a parallel SLC NAND flash, or a SPI NOR flash+a parallel SLC NAND flash

Video Decoding (HiVXE 2.0 Processing Engine)
H.265 Main/Main 10@Level 4.1 high-tier
H.264 BP/MP/HP@Level 4.2; MVC
MPEG-1
MPEG-2 SP@ML and MP@HL
MPEG-4 SP@Levels 0–3, ASP@Levels 0–5, GMC, and MPEG-4 short header format (H.263 baseline)
AVS baseline@Level 6.0 and AVS+
VC-1 SP@ML, MP@HL, and AP@Levels 0–3
VP6/VP8
1-channel 1080p@60 fps decoding

Image Decoding

Graphics and Display Processing (Imprex 2.0 Processing Engine)
Hardware TDE
3-layer OSD
Two video layers
16-bit and 32-bit color depth
Full-hardware anti-aliasing and anti-flicker
IE, NR, and CCS
DEI

Audio and Video Interfaces
PAL, NTSC, and SECAM standard outputs, and forcible standard conversion
Aspect ratio of 4:3 or 16:9, forcible aspect ratio conversion, and free scaling
1080p50(60)/1080i/720p/576p/576i/480p/480i outputs
HD and SD output for the same source
Color gamut compliant with the xvYCC (IEC 61966-2-4) standard
HDMI 1.4b with HDCP1.4
Analog video interfaces
− One CVBS interface
− One built-in VDAC
− VBI
Audio interface
− Audio-left and audio-right channels
− S/PDIF

Input Formats & Codecs

Input Formats & Codecs

Pivotshare offers upload support to over 99.9% of codecs and container formats. Please note that video container formats are independent of codec support.

Input Video Container Formats (Independent of codec): 3GP/3GP2 ASF (Windows Media) AVI DNxHD (SMPTE VC-3) DV video Flash Video Matroska MOV (Quicktime) MP4 MPEG-2 TS, MPEG-2 PS, MPEG-1 Ogg PCM VOB (Video Object) WebM Many more...

Unsupported Video Codecs: Apple Intermediate ProRes 4444 (ProRes 422 Supported) HDV 720p60 Go2Meeting3 (G2M3) Go2Meeting4 (G2M4) ER AAC LD (Error Resilient, Low-Delay variant of AAC) REDCODE

Supported Video Codecs: 3ivx 4X Movie Alaris VideoGramPiX Alparysoft lossless codec American Laser Games MM Video AMV Video Apple QuickDraw ASUS V1 ASUS V2 ATI VCR-2 ATI VCR1 Auravision AURA Auravision Aura 2 Autodesk Animator Flic video Autodesk RLE Avid Meridien Uncompressed AVImszh AVIzlib AVS (Audio Video Standard) video Beam Software VB Bethesda VID video Bink video Blackmagic 10-bit Broadway MPEG Capture Codec Brooktree 411 codec Brute Force & Ignorance CamStudio Camtasia Screen Codec Canopus HQ Codec Canopus Lossless Codec CD Graphics video Chinese AVS video (AVS1-P2, JiZhun profile) Cinepak Cirrus Logic AccuPak Creative Labs Video Blaster Webcam Creative YUV (CYUV) Delphine Software International CIN video Deluxe Paint Animation DivX ;-) (MPEG-4) DNxHD (VC3) DV (Digital Video) Feeble Files/ScummVM DXA FFmpeg video codec #1 Flash Screen Video Flash Video (FLV) / Sorenson Spark / Sorenson H.263 Forward Uncompressed Video Codec fox motion video FRAPS:

Computational Complexity Optimization on H.264 Scalable/Multiview Video Coding

Computational Complexity Optimization on H.264 Scalable/Multiview Video Coding. By Guangyao Zhang. A thesis submitted in partial fulfilment for the requirements for the degree of PhD, at the University of Central Lancashire, April 2014.

Student Declaration. Concurrent registration for two or more academic awards: I declare that while registered as a candidate for the research degree, I have not been a registered candidate or enrolled student for another award of the University or other academic or professional institution. Material submitted for another award: I declare that no material contained in the thesis has been used in any other submission for an academic award and is solely my own work. Collaboration: This work presented in this thesis was carried out at the ADSIP (Applied Digital Signal and Image Processing) Research Centre, University of Central Lancashire. The work described in the thesis is entirely the candidate’s own work. Signature of Candidate ________________________________________ Type of Award: Doctor of Philosophy. School: School of Computing, Engineering and Physical Sciences.

Abstract: The H.264/MPEG-4 Advanced Video Coding (AVC) standard is a high efficiency and flexible video coding standard compared to previous standards. The high efficiency is achieved by utilizing a comprehensive full search motion estimation method. Although the H.264 standard improves the visual quality at low bitrates, it enormously increases the computational complexity. The research described in this thesis focuses on optimization of the computational complexity on H.264 scalable and multiview video coding. Nowadays, video application areas range from multimedia messaging and mobile to high definition television, and they use different types of transmission systems.
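To make the complexity point concrete, here is a minimal sketch of exhaustive ("full search") block-matching motion estimation using the sum of absolute differences (SAD) as the cost. It is a generic textbook illustration, not the thesis's method and not code from any H.264 reference encoder; the function names, the 8x8 test frames and the search range are assumptions chosen for the example.

```python
def sad(cur, ref, bx, by, dx, dy, n):
    """Sum of absolute differences between the n x n block of `cur` at
    (bx, by) and the block of `ref` displaced by (dx, dy)."""
    return sum(
        abs(cur[by + y][bx + x] - ref[by + dy + y][bx + dx + x])
        for y in range(n)
        for x in range(n)
    )


def full_search(cur, ref, bx, by, n, search_range):
    """Test every displacement in [-search_range, +search_range]^2 and
    return the (dx, dy) with the lowest SAD together with that SAD."""
    height, width = len(ref), len(ref[0])
    best_mv, best_cost = None, None
    for dy in range(-search_range, search_range + 1):
        for dx in range(-search_range, search_range + 1):
            # Skip candidates whose reference block falls outside the frame.
            if not (0 <= bx + dx <= width - n and 0 <= by + dy <= height - n):
                continue
            cost = sad(cur, ref, bx, by, dx, dy, n)
            if best_cost is None or cost < best_cost:
                best_mv, best_cost = (dx, dy), cost
    return best_mv, best_cost


# Illustrative frames: `cur` is `ref` shifted by 2 pixels in x and 1 in y.
ref = [[8 * y + x for x in range(8)] for y in range(8)]
cur = [[ref[min(y + 1, 7)][min(x + 2, 7)] for x in range(8)] for y in range(8)]
mv, cost = full_search(cur, ref, bx=2, by=2, n=4, search_range=2)
```

With a search range of r, up to (2r + 1)^2 candidate positions are evaluated per block, and each evaluation touches n^2 pixels, which is why exhaustive search dominates encoder run time and why faster search strategies are an active optimization target.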

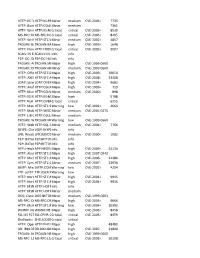

HTTP: IIS "Propfind" Rem HTTP:IIS:PROPFIND Minor Medium

HTTP: IIS "propfind" Remote DoS; HTTP:IIS:PROPFIND; Minor; medium; CVE-2003-0226; 7735
HTTP: Ikonboard Illegal Cookie Language; HTTP:CGI:IKONBOARD-BADCOOKIE; Minor; medium; 7361
HTTP: Windows Media Services NSIISlog.DLL Buffer Overflow; HTTP:IIS:NSIISLOG-OF; Critical; critical; CVE-2003-0349; 8035
MS-RPC: DCOM Exploit; MS-RPC:DCOM:EXPLOIT; Critical; critical; CVE-2003-0352; 8205
HTTP: WinHelp32.exe Remote Buffer Overrun; HTTP:STC:WINHELP32-OF2; Minor; medium; CVE-2002-0823(2); 4857
TROJAN: Back Orifice 2000 Client Connection; TROJAN:BACKORIFICE:BO2K-CONNECT; Major; high; CVE-1999-0660; 1648
HTTP: Frontpage fp30reg.dll Overflow; HTTP:FRONTPAGE:FP30REG.DLL-OF; Critical; critical; CVE-2003-0822; 9007
SCAN: IIS Enumeration; SCAN:II:IIS-ISAPI-ENUM; Info; info
P2P: DC: Direct Connect Plus Plus Client Hub Login; P2P:DC:HUB-LOGIN; Info; info
TROJAN: AOL Admin Server Response; TROJAN:MISC:AOLADMIN-SRV-RESP; Major; high; CVE-1999-0660
TROJAN: Digital Rootbeer Client Connect; TROJAN:MISC:ROOTBEER-CLIENT; Minor; medium; CVE-1999-0660
HTTP: Office Art Property Table Buffer Overflow; HTTP:STC:DL:OFFICEART-PROP; Major; high; CVE-2009-2528; 36650
HTTP: AXIS Communications Camera Control (AxisCamControl.ocx) Unsafe ActiveX Control; HTTP:STC:ACTIVEX:AXIS-CAMERA; Major; high; CVE-2008-5260; 33408
LDAP: Ipswitch IMail LDAP Daemon Remote Buffer Overflow; LDAP:OVERFLOW:IMAIL-ASN1; Major; high; CVE-2004-0297; 9682
HTTP: Anyform Semicolon; HTTP:CGI:ANYFORM-SEMICOLON; Major; high; CVE-1999-0066; 719
HTTP: Mini SQL w3-msql File View Disclosure; HTTP:CGI:W3-MSQL-FILE-DISCLSR; Minor; medium; CVE-2000-0012; 898
HTTP: IIS MFC ISAPI Framework Overflow (via; HTTP:IIS:MFC-EXT-OF; Major; high

NATAS-Boston/New England Student Production Awards

NATAS-Boston/New England Student Production Awards

STANDARD RULES

PURPOSE: To recognize outstanding student achievements in production by conferring awards of merit in the Boston/New England chapter’s award region. The presentation of these awards is intended to be an incentive for the continued pursuit of excellence by those studying media and journalism and to focus public attention on outstanding cultural, educational, technological, entertainment, news and informational achievements by undergraduate college and/or high school students.

ELIGIBILITY:
1. Programs or program segments conceived, produced and executed by students at a high school, university, college or technical/vocational school within the Boston/New England Chapter’s award area are eligible for student award consideration.
2. Returning students who previously worked as professionals are not eligible.
3. No professional services may have been employed in the production of the entry.
4. Faculty involvement can only be advisory.
5. Entrants must be enrolled as full-time students as of the entry deadline.
6. Students may enter work that was produced as a class assignment, extra-curricular assignment or in conjunction with their academic experience.
7. Students who perform professional work may enter their work in the professional awards competition, provided they meet all eligibility requirements.
8. No entry may be submitted to more than one Chapter’s awards.
9. The entry does not need to have been broadcast, cablecast or webcast to be eligible.
10. Entries must be submitted as they were originally transmitted.
11. There may not be any post-broadcast changes, except edits for time, as noted on the category descriptions that follow.

Hi3796m V200 Brief Data Sheet

Hi3796M V200 Brief Data Sheet

Key Specifications

High-Performance CPU
64-bit quad-core high-performance ARM Cortex A53
Integrated multimedia acceleration engine NEON
Hardware Java acceleration
Integrated hardware floating-point coprocessor
Integrated L1 and L2 Cache

3D GPU
Integrated high-performance multi-core GPU Mali 450
OpenGL ES 2.0/1.1 and OpenVG 1.1

Memory Control Interfaces
DDR3/DDR3L/DDR4 interface, supporting maximum 32-bit data width
eMMC 5.0 flash interface
SPI NOR flash interface
SPI NAND flash interface
Asynchronous/Synchronous NAND flash interface
− SLC/MLC flash memory
− Maximum 64-bit ECC

Video Decoding (HiVXE 2.0 Processing Engine)
AVS2 Main-10bit Profile, Level 8.2.60, supporting maximum 4K x 2K@60 fps 10-bit decoding
H.265/HEVC Main/Main 10 Profile@Level 5.1 high-tier,

AAC-LC and HE-AAC V1/V2 decoding
APE, FLAC, Ogg, AMR-NB, and AMR-WB decoding
G.711 (u/a) audio decoding
G.711 (u/a), AMR-NB, AMR-WB, and AAC-LC audio encoding
HE-AAC transcoding DD (AC3)

Channel Decoding and TS De-multiplexing
One embedded QAM module, supporting the ITU J83-A/B/C standard
Multi TS processing simultaneously
TS interface for Full Band Capture device
Various descrambling algorithms of the DVB

Security Processing
Advanced CA
Downloadable Conditional Access System
TVOS security mechanism
Secure boot, secure storage, and secure upgrade
DRM and hardware watermark
HDCP 2.2/1.4 protection for HDMI outputs

Graphics and Display Processing (Imprex 2.0 Processing Engine)
Dolby Vision, HDR10, HLG, and SLF

Video Encoding with Open Source Tools

Video Encoding with Open Source Tools. Steffen Bauer, 26/1/2010, LUG Linux User Group Frankfurt am Main.

Overview: Basic concepts of video compression; Video formats and codecs; How to do it with Open Source and Linux.

1. Basic concepts of video compression

Characteristics of video streams

Framerate: Number of still pictures per unit of time of video; up to 120 frames/s for professional equipment. PAL video specifies 25 frames/s.

Interlacing / Progressive Video: Interlaced: lines of one frame are drawn alternately in two half-frames. Progressive: all lines of one frame are drawn in sequence.

Resolution: Size of a video image (measured in pixels for digital video). 768/720×576 for PAL resolution; up to 1920×1080p for HDTV resolution.

Aspect Ratio: Dimensions of the video screen; ratio between width and height. Pixels used in digital video can have non-square aspect ratios! Usual ratios are 4:3 (traditional TV) and 16:9 (anamorphic widescreen).

Why video encoding? Example: 52 seconds of DVD PAL movie (16:9, 720x576p, 25 fps, progressive scan)

| Video codec | Raw Size | Compression factor | Comment |
| --- | --- | --- | --- |
| Raw frames | 1.1 GB | - | 1300 single frames, MotionTarga, uncompressed |
| HUFFYUV | 459 MB | 2.2 / 55% | Lossless compression |
| MJPEG | 60 MB | 20 / 95% | Motion JPEG; lossy; intraframe only |
| lavc MPEG-2 | 24 MB | 50 / 98% | Standard DVD quality |
| x264 MPEG-4 AVC | 5.3 MB | 200 / 99.5% | High efficient video codec |

Basic principles of multimedia encoding

Video compression: lossy compression (irreversible; using shortcomings in human perception) and lossless compression (reversible; statistical encoding).

Intraframe encoding
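The arithmetic behind the 52-second example can be sketched as follows. The frame count follows directly from the stated duration and frame rate. The raw size depends on the pixel format, which the excerpt does not state, so the value of 2 bytes per pixel below is an assumption made only for illustration; under it the raw size comes to roughly 1.08 billion bytes, close to the 1.1 GB listed. The helper names are hypothetical.

```python
def frame_count(duration_s, fps):
    """Number of frames in a clip of the given duration and frame rate."""
    return duration_s * fps


def raw_size_bytes(width, height, frames, bytes_per_pixel):
    """Uncompressed size: every pixel of every frame stored in full."""
    return width * height * frames * bytes_per_pixel


def compression_factor(raw_bytes, encoded_bytes):
    """How many times smaller the encoded stream is than the raw one."""
    return raw_bytes / encoded_bytes


def space_saving(raw_bytes, encoded_bytes):
    """Fraction of the raw size removed by encoding (0.5 means 50%)."""
    return 1 - encoded_bytes / raw_bytes


frames = frame_count(52, 25)  # 52 s of PAL video at 25 frames/s
# bytes_per_pixel=2 is an assumed pixel format, not taken from the source.
raw = raw_size_bytes(720, 576, frames, bytes_per_pixel=2)
```

The two columns of the "compression factor" entry in the table correspond to these two views of the same ratio: a factor (raw divided by encoded) and a percentage saved.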