Were We Destined to Live in Facebook's World?

Total pages: 16

File type: PDF, size: 1020 Kb


Recommended publications


Is Italy an “Atlantic” Country?

Marco Mariano. [Italians] have always flourished under a strong hand, whether Caesar’s or Hildebrand’s, Cavour’s or Crispi’s. That is because they are not a people like ourselves or the English or the Germans, loving order and regulation and government for their own sake.... When his critics accuse [Mussolini] of unconstitutionality they only recommend him the more to a highly civilized but naturally lawless people. (Anne O’Hare McCormick, New York Times Magazine, July 22, 1923) In this paper I will try to outline the emergence of the idea of the Atlantic Community (hereafter AC) during and in the aftermath of World War II, and the peculiar, controversial place of Italy in the AC framework. Both among American policymakers and in public discourse, especially in the press, AC came to define a transatlantic space comprising mainly North American and Western European countries, which supposedly shared political and economic principles and institutions (liberal democracy, individual rights and the rule of law, free market and free trade), cultural traditions (Christianity and, more generally, “Western civilization”) and, consequently, national interests. While the preexisting idea of Western civilization was defined mainly in cultural-historical terms and did not imply any institutional obligation, now the impending threat of the Cold War and the confrontation with the Communist bloc demanded a commitment to be part of a “community” with shared beliefs and needs, in which every single member is responsible for the safety and prosperity of all the other members. The obvious political counterpart of such a discourse on Euro-American relations was the birth of the North Atlantic Treaty Organization (NATO) on April 4, 1949.

Online Media and the 2016 US Presidential Election

Partisanship, Propaganda, and Disinformation: Online Media and the 2016 U.S. Presidential Election. Citation: Faris, Robert M., Hal Roberts, Bruce Etling, Nikki Bourassa, Ethan Zuckerman, and Yochai Benkler. 2017. Partisanship, Propaganda, and Disinformation: Online Media and the 2016 U.S. Presidential Election. Berkman Klein Center for Internet & Society Research Paper. Citable link: http://nrs.harvard.edu/urn-3:HUL.InstRepos:33759251. This article was downloaded from Harvard University’s DASH repository and is made available under the terms and conditions applicable to Other Posted Material, as set forth at http://nrs.harvard.edu/urn-3:HUL.InstRepos:dash.current.terms-of-use#LAA. August 2017. Acknowledgments: This paper is the result of months of effort and would not exist without the generous input of many people from the Berkman Klein Center and beyond. Jonas Kaiser and Paola Villarreal expanded our thinking around methods and interpretation. Brendan Roach provided excellent research assistance. Rebekah Heacock Jones helped get this research off the ground, and Justin Clark helped bring it home. We are grateful to Gretchen Weber, David Talbot, and Daniel Dennis Jones for their assistance in the production and publication of this study. This paper has also benefited from contributions of many outside the Berkman Klein community. The entire Media Cloud team at the Center for Civic Media at MIT’s Media Lab has been essential to this research.

Hillary Clinton's Campaign Was Undone by a Clash of Personalities

Hillary Clinton’s campaign was undone by a clash of personalities more toxic than anyone imagined. E-mails and memos, published here for the first time, reveal the backstabbing and conflicting strategies that produced an epic meltdown. By Joshua Green. The Front-Runner’s Fall: For all that has been written and said about Hillary Clinton’s epic collapse in the Democratic primaries, one issue still nags. Everybody knows what happened. But we still don’t have a clear picture of how it happened, or why. The after-battle assessments in the major newspapers and newsweeklies generally agreed on the big picture: the campaign was not prepared for a lengthy fight; it had an insufficient delegate operation; it squandered vast sums of money; and the candidate herself evinced a paralyzing schizophrenia: one day a shots-’n’-beers brawler, the next a Hallmark Channel mom. Through it all, her staff feuded and bickered, while her husband distracted. … e-mail feuds was handed over. (See for yourself: much of it is posted online at www.theatlantic.com/clinton.) Two things struck me right away. The first was that, outward appearances notwithstanding, the campaign prepared a clear strategy and did considerable planning. It sweated the large themes (Clinton’s late-in-the-game emergence as a blue-collar champion had been the idea all along) and the small details (campaign staffers in Portland, Oregon, kept tabs on Monica Lewinsky, who lived there, to avoid any surprise encounters). The second was the thought: Wow, it was even worse than I’d imagined! The anger and toxic obsessions overwhelmed even…

Executive Order No. 2-21 Designating Juneteenth As an Official City Holiday

EXECUTIVE ORDER NO. 2-21: DESIGNATING JUNETEENTH AS AN OFFICIAL CITY HOLIDAY AND RENAMING THE HOLIDAY FORMERLY KNOWN AS COLUMBUS DAY TO INDIGENOUS PEOPLES’ DAY
WHEREAS, the City of Philadelphia holds an integral place in our nation’s founding as the birthplace of democracy, the Constitution, and the Declaration of Independence, where the following words were written: “that all men are created equal, that they are endowed by their Creator with certain unalienable rights, that among these are life, liberty and the pursuit of happiness”;
WHEREAS, despite these words, the United States continued to be stained by the institution of slavery and by racism;
WHEREAS, President Lincoln’s Emancipation Proclamation, ending slavery in the Confederacy, did not mean true freedom for all enslaved Africans;
WHEREAS, on June 19, 1865, Major General Gordon Granger issued an order informing the people of Texas “that in accordance with a proclamation from the Executive of the United States, all slaves are free”;
WHEREAS, the General’s order established the basis for the holiday now known as Juneteenth, which has become the most popular annual celebration of emancipation from slavery in the United States;
WHEREAS, on June 19, 2019, Governor Tom Wolf designated June 19th as Juneteenth National Freedom Day in Pennsylvania;
WHEREAS, the City of Philadelphia is a diverse and welcoming city where, according to the 2018 American Community Survey, 40% of residents are Black;
WHEREAS, Juneteenth has a unique cultural and historical significance here in Philadelphia and across the country;
WHEREAS, Juneteenth represents the resiliency of the human spirit and the triumph of emancipation, and marks a day of reflection;
WHEREAS, the need to acknowledge institutional and structural racism is greater now than ever;
WHEREAS, the City of Philadelphia is committed to working toward true equity for all Philadelphia residents and toward healing our communities;
WHEREAS, the story of Christopher Columbus is deeply complicated.

Legislative Resolution 351

LR351. ONE HUNDRED SECOND LEGISLATURE, FIRST SESSION. LEGISLATIVE RESOLUTION 351. Introduced by Council, 11; Cook, 13.
WHEREAS, for more than 130 years, Juneteenth National Freedom Day has been the oldest and only African-American holiday observed in the United States; and
WHEREAS, Juneteenth is also known as Emancipation Day, Emancipation Celebration, Freedom Day, and Jun-Jun; and
WHEREAS, Juneteenth commemorates the strong survival instinct of African Americans who were first brought to this country stacked in the bottom of slave ships on a month-long journey across the Atlantic Ocean, known as the Middle Passage; and
WHEREAS, approximately 11.5 million African Americans survived the voyage to the New World, and the number who died is likely greater; and
WHEREAS, events in the history of the United States which led to the Civil War centered around sectional differences between the North and the South that were based on the economic and social divergence caused by the existence of slavery; and
WHEREAS, President Abraham Lincoln was inaugurated as President of the United States in 1861, and he believed and stated that the paramount objective of the Civil War was to save the Union rather than to save or destroy slavery; and
WHEREAS, President Lincoln also stated his wish that all men everywhere could be free, thus adding to a growing anticipation among slaves that their ultimate liberty was at hand; and
WHEREAS, in 1862, the first clear signs that the end of slavery was imminent came when laws abolishing slavery in the territories…

The Atlantic Monthly | January/February 2004

STATE OF THE UNION [Governance]. Nation-Building 101. The chief threats to us and to world order come from weak, collapsed, or failed states. Learning how to fix such states, and building necessary political support at home, will be a defining issue for America in the century ahead. By Francis Fukuyama. "I don't think our troops ought to be used for what's called nation-building. I think our troops ought to be used to fight and win war." (George W. Bush, October 11, 2000) "We meet here during a crucial period in the history of our nation, and of the civilized world. Part of that history was written by others; the rest will be written by us ... Rebuilding Iraq will require a sustained commitment from many nations, including our own: we will remain in Iraq as long as necessary, and not a day more." (italics added) (George W. Bush, February 26, 2003) The transformation of George W. Bush from a presidential candidate opposed to nation-building into a President committed to writing the history of an entire troubled part of the world is one of the most dramatic illustrations we have of how the September 11 terrorist attacks changed American politics. Under Bush's presidency the United States has taken responsibility for the stability and political development of two Muslim countries: Afghanistan and Iraq. A lot now rides on our ability not just to win wars but to help create self-sustaining democratic political institutions and robust market-oriented economies, and not only in these two countries but throughout the Middle East.

How America Went Haywire

The Atlantic, September 2017 (theatlantic.com). Cover lines: Conspiracy Theories. Fake News. Magical Thinking. How America Went Haywire, by Kurt Andersen. Also on the cover: Have Smartphones Destroyed a Generation?; Why Women Bully Each Other at Work, by Olga Khazan; The Rise of the Violent Left; Jane Austen Is Everything; The Whitest Music Ever; John le Carré Goes Back Into the Cold.

The US Perspective on NATO Under Trump: Lessons of the Past and Prospects for the Future

Joyce P. Kaufman. Setting the stage: With a new and unpredictable administration taking the reins of power in Washington, the United States’ future relationship with its European allies is unclear. The European allies are understandably concerned about what the change in the presidency will mean for the US relationship with NATO and the security guarantees that have been in place for almost 70 years. These concerns are not without foundation, given some of the statements Trump made about NATO during the presidential campaign, and his description of NATO on 15 January 2017, just days before his inauguration, as ‘obsolete’. That comment, made in a joint interview with The Times of London and the German newspaper Bild, further exacerbated tensions between the United States and its closest European allies, although Trump did claim that the alliance is ‘very important to me’.1 The claim that it is obsolete rested on Trump’s incorrect assumption that the alliance has not been engaged in the fight against terrorism, a position belied by NATO’s support of the US conflict in Afghanistan. One of the most striking observations about Trump’s statements on NATO is that they are contradicted by comments made in Senate confirmation hearings by General James N. Mattis (retired), recently confirmed as Secretary of Defense, who described the alliance as ‘essential for Americans’ security’, and by Rex Tillerson, now the Secretary of State.2 It is important to note that the concerns about the future relationships between the United States and its NATO allies are not confined to European governments and policy analysts.

The Atlantic at Marina Bay

The City. The Harbor. ...and in between... The Atlantic at Marina Bay: Marina Bay’s Newest Luxury Condominium Complex. Marina Bay offers an unparalleled lifestyle with its elegant residences, charming boardwalk, renowned restaurants, and one of the largest full-service marinas south of Boston. From recreation to shopping, there is something for everyone. Only minutes from Boston, Marina Bay is a luxury waterfront community featuring a charming boardwalk. Nearby Quincy, an active marine community with three yacht clubs, also boasts some 27 miles of coastline with nearby beaches and walking trails. It is also a golfer's haven with several courses, including the new Granite Links Golf Club, which features stunning views of the spectacular Boston skyline. Marina Bay on Boston Harbor (New England's largest full-service marina) offers many amenities, including:
• Free Parking
• High Speed Capacity Fuel Dock
• Marina Bay’s Annual Fishing Tournament "Gone Fishin"
• Controlled Access to Docks
• Casual and Upscale Dining
Restaurants are plentiful at Marina Bay, offering dockside seating with panoramic views of the yacht basin: Siros for fine Italian dining; Skyline for an eclectic menu; Captain Fishbones Seafood for lunch; Krabby Joe's for a casual atmosphere to relax with friends; Waterworks and Waterclub for nightly entertainment and dancing. In addition to the many restaurants, shops abound, from major retailers to one-of-a-kind boutiques and day spas. Each year millions of tourists visit Boston to take in its sights, stroll along the Freedom Trail, watch the Regatta on the Charles River…

Trump, American Hegemony and the Future of the Liberal International Order

Doug Stokes. The postwar liberal international order (LIO) has been largely a US creation. Washington’s consensus, geopolitically bound to the western ‘core’ during the Cold War, went global with the dissolution of the Soviet Union and the advent of systemic unipolarity. Many criticisms can be levelled at US leadership of the LIO, not least in respect of its claim to moral superiority, albeit based on laudable norms such as human rights and democracy. For often cynical reasons the US backed authoritarian regimes throughout the Cold War, pursued disastrous forms of regime change after its end, and has been deeply hostile to alternative (and often non-western) civilizational orders that reject its dogmas. Its successes, however, are manifold. Its ‘empire by invitation’ has helped secure a durable European peace, soften east Asian security dilemmas, and underwrite the strategic preconditions for complex and pacifying forms of global interdependence. Despite tactical differences between global political elites, a postwar commitment to maintain the LIO, even in the context of deep structural shifts in international relations, has remained resolute until today. The British vote to leave the EU (arguably as much a creation of the United States as of its European members) has weakened one of the most important institutions of the broader US-led LIO. More destabilizing to the foundations of the LIO has been the election of President Trump. His administration has actively…

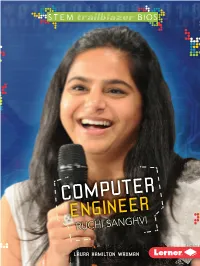

Computer Engineer Ruchi Sanghvi

STEM Trailblazer Bios: Computer Engineer Ruchi Sanghvi, by Laura Hamilton Waxman. Lerner Publications, Minneapolis. For Caleb, my computer wizard. Copyright © 2015 by Lerner Publishing Group, Inc. All rights reserved. International copyright secured. Lerner Publications Company, a division of Lerner Publishing Group, Inc., 241 First Avenue North, Minneapolis, MN 55401 USA. For reading levels and more information, look up this title at www.lernerbooks.com. Content Consultant: Robert D. Nowak, PhD, McFarland-Bascom Professor in Engineering, Electrical and Computer Engineering, University of Wisconsin–Madison. Library of Congress Cataloging-in-Publication Data: Waxman, Laura Hamilton. Computer engineer Ruchi Sanghvi / Laura Hamilton Waxman. pages cm. (STEM trailblazer bios) Includes index. ISBN 978–1–4677–5794–2 (lib. bdg. : alk. paper); ISBN 978–1–4677–6283–0 (eBook). 1. Sanghvi, Ruchi, 1982– --Juvenile literature. 2. Computer engineers--United States--Biography--Juvenile literature. 3. Women computer engineers--United States--Biography--Juvenile literature. I. Title. QA76.2.S27W39 2015 621.39092--dc23 [B] 2014015878. Manufactured in the United States of America, 1 – PC – 12/31/14. The images in this book are used with the permission of: picture alliance/Jan Haas/Newscom, p. 4; © Dinodia Photos/Alamy, p. 5; © Tadek Kurpaski/flickr.com (CC BY 2.0), p. …

Coders: Notes

Notes
1. THE SOFTWARE UPDATE THAT CHANGED REALITY
1 Adam Fisher, Valley of Genius: The Uncensored History of Silicon Valley (As Told by the Hackers, Founders, and Freaks Who Made It Boom) (New York: Twelve, 2017), 357.
2 Fisher, Valley of Genius, 361.
3 This section is based on my interview with Sanghvi and on several books, articles, and videos about the early days of Facebook, including: Daniela Hernandez, ‘Facebook’s First Female Engineer Speaks Out on Tech’s Gender Gap’, Wired, December 12, 2014, https://www.wired.com/2014/12/ruchi-qa/; Mark Zuckerberg, ‘Live with the Original News Feed Team’, Facebook video, 25:36, September 6, 2016, https://www.facebook.com/zuck/videos/10103087013971051; David Kirkpatrick, The Facebook Effect: The Inside Story of the Company That Is Connecting the World (New York: Simon & Schuster, 2011); INKtalksDirector, ‘Ruchi Sanghvi: From Facebook to Facing the Unknown’, YouTube, 11:50, March 20, 2012, https://www.youtube.com/watch?v=64AaXC00bkQ; TechCrunch, ‘TechFellow Awards: Ruchi Sanghvi’, TechCrunch video, 4:40, March 4, 2012, https://techcrunch.com/video/techfellow-awards-ruchi-sanghvi/517287387/; FWDus2, ‘Ruchi’s Story’, YouTube, 1:24, May 10, 2013, https://www.youtube.com/watch?v=i86ibVt1OMM; all videos accessed August 16, 2018.
4 Clare O’Connor, ‘Video: Mark Zuckerberg in 2005, Talking Facebook (While Dustin Moskovitz Does a Keg Stand)’, Forbes, August 15, 2011, accessed October 7, 2018, https://www.forbes.com/sites/clareoconnor/2011/08/15/video-mark-Zuckerberg-in-2005-talking-facebook-while-dustin-moskovitz-does-a-keg-stand/#629cb86571a5.