A Measure of Research Taste

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Understanding the Initiation of the Publishing Process

Part 1 of a 3-part Series: Writing for a Biomedical Publication UNDERSTANDING THE INITIATION OF THE PUBLISHING PROCESS Deana Hallman Navarro, MD Maria D. González Pons, PhD Deana Hallman Navarro, MD BIOMEDICAL SCIENTIFIC PUBLISHING Publish New knowledge generated from scientific research must be communicated if it is to be relevant Scientists have an obligation to the provider of funds to share the findings with the external research community and to the public Communication Personal communication Public lectures, seminars, e-publication, press conference or new release ◦ Unable to critically evaluate its validity Publication ◦ Professional scientific journals – 1665 ◦ 1ry channel for communication of knowledge ◦ Arbiter of authenticity/legitimacy of knowledge Responsibility shared among authors, peer reviewers, editors and scientific community How to Communicate Information Publications, brief reports, abstracts, case reports, review article, letter to the editor, conference reports, book reviews… 1ry full-length research publication-1968 ◦ Definition: the first written disclosure of new knowledge that would enable the reader to: Repeat exactly the experiments described To assess fully the observations reported Evaluate the intellectual processes involved Development of the Manuscript To repeat exactly the experiments: ◦ Need a comprehensive, detailed methodology section To assess fully the observations: ◦ Need a very detailed results section With graphs, charts, figures, tables, … And full exposure of hard data Development -

A Comprehensive Framework to Reinforce Evidence Synthesis Features in Cloud-Based Systematic Review Tools

applied sciences Article A Comprehensive Framework to Reinforce Evidence Synthesis Features in Cloud-Based Systematic Review Tools Tatiana Person 1,* , Iván Ruiz-Rube 1 , José Miguel Mota 1 , Manuel Jesús Cobo 1 , Alexey Tselykh 2 and Juan Manuel Dodero 1 1 Department of Informatics Engineering, University of Cadiz, 11519 Puerto Real, Spain; [email protected] (I.R.-R.); [email protected] (J.M.M.); [email protected] (M.J.C.); [email protected] (J.M.D.) 2 Department of Information and Analytical Security Systems, Institute of Computer Technologies and Information Security, Southern Federal University, 347922 Taganrog, Russia; [email protected] * Correspondence: [email protected] Abstract: Systematic reviews are powerful methods used to determine the state-of-the-art in a given field from existing studies and literature. They are critical but time-consuming in research and decision making for various disciplines. When conducting a review, a large volume of data is usually generated from relevant studies. Computer-based tools are often used to manage such data and to support the systematic review process. This paper describes a comprehensive analysis to gather the required features of a systematic review tool, in order to support the complete evidence synthesis process. We propose a framework, elaborated by consulting experts in different knowledge areas, to evaluate significant features and thus reinforce existing tool capabilities. The framework will be used to enhance the currently available functionality of CloudSERA, a cloud-based systematic review tool focused on Computer Science, to implement evidence-based systematic review processes in Citation: Person, T.; Ruiz-Rube, I.; Mota, J.M.; Cobo, M.J.; Tselykh, A.; Dodero, J.M. -

And Should Not, Change Toxic Tort Causation Doctrine

THE MORE WE KNOW, THE LESS INTELLIGENT WE ARE? - HOW GENOMIC INFORMATION SHOULD, AND SHOULD NOT, CHANGE TOXIC TORT CAUSATION DOCTRINE Steve C. Gold* Advances in genomic science are rapidly increasing our understanding of disease and toxicity at the most fundamental biological level. Some say this heralds a new era of certainty in linking toxic substances to human illness in the courtroom. Others are skeptical. In this Article I argue that the new sciences of molecular epidemiology and toxicogenomics will evince both remarkable explanatory power and intractable complexity. Therefore, even when these sciences are brought to bear, toxic tort claims will continue to present their familiar jurisprudential problems. Nevertheless, as genomic information is used in toxic tort cases, the scientific developments will offer courts an opportunity to correct mistakes of the past. Courts will miss those opportunities, however, if they simply transfer attitudes from classical epidemiology and toxicology to molecular and genomic knowledge. Using the possible link between trichloroethylene and a type of kidney cancer as an example, I describe several causation issues where courts can, if they choose, improve doctrine by properly understanding and utilizing genomic information. I. Introduction II. Causation in Toxic Torts Before Genomics A. The Problem B. Classical Sources of Causation Evidence C. The Courts Embrace Epidemiology - Perhaps Too Tightly D. Evidence Meets Substance: Keepers of the Gate E. The Opposing Tendency III. Genomics, Toxicogenomics, and Molecular Epidemiology: The More We Know A. Genetic Variability and "Background" Risk B. Genetic Variability and Exposure Risk C. -

Dean, School of Science

Dean, School of Science School of Science faculty members seek to answer fundamental questions about nature ranging in scope from the microscopic—where a neuroscientist might isolate the electrical activity of a single neuron—to the telescopic—where an astrophysicist might scan hundreds of thousands of stars to find Earth-like planets in their orbits. Their research will bring us a better understanding of the nature of our universe, and will help us address major challenges to improving and sustaining our quality of life, such as developing viable resources of renewable energy or unravelling the complex mechanics of Alzheimer’s and other diseases of the aging brain. These profound and important questions often require collaborations across departments or schools. At the School of Science, such boundaries do not prevent people from working together; our faculty cross such boundaries as easily as they walk across the invisible boundary between one building and another in our campus’s interconnected buildings. Collaborations across School and department lines are facilitated by affiliations with MIT’s numerous laboratories, centers, and institutes, such as the Institute for Data, Systems, and Society, as well as through participation in interdisciplinary initiatives, such as the Aging Brain Initiative or the Transiting Exoplanet Survey Satellite. Our faculty’s commitment to teaching and mentorship, like their research, is not constrained by lines between schools or departments. School of Science faculty teach General Institute Requirement subjects in biology, chemistry, mathematics, and physics that provide the conceptual foundation of every undergraduate student’s education at MIT. The School faculty solidify cross-disciplinary connections through participation in graduate programs established in collaboration with School of Engineering, such as the programs in biophysics, microbiology, or molecular and cellular neuroscience. -

“Hard-Boiled” And/Or “Soft-Boiled” Pedagogy?

Interdisciplinary Contexts of Special Pedagogy NUMBER 16/2017 BOGUSŁAW ŚLIWERSKI Maria Grzegorzewska Pedagogical University in Warsaw “Hard-boiled” and/or “soft-boiled” pedagogy? ABSTRACT : Bogusław Śliwerski, “Hard-boiled” and/or “soft-boiled” pedagogy? Interdisciplinary Contexts of Special Pedagogy, No. 16, Poznań 2017. Pp. 13-28. Adam Mickiewicz University Press. ISSN 2300-391X The article elaborates on the condition of partial moral anomy in the academic environment, in Poland that not only weakens the ethical causal power of scientific staff, but also results in weakening social capital and also in pathologies in the process of scientific promotion. The narration has been subject to a metaphor of eggs (equivalent of types of scientific publications or a contradiction thereof) and their hatching in various types of hens breeding (scientific or pseudo-academic schools) in order to sharpen the conditions and results of academic dishonesty and dysfunction and to notice problems in reviewing scientific degrees and titles’ dissertations. KEY WORDS : science, logology, reviews, scientific publications, pathologies, academic dishonesty, scientists The Republic of Poland’s post-socialist transformation period brings pathologies in various areas of life, including academic. In the years 2002-2015, I participated in the accreditation of academic universities and higher vocational schools and thus, I had the opportunity to closely observe operation thereof and to initially assess their activity. For almost two years I have not been included in the accreditation team, probably due to the publicly formulated criticism of the body, whose authorities manipulate the assessment of units providing education at faculties and employ for related tasks persons of low scientific credibility, including, among others: owners of Slovak postdoctoral academic titles. -

Helpful Tips on How to Get Published in Journals

Helpful tips on how to get published in journals Peter Neijens What you should consider before submitting a paper 2 In general Publishing is as much a social as an intellectual process! Know the standards and expectations Learn to think like a reviewer Think of publishing as persuasive communication Be aware of tricks, but also of pitfalls 3 Preliminary questions Why publish? Basic decisions: authorship, responsibilities, publication types Judging the quality of your paper Finding the “right” outlet 4 Why publish? Discursive argument • Innovative contribution • Of interest to the scientific community Strategic argument • Publish or perish • Issue ownership • Visibility 5 Authorship Inclusion – who is an author? • All involved in the work of paper writing • All involved in the work necessary for the paper to be written • All involved in funding/ grants that made the study possible Order – who is first author? • The person who wrote the paper • The one who did most of the work for the study • The person that masterminded the paper • The most senior of the researchers 6 Responsibilities Hierarchical approach • First author masterminds and writes the paper • Second author contributes analyses, writes smaller parts • Third author edits, comments, advises Egalitarian approach • All authors equally share the work: alphabetical order • Authors alternate with first authorship in different papers 7 Publication plan Quantity or quality Aiming low or high Timing Topic sequence Focus: least publishable unit (LPU) 8 LPU / MPU Least publishable -

Precision Medicine Initiative: Building a Large US Research Cohort

Precision Medicine Initiative: Building a Large U.S. Research Cohort February 11-12, 2015 PARTICIPANT LIST Goncalo Abecasis, D. Phil. Philip Bourne, Ph.D. Professor of Biostatistics Associate Director for Data Science University of Michigan, Ann Arbor Office of the Director National Institutes of Health Christopher Austin, M.D. Director Murray Brilliant, Ph.D. National Center for Advancing Translational Sciences Director National Institutes of Health Center for Human Genetics Marshfield Clinic Research Foundation Vikram Bajaj, Ph.D. Chief Scientist Greg Burke, M.D., M.Sc. Google Life Sciences Professor and Director Wake Forest School of Medicine Dixie Baker, Ph.D. Wake Forest University Senior Partner Martin, Blanck and Associates Antonia Calafat, Ph.D. Chief Dana Boyd Barr, Ph.D. Organic Analytical Toxicology Branch Professor, Exposure Science and Environmental Health Centers for Disease Control and Prevention Rollins School of Public Health Emory University Robert Califf, M.D. Vice Chancellor for Clinical and Translational Research Jonathan Bingham, M.B.A. Duke University Medical Center Product Manager, Genomics Google, Inc. Rex Chisholm, Ph.D. Adam and Richard T. Lind Professor of Medical Eric Boerwinkle, Ph.D. Genetics Professor and Chair Vice Dean for Scientific Affairs and Graduate Studies Human Genetics Center Associate Vice President for Research University of Texas Health Science Center Northwestern University Associate Director Human Genome Sequencing Center Rick Cnossen, M.S. Baylor College of Medicine Director Global Healthcare Solutions Erwin Bottinger, M.D. HIMSS Board of Directors Professor PCHA/Continua Health Alliance Board of Directors The Charles Bronfman Institute for Personalized Intel Corporation Medicine Icahn School of Medicine at Mount Sinai - 1 - Francis Collins, M.D., Ph.D. -

Count Down: Six Kids Vie for Glory at the World's Toughest Math

Count Down Six Kids Vie for Glory at the World's TOUGHEST MATH COMPETITION STEVE OLSON author of MAPPING HUMAN HISTORY, National Book Award finalist $24.00 EACH SUMMER SIX MATH WHIZZES selected from nearly a half million American teens compete against the world's best problem solvers at the International Mathematical Olympiad. Steve Olson, whose Mapping Human History was a National Book Award finalist, follows the members of a U.S. team from their intense tryouts to the Olympiad's nail-biting final rounds to discover not only what drives these extraordinary kids but what makes them both unique and typical. In the process he provides fascinating insights into the creative process, human intelligence and learning, and the nature of genius. Brilliant, but defying all the math-nerd stereotypes, these athletes of the mind want to excel at whatever piques their curiosity, and they are curious about almost everything: music, games, politics, sports, literature. One team member is ardent about water polo and creative writing. Another plays four musical instruments. For fun and entertainment during breaks, the Olympians invent games of mind-boggling difficulty. Though driven by the glory of winning this ultimate math contest, in many ways these kids are not so different from other teenagers, finding pure joy in indulging their personal passions. Beyond the Olympiad, Steve Olson sheds light on such questions as why Americans feel so queasy about math, why so few girls compete in the subject, and whether or not talent is innate. Inside the cavernous gym where the competition takes place, Count Down reveals a fascinating subculture and its engaging, driven inhabitants. -

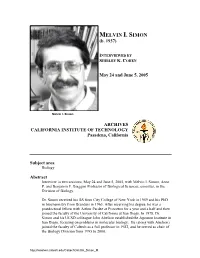

Interview with Melvin I. Simon

MELVIN I. SIMON (b. 1937) INTERVIEWED BY SHIRLEY K. COHEN May 24 and June 5, 2005 Melvin I. Simon ARCHIVES CALIFORNIA INSTITUTE OF TECHNOLOGY Pasadena, California Subject area Biology Abstract Interview in two sessions, May 24 and June 5, 2005, with Melvin I. Simon, Anne P. and Benjamin F. Biaggini Professor of Biological Sciences, emeritus, in the Division of Biology. Dr. Simon received his BS from City College of New York in 1959 and his PhD in biochemistry from Brandeis in 1963. After receiving his degree, he was a postdoctoral fellow with Arthur Pardee at Princeton for a year and a half and then joined the faculty of the University of California at San Diego. In 1978, Dr. Simon and his UCSD colleague John Abelson established the Agouron Institute in San Diego, focusing on problems in molecular biology. He (along with Abelson) joined the faculty of Caltech as a full professor in 1982, and he served as chair of the Biology Division from 1995 to 2000. http://resolver.caltech.edu/CaltechOH:OH_Simon_M In this interview, he discusses his education in Manhattan’s Yeshiva High School (where science courses were taught by teachers from the Bronx High School of Science), at CCNY, and as a Brandeis graduate student working with Helen Van Vunakis on bacteriophage. Recalls his unsatisfactory postdoc experience at Princeton and his delight at arriving at UC San Diego, where molecular biology was just getting started. Discusses his work on bacterial organelles; recalls his and Abelson’s vain efforts to get UCSD to back a full-scale initiative in molecular biology and their subsequent founding of their own institute. -

Boston, Cambridge Are Ground Zero for Life Sciences

OPINION | JUAN ENRIQUEZ Boston, Cambridge are ground zero for life sciences BOYDEN PHOTO BY DOMINICK REUTER; LINDQUIST BY JOHN SOARES/WHITEHEAD INSTITUTE; GLOBE STAFF PHOTOS Top row: Robert Langer, Jack Szostak, and George Church. Bottom: Susan Lindquist and Edward S. Boyden. MARCH 16, 2015 BOSTON-CAMBRIDGE is ground zero for the development and deployment of many of the bleeding edge life science discoveries and technologies. One corner alone, Vassar and Main Street in Cambridge, will likely generate 1 to 2 percent of the future world GDP. On a per capita basis, nowhere else comes close in terms of research grants, patents, and publications that drive new gene code, which in turn alters the evolution of bacteria, plants, animals, and ourselves. At MIT, Bob Langer is generating the equivalent of a small country’s economy out of a single lab; his more than 1,080 patents have helped launch or enabled more than 300 pharma, chemical, biotech, and medical device companies. At Harvard, George Whitesides’ 12 companies have generated a market capitalization of over $20 billion. Harvard Med’s resident genius-enfant terrible, George Church, is the brains behind dozens and dozens of successful startups. (All this of course in addition to these folks being at the very top of their academic fields). In terms of the most basic questions about life itself, Ting Wu takes billions of lines of gene code across all organisms and meticulously compares each and every gene, looking for highly conserved gene code. She is narrowing in on exactly what DNA sequences are essential to all life forms, from bacteria through plants, animals, and even politicians. -

A Large-Scale Study of Health Science Frandsen, Tove Faber; Eriksen, Mette Brandt; Hammer, David Mortan Grøne; Buck Christensen, Janne

University of Southern Denmark Fragmented publishing a large-scale study of health science Frandsen, Tove Faber; Eriksen, Mette Brandt; Hammer, David Mortan Grøne; Buck Christensen, Janne Published in: Scientometrics DOI: 10.1007/s11192-019-03109-9 Publication date: 2019 Document version: Submitted manuscript Citation for published version (APA): Frandsen, T. F., Eriksen, M. B., Hammer, D. M. G., & Buck Christensen, J. (2019). Fragmented publishing: a large-scale study of health science. Scientometrics, 119(3), 1729–1743. https://doi.org/10.1007/s11192-019-03109-9 Go to publication entry in University of Southern Denmark's Research Portal Terms of use This work is brought to you by the University of Southern Denmark. Unless otherwise specified it has been shared according to the terms for self-archiving. If no other license is stated, these terms apply: • You may download this work for personal use only. • You may not further distribute the material or use it for any profit-making activity or commercial gain • You may freely distribute the URL identifying this open access version If you believe that this document breaches copyright please contact us providing details and we will investigate your claim. Please direct all enquiries to [email protected] Download date: 29. Sep. 2021 Fragmented publishing: a large-scale study of health science Tove Faber Frandsen1, Mette Brandt Eriksen2, David Mortan Grøne Hammer3,4, Janne Buck Christensen5 1 University of Southern Denmark, Department of Design and Communication, Universitetsparken 1, 6000 Kolding, Denmark, ORCID 0000-0002-8983-5009 2 The University Library of Southern Denmark, University of Southern Denmark, Campusvej 55, 5230 Odense M, Denmark. -

Programmers, Professors, and Parasites: Credit and Co-Authorship in Computer Science

Sci Eng Ethics (2009) 15:467–489 DOI 10.1007/s11948-009-9119-4 ORIGINAL PAPER Programmers, Professors, and Parasites: Credit and Co-Authorship in Computer Science Justin Solomon Received: 21 September 2008 / Accepted: 27 January 2009 / Published online: 27 February 2009 © Springer Science+Business Media B.V. 2009 Abstract This article presents an in-depth analysis of past and present publishing practices in academic computer science to suggest the establishment of a more consistent publishing standard. Historical precedent for academic publishing in computer science is established through the study of anecdotes as well as statistics collected from databases of published computer science papers. After examining these facts alongside information about analogous publishing situations and standards in other scientific fields, the article concludes with a list of basic principles that should be adopted in any computer science publishing standard. These principles would contribute to the reliability and scientific nature of academic publications in computer science and would allow for more straightforward discourse in future publications. Keywords Co-authorship · Computer science research · Publishing In November 2002, a team of computer scientists, engineers, and other researchers from IBM and the Lawrence Livermore National Laboratory presented a conference paper announcing the development of a record-breaking supercomputer. This supercomputer, dubbed the BlueGene/L, would sport a “target peak processing power” of 360 trillion floating-point operations every second [1], enough to simulate the complexity of a mouse’s brain [2]. While the potential construction of the BlueGene/L was a major development for the field of supercomputing, the paper announcing its structure unwittingly suggested new industry standards for sharing co-authorship credit in the field of computer science.