Revealing the Detailed Lineage of Script Outputs Using Hybrid Provenance

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Annex 2: List of tested and analyzed data sharing tools (non-exhaustive) Below are the specifications of the tools surveyed, as of February 2015, with some updates from April 2016. The tools selected in the context of EU BON are available in the main text of the publication and are described in more detail. This list is also available on the EU BON Helpdesk website, where it will be regularly updated as needed. Additional lists are available through the GBIF resources page, the DataONE software tools catalogue, the BioVel BiodiversityCatalogue and the BDTracker. A.1 GBIF Integrated Publishing Toolkit (IPT) Main usage, purpose, selected examples: The Integrated Publishing Toolkit is a free open source software tool written in Java which is used to publish and share biodiversity data sets and metadata through the GBIF network. Designed for interoperability, it enables the publishing of content in databases or text files using open standards, namely, the Darwin Core and the Ecological Metadata Language. It also provides a 'one-click' service to convert data set metadata into a draft data paper manuscript for submission to a peer-reviewed journal. Currently, the IPT supports three core types of data: checklists, occurrence datasets and sample based data (plus datasets at metadata level only). The IPT is a community-driven tool. Core development happens at the GBIF Secretariat but the coding, documentation, and internationalization are a community effort. New versions incorporate the feedback from the people who actually use the IPT. In this way, users can help get the features they want by becoming involved. The user interface of the IPT has so far been translated into six languages: English, French, Spanish, Traditional Chinese, Brazilian Portuguese, Japanese (Robertson et al, 2014). -

DataONE Operations Documentation Release 2.0.1

DataONE Operations Documentation Release 2.0.1 DataONE Jul 01, 2020 Contents: 1 Contents; 1.1 Project Resources; 1.2 Member Nodes Resources; 1.3 Coordinating Nodes; 1.4 Development; 1.5 DataONE Environments; 1.6 Glossary; 2 Indices and Tables; Index. Operations documentation for the DataONE project and deployed infrastructure. Chapter 1: Contents. 1.1 Project Resources. 1.1.1 Communications. Real Time: DataONE uses Slack for messaging between project participants. Anyone may join the DataONE Slack organization by requesting an invite at: https://slack.dataone.org/ Video Conferencing: DataONE uses Zoom for video conferencing within the project and for webinars. Project participants needing Zoom for project purposes should contact support@dataone.org. Discussion Lists: DataONE uses Google Groups for email list management. The DataONE.org Google Groups are managed as a dataone.org subdomain of nceas.ucsb.edu and include the following lists (address, then description): [email protected]: For contacting administrators of the DataONE infrastructure. [email protected]: A voluntary participation mailing list for disseminating information to the community with interest in DataONE. [email protected]: Internal communications for the DataONE core development team. [email protected]: Member Node forum, for discussion related to Member Nodes and disseminating information to DataONE Member Node operators. [email protected]: General development related discussion. [email protected]: Private list for the DataONE User Group Committee members. -

Panel Discussion Presentation: "DataONE: Facilitating eScience Through Collaboration"

University of Massachusetts Medical School eScholarship@UMMS University of Massachusetts and New England Area Librarian e-Science Symposium 2011 e-Science Symposium Apr 6th, 12:00 AM - 11:15 AM Panel Discussion presentation: "DataONE: Facilitating eScience through Collaboration" Suzie Allard University of Tennessee Follow this and additional works at: https://escholarship.umassmed.edu/escience_symposium Part of the Library and Information Science Commons This work is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 3.0 License. Repository Citation Allard, S. (2011). Panel Discussion presentation: "DataONE: Facilitating eScience through Collaboration". University of Massachusetts and New England Area Librarian e-Science Symposium. https://doi.org/10.13028/9dck-z235. Retrieved from https://escholarship.umassmed.edu/escience_symposium/2011/program/9 This material is brought to you by eScholarship@UMMS. It has been accepted for inclusion in University of Massachusetts and New England Area Librarian e-Science Symposium by an authorized administrator of eScholarship@UMMS. For more information, please contact [email protected]. DataONE: Facilitating eScience through Collaboration Suzie Allard, Ph.D. University of Tennessee Presented at the Third University of Massachusetts & New England Area Librarian eScience Symposium 6 April 2011 A prominent feature of e-Science is the generation of immense data sets that can be rapidly disseminated to other researchers via the internet. -- Donna Kafel, MLIS Project Coordinator for the New England e-Science Portal for Librarians “e-Science and Its Relevance for Research Libraries” Environmental Science Challenges 3 Vision: Provide universal access to data about life on earth and the environment that sustains it. -

Introduction to APIs

Reading data directly into your analysis script: Introduction to APIs TALK ABSTRACT: Do you write scripts to analyze your data? An Application Programming Interface (API) can provide direct access to data and metadata in online repositories, saving you time and increasing the reproducibility of your analyses. This talk will provide an introduction in R to using APIs from several repositories of ecological data. RECORDING SCRIPT: Hello, my name is Erica Krimmel and this career central talk will provide an introduction to APIs, including how they can be used for reading data directly into an analysis script. RECORDING SCRIPT: This talk is brought to you by the ESA Data Help Desk, a collaboration between The Arctic Data Center, the Consortium of Universities for the Advancement of Hydrologic Science (CUAHSI), DataONE, the Environmental Data Initiative (EDI), the Global Biodiversity Information Facility (GBIF), iDigBio, NEON, and Neotoma. You can find out more about the Data Help Desk and see a full list of our activities at the 2020 ESA Career Central by following the bit.ly link on this slide. You can also find us on Twitter this week using the hashtag #DataHelpDesk. What is an API? RECORDING SCRIPT: Let’s begin with the basics. An API is an application programming interface, which is essentially a way for an organization to allow external users to interact with their systems. A really common scenario is when an organization, like Google, has a system, like Google Maps, that lots of external users, like this AllTrails app, want to use. It would be risky to provide external users with direct access to the system and so the organization makes access available via an API. -

Latest Onedrive for Windows Setup

DataONE Python Products unknown 2019-07-03 Contents: 1 Utilities (for end users): 1.1 DataONE Command Line Utilities and Examples; 1.2 DataONE ONEDrive; 1.3 DataONE Command Line Interface. 2 Member Node (for Member Node partners): 2.1 Generic Member Node (GMN). 3 Python Libraries (for software developers): 3.1 DataONE Common Library for Python; 3.2 DataONE Client Library for Python; 3.3 DataONE Science Metadata Validator for Python; 3.4 DataONE Test Utilities; 3.5 DataONE Dev Tools; 3.6 DataONE CSW Harvester. 4 Contents: 4.1 DataONE Utilities / Examples; 4.2 DataONE ONEDrive; 4.3 DataONE Command Line Interface (CLI); 4.4 Generic Member Node (GMN); 4.5 Indices and tables; 4.6 DataONE Common Library for Python; 4.7 Indices and tables; 4.8 DataONE Client Library for Python; 4.9 Indices and tables; 4.10 DataONE Science -

DataONE: Enabling Data-Intensive Biological and Environmental Research Through Cyberinfrastructure

DataONE: Enabling Data-Intensive Biological and Environmental Research through Cyberinfrastructure Leadership Team: William Michener1, Suzie Allard2, John Cobb3, Robert Cook3, Patricia Cruse4, Mike Frame5, Stephanie Hampton6, Vivian Hutchison5, Matthew Jones6, Steve Kelling7, Rebecca Koskela1, Carol Tenopir2, Dave Vieglais8, Todd Vision9, Bruce Wilson2 Co‐Investigators: Paul Allen7, Peter Buneman10, Randy Butler11, Ewa Deelman12, David DeRoure13, Cliff Duke14, Carole Goble15, Donald Hobern16, Peter Honeyman17, Jeffery Horsburgh18, John Kunze4, Bertram Ludaescher19, Maribeth Manoff2, Line Pouchard3, Robert Sandusky20, Ryan Scherle9, Mark Servilla1, Jake Weltzin5 1University of New Mexico; 2University of Tennessee; 3Oak Ridge National Laboratory; 4University of California ‐ California Digital Library; 5U.S. Geological Survey; 6National Center for Ecological Analysis and Synthesis ‐ University of California ‐ Santa Barbara; 7Cornell University; 8University of Kansas; 9National Evolutionary Synthesis Center, University of North Carolina; 10University of Edinburgh; 11University of Illinois ‐ Urbana Champaign; 12University of Southern California; 13University of Southampton; 14Ecological Society of America; 15University of Manchester; 16Atlas of Living Australia; 17University of Michigan; 18Utah State University; 19University of California –Davis; 20University of Illinois –Chicago Abstract: Addressing the Earth's environmental problems requires that we change the ways that we do science; DataONE is designed to be the foundation for new innovative -

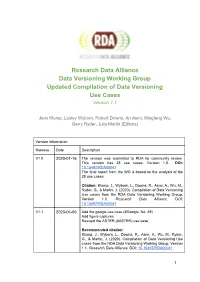

Research Data Alliance Data Versioning Working Group Updated Compilation of Data Versioning Use Cases Version 1.1

Research Data Alliance Data Versioning Working Group Updated Compilation of Data Versioning Use Cases Version 1.1 Jens Klump, Lesley Wyborn, Robert Downs, Ari Asmi, Mingfang Wu, Gerry Ryder, Julia Martin (Editors) Version information Release Date Description V1.0 2020-01-16 The version was submitted to RDA for community review. This version has 38 use cases. Version 1.0. DOI: 10.15497/RDA00041 The final report from the WG is based on the analysis of the 38 use cases. Citation: Klump, J., Wyborn, L., Downs, R., Asmi, A., Wu, M., Ryder, G., & Martin, J. (2020). Compilation of Data Versioning Use cases from the RDA Data Versioning Working Group. Version 1.0. Research Data Alliance. DOI: 10.15497/RDA00041 V1.1 2020-04-06 Add the google use case (#Google, No. 39) Add figure captures Revised the ASTER (#ASTER) use case Recommended citation: Klump, J., Wyborn, L., Downs, R., Asmi, A., Wu, M., Ryder, G., & Martin, J. (2020). Compilation of Data Versioning Use cases from the RDA Data Versioning Working Group. Version 1.1. Research Data Alliance. DOI: 10.15497/RDA00041 1 Foreword Data versioning is a fundamental element to ensuring the reproducibility of research. Work in other Research Data Alliance (RDA) groups on data provenance and data citation, as well as the W3C Dataset Exchange Working Group (DXWG), have highlighted that definitions of data versioning concepts and recommended practices are still missing. An important driver to more closely examine data versioning practices came from the work of the RDA Working Group (WG) on Data Citation, whose final report recognised the need for systematic data versioning practices. -

DataONE: Facilitating eScience Through Collaboration

Journal of eScience Librarianship Volume 1 Issue 1 Article 3 2012-02-14 DataONE: Facilitating eScience through Collaboration Suzie Allard University of Tennessee Let us know how access to this document benefits you. Follow this and additional works at: https://escholarship.umassmed.edu/jeslib Part of the Library and Information Science Commons Repository Citation Allard S. DataONE: Facilitating eScience through Collaboration. Journal of eScience Librarianship 2012;1(1): e1004. https://doi.org/10.7191/jeslib.2012.1004. Retrieved from https://escholarship.umassmed.edu/jeslib/vol1/iss1/3 Creative Commons License This work is licensed under a Creative Commons Attribution 4.0 License. This material is brought to you by eScholarship@UMMS. It has been accepted for inclusion in Journal of eScience Librarianship by an authorized administrator of eScholarship@UMMS. For more information, please contact [email protected]. JESLIB 2012; 1(1): 4-17 doi:10.7191/jeslib.2012.1004 DataONE: Facilitating eScience Through Collaboration Suzie Allard University of Tennessee, Knoxville, TN, USA Abstract Objective: To introduce DataONE, a multi-institutional, multinational, and interdisciplinary collaboration that is developing the cyberinfrastructure and organizational structure to support the full information lifecycle of biological, ecological, and environmental data and tools to be used by researchers, educators, and the public at large. Setting: The dynamic world of data intensive science at the point it interacts with the grand [...] are the primary focus of the discussion. Results: DataONE is highly collaborative. This is a result of its cyberinfrastructure architecture, its interdisciplinary nature, and its organizational diversity. The organizational structure of an agile management team, diverse leadership team, and productive working groups provides for a successful collaborative environment where substantial contributions to the DataONE mission have been made by a large number of people. -

Best Practices for Preparing Environmental Data Sets to Share and Archive

Advertise your data using datacasting tools To make your data available using standard and open software tools you should: Use standard language and terms to clearly communicate to others that your data are available for reuse and that you expect ethical and appropriate use of your data Use an open source datacasting (RSS or other type) service that enables you to advertise your data and the options for others to obtain access to it (RSS, GeoRSS, DatacastingRSS) Description Rationale: Additional Information: Examples: http://www.nsidc.org/libre/ http://datacasting.jpl.nasa.gov/ Tags: access, data services, discover Assign descriptive file names File names should reflect the contents of the file and include enough information to uniquely identify the data file. File names may contain information such as project acronym, study title, location, investigator, year(s) of study, data type, version number, and file type. When choosing a file name, check for any database management limitations on file name length and use of special characters. Also, in general, lower-case names are less software and platform dependent. Avoid using spaces and special characters in file names, directory paths and field names. Automated processing, URLs and other systems often use spaces and special characters for parsing text strings. Instead, consider using underscores ( _ ) or dashes ( - ) to separate meaningful parts of file names. Avoid $ % ^ & # | : and similar. If versioning is desired, a date string within the file name is recommended to indicate the version. Avoid using file names such as mydata.dat or 1998.dat. Description Rationale: Clear, descriptive, and unique file names may be important when your data file is combined in a directory or FTP site with your own data files or with the data files of other investigators. -
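
The file-naming advice in the excerpt above can be turned into a quick automated check. The following is only a minimal sketch in Python, not part of the cited best-practices document: the function name `check_file_name`, the `versioned` flag, the list of generic stems, and the assumed eight-digit date format (for example 20150428) are all hypothetical choices made for illustration.

```python
import re

# Spaces plus the special characters the excerpt says to avoid.
DISCOURAGED = re.compile(r"[\s$%^&#|:]")
# Assumed date format: eight digits starting with 19 or 20 (e.g. 20150428).
DATE_STRING = re.compile(r"(?<!\d)(?:19|20)\d{6}(?!\d)")
# Hypothetical list of non-descriptive stems, modeled on mydata.dat and 1998.dat.
GENERIC_STEM = re.compile(r"^(mydata|data|\d{4})$")


def check_file_name(name, versioned=False):
    """Return a list of warnings for a proposed data file name."""
    warnings = []
    stem = name.rsplit(".", 1)[0]  # drop the file extension, if any
    if DISCOURAGED.search(name):
        warnings.append("contains spaces or special characters")
    if name != name.lower():
        warnings.append("contains upper-case letters")
    if GENERIC_STEM.match(stem.lower()):
        warnings.append("name is not descriptive")
    # Only ask for a date string when the caller wants versioned names.
    if versioned and not DATE_STRING.search(stem):
        warnings.append("no date string to indicate the version")
    return warnings
```

An empty list means the name passed every check in this sketch. A name such as `proj_soil_temp_20150428_v1.csv` (a made-up example) passes, while `mydata.dat` is flagged as not descriptive.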

The Environmental Data Initiative (EDI)

The Environmental Data Initiative (EDI) Supporting Curation and Archiving of Environmental Data EDI is funded by the NSF DEB Overview EDI’s mission and approaches - Corinna Gries Data repository and publishing - Duane Costa Data publication workflow support - Colin Smith Outreach and training - Kristin Vanderbilt Data integration support - Margaret O’Brien https://environmentaldatainitiative.org/ https://portal.edirepository.org @EDIgotdata edi-got-data History - we are standing on the shoulders of giants EDI Digital Object Identifiers Minted DataONE Member Node Repository Infrastructure Almost 40 years of LTER data management experience 1980s 2010 2015 Mission and Goals Accelerate the curation, archiving, and dissemination of environmental data Ensure that environmental data are: ● Deposited into a data repository for long-term preservation and data integrity ● Easily discoverable and seamlessly accessible ● Documented with rich science metadata to enable reuse and integration Curation Data curation support for data providers ● Experienced data managers on staff ● Consultation ● Training ● Data Curation Tool Development Archiving EDI’s repository builds on cyber infrastructure developed for LTER Certified and registered as trustworthy repository (re3data, Nature, ESA, others) Data are documented in Ecological Metadata Language standard High quality standards enforced through automated congruence and completeness checking Dissemination DataONE member node Local search interface Digital Object Identifier (DOI) through DataCite Collaborations -

DataONE Personas

University of Tennessee, Knoxville TRACE: Tennessee Research and Creative Exchange DataONE Sociocultural and Usability & Assessment Working Groups Communication and Information 4-28-2015 DataONE Personas UA/SC WG Personas Subgroup Follow this and additional works at: https://trace.tennessee.edu/utk_dataone Part of the Library and Information Science Commons Recommended Citation UA/SC WG Personas Subgroup, "DataONE Personas" (2015). DataONE Sociocultural and Usability & Assessment Working Groups. https://trace.tennessee.edu/utk_dataone/128 This Creative Written Work is brought to you for free and open access by the Communication and Information at TRACE: Tennessee Research and Creative Exchange. It has been accepted for inclusion in DataONE Sociocultural and Usability & Assessment Working Groups by an authorized administrator of TRACE: Tennessee Research and Creative Exchange. For more information, please contact [email protected]. DataONE Personas Developed by the DataONE Socio-cultural and Usability & Assessment Working Groups (SC/UA) Subgroup on Personas Kevin Crowston <[email protected]> Ahrash Bissell <[email protected]> Bruce Grant <[email protected]> Maribeth Manoff <[email protected]> Rebecca Davis <[email protected]> Last edited: 28 April 2015 Text and figures ©2015. Photographs are copyright by their creators. This document is licensed under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License (CC BY-NC-ND). Please cite as: DataONE Socio-cultural and Usability & Assessment Working Groups Subgroup on Personas. (2015). DataONE Personas. Available from: http://dataone.org/persona-methods. Acknowledgements DataONE is a collaboration among many partner organizations. It is funded by the US National Science Foundation (NSF) under a Cooperative Agreement under Grant Numbers 0830944 and 1430508. -

UX Report: DataONE Toolbox for MatLab User-Experience Test Results

University of Tennessee, Knoxville TRACE: Tennessee Research and Creative Exchange DataONE Sociocultural and Usability & Assessment Working Groups Communication and Information 11-2-2015 UX Report: DataONE Toolbox for MatLab User-Experience Test Results UAWG University of Tennessee, Knoxville Follow this and additional works at: https://trace.tennessee.edu/utk_dataone Part of the Library and Information Science Commons Recommended Citation UAWG, "UX Report: DataONE Toolbox for MatLab User-Experience Test Results" (2015). DataONE Sociocultural and Usability & Assessment Working Groups. https://trace.tennessee.edu/utk_dataone/163 This Creative Written Work is brought to you for free and open access by the Communication and Information at TRACE: Tennessee Research and Creative Exchange. It has been accepted for inclusion in DataONE Sociocultural and Usability & Assessment Working Groups by an authorized administrator of TRACE: Tennessee Research and Creative Exchange. For more information, please contact [email protected]. DataONE Toolbox for MatLab User-Experience Test Results Usability and Assessment WorkGroup, DataONE November 2, 2015 The DataONE Usability and Assessment Working Group with members of the Cyberinfrastructure Working Group conducted a user-experience study of the DataONE toolbox for MatLab during the DataONE All-Hands Meeting in October 2015. METHODOLOGY Protocol The user-experience testing of the DataONE toolbox for MatLab is not intended for research but solely as product development. Testing was conducted on October 27, 2015 during the afternoon session of the DataONE All-Hands Meeting. The testing was conducted by Rachel Volentine, CCI-ORNL User-Experience Lab Manager at the University of Tennessee, Knoxville, and members of the Cyberinfrastructure working group, including Matt Jones, Chris Jones, Lauren Walker, and Yang Cao.