CSE 30321 – Lecture 04 – In-Class Example Handout


Recommended publications


1 Introduction

Cambridge University Press 978-0-521-76992-1 - Microprocessor Architecture: From Simple Pipelines to Chip Multiprocessors, Jean-Loup Baer

Modern computer systems built from the most sophisticated microprocessors and extensive memory hierarchies achieve their high performance through a combination of dramatic improvements in technology and advances in computer architecture. Advances in technology have resulted in exponential growth rates in raw speed (i.e., clock frequency) and in the amount of logic (number of transistors) that can be put on a chip. Computer architects have exploited these factors in order to further enhance performance using architectural techniques, which are the main subject of this book.

Microprocessors are over 30 years old: the Intel 4004 was introduced in 1971. The functionality of the 4004 compared to that of the mainframes of that period (for example, the IBM System/370) was minuscule. Today, just over thirty years later, workstations powered by engines such as (in alphabetical order and without specific processor numbers) the AMD Athlon, IBM PowerPC, Intel Pentium, and Sun UltraSPARC can rival or surpass in both performance and functionality the few remaining mainframes and at a much lower cost. Servers and supercomputers are more often than not made up of collections of microprocessor systems.

It would be wrong to assume, though, that the three tenets that computer architects have followed, namely pipelining, parallelism, and the principle of locality, were discovered with the birth of microprocessors. They were all at the basis of the design of previous (super)computers. The advances in technology made their implementations more practical and spurred further refinements.

Instruction Latencies and Throughput for AMD and Intel x86 Processors

Torbjörn Granlund, 2019-08-02 09:05Z

Copyright Torbjörn Granlund 2005–2019. Verbatim copying and distribution of this entire article is permitted in any medium, provided this notice is preserved.

This report is work-in-progress. A newer version might be available here: https://gmplib.org/~tege/x86-timing.pdf

In this short report we present latency and throughput data for various x86 processors. We only present data on integer operations. The data on integer MMX and SSE2 instructions is currently limited. We might present more complete data in the future, if there is enough interest.

There are several reasons for presenting this report:

1. Intel's published data were in the past incomplete and full of errors.
2. Intel did not publish any data for 64-bit operations.
3. To allow straightforward comparison of an important aspect of AMD and Intel pipelines.

The data presented here is the result of extensive timing tests. While we have made an effort to make sure the data is accurate, the reader is cautioned that some errors might have crept in.

1 Nomenclature and notation

LNN means latency for NN-bit operation. TNN means throughput for NN-bit operation. The term throughput is used to mean number of instructions per cycle of this type that can be sustained. That implies that more throughput is better, which is consistent with how most people understand the term. Intel use that same term in the exact opposite meaning in their manuals. The notation "P6 0-E", "P4 F0", etc, are used to save table header space.

45-Year CPU Evolution: One Law and Two Equations

Daniel Etiemble, LRI-CNRS, University Paris Sud, Orsay, France

Abstract— Moore’s law and two equations allow to explain the main trends of CPU evolution since MOS technologies have been used to implement microprocessors.

Keywords—Moore’s law, execution time, CMOS power dissipation.

I. INTRODUCTION

A new era started when MOS technologies were used to build microprocessors. After pMOS (Intel 4004 in 1971) and nMOS (Intel 8080 in 1974), CMOS became quickly the leading technology, used by Intel since 1985 with 80386 CPU. MOS technologies obey an empirical law, stated in 1965 and known as Moore’s law: the number of transistors integrated on a chip doubles every N months. Fig. 1 presents the evolution for DRAM memories, processors (MPU) and three types of read-only memories [1]. The growth rate decreases with years, from [...]

a) IC is the instruction count.
b) CPI is the clock cycles per instruction and IPC = 1/CPI is the instruction count per clock cycle.
c) Tc is the clock cycle time and F = 1/Tc is the clock frequency.

The power dissipation of CMOS circuits is the second equation (2). CMOS power dissipation is decomposed into static and dynamic powers. For dynamic power, Vdd is the power supply, F is the clock frequency, ΣCi is the sum of gate and interconnection capacitances and α is the average percentage of switching capacitances: α is the activity factor of the overall circuit.

$$P_d = P_{d,\mathrm{static}} + \alpha \times \sum C_i \times V_{dd}^2 \times F \qquad (2)$$

II. CONSEQUENCES OF MOORE LAW

A. [...]
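The quantities IC, CPI, and Tc defined in a) to c) are those of the standard CPU execution-time equation, T = IC × CPI × Tc. That is presumably the excerpt's equation (1), but the equation itself does not appear in the excerpt, so treat that form as an assumption. The sketch below evaluates it alongside the power equation (2); the function names and every numeric input are hypothetical and chosen only for illustration.

```python
def execution_time(ic, cpi, f_hz):
    """Execution time T = IC * CPI * Tc, with Tc = 1 / F.

    Assumed form of the excerpt's equation (1), which is not shown there.
    """
    return ic * cpi / f_hz


def cmos_power(p_static, alpha, c_total, vdd, f_hz):
    """Pd = Pd_static + alpha * sum(Ci) * Vdd^2 * F, as in equation (2)."""
    return p_static + alpha * c_total * vdd ** 2 * f_hz


# Hypothetical workload: 2 billion instructions, CPI of 1.5, 3 GHz clock.
t = execution_time(ic=2_000_000_000, cpi=1.5, f_hz=3e9)

# Hypothetical chip: 5 W static power, activity factor 0.1,
# 100 nF total switched capacitance, evaluated at two supply voltages.
p_nominal = cmos_power(p_static=5.0, alpha=0.1, c_total=1e-7, vdd=1.0, f_hz=3e9)
p_lowered = cmos_power(p_static=5.0, alpha=0.1, c_total=1e-7, vdd=0.8, f_hz=3e9)

print(f"execution time: {t:.2f} s")
print(f"power at Vdd = 1.0 V: {p_nominal:.1f} W")
print(f"power at Vdd = 0.8 V: {p_lowered:.1f} W")
```

Because Vdd enters squared, lowering the supply voltage reduces the dynamic term faster than lowering the frequency by the same fraction would.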

CUDA C Best Practices Guide

CUDA C BEST PRACTICES GUIDE
DG-05603-001_v9.0 | June 2018
Design Guide

TABLE OF CONTENTS

Preface
  What Is This Document?
  Who Should Read This Guide?
  Assess, Parallelize, Optimize, Deploy
    Assess
    Parallelize
    Optimize
    Deploy
  Recommendations and Best Practices
Chapter 1. Assessing Your Application
Chapter 2. Heterogeneous Computing
  2.1. Differences between Host and Device
  2.2. What Runs on a CUDA-Enabled Device?
Chapter 3. Application Profiling

Multi-Cycle Datapath Operation

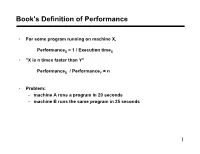

Book's Definition of Performance

• For some program running on machine X, $\text{Performance}_X = 1 / \text{Execution time}_X$
• "X is n times faster than Y": $\text{Performance}_X / \text{Performance}_Y = n$
• Problem:
  – machine A runs a program in 20 seconds
  – machine B runs the same program in 25 seconds

Example

• "Our favorite program runs in 10 seconds on computer A, which has a 400 MHz clock. We are trying to help a computer designer build a new machine B, that will run this program in 6 seconds. The designer can use new (or perhaps more expensive) technology to substantially increase the clock rate, but has informed us that this increase will affect the rest of the CPU design, causing machine B to require 1.2 times as many clock cycles as machine A for the same program. What clock rate should we tell the designer to target?"
• Don't Panic, can easily work this out from basic principles

Now that we understand cycles

• A given program will require
  – some number of instructions (machine instructions)
  – some number of cycles
  – some number of seconds
• We have a vocabulary that relates these quantities:
  – cycle time (seconds per cycle)
  – clock rate (cycles per second)
  – CPI (cycles per instruction): a floating point intensive application might have a higher CPI
  – MIPS (millions of instructions per second): this would be higher for a program using simple instructions

Performance

• Performance is determined by execution time
• Do any of the other variables equal performance?
  – # of cycles to execute program?
  – # of instructions in program?
  – # of cycles per second?
  – average # of cycles per instruction?
  – average # of instructions per second?
• Common pitfall: thinking one of the variables is indicative of performance when it really isn’t.
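Both problems on these slides can be worked from the definitions alone: performance is the reciprocal of execution time, and execution time is cycles divided by clock rate. The following is a minimal Python sketch of that arithmetic; the function names are hypothetical, and the numbers are the ones given in the slides.

```python
def performance_ratio(time_x, time_y):
    """How many times faster X is than Y.

    Performance = 1 / execution time, so
    Performance_X / Performance_Y = time_Y / time_X.
    """
    return time_y / time_x


def required_clock_rate(time_a, clock_a_hz, cycle_factor, time_b):
    """Clock rate machine B needs to finish in time_b seconds.

    cycles_A = time_A * clock_A
    cycles_B = cycle_factor * cycles_A
    clock_B  = cycles_B / time_B
    """
    cycles_a = time_a * clock_a_hz
    cycles_b = cycle_factor * cycles_a
    return cycles_b / time_b


# Machine A takes 20 s, machine B takes 25 s for the same program.
ratio = performance_ratio(time_x=20, time_y=25)

# Machine A: 10 s at 400 MHz. Machine B: 1.2x the cycles, target of 6 s.
clock_b = required_clock_rate(time_a=10, clock_a_hz=400e6,
                              cycle_factor=1.2, time_b=6)

print(f"A is {ratio:.2f} times faster than B")
print(f"Target clock rate for B: {clock_b / 1e6:.0f} MHz")
```

With the slide's numbers, machine A comes out 1.25 times faster than machine B in the first problem, and the designer's target in the second problem is 800 MHz, twice machine A's clock rate.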

CS2504: Computer Organization

CS2504, Spring'2007 ©Dimitris Nikolopoulos

Lecture 4: Evaluating Performance
Instructor: Dimitris Nikolopoulos
Guest Lecturer: Matthew Curtis-Maury

Understanding Performance

• Why do we study performance?
  – Evaluate during design
  – Evaluate before purchasing
  – Key to understanding underlying organizational motivation
• How can we (meaningfully) compare two machines?
  – Performance, Cost, Value, etc
• Main issue: Need to understand what factors in the architecture contribute to overall system performance and the relative importance of these factors
  – Effects of ISA on performance
  – How will hardware change affect performance

Airplane Performance Analogy

| Airplane | Passengers | Range | Speed |
|---|---|---|---|
| Boeing 777 | 375 | 4630 | 610 |
| Boeing 747 | 470 | 4150 | 610 |
| Concorde | 132 | 4000 | 1250 |
| Douglas DC-8-50 | 146 | 8720 | 544 |
| Fighter Jet | 4 | 2000 | 1500 |

• What metric do we use?
  – Concorde is 2.05 times faster than the 747
  – 747 has 1.74 times higher throughput
  – What about cost?
• And the winner is: It Depends!

Throughput vs. Response Time

• Response Time: Execution time (e.g. seconds or clock ticks)
  – How long does the program take to execute?
  – How long do I have to wait for a result?
• Throughput: Rate of completion (e.g. results per second/tick)
  – What is the average execution time of the program?
  – Measure of total work done
• Upgrading to a newer processor will improve: response time
• Adding processors to the system will improve: throughput

Example: Throughput vs. Response Time

Suppose we know that an application that uses both a desktop client and a remote server is limited by network performance.
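The two ratios quoted in the airplane analogy can be reproduced from the table. The sketch below assumes that "faster" compares the Speed column and that "throughput" means passengers multiplied by speed; the slide does not state the throughput definition, but this reading matches the quoted 1.74. The names `planes`, `speed_ratio`, and `throughput` are hypothetical.

```python
# (passengers, speed) per airplane, taken from the table above.
planes = {
    "Boeing 777": (375, 610),
    "Boeing 747": (470, 610),
    "Concorde": (132, 1250),
    "Douglas DC-8-50": (146, 544),
    "Fighter Jet": (4, 1500),
}


def speed_ratio(a, b):
    """How many times faster airplane a is than airplane b."""
    return planes[a][1] / planes[b][1]


def throughput(name):
    """Passenger throughput, assumed here to be passengers * speed."""
    passengers, speed = planes[name]
    return passengers * speed


concorde_vs_747 = speed_ratio("Concorde", "Boeing 747")
b747_vs_concorde = throughput("Boeing 747") / throughput("Concorde")

print(f"Concorde is {concorde_vs_747:.2f} times faster than the 747")
print(f"747 has {b747_vs_concorde:.2f} times higher throughput")
```

The same data ranks the airplanes differently depending on the metric, which is the point of the slide's "It Depends!".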

Analysis of Body Bias Control Using Overhead Conditions for Real Time Systems: a Practical Approach∗

IEICE TRANS. INF. & SYST., VOL.E101–D, NO.4 APRIL 2018, p. 1116

PAPER

Carlos Cesar CORTES TORRES, Nonmember, Hayate OKUHARA, Student Member, Nobuyuki YAMASAKI, Member, and Hideharu AMANO, Fellow

SUMMARY In the past decade, real-time systems (RTSs), which must maintain time constraints to avoid catastrophic consequences, have been widely introduced into various embedded systems and Internet of Things (IoTs). The RTSs are required to be energy efficient as they are used in embedded devices in which battery life is important. In this study, we investigated the RTS energy efficiency by analyzing the ability of body bias (BB) in providing a satisfying tradeoff between performance and energy. We propose a practical and realistic model that includes the BB energy and timing overhead in addition to idle region analysis. This study was conducted using accurate parameters extracted from a real chip using silicon on thin box (SOTB) technology. By using the BB control based on the proposed model, about 34% energy reduction was achieved.

[...] in RTSs. These techniques can improve energy efficiency; however, they often require a large amount of power since they must control the supply voltages of the systems.

Body bias (BB) control is another solution that can improve RTS energy efficiency as it can manage the tradeoff between power leakage and performance without affecting the power supply [4], [5]. Its effect is further endorsed when systems are enabled with silicon on thin box (SOTB) technology [6], which is a novel and advanced fully depleted sili- [...]

Exam 1 Solutions

Midterm Exam
ECE 741 – Advanced Computer Architecture, Spring 2009
Instructor: Onur Mutlu
TAs: Michael Papamichael, Theodoros Strigkos, Evangelos Vlachos
February 25, 2009

EXAM 1 SOLUTIONS

| Problem | Points | Score |
|---|---|---|
| 1 | 40 | |
| 2 | 20 | |
| 3 | 15 | |
| 4 | 20 | |
| 5 | 25 | |
| 6 | 20 | |
| 7 (bonus) | 15 | |
| Total | 140+15 | |

• This is a closed book midterm. You are allowed to have only two letter-sized cheat sheets.
• No electronic devices may be used.
• This exam lasts 1 hour 50 minutes.
• If you make a mess, clearly indicate your final answer.
• For questions requiring brief answers, please provide brief answers. Do not write an essay. You can be penalized for verbosity.
• Please show your work when needed. We cannot give you partial credit if you do not clearly show how you arrive at a numerical answer.
• Please write your name on every sheet.

Problem 1 (Short answers – 40 points)

i. (3 points) A cache has the block size equal to the word length. What property of program behavior, which usually contributes to higher performance if we use a cache, does not help the performance if we use THIS cache?

Spatial locality

ii. (3 points) Pipelining increases the performance of a processor if the pipeline can be kept full with useful instructions. Two reasons that often prevent the pipeline from staying full with useful instructions are (in two words each):

Data dependencies
Control dependencies

iii. (3 points) The reference bit (sometimes called “access” bit) in a PTE (Page Table Entry) is used for what purpose?

Page replacement

The similar function is performed by what bit or bits in a cache’s tag store entry?

Replacement policy bits (e.g.

A Performance Analysis Tool for Intel SGX Enclaves

sgx-perf: A Performance Analysis Tool for Intel SGX Enclaves

Nico Weichbrodt (IBR, TU Braunschweig, Germany), Pierre-Louis Aublin (LSDS, Imperial College London, United Kingdom), Rüdiger Kapitza (IBR, TU Braunschweig, Germany)

ABSTRACT

Novel trusted execution technologies such as Intel’s Software Guard Extensions (SGX) are considered a cure to many security risks in clouds. This is achieved by offering trusted execution contexts, so called enclaves, that enable confidentiality and integrity protection of code and data even from privileged software and physical attacks. To utilise this new abstraction, Intel offers a dedicated Software Development Kit (SDK). While it is already used to build numerous applications, understanding the performance implications of SGX and the offered programming support is still in its infancy. This inevitably leads to time-consuming trial-and-error testing and poses the risk of poor performance.

[...] the provider or need to refrain from offloading their workloads to the cloud. With the advent of Intel’s Software Guard Extensions (SGX) [14, 28], the situation is about to change as this novel trusted execution technology enables confidentiality and integrity protection of code and data – even from privileged software and physical attacks. Accordingly, researchers from academia and industry alike recently published research works in rapid succession to secure applications in clouds [2, 5, 33], enable secure networking [9, 11, 34, 39] and fortify local applications [22, 23, 35].

Core to all these works is the use of SGX provided enclaves, which build small, isolated application compartments designed to [...]

Trends in Processor Architecture

Antonio González, Universitat Politècnica de Catalunya, Barcelona, Spain

1. Past Trends

Processors have undergone a tremendous evolution throughout their history. A key milestone in this evolution was the introduction of the microprocessor, a term that refers to a processor that is implemented in a single chip. The first microprocessor was introduced by Intel under the name of Intel 4004 in 1971. It contained about 2,300 transistors, was clocked at 740 kHz and delivered 92,000 instructions per second while dissipating around 0.5 watts.

Since then, practically every year we have witnessed the launch of a new microprocessor, delivering significant performance improvements over previous ones. Some studies have estimated this growth to be exponential, in the order of about 50% per year, which results in a cumulative growth of over three orders of magnitude in a time span of two decades [12]. These improvements have been fueled by advances in the manufacturing process and innovations in processor architecture. According to several studies [4][6], both aspects contributed in a similar amount to the global gains.

The manufacturing process technology has tried to follow the scaling recipe laid down by Robert N. Dennard in the early 1970s [7]. The basics of this technology scaling consist of reducing transistor dimensions by 30% every generation (typically 2 years) while keeping electric fields constant. The 30% scaling in the dimensions results in doubling the transistor density (doubling transistor density every two years was predicted in 1975 by Gordon Moore and is normally referred to as Moore’s Law [21][22]).
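The two numeric claims in this passage can be checked with a few lines of arithmetic. The sketch below assumes that "50% per year" means a yearly multiplier of 1.5, and that a 30% reduction shrinks each linear dimension to 0.7 of its previous value, so that area scales with the square; the variable names are hypothetical.

```python
import math

# Compound growth: about 50% per year sustained over two decades.
annual_growth = 1.5
years = 20
cumulative_growth = annual_growth ** years
orders_of_magnitude = math.log10(cumulative_growth)

# Dimension scaling: each linear dimension shrinks to 0.7x per generation,
# so transistor area shrinks to 0.7^2 and density grows by the reciprocal.
linear_scale = 0.7
density_gain = 1 / linear_scale ** 2

print(f"cumulative growth over {years} years: {cumulative_growth:.0f}x")
print(f"orders of magnitude: {orders_of_magnitude:.2f}")
print(f"density gain per generation: {density_gain:.2f}x")
```

Under these assumptions the cumulative growth is a little over 3,300 times, consistent with "over three orders of magnitude", and the density gain per generation is roughly 2, consistent with the doubling attributed to Moore's Law.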

Theoretical Peak FLOPS Per Instruction Set on Modern Intel CPUs

Romain Dolbeau, Bull – Center for Excellence in Parallel Programming

Revision 1.41, 2016/10/04 08:49:16

Abstract—It used to be that evaluating the theoretical peak performance of a CPU in FLOPS (floating point operations per seconds) was merely a matter of multiplying the frequency by the number of floating-point instructions per cycles. Today however, CPUs have features such as vectorization, fused multiply-add, hyper-threading or “turbo” mode. In this paper, we look into this theoretical peak for recent full-featured Intel CPUs, taking into account not only the simple absolute peak, but also the relevant instruction sets and encoding and the frequency scaling behavior of current Intel CPUs.

Index Terms—FLOPS

I. INTRODUCTION

High performance computing thrives on fast computations and high memory bandwidth. But before any code or even benchmark is run, the very first number to evaluate a system is the theoretical peak - how many floating-point operations the system can theoretically execute in a given time.

[...] and potentially multiple threads per core. Vector of varying sizes. And more sophisticated instructions. Equation 2 describes a more realistic view, that we will explain in details in the rest of the paper, first in general in section II and then for the specific cases of Intel CPUs: first a simple one from the Nehalem/Westmere era in section III and then the full complexity of the Haswell family in section IV. A complement to this paper titled “Theoretical Peak FLOPS per instruction set on less conventional hardware” [1] covers other computing devices.

[Equation garbled in extraction; recoverable terms: flop per operation, operations per instruction, micro-architecture.]
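The abstract's starting point, frequency multiplied by floating-point instructions per cycle, is easy to sketch, along with one plausible way the listed features multiply into it. The second function below is not the paper's Equation 2, which did not survive extraction; it is an assumed, commonly used product of factors, and all names and numbers are hypothetical.

```python
def simple_peak_flops(freq_hz, fp_instr_per_cycle):
    """The older view from the abstract: frequency * FP instructions per cycle."""
    return freq_hz * fp_instr_per_cycle


def extended_peak_flops(freq_hz, cores, fp_instr_per_cycle,
                        vector_lanes, flops_per_lane):
    """Assumed extension (not the paper's Equation 2).

    Each core issues fp_instr_per_cycle vector instructions per cycle,
    each instruction operates on vector_lanes elements, and each element
    counts flops_per_lane operations (2 for a fused multiply-add).
    """
    return (freq_hz * cores * fp_instr_per_cycle
            * vector_lanes * flops_per_lane)


# Hypothetical scalar CPU: 2.5 GHz, one FP instruction per cycle.
scalar_peak = simple_peak_flops(freq_hz=2.5e9, fp_instr_per_cycle=1)

# Hypothetical vector CPU: 4 cores at 2.5 GHz, 2 FMA instructions per cycle,
# 4 double-precision lanes per vector, 2 flops per lane for FMA.
vector_peak = extended_peak_flops(freq_hz=2.5e9, cores=4,
                                  fp_instr_per_cycle=2,
                                  vector_lanes=4, flops_per_lane=2)

print(f"scalar peak: {scalar_peak / 1e9:.1f} GFLOPS")
print(f"vector peak: {vector_peak / 1e9:.1f} GFLOPS")
```

A product like this ignores frequency scaling under vector load, which the abstract names as one of the effects the paper accounts for, so it should be read as an upper bound under fixed frequency.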

ESC-470: ARM 9 Instruction Set Architecture with Performance

Joe-Ming Cheng, Ph.D.

Introduction

ARM-family processors are positioned among the leaders in key embedded applications. Many presentations and short lectures have already addressed the ARM’s applications and capabilities. In this introduction, we intend to discuss the uniqueness of the ARM instruction set from the performance perspective. This introduction also tries to follow the approaches established by two outstanding textbooks of David Patterson and John Hennessy [PetHen00] [HenPet02].

1.0 ARM Instruction Set Architecture

Processor instruction set architecture (ISA) choices have evolved from accumulator, stack, register-to-memory, to register-register (load-store) organization. ARM 9 ISA is a load-store machine. ARM 9 ISA takes advantage of its smaller set of registers (16 vs. many 32-register processors) to incorporate more direct controls and achieve high encoding density. ARM’s load or store multiple register instruction, for example, allows enlisting of all possible registers and conditional execution in one instruction.

The Thumb mode instruction set is another example of how ARM ISA facilitates higher encoding density. Rather than compressing the code, Thumb-mode instructions are two 16-bit instructions packed in a 32-bit ARM-mode instruction space. The Thumb-mode instructions are a subset of ARM instructions. When executing in Thumb mode, a single 32-bit instruction fetch cycle effectively brings in two instructions. Thumb code reduces access bandwidth and code size, and improves instruction cache hit rate.

Another way ARM achieves cycle time reduction is by using Harvard architecture. The architecture facilitates independent data and instruction buses.