Duplication in Biological Databases Further Limit the Development of the Related Duplicate Detection Methods

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Selecting Molecular Markers for a Specific Phylogenetic Problem

MOJ Proteomics & Bioinformatics Review Article Open Access Selecting molecular markers for a specific phylogenetic problem Abstract Volume 6 Issue 3 - 2017 In a molecular phylogenetic analysis, different markers may yield contradictory Claudia AM Russo, Bárbara Aguiar, Alexandre topologies for the same diversity group. Therefore, it is important to select suitable markers for a reliable topological estimate. Issues such as length and rate of evolution P Selvatti Department of Genetics, Federal University of Rio de Janeiro, will play a role in the suitability of a particular molecular marker to unfold the Brazil phylogenetic relationships for a given set of taxa. In this review, we provide guidelines that will be useful to newcomers to the field of molecular phylogenetics weighing the Correspondence: Claudia AM Russo, Molecular Biodiversity suitability of molecular markers for a given phylogenetic problem. Laboratory, Department of Genetics, Institute of Biology, Block A, CCS, Federal University of Rio de Janeiro, Fundão Island, Keywords: phylogenetic trees, guideline, suitable genes, phylogenetics Rio de Janeiro, RJ, 21941-590, Brazil, Tel 21 991042148, Fax 21 39386397, Email [email protected] Received: March 14, 2017 | Published: November 03, 2017 Introduction concept that occupies a central position in evolutionary biology.15 Homology is a qualitative term, defined by equivalence of parts due Over the last three decades, the scientific field of molecular to inherited common origin.16–18 biology has experienced remarkable advancements -

An Automated Pipeline for Retrieving Orthologous DNA Sequences from Genbank in R

life Technical Note phylotaR: An Automated Pipeline for Retrieving Orthologous DNA Sequences from GenBank in R Dominic J. Bennett 1,2,* ID , Hannes Hettling 3, Daniele Silvestro 1,2, Alexander Zizka 1,2, Christine D. Bacon 1,2, Søren Faurby 1,2, Rutger A. Vos 3 ID and Alexandre Antonelli 1,2,4,5 ID 1 Gothenburg Global Biodiversity Centre, Box 461, SE-405 30 Gothenburg, Sweden; [email protected] (D.S.); [email protected] (A.Z.); [email protected] (C.D.B.); [email protected] (S.F.); [email protected] (A.A.) 2 Department of Biological and Environmental Sciences, University of Gothenburg, Box 461, SE-405 30 Gothenburg, Sweden 3 Naturalis Biodiversity Center, P.O. Box 9517, 2300 RA Leiden, The Netherlands; [email protected] (H.H.); [email protected] (R.A.V.) 4 Gothenburg Botanical Garden, Carl Skottsbergsgata 22A, SE-413 19 Gothenburg, Sweden 5 Department of Organismic and Evolutionary Biology, Harvard University, 26 Oxford St., Cambridge, MA 02138 USA * Correspondence: [email protected] Received: 28 March 2018; Accepted: 1 June 2018; Published: 5 June 2018 Abstract: The exceptional increase in molecular DNA sequence data in open repositories is mirrored by an ever-growing interest among evolutionary biologists to harvest and use those data for phylogenetic inference. Many quality issues, however, are known and the sheer amount and complexity of data available can pose considerable barriers to their usefulness. A key issue in this domain is the high frequency of sequence mislabeling encountered when searching for suitable sequences for phylogenetic analysis. -

"Phylogenetic Analysis of Protein Sequence Data Using The

Phylogenetic Analysis of Protein Sequence UNIT 19.11 Data Using the Randomized Axelerated Maximum Likelihood (RAXML) Program Antonis Rokas1 1Department of Biological Sciences, Vanderbilt University, Nashville, Tennessee ABSTRACT Phylogenetic analysis is the study of evolutionary relationships among molecules, phenotypes, and organisms. In the context of protein sequence data, phylogenetic analysis is one of the cornerstones of comparative sequence analysis and has many applications in the study of protein evolution and function. This unit provides a brief review of the principles of phylogenetic analysis and describes several different standard phylogenetic analyses of protein sequence data using the RAXML (Randomized Axelerated Maximum Likelihood) Program. Curr. Protoc. Mol. Biol. 96:19.11.1-19.11.14. C 2011 by John Wiley & Sons, Inc. Keywords: molecular evolution r bootstrap r multiple sequence alignment r amino acid substitution matrix r evolutionary relationship r systematics INTRODUCTION the baboon-colobus monkey lineage almost Phylogenetic analysis is a standard and es- 25 million years ago, whereas baboons and sential tool in any molecular biologist’s bioin- colobus monkeys diverged less than 15 mil- formatics toolkit that, in the context of pro- lion years ago (Sterner et al., 2006). Clearly, tein sequence analysis, enables us to study degree of sequence similarity does not equate the evolutionary history and change of pro- with degree of evolutionary relationship. teins and their function. Such analysis is es- A typical phylogenetic analysis of protein sential to understanding major evolutionary sequence data involves five distinct steps: (a) questions, such as the origins and history of data collection, (b) inference of homology, (c) macromolecules, developmental mechanisms, sequence alignment, (d) alignment trimming, phenotypes, and life itself. -

In Silico Analysis of Single Nucleotide Polymorphisms

Central JSM Bioinformatics, Genomics and Proteomics Original Research *Corresponding author Anfal Ibrahim Bahereldeen, Department of medical laboratory sciences, Sudan University of Science and In silico analysis of Single Technology, Khartoum, Sudan; Tel: 249916060120; Email: [email protected] Submitted: 14 October 2019 Nucleotide Polymorphisms Accepted: 23 January 2020 Published: 27 January 2020 (SNPs) in Human HFE Gene ISSN: 2576-1102 Copyright coding region © 2020 Bahereldeen AI, et ail. OPEN ACCESS Anfal Ibrahim Bahereldeen1*, Reel Gamal Alfadil2, Hala Mohammed Ali2, Randa Musa Nasser2, Nada Tagelsir Eisa2, Keywords Wesal Hassan Mahmoud2, and Shaymaa Mohammedazeem • In silico • HFE gene, Polyphen-2 2 Osman • I mutant 1Department of Medical laboratory sciences, Sudan University of science and • Project hope Technology, Sudan 2Department of Medical laboratory sciences, Al-Neelain University, Sudan Abstract Background: HFE gene is a HLA class1-like molecule, expressed in the different cells and tissues, mutations on this gene reported to cause about 80-90% of Hereditary haemochromatosis (HHC) and it is increasing the risk of different diseases. In this study; we aimed to analysis the SNPs in HFE gene by using different computational methods. Methodology: We obtained HFE gene nsSNPs from dbSNP/NCBI database, Deleterious nsSNPs predicted by different bioinformatics servers including; SIFT, polyphen-2, I-mutant and SNPs & GO servers. Protein structure analysis done by using Project hope and RaptorX tools then visualized by Chimera software and function, interaction and network of HFE gene analysis done by Gene MANIA program. Results: SIFT and Polyphen-2 servers predicted 75 deleterious nsSNPs and nine polymorphisms from them predicted as highly damaging and disease associated. -

New Syllabus

M.Sc. BIOINFORMATICS REGULATIONS AND SYLLABI (Effective from 2019-2020) Centre for Bioinformatics SCHOOL OF LIFE SCIENCES PONDICHERRY UNIVERSITY PUDUCHERRY 1 Pondicherry University School of Life Sciences Centre for Bioinformatics Master of Science in Bioinformatics The M.Sc Bioinformatics course started since 2007 under UGC Innovative program Program Objectives The main objective of the program is to train the students to learn an innovative and evolving field of bioinformatics with a multi-disciplinary approach. Hands-on sessions will be provided to train the students in both computer and experimental labs. Program Outcomes On completion of this program, students will be able to: Gain understanding of the principles and concepts of both biology along with computer science To use and describe bioinformatics data, information resource and also to use the software effectively from large databases To know how bioinformatics methods can be used to relate sequence to structure and function To develop problem-solving skills, new algorithms and analysis methods are learned to address a range of biological questions. 2 Eligibility for M.Sc. Bioinformatics Students from any of the below listed Bachelor degrees with minimum 55% of marks are eligible. Bachelor’s degree in any relevant area of Physics / Chemistry / Computer Science / Life Science with a minimum of 55% of marks 3 PONDICHERRY UNIVERSITY SCHOOL OF LIFE SCIENCES CENTRE FOR BIOINFORMATICS LIST OF HARD-CORE COURSES FOR M.Sc. BIOINFORMATICS (Academic Year 2019-2020 onwards) Course -

Molecular Phylogenetics Suggests a New Classification and Uncovers Convergent Evolution of Lithistid Demosponges

RESEARCH ARTICLE Deceptive Desmas: Molecular Phylogenetics Suggests a New Classification and Uncovers Convergent Evolution of Lithistid Demosponges Astrid Schuster1,2, Dirk Erpenbeck1,3, Andrzej Pisera4, John Hooper5,6, Monika Bryce5,7, Jane Fromont7, Gert Wo¨ rheide1,2,3* 1. Department of Earth- & Environmental Sciences, Palaeontology and Geobiology, Ludwig-Maximilians- Universita¨tMu¨nchen, Richard-Wagner Str. 10, 80333 Munich, Germany, 2. SNSB – Bavarian State Collections OPEN ACCESS of Palaeontology and Geology, Richard-Wagner Str. 10, 80333 Munich, Germany, 3. GeoBio-CenterLMU, Ludwig-Maximilians-Universita¨t Mu¨nchen, Richard-Wagner Str. 10, 80333 Munich, Germany, 4. Institute of Citation: Schuster A, Erpenbeck D, Pisera A, Paleobiology, Polish Academy of Sciences, ul. Twarda 51/55, 00-818 Warszawa, Poland, 5. Queensland Hooper J, Bryce M, et al. (2015) Deceptive Museum, PO Box 3300, South Brisbane, QLD 4101, Australia, 6. Eskitis Institute for Drug Discovery, Griffith Desmas: Molecular Phylogenetics Suggests a New Classification and Uncovers Convergent Evolution University, Nathan, QLD 4111, Australia, 7. Department of Aquatic Zoology, Western Australian Museum, of Lithistid Demosponges. PLoS ONE 10(1): Locked Bag 49, Welshpool DC, Western Australia, 6986, Australia e116038. doi:10.1371/journal.pone.0116038 *[email protected] Editor: Mikhail V. Matz, University of Texas, United States of America Received: July 3, 2014 Accepted: November 30, 2014 Abstract Published: January 7, 2015 Reconciling the fossil record with molecular phylogenies to enhance the Copyright: ß 2015 Schuster et al. This is an understanding of animal evolution is a challenging task, especially for taxa with a open-access article distributed under the terms of the Creative Commons Attribution License, which mostly poor fossil record, such as sponges (Porifera). -

Biological Databases

Biological Databases Biology Is A Data Science ● Hundreds of thousand of species ● Million of articles in scientific literature ● Genetic Information ○ Gene names ○ Phenotype of mutants ○ Location of genes/mutations on chromosomes ○ Linkage (relationships between genes) 2 Data and Metadata ● Data are “concrete” objects ○ e.g. number, tweet, nucleotide sequence ● Metadata describes properties of data ○ e.g. object is a number, each tweet has an author ● Database structure may contain metadata ○ Type of object (integer, float, string, etc) ○ Size of object (strings at most 4 characters long) ○ Relationships between data (chromosomes have zero or more genes) 3 What is a Database? ● A data collection that needs to be : ○ Organized ○ Searchable ○ Up-to-date ● Challenge: ○ change “meaningless” data into useful, accessible information 4 A spreadsheet can be a Database ● Rectangular data ● Structured ● No metadata ● Search tools: ○ Excel ○ grep ○ python/R 5 A filesystem can be a Database ● Hierarchical data ● Some metadata ○ File, symlink, etc ● Unstructured ● Search tools: ○ ls ○ find ○ locate 6 Organization and Types of Databases ● Every database has tools that: ○ Store ○ Extract ○ Modify ● Flat file databases (flat DBMS) ○ Simple, restrictive, table ● Hierarchical databases ○ Simple, restrictive, tables ● Relational databases (RDBMS) ○ Complex, versatile, tables ● Object-oriented databases (ODBMS) ● Data warehouses and distributed databases ● Unstructured databases (object store DBs) 7 Where do the data come from ? 8 Types of Biological Data -

Molecular Homology and Multiple-Sequence Alignment: an Analysis of Concepts and Practice

CSIRO PUBLISHING Australian Systematic Botany, 2015, 28, 46–62 LAS Johnson Review http://dx.doi.org/10.1071/SB15001 Molecular homology and multiple-sequence alignment: an analysis of concepts and practice David A. Morrison A,D, Matthew J. Morgan B and Scot A. Kelchner C ASystematic Biology, Uppsala University, Norbyvägen 18D, Uppsala 75236, Sweden. BCSIRO Ecosystem Sciences, GPO Box 1700, Canberra, ACT 2601, Australia. CDepartment of Biology, Utah State University, 5305 Old Main Hill, Logan, UT 84322-5305, USA. DCorresponding author. Email: [email protected] Abstract. Sequence alignment is just as much a part of phylogenetics as is tree building, although it is often viewed solely as a necessary tool to construct trees. However, alignment for the purpose of phylogenetic inference is primarily about homology, as it is the procedure that expresses homology relationships among the characters, rather than the historical relationships of the taxa. Molecular homology is rather vaguely defined and understood, despite its importance in the molecular age. Indeed, homology has rarely been evaluated with respect to nucleotide sequence alignments, in spite of the fact that nucleotides are the only data that directly represent genotype. All other molecular data represent phenotype, just as do morphology and anatomy. Thus, efforts to improve sequence alignment for phylogenetic purposes should involve a more refined use of the homology concept at a molecular level. To this end, we present examples of molecular-data levels at which homology might be considered, and arrange them in a hierarchy. The concept that we propose has many levels, which link directly to the developmental and morphological components of homology. -

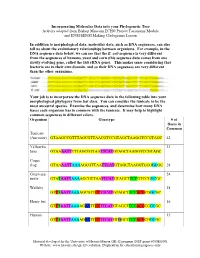

Incorporating Molecular Data Into Your Phylogenetic Tree Activity Adapted from Bishop Museum ECHO Project Taxonomy Module and ENSI/SENSI Making Cladograms Lesson

Incorporating Molecular Data into your Phylogenetic Tree Activity adapted from Bishop Museum ECHO Project Taxonomy Module and ENSI/SENSI Making Cladograms Lesson In addition to morphological data, molecular data, such as DNA sequences, can also tell us about the evolutionary relationships between organisms. For example, in the DNA sequence data below, we can see that the E. coli sequence is very different from the sequences of humans, yeast and corn (this sequence data comes from one slowly evolving gene, called the 16S rRNA gene). This makes sense considering that bacteria are in their own domain, and so their DNA sequences are very different than the other organisms. Your job is to incorporate the DNA sequence data in the following table into your morphological phylogeny from last class. You can consider the tunicate to be the most ancestral species. Examine the sequences, and determine how many DNA bases each organism has in common with the tunicate. It may help to highlight common sequences in different colors. Organism Genotype # of Bases in Common Tunicate (Ancestor) GTAAGCCGTTTAGCGTTAACGTCCGTAGCTAAGGTCCGTAGC 42 Yellowfin 33 tuna GTAAAATTTTTAGCGTTAATTCATGTAGCTAAGGTCCGTAGC Coqui frog GTAAAATTAAAAGCGTTAATTCATGTAGCTAAGGTCCGGCGC 28 Green sea 24 turtle GTATAATTAAAAGCGTTAATTCATGTAGCTTCCGTCCGGCGC Wallaby 18 GTTTAATTAAAAGCGTTCCTTCATGTAGCTTCCACGCGGCGC Hoary bat 16 GTTTAATTAAAAGATTTCCTTCATGTAGCTTCCACGCGGCGC Human 15 GTTTAATTAAAAGATTTCCTTCATGTGGCTTCCACGCGGCGC Material developed for the University of Hawaii-Manoa GK-12 program (NSF grant #05385500). Website: www.hawaii.edu/gk-12/evolution. Duplication for educational purposes only. Enter the number of bases each organism has in common with the tunicate into the phylogeny below: Tunicate Tuna Frog Turtle Wallaby Bat Human ____ bases ____ bases ____ bases ____ bases ____ bases ____ bases Does the molecular data agree with the morphological data? What would you do if the molecular and morphological data do not agree? Material developed for the University of Hawaii-Manoa GK-12 program (NSF grant #05385500). -

Fast Data Analytics by Learning

Fast Data Analytics by Learning by Yongjoo Park A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy (Computer Science and Engineering) in The University of Michigan 2017 Doctoral Committee: Associate Professor Michael Cafarella, Co-Chair Assistant Professor Barzan Mozafari, Co-Chair Associate Professor Eytan Adar Professor H. V. Jagadish Associate Professor Carl Lagoze Yongjoo Park [email protected] ORCID iD: 0000-0003-3786-6214 c Yongjoo Park 2017 To my mother ii Acknowledgements I thank my co-advisors, Michael Cafarella and Barzan Mozafari. I am deeply grateful that I could start my graduate studies with Mike Cafarella. Mike was the one of the nicest persons I have met (I can say, throughout my life). He also deeply cared of my personal life as well as my graduate study. He always encouraged and supported me so I could make the best decisions for my life. He was certainly more than a simple academic advisor. Mike also has excellent talents in presentation. His descriptions (on any subject) are well-organized and straightforward. Sometimes, I find myself trying to imitate his presentation styles when I give my own presentations, which, I believe, is natural since I have been advised by him for more than five years now. I started work with Barzan after a few years since I came to Michigan. He is excellent in writing research papers and presenting work in the most interesting, formal, and concise way. He devoted tremendous amount of time for me. I learned great amount of writing and presentation skills from him. -

Biocuration Experts on the Impact of Duplication and Other Data Quality Issues in Biological Databases

Genomics Proteomics Bioinformatics 18 (2020) 91–103 Genomics Proteomics Bioinformatics www.elsevier.com/locate/gpb www.sciencedirect.com PERSPECTIVE Quality Matters: Biocuration Experts on the Impact of Duplication and Other Data Quality Issues in Biological Databases Qingyu Chen 1,*, Ramona Britto 2, Ivan Erill 3, Constance J. Jeffery 4, Arthur Liberzon 5, Michele Magrane 2, Jun-ichi Onami 6,7, Marc Robinson-Rechavi 8,9, Jana Sponarova 10, Justin Zobel 1,*, Karin Verspoor 1,* 1 School of Computing and Information Systems, University of Melbourne, Melbourne, VIC 3010, Australia 2 European Molecular Biology Laboratory, European Bioinformatics Institute (EMBL-EBI), Wellcome Trust Genome Campus, Cambridge CB10 1SD, UK 3 Department of Biological Sciences, University of Maryland, Baltimore, MD 21250, USA 4 Department of Biological Sciences, University of Illinois at Chicago, Chicago, IL 60607, USA 5 Broad Institute of MIT and Harvard, Cambridge, MA 02142, USA 6 Japan Science and Technology Agency, National Bioscience Database Center, Tokyo 102-8666, Japan 7 National Institute of Health Sciences, Tokyo 158-8501, Japan 8 Swiss Institute of Bioinformatics, CH-1015 Lausanne, Switzerland 9 Department of Ecology and Evolution, University of Lausanne, CH-1015 Lausanne, Switzerland 10 Nebion AG, 8048 Zurich, Switzerland Received 8 December 2017; revised 24 October 2018; accepted 14 December 2018 Available online 9 July 2020 Handled by Zhang Zhang Introduction assembled, annotated, and ultimately submitted to primary nucleotide databases such as GenBank [2], European Nucleo- tide Archive (ENA) [3], and DNA Data Bank of Japan Biological databases represent an extraordinary collective vol- (DDBJ) [4] (collectively known as the International Nucleotide ume of work. -

Bioinformatics: a Practical Guide to the Analysis of Genes and Proteins, Second Edition Andreas D

BIOINFORMATICS A Practical Guide to the Analysis of Genes and Proteins SECOND EDITION Andreas D. Baxevanis Genome Technology Branch National Human Genome Research Institute National Institutes of Health Bethesda, Maryland USA B. F. Francis Ouellette Centre for Molecular Medicine and Therapeutics Children’s and Women’s Health Centre of British Columbia University of British Columbia Vancouver, British Columbia Canada A JOHN WILEY & SONS, INC., PUBLICATION New York • Chichester • Weinheim • Brisbane • Singapore • Toronto BIOINFORMATICS SECOND EDITION METHODS OF BIOCHEMICAL ANALYSIS Volume 43 BIOINFORMATICS A Practical Guide to the Analysis of Genes and Proteins SECOND EDITION Andreas D. Baxevanis Genome Technology Branch National Human Genome Research Institute National Institutes of Health Bethesda, Maryland USA B. F. Francis Ouellette Centre for Molecular Medicine and Therapeutics Children’s and Women’s Health Centre of British Columbia University of British Columbia Vancouver, British Columbia Canada A JOHN WILEY & SONS, INC., PUBLICATION New York • Chichester • Weinheim • Brisbane • Singapore • Toronto Designations used by companies to distinguish their products are often claimed as trademarks. In all instances where John Wiley & Sons, Inc., is aware of a claim, the product names appear in initial capital or ALL CAPITAL LETTERS. Readers, however, should contact the appropriate companies for more complete information regarding trademarks and registration. Copyright ᭧ 2001 by John Wiley & Sons, Inc. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic or mechanical, including uploading, downloading, printing, decompiling, recording or otherwise, except as permitted under Sections 107 or 108 of the 1976 United States Copyright Act, without the prior written permission of the Publisher.