2012 Consumer Security Products Performance Benchmarks (Edition 3) Antivirus, Internet Security & Total Security Windows 7

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Hostscan 4.8.01064 Antimalware and Firewall Support Charts

HostScan 4.8.01064 Antimalware and Firewall Support Charts 10/1/19 © 2019 Cisco and/or its affiliates. All rights reserved. This document is Cisco Public. Contents: HostScan Version 4.8.01064 Antimalware and Firewall Support Charts; Antimalware and Firewall Attributes Supported by HostScan; OPSWAT Version Information; Cisco AnyConnect HostScan Antimalware Compliance Module v4.3.890.0 for Windows; Cisco AnyConnect HostScan Firewall Compliance Module v4.3.890.0 for Windows; Cisco AnyConnect HostScan Antimalware Compliance Module v4.3.824.0 for macOS; Cisco AnyConnect HostScan Firewall Compliance Module v4.3.824.0 for macOS; Cisco AnyConnect HostScan Antimalware Compliance Module v4.3.730.0 for Linux; Cisco AnyConnect HostScan Firewall Compliance Module v4.3.730.0 for Linux.

K7 Endpoint Security

DATA SHEET K7 Endpoint Security STANDARD EDITION

Cyber security is an important operational requirement for any business today, no matter how large or small the company. The effects of a targeted malware attack can bring a business to its knees, negatively affecting an organization’s efficiency and incurring lost productivity from the workforce. Protecting a company’s intellectual property and securing customer data are good business practices; however, the cost of deploying, managing, and maintaining commercial IT management and security controls can be extremely expensive for an up-and-coming business. For IT Operations in small and medium businesses, price and performance are just as important as high detection rates and threat mitigation features, without compromising the overall security of the network.

Cost Effective Anti-Malware Solutions

Many enterprise-class malware solutions suffer from bloated features and functionality that smaller organizations do not need and rarely use. K7 Endpoint Security provides small to medium businesses with cost-effective anti-malware capabilities - without the high purchase price, complex deployment models, or expensive renewal and maintenance costs found in other vendor solutions. Available in both Standard and Advanced editions, K7’s Endpoint Security supports centralized management models to simplify deployment, streamline IT operations, and meet both internal and external compliance requirements.

Key Features
• Low cost, high performance endpoint protection and prevention for small and medium businesses
• Detect and mitigate real-world threats such as viruses, spyware, ransomware and phishing attacks
• Granular Firewall with integrated HIDS to block targeted system level attacks
• Optimized performance and small memory footprint extends the useful life of older systems
• Flexible on-premise or Cloud-based

Endpoint Integrity Check Support List (対応一覧).xlsx

Endpoint Integrity Check - List of Supported Antivirus Vendors: 360safe.com; AEC, spol. s r.o.; ALWIL Software; AT & T; AVG Technologies; Agnitum Ltd; AhnLab, Inc.; Aliant; America Online, Inc.; Antiy Labs; Authentium, Inc.; Avanquest Publishing USA, Inc.; Avira GmbH; Beijing Rising Technology Corp. Ltd.; Bell; Bell Aliant; BellSouth; Bitdefender; BullGuard Ltd.; CJSC Returnil Software; CMC Information Security; Cat Computer Services Pvt. Ltd.; Central Command, Inc.; Check Point, Inc; Cisco Systems, Inc.; ClamAV; ClamWin; Comodo Group; Computer Associates International, Inc; Coranti, Inc; Crawler LLC; Cyber Defender Corp.; Defender Pro LLC; ESTsoft Corp.; EarthLink, Inc; Emsi Software GmbH; Eset Software; F-Secure Corp.; FairPoint; Faronics Corporation; Fortinet, Inc; Frisk Software International; GData Software AG; GFI Software; Grisoft, Inc.; H+BEDV Datentechnik GmbH; HAURI, Inc; IKARUS Software GmbH; Internet Security Systems, Inc.; Jiangmin, Inc; K7 Computing Pvt. Ltd.; Kaspersky Labs; Kingsoft Corp.; LANDesk Software, Ltd.; Lavasoft, Inc.; Lumension Security; McAfee, Inc.; MicroWorld; Microsoft Corp.; N-able Technologies Inc; Nano Security; New Technology Wave Inc.; Norman ASA; ONO; Omniquad; PC Tools Software; PCSecurityShield; Panda Software; Parallels, Inc.; Preventon Technologies Ltd.; Prevx Ltd.; Quick Heal Technologies (P) Ltd.; Radialpoint Inc.; Rogers; SOFTWIN; SalD Ltd.; Security Coverage Inc.; Sereniti, Inc.; Shavlik Technologies; Sophos, Plc; Sunbelt Software; Symantec Corp.; TELUS; Thirtyseven4, LLC.; Tobit.Software; Trend Micro, Inc.; Troppus Software Corporation; TrustPort, a.s.; VCOM; VMware, Inc.; Verizon; Videotron; Virgin Broadband; Virgin Media; Virus BlokAda Ltd.; Virus Buster Ltd.; Webroot Software, Inc; Yahoo!, Inc; Zone Labs LLC; e frontier, Inc.; eEye Digital Security; iolo technologies, LLC.

NAIKON – Traces from a Military Cyber-Espionage Operation

WHITEPAPER Security NAIKON – Traces from a Military Cyber-Espionage Operation www.bitdefender.com Contents: Introduction; Victimology; Toolset (Rainyday backdoor execution; Nebulae Backdoor; Exfiltration Tools; Credential Harvesting; Network Tools; Other Tools); Attribution; IOCS.

Windows Antivirus Support Chart for Hostscan 3.1.06073 Product Name

Windows Antivirus Support Chart for HostScan 3.1.06073 Marketing Product Data File Engine Live Version Check RTP Set RTP Language Alias Name Time Version Update Names 360Safe.co m 360 1.x ✔ ✔ ✔ ✔ ✔ English 360杀毒 Antivirus 360 3.x ✔ ✘ ✔ ✘ ✘ English Antivirus 360 Total 4.x ✔ ✔ ✔ ✘ ✘ English Security Chinese 360 360杀毒 1.x ✔ ✔ ✔ ✘ ✘ Simplified Antivirus Chinese 360 360杀毒 2.x ✔ ✔ ✔ ✘ ✘ Simplified Antivirus Chinese 360杀毒 3.x ✘ ✘ ✔ ✘ ✘ Simplified Chinese 360 360杀毒 4.x ✘ ✘ ✔ ✘ ✘ Simplified Antivirus Chinese 360 360杀毒 5.x ✘ ✘ ✔ ✘ ✘ Simplified Antivirus Other 360Safe.co x ✘ ✘ ✘ ✘ ✘ English m Antivirus AEC, spol. s r.o. TrustPort 2.x ✘ ✘ ✔ ✔ ✘ English Antivirus Other AEC, spol. s r.o. x ✘ ✘ ✘ ✘ ✘ English Antivirus Agnitum Ltd. Outpost Antivirus 7.x ✔ ✔ ✔ ✔ ✘ English Pro Outpost Antivirus 8.x ✔ ✔ ✔ ✔ ✘ English Pro Outpost Antivirus 9.x ✔ ✔ ✔ ✔ ✔ English Pro Outpost Outpost Security Antivirus 6.x ✔ ✔ ✔ ✔ ✘ English Suite Pro Pro 2009 2009 Outpost Security 7.x ✘ ✘ ✔ ✘ ✘ English Suite Free Outpost Security 7.x ✔ ✔ ✔ ✔ ✘ English Suite Pro Outpost Security 8.x ✔ ✔ ✔ ✔ ✔ English Suite Pro Outpost Security 9.x ✔ ✔ ✔ ✔ ✔ English Suite Pro Other Agnitum x ✘ ✘ ✘ ✘ ✘ English Ltd. Antivirus AhnLab, Inc. 
AhnLab Security 2.x ✔ ✔ ✘ ✔ ✘ English Pack AhnLab V3 Internet 7.x ✔ ✔ ✔ ✔ ✘ English Security 2007 AhnLab V3 Internet Security 7.x ✔ ✔ ✔ ✔ ✘ English 2007 Platinum AhnLab V3 Internet Security 7.x ✔ ✔ ✔ ✔ ✘ English 2008 Platinum AhnLab V3 Internet Security 7.x ✔ ✔ ✔ ✔ ✔ English 2009 Platinum AhnLab V3 Internet Security 7.0 7.x ✔ ✔ ✔ ✔ ✘ English Platinum Enterprise AhnLab V3 Internet 8.x ✔ ✔ ✔ ✔ ✔ English Security 8.0 AhnLab V3 Internet 9.x ✔ ✔ ✔ ✔ ✔ English Security 9.0 AhnLab V3 VirusBlock Internet 7.x ✔ ✔ ✘ ✔ ✘ English Security 2007 AhnLab V3 VirusBlock Internet Security 7.x ✔ ✔ ✔ ✘ ✔ English 2007 Platinum Enterprise V3 Click 1.x ✔ ✘ ✘ ✘ ✘ English V3 Lite 1.x ✔ ✔ ✔ ✔ ✔ Korean V3 Lite 3.x ✔ ✔ ✔ ✘ ✘ Korean V3 VirusBlock 6.x ✔ ✔ ✘ ✘ ✘ English 2005 V3 ウイルスブ NA ✔ ✔ ✘ ✔ ✘ Japanese ロック V3Pro 2004 6.x ✔ ✔ ✔ ✔ ✘ English Other AhnLab, x ✘ ✘ ✘ ✘ ✘ English Inc. -

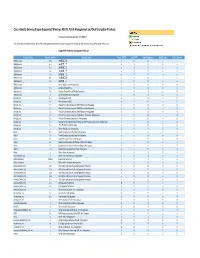

Cisco Identity Services Engine Supported Windows AV/AS/PM/DE

Cisco Identity Services Engine Supported Windows AS/AV, Patch Management and Disk Encryption Products. Compliance Module Version 3.6.10363.2. This document provides Windows AS/AV, Patch Management and Disk Encryption support information on the Cisco AnyConnect Agent Version 4.2.

Supported Windows Antispyware Products

| Vendor_Name | Product_Version | Product_Name | Check_FSRTP | Set_FSRTP | VirDef_Signature | VirDef_Time | VirDef_Version |
|---|---|---|---|---|---|---|---|
| 360Safe.com | 10.x | 360安全卫士 | v | X | X | v | v |
| 360Safe.com | 4.x | 360安全卫士 | v | X | X | v | v |
| 360Safe.com | 5.x | 360安全卫士 | v | X | X | v | v |
| 360Safe.com | 6.x | 360安全卫士 | v | X | X | v | v |
| 360Safe.com | 7.x | 360安全卫士 | v | X | X | v | v |
| 360Safe.com | 8.x | 360安全卫士 | v | X | X | v | v |
| 360Safe.com | 9.x | 360安全卫士 | v | X | X | v | v |
| 360Safe.com | x | Other 360Safe.com Antispyware | Z | X | X | Z | X |
| Agnitum Ltd. | 7.x | Outpost Firewall Pro | v | X | X | X | O |
| Agnitum Ltd. | 6.x | Outpost Firewall Pro 2008 [AntiSpyware] | v | X | X | v | O |
| Agnitum Ltd. | x | Other Agnitum Ltd. Antispyware | Z | X | X | Z | X |
| AhnLab, Inc. | 2.x | AhnLab SpyZero 2.0 | v | v | O | v | O |
| AhnLab, Inc. | 3.x | AhnLab SpyZero 2007 | X | X | O | v | O |
| AhnLab, Inc. | 7.x | AhnLab V3 Internet Security 2007 Platinum AntiSpyware | v | X | O | v | O |
| AhnLab, Inc. | 7.x | AhnLab V3 Internet Security 2008 Platinum AntiSpyware | v | X | O | v | O |
| AhnLab, Inc. | 7.x | AhnLab V3 Internet Security 2009 Platinum AntiSpyware | v | v | O | v | O |
| AhnLab, Inc. | 7.x | AhnLab V3 Internet Security 7.0 Platinum Enterprise AntiSpyware | v | X | O | v | O |
| AhnLab, Inc. | 8.x | AhnLab V3 Internet Security 8.0 AntiSpyware | v | v | O | v | O |
| AhnLab, Inc. | | | | | | | |

3.0.2 ESAP Release Notes

ESAP Release Notes. Build published September 2016. Document version 1.0.

Contents: Introduction; Interoperability and Supported Platforms; Noteworthy Changes; ESAP 3.0.2 and Pulse Connect Secure/Pulse Policy Secure Compatibility Chart; Support has been added for the following products in ESAP 3.0.2 (V4 list of supported products; V3 list of supported products); V4 Issues Fixed in ESAP 3.0.2; V3 Issues Fixed in ESAP 3.0.2; Upgrade Issues in ESAP 3.0.2; Known Issues/Limitations in ESAP 3.0.2; Documentation; Documentation Feedback; Technical Support; Revision History.

ESAP 3.0.2 Release Notes

Introduction: V2 Unified + V3 SDK Version: Mac and Windows 3.6.10826.2. V4 SDK Version: Windows 4.2.466.0, Mac 4.2.640.0. V3V4 Adapter Version: Windows 4.2.836.0, Mac 4.2.65.0. Note: The SDK embedded in this ESAP version has been tested and qualified to interoperate with an extensive list of endpoint security applications covering most products listed in the supported products list.

Interoperability and Supported Platforms: Please refer to the ESAP Supported Platforms Guide for supported versions of browsers and operating systems in this release.

Noteworthy Changes: Pulse Secure introduced support for consuming the latest OPSWAT SDK Version 4 in 8.2R5 PCS and PPS 5.3 R5 PPS release. OPSWAT SDKs are bundled within our Endpoint Security Assessment Plugin (ESAP). From ESAP 3.0.2 onwards, OPSWAT V4 SDK for Windows and Mac are packaged alongside existing V2 and V3 SDKs.

Cisco Identity Services Engine Release 1.2 Supported Windows

Cisco Identity Services Engine Supported Windows AV/AS Products. Compliance Module Version 3.5.6317.2. This document provides Windows 8/7/Vista/XP AV/AS support information on the Cisco NAC Agent version 4.9.0.x and later. For other support information and complete release updates, refer to the Release Notes for Cisco Identity Services Engine corresponding to your Cisco Identity Services Engine release version.

Supported Windows AV/AS Product Summary

Added New AV Definition Support: COMODO Antivirus 5.x; COMODO Internet Security 3.5.x; COMODO Internet Security 3.x; COMODO Internet Security 4.x; Kingsoft Internet Security 2013.x.

Added New AV Products Support: V3 Click 1.x; avast! Internet Security 8.x; avast! Premier 8.x; avast! Pro Antivirus 8.x; Gen-X Total Security 1.x; K7UltimateSecurity 13.x; Kaspersky Endpoint Security 10.x; Kaspersky PURE 13.x; Norman Security Suite 10.x.

Supported Windows AntiVirus Products Product Name Product Version Installation Virus Definition Live Update 360Safe.com 360 Antivirus 1.x 4.9.0.28 / 3.4.21.1 4.9.0.28 / 3.4.21.1 yes 360 Antivirus 3.x 4.9.0.29 / 3.5.5767.2 4.9.0.29 / 3.5.5767.2 - 360杀毒 1.x 4.9.0.28 / 3.4.21.1 4.9.0.28 / 3.4.21.1 - 360杀毒 2.x 4.9.0.29 / 3.4.25.1 4.9.0.29 / 3.4.25.1 - 360杀毒 3.x 4.9.0.29 / 3.5.2101.2 - Other 360Safe.com Antivirus x 4.9.0.29 / 3.5.2101.2 - AEC, spol.

Cisco Anyconnect ISE Posture Linux Support Charts for Compliance Module V4.3.1709.0

Cisco AnyConnect ISE Posture Linux Support Charts for Compliance Module v4.3.1709.0 Introduction 2 Anti-Malware 2 Patch Management 6 Revised: August 30, 2021 Introduction This document lists the products that are supported by the Cisco ISE Posture Agent for Linux OS. Anti-Malware Product Name Product Definition Live Application Application Application Min. Compliance Version State Update Running Kill Uninstall Module Version Check Check 360.CN 360 天擎 6.x - - yes yes - 4.3.1415.0 ALWIL Software avast! Antivirus 1.x - - yes yes - 4.3.1415.0 AVG Technologies CZ, s.r.o. AVG Anti-Virus 13.x yes yes yes yes - 4.3.1415.0 AVG Anti-Virus 2013.x yes yes yes yes - 4.3.1415.0 Avira GmbH Avira AntiVir PersonalEdition 2.x yes yes yes yes - 4.3.1415.0 Classic Avira AntiVir PersonalEdition 3.x yes yes yes yes - 4.3.1415.0 Classic Bitdefender Bitdefender Endpoint Security 6.x yes yes yes yes - 4.3.1415.0 Tools Bytware, Inc Bytware Anti-Virus 1.x - - yes yes - 4.3.1415.0 Carbon Black, Inc. Carbon Black Defense Sensor 2.x yes - yes yes - 4.3.1415.0 Comodo Group Comodo Antivirus 1.x yes - yes yes - 4.3.1415.0 CrowdStrike, Inc. Crowdstrike Falcon 4.x yes - yes yes - 4.3.1415.0 Crowdstrike Falcon 5.x yes - yes yes - 4.3.1415.0 Crowdstrike Falcon 6.x yes - yes yes - 4.3.1709.0 2 Product Name Product Definition Live Application Application Application Min. Compliance Version State Update Running Kill Uninstall Module Version Check Check Cylance Inc. -

Quick Heal Endpoint Security 5.2.1

Administrator Guide. Quick Heal Endpoint Security 5.2.1 (QHEPS Total, QHEPS Business). Quick Heal Technologies (P) Ltd. http://www.quickheal.com

Copyright Information: Copyright © 2012 Quick Heal Technologies (P) Ltd. All Rights Reserved. All rights are reserved by Quick Heal Technologies (P) Ltd. No part of this software may be reproduced, duplicated or modified in any form or incorporated into any information retrieval system, electronic or any other or transmitted in any form without prior permission of Quick Heal Technologies (P) Ltd, 603 Mayfair Towers II, Wakdewadi, Shivajinagar, Pune-411 005, India. Marketing, distribution or use by anyone barring the people authorized by Quick Heal Technologies (P) Ltd. is liable to legal prosecution. Trademarks: Quick Heal and DNAScan are registered trademarks of Quick Heal Technologies (P) Ltd.

End-User License Agreement: QUICK HEAL Endpoint Security 5.2.1 End-User License Agreement. IMPORTANT: PLEASE READ THIS END-USER LICENSE AGREEMENT CAREFULLY BEFORE USING THIS SOFTWARE. BY USING THIS SOFTWARE OR BY CLICKING THE "I AGREE" BUTTON OR LOADING OR ATTEMPTING TO LOAD THE QUICK HEAL SOFTWARE, IN ANY WAY (WILL CONSTITUTE A SYMBOL OF YOUR SIGNATURE), YOU ACKNOWLEDGE AND ADMIT THAT YOU HAVE READ, UNDERSTOOD AND AGREED TO ALL THE TERMS AND CONDITIONS OF THIS END-USER LICENSE AGREEMENT. IF YOU DO NOT AGREE TO THE TERMS AND CONDITIONS BELOW, DO NOT USE THIS SOFTWARE IN ANY WAY AND PROMPTLY RETURN IT OR DELETE ALL THE COPIES

Most Tested. Most Awarded. Kaspersky Lab Protection.*

MOST TESTED. MOST AWARDED. KASPERSKY LAB PROTECTION.* In 2015 Kaspersky Lab products participated in 94 independent tests and reviews. Our products were awarded 60 firsts and achieved 77 top-three finishes.

[Figure: bubble chart of score of TOP3 places (%) against number of independent tests/reviews, one bubble per vendor. Kaspersky Lab: 1st places 60, participation in 94 tests/reviews, TOP3 = 82%. Other vendors plotted: Avira, ESET, Bitdefender, Symantec, Sophos, Quick Heal, Avast, BullGuard, Trend Micro, AVG, G DATA, Panda Security, F-Secure, Intel Security (McAfee), Microsoft.]

* Notes:
• According to summary results of independent tests in 2015 for corporate, consumer and mobile products.
• Summary includes independent tests conducted by: AV-Comparatives, AV-Test, Dennis Technology Labs, MRG Effitas, NSS Labs, PC Security Labs, VirusBulletin.
• Tests performed in these programs assess all protection technologies against known, unknown and advanced threats.
• The size of the bubble reflects the number of 1st places achieved.

© 2016 Kaspersky Lab. All rights reserved. Registered trademarks and service marks are the property of their respective owners.

2015 TOP3 METRIC DESCRIPTION: This TOP3 metric reflects the percentage of times a vendor gained a top-three place in independent tests within a reported period (calendar year). Among the security vendors participating in the TOP3 measurements are: Avast, AVG, Avira, Bitdefender, BullGuard, ESET, F-Secure, G DATA, Intel Security (McAfee), Kaspersky Lab, Microsoft, Panda, Sophos, Symantec, Trend Micro and Quick Heal.

| Vendor | N of tests entered | N of TOP3 places | Score of TOP3 places (%) | N of 1st places |
|---|---|---|---|---|
| Kaspersky Lab | 94 | 77 | 82% | 60 |
| Bitdefender | 62 | 39 | 63% | 32 |
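
The TOP3 score described in this excerpt is a simple ratio, so it can be checked against the two rows of figures the excerpt quotes. The sketch below is only an illustration of that arithmetic: the function name `top3_score` and the rounding to a whole percent are assumptions, not something the Kaspersky Lab document specifies.

```python
def top3_score(top3_places: int, tests_entered: int) -> float:
    """Percentage of entered tests in which a vendor placed in the top three.

    Hypothetical helper: the name and signature are illustrative only.
    """
    if tests_entered <= 0:
        raise ValueError("tests_entered must be positive")
    return 100.0 * top3_places / tests_entered


# Figures quoted in the excerpt: vendor -> (tests entered, TOP3 places).
vendors = {
    "Kaspersky Lab": (94, 77),
    "Bitdefender": (62, 39),
}

# Rounding to a whole percent is an assumption about how the report presents scores.
scores = {
    name: round(top3_score(top3, entered))
    for name, (entered, top3) in vendors.items()
}

for name, score in scores.items():
    print(f"{name}: {score}%")
```

Under these assumptions the computed values agree with the 82% and 63% scores listed for the two vendors.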

Malware Protection Test March 2018

Anti-Virus Comparative. Malware Protection Test, Consumer Products. File Detection Test with Execution, including false alarm test. Language: English. March 2018. Last Revision: 13th April 2018. www.av-comparatives.org

Table of Contents: Tested Products; Introduction; Detection vs. Protection; Offline vs. Online Detection Rates; Results (Online Protection Rates); False positive (false alarm) test; Ranking system; Award levels reached in this test; Copyright and Disclaimer.

Tested Products
• Avast Free Antivirus 18.2
• AVG Free Antivirus 18.2
• AVIRA Antivirus Pro 15.0
• Bitdefender Internet Security 22.0
• BullGuard Internet Security 18.0
• Emsisoft Anti-Malware 2018.2
• ESET Internet Security 11.0
• F-Secure SAFE 17.204
• K7 Total Security 15.1
• Kaspersky Internet Security 18.0
• McAfee Internet Security 20.7
• Microsoft Windows Defender 4.12
• Panda Free Antivirus 18.03
• Quick Heal Total Security 17.0
• Symantec Norton Security 22.12
• Tencent PC Manager 12.3
• Trend Micro Internet Security 12.0
• VIPRE Advanced Security 10.1

Introduction: The Malware Protection Test is an enhancement of the File Detection Test which was performed years ago. Due to the increased scope of the test, readers are advised to read the methodology described below. Please note that we do not recommend purchasing a product purely on the basis of one individual test or even one type of test. Rather, we would suggest that readers also consult our other recent test reports, and consider factors such as price, ease of use, compatibility and support.