Biocreative II.5 Workshop 2009 Special Session on Digital Annotations

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

COVID-19 Evidence Update Date 7th to 13th September 2020

UHB Library & Knowledge Services COVID-19 Evidence Update Date 7th to 13th September 2020 Evidence search Healthcare Databases Advanced Search (HDAS) 1. Medical mask versus cotton mask for preventing respiratory droplet transmission in micro environments. Author(s): Ho, Kin-Fai; Lin, Lian-Yu; Weng, Shao-Ping; Chuang, Kai-Jen Source: The Science of the total environment; Sep 2020; vol. 735 ; p. 139510 Publication Date: Sep 2020 Publication Type(s): Journal Article PubMedID: 32480154 DOI 10.1016/j.scitotenv.2020.139510 Database: MEDLINE 2. Update on neurological manifestations of COVID-19. Author(s): Yavarpour-Bali, Hanie; Ghasemi-Kasman, Maryam Source: Life sciences; Sep 2020; vol. 257 ; p. 118063 Publication Date: Sep 2020 Publication Type(s): Journal Article Review PubMedID: 32652139 Available at Life sciences from Unpaywall Database: MEDLINE 3. Forecasting the Future of Urology Practice: A Comprehensive Review of the Recommendations by International and European Associations on Priority Procedures During the COVID-19 Pandemic. Author(s): Amparore, Daniele; Campi, Riccardo; Checcucci, Enrico; Sessa, Francesco; Pecoraro, Angela; Minervini, Andrea; Fiori, Cristian; Ficarra, Vincenzo; Novara, Giacomo; Serni, Sergio; Porpiglia, Francesco Source: European urology focus; Sep 2020; vol. 6 (no. 5); p. 1032-1048 Publication Date: Sep 2020 Publication Type(s): Journal Article Review PubMedID: 32553544 Available at European urology focus from ClinicalKey You will need to register (free of charge) with Clinical Key the first time you use it. Available at European urology focus from Unpaywall Database: MEDLINE 4. Tongue Ulcers Associated with SARS-COV-2 Infection: A case-series. 
Author(s): Riad, Abanoub; Kassem, Islam; Hockova, Barbora; Badrah, Mai; Klugar, Miloslav Source: Oral diseases; Sep 2020 Publication Date: Sep 2020 Publication Type(s): Letter PubMedID: 32889763 Available at Oral diseases from Wiley Online Library Medicine and Nursing Collection 2020 Database: MEDLINE 5. -

Issue 1/January, 2006

Issue 1/January, 2006 by Camilla Krogh Lauritzen, FEBS Information Manager, Editor of FEBS News FEBS Secretariat, c/o the Danish Cancer Society Strandboulevarden 49, DK-2100, Denmark E-mail: [email protected] FEBS website: www.FEBS.org Dear Colleagues, A new year has begun, and the 2006 FEBS Congress is only approx. six months away. This year, the Congress will take place in Istanbul, and again this year, the programme is packed with exciting topics and people. If you have not already done so, have a look at the Congress website, www.FEBS2006.org. Furthermore, please remember that the FEBS Forum for Young Scientists will take place in connection with the congress (p. 3). 2006 marks "a changing of the guards" in terms of FEBS officers; on page 3 you will get a brief presentation of some known and some new faces in FEBS. Furthermore, you will be presented with the face of Luc Van Dyke (ELSF/ISE), as well as with his article, "Science policy — working together to shape our future". Luc is the debater in this issue of FEBS News, and will elaborate on the current status and importance of science policy making within Europe (p. 9). Finally, this issue will also bring you news from FEBS Letters and FEBS Journal, as well as new opportunities, such as up-coming FEBS courses (p. 8) and job opportunities (p. 10-13). I wish all of you a successful and happy 2006! Kind regards, Camilla About FEBS News: FEBS News is published every second Monday in every second month (starting January). -

Semantic Web

SEMANTIC WEB: REVOLUTIONIZING KNOWLEDGE DISCOVERY IN THE LIFE SCIENCES Edited by Christopher J. O. Baker¹ and Kei-Hoi Cheung² ¹Knowledge Discovery Department, Institute for Infocomm Research, Singapore, Singapore; ²Center for Medical Informatics, Yale University School of Medicine, New Haven, CT, USA Kluwer Academic Publishers Boston/Dordrecht/London Contents PART I: Database and Literature Integration Semantic web approach to database integration in the life sciences KEI-HOI CHEUNG, ANDREW K. SMITH, KEVIN Y. L. YIP, CHRISTOPHER J. O. BAKER AND MARK B. GERSTEIN Querying Semantic Web Contents: A case study LOIC ROYER, BENEDIKT LINSE, THOMAS WÄCHTER, TIM FURCH, FRANCOIS BRY, AND MICHAEL SCHROEDER Knowledge Acquisition from the Biomedical Literature LYNETTE HIRSCHMAN, WILLIAM HAYES AND ALFONSO VALENCIA PART II: Ontologies in the Life Sciences Biological Ontologies PATRICK LAMBRIX, HE TAN, VAIDA JAKONIENE, AND LENA STRÖMBÄCK Clinical Ontologies YVES LUSSIER AND OLIVIER BODENREIDER Ontology Engineering For Biological Applications LARISA N. SOLDATOVA AND ROSS D. KING The Evaluation of Ontologies: Toward Improved Semantic Interoperability LEO OBRST, WERNER CEUSTERS, INDERJEET MANI, STEVE RAY AND BARRY SMITH OWL for the Novice JEFF PAN PART III: Ontology Visualization Techniques for Ontology Visualization XIAOSHU WANG AND JONAS ALMEIDA On Visualization of OWL Ontologies SERGUEI KRIVOV, FERDINANDO VILLA, RICHARD WILLIAMS, AND XINDONG WU PART IV: Ontologies in Action Applying OWL Reasoning to Genomics: A Case Study KATY WOLSTENCROFT, ROBERT STEVENS AND VOLKER HAARSLEV Can Semantic Web Technologies enable Translational Medicine? VIPUL KASHYAP, TONYA HONGSERMEIER AND SAMUEL J. ARONSON Ontology Design for Biomedical Text Mining RENÉ WITTE, THOMAS KAPPLER, AND CHRISTOPHER J. -

Aggregation and Correlation Toolbox for Analyses of Genome Tracks Justin Jee Yale University

University of Massachusetts Medical School eScholarship@UMMS Program in Bioinformatics and Integrative Biology Program in Bioinformatics and Integrative Biology Publications and Presentations 4-15-2011 ACT: aggregation and correlation toolbox for analyses of genome tracks Justin Jee Yale University Joel Rozowsky Yale University Kevin Y. Yip Yale University See next page for additional authors Follow this and additional works at: http://escholarship.umassmed.edu/bioinformatics_pubs Part of the Bioinformatics Commons, Computational Biology Commons, and the Systems Biology Commons Repository Citation Jee, Justin; Rozowsky, Joel; Yip, Kevin Y.; Lochovsky, Lucas; Bjornson, Robert; Zhong, Guoneng; Zhang, Zhengdong; Fu, Yutao; Wang, Jie; Weng, Zhiping; and Gerstein, Mark B., "ACT: aggregation and correlation toolbox for analyses of genome tracks" (2011). Program in Bioinformatics and Integrative Biology Publications and Presentations. Paper 26. http://escholarship.umassmed.edu/bioinformatics_pubs/26 This material is brought to you by eScholarship@UMMS. It has been accepted for inclusion in Program in Bioinformatics and Integrative Biology Publications and Presentations by an authorized administrator of eScholarship@UMMS. For more information, please contact [email protected]. ACT: aggregation and correlation toolbox for analyses of genome tracks Authors Justin Jee, Joel Rozowsky, Kevin Y. Yip, Lucas Lochovsky, Robert Bjornson, Guoneng Zhong, Zhengdong Zhang, Yutao Fu, Jie Wang, Zhiping Weng, and Mark B. Gerstein Comments © The Author(s) 2011. Published by Oxford University Press. This is an Open Access article distributed under the terms of the Creative Commons Attribution Non-Commercial License (http://creativecommons.org/licenses/by-nc/2.5), which permits unrestricted non-commercial use, distribution, and reproduction in any medium, provided the original work is properly cited. -

Download (142Kb)

Volume 9 Supplement 1 July 2019 TALKS 13 Intracellular ion channels and transporters 15 RNA processing Table of Contents 16 Signal transduction PLENARY LECTURES 17 Mitochondria and signaling 18 DNA architecture Saturday 6 July 19 RNA transcription 3 Opening Plenary Lecture Monday 8 July Sunday 7 July 20 DNA editing and modification 3 IUBMB Lecture 22 RNA transport and translation 3 The FEBS Journal Richard Perham Prize Lecture 24 Single cell analysis and imaging 3 FEBS/EMBO Women in Science Award Lecture 25 Calcium and ROS signaling Monday 8 July 26 Sulfur metabolism and cellular regulation 27 Molecular neurobiology 4 FEBS Datta Lecture 29 RNA turnover 4 FEBS Sir Hans Krebs Lecture 30 Cytoskeleton and molecular mechanisms of motility Tuesday 9 July 31 Rare diseases 4 FEBS Theodor Bücher Lecture Tuesday 9 July 5 FEBS 2019 Plenary Lecture 32 Signaling in brain cancer Wednesday 10 July 34 Synthetic biopolymers for biomedicine 5 PABMB Lecture 35 Integrative approaches to structural and synthetic biology 5 EMBO Lecture 37 Induced pluripotent cells Thursday 11 July 39 Long noncoding RNA 40 Neurodegeneration 5 Closing Plenary Lecture 42 Cell therapy and regenerative medicine FEBS SPECIAL SESSIONS 44 Small noncoding RNA Sunday 7 July Wednesday 10 July 6 Gender issues in science 45 Proteins: structure, disorder and dynamics 47 Plant biotechnology Monday 8 July 48 Natural networks and systems 6 Education 1 – Creative teaching: effective learning in life sciences 50 RNA in pathogenesis and therapy education 51 Biochemistry, a success story Tuesday -

Accounts of Chemical Research 8 (1975) - 21, 22 {1-11}, 23 - 30 (1997)

A Accounts of Chemical Research 8 (1975) - 21, 22 {1-11}, 23 - 30 (1997) Acta Chemica Scandinavica 1 (1947) - 27 (1973) : Acta Chemica Scandinavica. 1974 - 1988 : split into Ser. A and Ser. B. A 28 (1974) - A 42 (1988) : Ser. A : Physical and Inorganic Chemistry B 28 (1974) - B 42 (1988) : Ser. B : Organic Chemistry and Biochemistry 43 (1989) : merged Acta Chemica Scandinavica. 1 (1947) - 51 (1997) Acta Phytotaxonomica et Geobotanica →APG * Advances in Analytical Chemistry and Instrumentation 1 (1960) - 11 (1973) * Advances in Biological and Medical Physics 10 (1965) - 17 (1980) * Advances in Carbohydrate Chemistry → Advances in Carbohydrate Chemistry and Biochemistry * Advances in Carbohydrate Chemistry and Biochemistry 1 (1945) - 23 (1968) : Advances in Carbohydrate Chemistry 1 (1945) - 61 (2007) 62 (2008) + : electronic journal * Advances in Catalysis 1 (1948) - 21 (1970) : Advances in Catalysis and Related Subjects 1 (1948) - 41 (1996) * Advances in Catalysis and Related Subjects → Advances in Catalysis * Advances in Chemical Engineering 1 (1956) - 23 (1996) * Advances in Chemical Physics 1 (1958) - 58, 60 - 93, 95 - 96 (1996) * Advances in Clinical Chemistry 1 (1958) - 31 (1994) * Advances in Drug Research 1 (1964) - 12 (1977) * Advances in Electrochemistry and Electrochemical Engineering 1 1 (1961) - 13 (1984) * Advances in Enzyme Regulation 1 (1963) - 33 (1993) * Advances in Enzymology * Advances in Enzymology and Related Subjects * Advances in Enzymology and Related Subjects of Biochemistry → Advances in Enzymology and Related Areas of Molecular Biology -

TportHMM: Predicting the Substrate Class of

TPORTHMM : PREDICTING THE SUBSTRATE CLASS OF TRANSMEMBRANE TRANSPORT PROTEINS USING PROFILE HIDDEN MARKOV MODELS Shiva Shamloo A thesis in The Department of Computer Science Presented in Partial Fulfillment of the Requirements For the Degree of Master of Computer Science Concordia University Montréal, Québec, Canada December 2020 © Shiva Shamloo, 2020 Concordia University School of Graduate Studies This is to certify that the thesis prepared By: Shiva Shamloo Entitled: TportHMM : Predicting the substrate class of transmembrane transport proteins using profile Hidden Markov Models and submitted in partial fulfillment of the requirements for the degree of Master of Computer Science complies with the regulations of this University and meets the accepted standards with respect to originality and quality. Signed by the final examining committee: Examiner Dr. Sabine Bergler Examiner Dr. Andrew Delong Supervisor Dr. Gregory Butler Approved Dr. Lata Narayanan, Chair Department of Computer Science and Software Engineering 20 Dean Dr. Mourad Debbabi Faculty of Engineering and Computer Science Abstract TportHMM : Predicting the substrate class of transmembrane transport proteins using profile Hidden Markov Models Shiva Shamloo Transporters make up a large proportion of proteins in a cell, and play important roles in metabolism, regulation, and signal transduction by mediating movement of compounds across membranes, but they are among the least characterized proteins due to their hydrophobic surfaces and lack of conformational stability. There is a need for tools that predict the substrates which are transported at the level of substrate class and the level of specific substrate. This work develops a predictor, TportHMM, using profile Hidden Markov Model (HMM) and Multiple Sequence Alignment (MSA). -

BIOINFORMATICS doi:10.1093/bioinformatics/btq499

Vol. 26 ECCB 2010, pages i409–i411 BIOINFORMATICS doi:10.1093/bioinformatics/btq499 ECCB 2010 Organization CONFERENCE CHAIR B. Comparative Genomics, Phylogeny, and Evolution Yves Moreau, Katholieke Universiteit Leuven, Belgium Martijn Huynen, Radboud University Nijmegen Medical Centre, The Netherlands PROCEEDINGS CHAIR Yves Van de Peer, Ghent University & VIB, Belgium Jaap Heringa, Free University of Amsterdam, The Netherlands C. Protein and Nucleotide Structure LOCAL ORGANIZING COMMITTEE Anna Tramontano, University of Rome ‘La Sapienza’, Italy Jan Gorodkin, University of Copenhagen, Denmark Yves Moreau, Katholieke Universiteit Leuven, Belgium Jaap Heringa, Free University of Amsterdam, The Netherlands D. Annotation and Prediction of Molecular Function Gert Vriend, Radboud University, Nijmegen, The Netherlands Yves Van de Peer, University of Ghent & VIB, Belgium Nir Ben-Tal, Tel-Aviv University, Israel Kathleen Marchal, Katholieke Universiteit Leuven, Belgium Fritz Roth, Harvard Medical School, USA Jacques van Helden, Université Libre de Bruxelles, Belgium Louis Wehenkel, Université de Liège, Belgium E. Gene Regulation and Transcriptomics Antoine van Kampen, University of Amsterdam & Netherlands Jaak Vilo, University of Tartu, Estonia Bioinformatics Center (NBIC) Zohar Yakhini, Agilent Laboratories, Tel-Aviv & the Technion, Peter van der Spek, Erasmus MC, Rotterdam, The Netherlands Haifa, Israel STEERING COMMITTEE F. Text Mining, Ontologies, and Databases Michal Linial (Chair), Hebrew University, Jerusalem, Israel Alfonso Valencia, National -

MPGM: Scalable and Accurate Multiple Network Alignment

MPGM: Scalable and Accurate Multiple Network Alignment Ehsan Kazemi¹ and Matthias Grossglauser² ¹Yale Institute for Network Science, Yale University ²School of Computer and Communication Sciences, EPFL Abstract Protein-protein interaction (PPI) network alignment is a canonical operation to transfer biological knowledge among species. The alignment of PPI-networks has many applications, such as the prediction of protein function, detection of conserved network motifs, and the reconstruction of species’ phylogenetic relationships. A good multiple-network alignment (MNA), by considering the data related to several species, provides a deep understanding of biological networks and system-level cellular processes. With the massive amounts of available PPI data and the increasing number of known PPI networks, the problem of MNA is gaining more attention in the systems-biology studies. In this paper, we introduce a new scalable and accurate algorithm, called MPGM, for aligning multiple networks. The MPGM algorithm has two main steps: (i) SEEDGENERATION and (ii) MULTIPLEPERCOLATION. In the first step, to generate an initial set of seed tuples, the SEEDGENERATION algorithm uses only protein sequence similarities. In the second step, to align remaining unmatched nodes, the MULTIPLEPERCOLATION algorithm uses network structures and the seed tuples generated from the first step. We show that, with respect to different evaluation criteria, MPGM outperforms the other state-of-the-art algorithms. In addition, we guarantee the performance of MPGM under certain classes of network models. We introduce a sampling-based stochastic model for generating k correlated networks. We prove that for this model if a sufficient number of seed tuples are available, the MULTIPLEPERCOLATION algorithm correctly aligns almost all the nodes. -
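
The two-step idea in the abstract above (seeds from sequence similarity, then structure-driven percolation) can be illustrated with a minimal sketch. This is not the authors' MPGM implementation: it is a simplified two-network percolation matcher, and the function names `generate_seeds` and `percolate`, the threshold `r`, the cutoff `min_score`, and the toy graphs are all assumptions made for illustration.

```python
from collections import defaultdict, deque


def generate_seeds(similarity, min_score):
    """Greedy one-to-one seed pairs from pairwise similarity scores (hypothetical helper)."""
    seeds, used_a, used_b = [], set(), set()
    # Visit candidate pairs from most to least similar.
    for (a, b), score in sorted(similarity.items(), key=lambda kv: -kv[1]):
        if score >= min_score and a not in used_a and b not in used_b:
            seeds.append((a, b))
            used_a.add(a)
            used_b.add(b)
    return seeds


def percolate(g1, g2, seeds, r=2):
    """Extend seed pairs: match (u, v) once r already-matched neighbor pairs support it."""
    matched = dict(seeds)            # node in g1 -> node in g2
    used_b = set(matched.values())   # nodes of g2 that are already matched
    marks = defaultdict(int)         # support count per candidate pair
    queue = deque(seeds)
    while queue:
        a, b = queue.popleft()
        for na in g1[a]:
            for nb in g2[b]:
                if na in matched or nb in used_b:
                    continue
                marks[(na, nb)] += 1
                if marks[(na, nb)] >= r:
                    matched[na] = nb
                    used_b.add(nb)
                    queue.append((na, nb))
    return matched


def build_graph(edges, prefix):
    """Adjacency lists for an undirected graph whose nodes are named prefix + index."""
    graph = defaultdict(list)
    for u, v in edges:
        graph[f"{prefix}{u}"].append(f"{prefix}{v}")
        graph[f"{prefix}{v}"].append(f"{prefix}{u}")
    return graph


# Toy data: two isomorphic five-node networks and made-up similarity scores.
EDGES = [(1, 2), (1, 3), (2, 3), (2, 4), (3, 4), (3, 5), (4, 5)]
G1, G2 = build_graph(EDGES, "a"), build_graph(EDGES, "b")
SIMILARITY = {("a1", "b1"): 0.9, ("a1", "b2"): 0.85, ("a2", "b2"): 0.8, ("a3", "b5"): 0.2}

SEEDS = generate_seeds(SIMILARITY, min_score=0.5)
ALIGNMENT = percolate(G1, G2, SEEDS, r=2)
```

In this sketch a higher `r` makes matches more conservative, at the cost of needing more seeds before the percolation can spread; the abstract's guarantee about a sufficient number of seed tuples refers to the authors' own model, not to this toy.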

You Are Cordially Invited to a Talk in the Edmond J. Safra Center for Bioinformatics Distinguished Speaker Series

You are cordially invited to a talk in the Edmond J. Safra Center for Bioinformatics Distinguished Speaker Series. The speaker is Prof. Alfonso Valencia, Spanish National Cancer Research Centre, CNIO. Title: "A mESC epigenetics network that combines chromatin localization data and evolutionary information" Time: Wednesday, November 11 2015, at 11:00 (refreshments from 10:50) Place: Green building seminar room, ground floor, Biotechnology Department. Host: Prof. Ron Shamir, [email protected], School of Computer Science Abstract: The description of biological systems in terms of networks is by now a well-accepted paradigm. Networks offer the possibility of combining complex information and the system to analyse the properties of individual components in relation to the ones of their interaction partners. In this context, we have analysed the relations between the known components of mouse Embryonic Stem Cells (mESC) epigenome at two orthogonal levels of information, i.e., co-localization in the genome and concerted evolution (co-evolution). Public repositories contain results of ChIP-Seq experiments for more than 60 Chromatin Related Proteins (CRPs), 14 for Histone modifications, and three different types of DNA methylation, including 5-Hydroxymethylcytosine (5hmc). All this information can be summarized in a network in which the nodes are the CRPs, histone marks and DNA modifications and the arcs connect components that significantly co-localize in the genome. In this network co-localization preferences are specific to chromatin states, such as promoters and enhancers. A second network was built by connecting proteins that are part of the mESC epigenetic regulatory system and show signals of co-evolution (concerted evolution of the CRPs protein families). -

ISCB's Initial Reaction to the New England Journal of Medicine

MESSAGE FROM ISCB ISCB’s Initial Reaction to The New England Journal of Medicine Editorial on Data Sharing Bonnie Berger, Terry Gaasterland, Thomas Lengauer, Christine Orengo, Bruno Gaeta, Scott Markel, Alfonso Valencia* International Society for Computational Biology, Inc. (ISCB) * [email protected] The recent editorial by Drs. Longo and Drazen in The New England Journal of Medicine (NEJM) [1] has stirred up quite a bit of controversy. As Executive Officers of the International Society of Computational Biology, Inc. (ISCB), we express our deep concern about the restrictive and potentially damaging opinions voiced in this editorial, and while ISCB works to write a detailed response, we felt it necessary to promptly address the editorial with this reaction. While some of the concerns voiced by the authors of the editorial are worth considering, large parts of the statement purport an obsolete view of hegemony over data that is neither in line with today’s spirit of open access nor furthering an atmosphere in which the potential of data can be fully realized. ISCB acknowledges that the additional comment on the editorial [2] eases some of the polemics, but unfortunately it does so without addressing some of the core issues. We still feel, however, that we need to contrast the opinion voiced in the editorial with what we consider the axioms of our scientific society, statements that lead into a fruitful future of data-driven science: • Data produced with public money should be public in benefit of the science and society • Restrictions to the use of public data hamper science and slow progress • Open data is the best way to combat fraud and misinterpretations Citation: Berger B, Gaasterland T, Lengauer T, Orengo C, Gaeta B, Markel S, et al. -

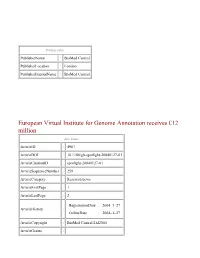

Springer A++ Viewer

PublisherInfo PublisherName : BioMed Central PublisherLocation : London PublisherImprintName : BioMed Central European Virtual Institute for Genome Annotation receives €12 million ArticleInfo ArticleID : 4907 ArticleDOI : 10.1186/gb-spotlight-20040127-01 ArticleCitationID : spotlight-20040127-01 ArticleSequenceNumber : 259 ArticleCategory : Research news ArticleFirstPage : 1 ArticleLastPage : 2 RegistrationDate : 2004–1–27 ArticleHistory : OnlineDate : 2004–1–27 ArticleCopyright : BioMed Central Ltd 2004 ArticleGrants : ArticleContext : 130594411 Genome Biology Email: [email protected] Janet Thornton, Director of the European Bioinformatics Institute (EBI; Hinxton, UK), is coordinator of the BioSapiens project. "The BioSapiens Network of Excellence ... will coordinate and focus excellent research in bioinformatics, by creating a Virtual Institute for Genome Annotation. The Institute will also establish a permanent European School of Bioinformatics, to train bioinformaticians and to encourage best practice in the exploitation of genome annotation data for biologists," she adds. The annotations will be integrated and made freely accessible via a web portal, and will be used to guide future experiments. Annotations will be integrated using the Open Source Distributed Annotation System (DAS) developed by Lincoln Stein and colleagues at Cold Spring Harbor Laboratory (USA) for exchanging genome annotations. "The development of methods, tools, and servers in close interaction with experimentalists is one feature that distinguishes the