MIPSpro 64-Bit Porting and Transition Guide

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

MIPSpro C++ Programmer's Guide

MIPSpro™ C++ Programmer’s Guide 007–0704–150 CONTRIBUTORS Rewritten in 2002 by Jean Wilson with engineering support from John Wilkinson and editing support from Susan Wilkening. COPYRIGHT Copyright © 1995, 1999, 2002 - 2003 Silicon Graphics, Inc. All rights reserved; provided portions may be copyright in third parties, as indicated elsewhere herein. No permission is granted to copy, distribute, or create derivative works from the contents of this electronic documentation in any manner, in whole or in part, without the prior written permission of Silicon Graphics, Inc. LIMITED RIGHTS LEGEND The electronic (software) version of this document was developed at private expense; if acquired under an agreement with the USA government or any contractor thereto, it is acquired as "commercial computer software" subject to the provisions of its applicable license agreement, as specified in (a) 48 CFR 12.212 of the FAR; or, if acquired for Department of Defense units, (b) 48 CFR 227-7202 of the DoD FAR Supplement; or sections succeeding thereto. Contractor/manufacturer is Silicon Graphics, Inc., 1600 Amphitheatre Pkwy 2E, Mountain View, CA 94043-1351. TRADEMARKS AND ATTRIBUTIONS Silicon Graphics, SGI, the SGI logo, IRIX, O2, Octane, and Origin are registered trademarks and OpenMP and ProDev are trademarks of Silicon Graphics, Inc. in the United States and/or other countries worldwide. MIPS, MIPS I, MIPS II, MIPS III, MIPS IV, R2000, R3000, R4000, R4400, R4600, R5000, and R8000 are registered or unregistered trademarks and MIPSpro, R10000, R12000, R1400 are trademarks of MIPS Technologies, Inc., used under license by Silicon Graphics, Inc. Portions of this publication may have been derived from the OpenMP Language Application Program Interface Specification. -

MIPS IV Instruction Set

MIPS IV Instruction Set Revision 3.2 September, 1995 Charles Price MIPS Technologies, Inc. All Rights Reserved RESTRICTED RIGHTS LEGEND Use, duplication, or disclosure of the technical data contained in this document by the Government is subject to restrictions as set forth in subdivision (c) (1) (ii) of the Rights in Technical Data and Computer Software clause at DFARS 52.227-7013 and / or in similar or successor clauses in the FAR, or in the DOD or NASA FAR Supplement. Unpublished rights reserved under the Copyright Laws of the United States. Contractor / manufacturer is MIPS Technologies, Inc., 2011 N. Shoreline Blvd., Mountain View, CA 94039-7311. R2000, R3000, R6000, R4000, R4400, R4200, R8000, R4300 and R10000 are trademarks of MIPS Technologies, Inc. MIPS and R3000 are registered trademarks of MIPS Technologies, Inc. The information in this document is preliminary and subject to change without notice. MIPS Technologies, Inc. (MTI) reserves the right to change any portion of the product described herein to improve function or design. MTI does not assume liability arising out of the application or use of any product or circuit described herein. Information on MIPS products is available electronically: (a) Through the World Wide Web. Point your WWW client to: http://www.mips.com (b) Through ftp from the internet site “sgigate.sgi.com”. Login as “ftp” or “anonymous” and then cd to the directory “pub/doc”. (c) Through an automated FAX service: Inside the USA toll free: (800) 446-6477 (800-IGO-MIPS) Outside the USA: (415) 688-4321 (call from a FAX machine) MIPS Technologies, Inc. -

Optimal Software Pipelining: Integer Linear Programming Approach

OPTIMAL SOFTWARE PIPELINING: INTEGER LINEAR PROGRAMMING APPROACH by Artour V. Stoutchinin School of Computer Science McGill University, Montréal A THESIS SUBMITTED TO THE FACULTY OF GRADUATE STUDIES AND RESEARCH IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF MASTER OF SCIENCE Copyright © by Artour V. Stoutchinin Acquisitions and Bibliographic Services, 395 Wellington Street, Ottawa ON K1A 0N4, Canada The author has granted a non-exclusive licence allowing the National Library of Canada to reproduce, loan, distribute or sell copies of this thesis in microform, paper or electronic formats. The author retains ownership of the copyright in this thesis. Neither the thesis nor substantial extracts from it may be printed or otherwise reproduced without the author's permission. Acknowledgements First, I thank my family - my Mom, Nina Stoutchinina, my brother Mark Stoutchinine, and my sister-in-law, Nina Denisova, for their love, support, and infinite patience that comes with having to put up with someone like myself. I also thank my father, Viatcheslav Stoutchinin, who is no longer with us. Without them this thesis could not have happened. -

Pluggable Interface Relays CR-M Miniature Relays

Data sheet Pluggable interface relays CR-M Miniature relays Pluggable interface relays are used for electrical isolation, amplification and signal matching between the electronic controlling, e.g. PLC (programmable logic controller), PC or field bus systems and the sensor / actuator level. They don’t use additional internal protective circuits and thus are overload-proof against short-time variations like current or voltage peaks. 2CDC 291 002 S0015 Characteristics Approvals – Standard miniature relays with mechanical status indication H ANSI/UL 508, CAN/CSA C22.2 No.14 – 13 different rated control supply voltages: F CAN/CSA C22.2 No.14 DC versions: 12 V, 24 V, 48 V, 60 V, 110 V, 125 V, 220 V J VDE (except 125 V DC devices) AC versions: 24 V, 48 V, 60 V, 110 V, 120 V, 230 V EAC – Output: 2 c/o (SPDT) contacts (12 A), 3 c/o (SPDT) R contacts (10 A) or 4 c/o (SPDT) contacts (6 A) P Lloyds Register (only devices with 4 c/o (SPDT) – Available with or without LED contacts) CCC – 4 c/o (SPDT) contact version optionally equipped with E gold contacts, LED and free wheeling diode L RMRS (except 60 V and 125 V devices) – Integrated test button for manual actuation and locking of output contacts (DC coil = blue, AC coil = orange) that Marks can be removed if necessary a CE – Cadmium-free contact material – Suited for logical and standard sockets – Width on socket: 27 mm (1.063 in) – Pluggable function modules: reverse polarity protection/ free wheeling diode, LED indication, RC elements, overvoltage protection Order data Packing unit = 10 pieces -

C++ Programmer's Guide

C++ Programmer’s Guide Document Number 007–0704–130 St. Peter’s Basilica image courtesy of ENEL SpA and InfoByte SpA. Disk Thrower image courtesy of Xavier Berenguer, Animatica. Copyright © 1995, 1999 Silicon Graphics, Inc. All Rights Reserved. This document or parts thereof may not be reproduced in any form unless permitted by contract or by written permission of Silicon Graphics, Inc. LIMITED AND RESTRICTED RIGHTS LEGEND Use, duplication, or disclosure by the Government is subject to restrictions as set forth in the Rights in Data clause at FAR 52.227-14 and/or in similar or successor clauses in the FAR, or in the DOD, DOE or NASA FAR Supplements. Unpublished rights reserved under the Copyright Laws of the United States. Contractor/manufacturer is Silicon Graphics, Inc., 1600 Amphitheatre Pkwy., Mountain View, CA 94043-1351. Autotasking, CF77, CRAY, Cray Ada, CraySoft, CRAY Y-MP, CRAY-1, CRInform, CRI/TurboKiva, HSX, LibSci, MPP Apprentice, SSD, SUPERCLUSTER, UNICOS, X-MP EA, and UNICOS/mk are federally registered trademarks and Because no workstation is an island, CCI, CCMT, CF90, CFT, CFT2, CFT77, ConCurrent Maintenance Tools, COS, Cray Animation Theater, CRAY APP, CRAY C90, CRAY C90D, Cray C++ Compiling System, CrayDoc, CRAY EL, CRAY J90, CRAY J90se, CrayLink, Cray NQS, Cray/REELlibrarian, CRAY S-MP, CRAY SSD-T90, CRAY SV1, CRAY T90, CRAY T3D, CRAY T3E, CrayTutor, CRAY X-MP, CRAY XMS, CRAY-2, CSIM, CVT, Delivering the power . ., DGauss, Docview, EMDS, GigaRing, HEXAR, IOS, ND Series Network Disk Array, Network Queuing Environment, Network Queuing Tools, OLNET, RQS, SEGLDR, SMARTE, SUPERLINK, System Maintenance and Remote Testing Environment, Trusted UNICOS, and UNICOS MAX are trademarks of Cray Research, Inc., a wholly owned subsidiary of Silicon Graphics, Inc. -

Computer Architectures an Overview

Computer Architectures An Overview PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Sat, 25 Feb 2012 22:35:32 UTC Contents Articles: Microarchitecture, x86, PowerPC, IBM POWER, MIPS architecture, SPARC, ARM architecture, DEC Alpha, AlphaStation, AlphaServer, Very long instruction word, Instruction-level parallelism, Explicitly parallel instruction computing. References: Article Sources and Contributors; Image Sources, Licenses and Contributors. Article Licenses: License. Microarchitecture In computer engineering, microarchitecture (sometimes abbreviated to µarch or uarch), also called computer organization, is the way a given instruction set architecture (ISA) is implemented on a processor. A given ISA may be implemented with different microarchitectures.[1] Implementations might vary due to different goals of a given design or due to shifts in technology.[2] Computer architecture is the combination of microarchitecture and instruction set design. Relation to instruction set architecture The ISA is roughly the same as the programming model of a processor as seen by an assembly language programmer or compiler writer. The ISA includes the execution model, processor registers, address and data formats among other things. The microarchitecture includes the constituent parts of the processor and how these interconnect and interoperate to implement the ISA. The microarchitecture of a machine is usually represented as (more or less detailed) diagrams that describe the interconnections of the various microarchitectural elements of the machine, which may be everything from single gates and registers, to complete arithmetic logic units (ALUs) and even larger elements. -

Electronic Products and Relays Selection Table Interface Relays CR-Range and R600 / R500 Range Pluggable Interface Relays

Electronic Products and Relays Selection Table Interface Relays CR-Range and R600 / R500 Range Pluggable Interface Relays CR-M Range Order number number Order 1SVR 405 611 R4000 1SVR 405 611 R1000 1SVR 405 611 R6000 1SVR 405 611 R4200 1SVR 405 611 R8000 1SVR 405 611 R8200 1SVR 405 611 R9000 1SVR 405 611 R0000 1SVR 405 611 R5000 1SVR 405 611 R7000 1SVR 405 611 R2000 1SVR 405 611 R3000 1SVR 405 612 R4000 1SVR 405 612 R1000 1SVR 405 612 R6000 1SVR 405 612 R4200 1SVR 405 612 R8000 1SVR 405 612 R8200 1SVR 405 612 R9000 1SVR 405 612 R0000 1SVR 405 612 R5000 1SVR 405 612 R5200 1SVR 405 612 R7000 1SVR 405 612 R2000 1SVR 405 612 R3000 1SVR 405 613 R4000 1SVR 405 613 R1000 1SVR 405 613 R6000 1SVR 405 613 R4200 1SVR 405 613 R8000 1SVR 405 613 R8200 1SVR 405 613 R9000 1SVR 405 613 R0000 1SVR 405 613 R5000 1SVR 405 613 R7000 1SVR 405 613 R2000 1SVR 405 613 R3000 1SVR 405 611 R4100 1SVR 405 611 R1100 1SVR 405 611 R6100 1SVR 405 611 R4300 1SVR 405 611 R8100 1SVR 405 611 R8300 1SVR 405 611 R9100 1SVR 405 611 R0100 1SVR 405 611 R5100 1SVR 405 611 R7100 1SVR 405 611 R2100 1SVR 405 611 R3100 1SVR 405 612 R4100 1SVR 405 612 R1100 1SVR 405 612 R6100 1SVR 405 612 R4300 1SVR 405 612 R8100 1SVR 405 612 R8300 1SVR 405 612 R9100 1SVR 405 612 R0100 1SVR 405 612 R5100 1SVR 405 612 R7100 1SVR 405 612 R2100 1SVR 405 612 R3100 1SVR 405 613 R4100 1SVR 405 613 R1100 1SVR 405 613 R6100 1SVR 405 613 R4300 1SVR 405 613 R8100 1SVR 405 613 R8300 1SVR 405 613 R9100 1SVR 405 613 R0100 1SVR 405 613 R5100 1SVR 405 613 R7100 1SVR 405 613 R2100 1SVR 405 613 R3100 1SVR 405 614 R1100 -

Parallel Processing Techniques: History and Usage of MIPS Approach for Implementation of Fast CPU

Vol-3 Issue-2 2017 IJARIIE-ISSN(O)-2395-4396 Parallel Processing Techniques: History and usage of MIPS approach for implementation of Fast CPU Drashti Joshi 1, Pooja Thakar 2 1 M.E. Student, Communication System Engineering, SAL Institute of Technology and Engineering Research, Gujarat, India. 2 Assistant Professor, Communication System Engineering, SAL Institute of Technology and Engineering Research, Gujarat, India. ABSTRACT Parallel processing is a term used to denote a class of techniques that provide simultaneous data processing in order to increase the computational speed of a computer system. Instead of processing each instruction sequentially, as in a conventional computer, a parallel processing system performs concurrent data processing to achieve faster execution time. The purpose of parallel processing techniques such as pipeline processing, vector processing and array processing is to speed up the computer's processing capability and increase its throughput, that is, the amount of processing that can be accomplished during a given interval of time. The amount of hardware increases with parallel processing, and with it the cost of the system. However, technological developments have reduced hardware cost to the point where parallel processing techniques are economically feasible. The advantages of parallel processing will be realized using the MIPS architecture, for efficient implementation and, of course, cost. Keyword: - Parallel processing, Pipelining, MIPS, and Interlock. 1. INTRODUCTION Basic computer architectures have been designed using two philosophies: 1) CISC (Complex Instruction Set Computer) 2) RISC (Reduced Instruction Set Computer). CISC supports a variety of addressing modes, a variable number of operands in various locations in its instruction set, and a wide variety of instructions with varying lengths and execution times, thus demanding a complex control unit, which occupies a large area on chip. -

Pluggable Interface Relays CR-M Miniature Relays Data Sheet

Pluggable interface relays CR-M Miniature relays Data sheet Features ½ Standard miniature relays with mechanical status indication ½ 12 different supply voltages: 1 DC versions: 12 V, 24 V, 48 V, 60 V, 110 V, 125 V, 220 V AC versions: 24 V, 48 V, 110 V, 120 V, 230 V ½ 2 Output contacts: 2 c/o (12 A), 3 c/o (10 A) or 4 c/o (6 A) ½ 2CDC 293 035 F0004 Available with or without LED 4 ½ 4-c/o version optionally equipped with gold contacts ½ Integrated test button for manual actuation and locking of output contacts (blue =DC, orange = AC) 3 ½ Cadmium-free contact material ½ Width on socket: 27 mm 5 ½ Suited for logical and standard sockets ½ Pluggable function modules: reverse polarity protection, LED indication, RC elements, overvoltage protection CR-M Approvals ቢ Interface relay ባ Pluggable function module n ቤ Socket l (not 125 V DC versions) ብ Holder j ቦ Marker e (only 4-c/o contact version) Marks g Ordering data Type Supply voltage Order code Interface relays without LED 2 c/o contacts: 250 V, 12 A CR-M012DC2 12 V DC 1SVR 405 611 R4000 CR-M024DC2 24 V DC 1SVR 405 611 R1000 CR-M048DC2 48 V DC 1SVR 405 611 R6000 CR-M060DC2 60 V DC 1SVR 405 611 R4200 CR-M110DC2 110 V DC 1SVR 405 611 R8000 CR-M125DC2 125 V DC 1SVR 405 611 R8200 CR-M220DC2 220 V DC 1SVR 405 611 R9000 CR-M024AC2 24 V AC 1SVR 405 611 R0000 CR-M048AC2 48 V AC 1SVR 405 611 R5000 CR-M110AC2 110 V AC 1SVR 405 611 R7000 CR-M120AC2 120 V AC 1SVR 405 611 R2000 CR-M230AC2 230 V AC 1SVR 405 611 R3000 Bold printed products = stocked products. -

MIPS R5000 Microprocessor Technical Backgrounder

MIPS R5000 Microprocessor Technical Backgrounder Performance: SPECint95 5.5, SPECfp95 5.5; Instruction set: MIPS-IV; ISA compatibility: MIPS-I, MIPS-II, and MIPS-III; Pipeline clock: 200 MHz; System interface clock: up to 100 MHz; Caches: 32 kB I-cache and 32 kB D-cache, each 2-way set associative; TLB: 48 dual entries, variable page size (4 kB to 16 MB in 4x increments); Power dissipation: 10 watts (peak) at maximum operating frequency; Supply voltage: min. 3.0 V, typ. 3.3 V, max. 3.6 V; Packaging: 272-pin cavity-down Ball Grid Array (BGA), 223-pin ceramic Pin Grid Array (PGA); Fabrication technology: vendor-specific process including 0.35 micron; Die size: 80-90 mm² (vendor dependent); Number of transistors: 3.6 million (4-transistor SRAM cell), 5.0 million (6-transistor SRAM cell); of these totals, logic transistors number 800,000. Overview This backgrounder introduces the R5000 microprocessor from MIPS Technologies, Inc. The information presented in this paper discusses new features in the R5000, i.e. how the R5000 differs from previous microprocessors from MIPS. This section provides general information on the R5000, including: • Introduction • The R5000 microprocessor • Packaging • Future upgrades • Block Diagram Introduction to RISC Reduced instruction-set computing (RISC) architectures differ from older complex instruction-set computing (CISC) architectures by streamlining instruction execution. The MIPS architecture, developed by MIPS Technologies, is firmly established as the leading RISC architecture today. On introduction, RISC microprocessors were used for high performance computing applications. Lately, these processors have found their way into the consumer electronics and embedded systems markets as well. -

Selection Guides and Order References for Pluggable Interface Relays CR-P

Pluggable interface relays CR-P, CR-M, CR-U range Selection guides and order references for pluggable interface relays CR-P, CR-M, CR-U range Pluggable pcb relays CR-P Pluggable universal relays CR-U Type Rated control Order code Type Rated control Order code supply voltage supply voltage Interface relays without LED 1 c/o contact: 250 V, 16 A Pluggable miniature relays CR-M without LED Pluggable miniature relays CR-M with LED 2 c/o contacts: 250 V, 10 A CR-P012DC1 12 V DC 1SVR 405 600 R4000 2CDC 291 047 F0004 2CDC 291 045 F0004 CR-U012DC2 12 V DC 1SVR 405 621 R4000 Type Rated control Order code Type Rated control Order code CR-P024DC1 24 V DC 1SVR 405 600 R1000 supply voltage supply voltage CR-U024DC2 24 V DC 1SVR 405 621 R1000 CR-P048DC1 48 V DC 1SVR 405 600 R6000 Interface relays without LED Interface relays with LED CR-U048DC2 48 V DC 1SVR 405 621 R6000 2 c/o contacts : 250 V, 12 A 2 c/o contacts: 250 V, 12 A CR-U110DC2 110 V DC 1SVR 405 621 R8000 CR-P110DC1 110 V DC 1SVR 405 600 R8000 2CDC 291 046 F0004 CR-M012DC2 12 V DC 1SVR 405 611 R4000 CR-M012DC2L 12 V DC 1SVR 405 611 R4100 Accessories for miniature relays CR-M CR-U220DC2 220 V DC 1SVR 405 621 R9000 CR-P024AC1 24 V AC 1SVR 405 600 R0000 CR-M024DC2 24 V DC 1SVR 405 611 R1000 CR-M024DC2L 24 V DC 1SVR 405 611 R1100 CR-U024AC2 24 V AC 1SVR 405 621 R0000 CR-P048AC1 48 V AC 1SVR 405 600 R5000 CR-M048DC2 48 V DC 1SVR 405 611 R6000 CR-M048DC2L 48 V DC 1SVR 405 611 R6100 Type Version Connection Order code CR-U048AC2 48 V AC 1SVR 405 621 R5000 CR-U110AC2 110 V AC 1SVR 405 621 R7000 CR-P110AC1 -

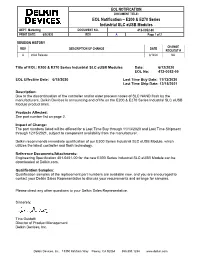

EOL Notification – E200 & E270 Series Industrial SLC eUSB Modules

EOL NOTIFICATION DOCUMENT TITLE: EOL Notification – E200 & E270 Series Industrial SLC eUSB Modules DEPT: Marketing DOCUMENT NO. 412-0052-00 PRINT DATE: 6/9/2020 REV A Page 1 of 2 REVISION HISTORY CHANGE REV DESCRIPTION OF CHANGE DATE REQUEST # A Initial Release 6/12/20 NA Title of EOL: E200 & E270 Series Industrial SLC eUSB Modules Date: 6/12/2020 EOL No: 412-0052-00 EOL Effective Date: 6/15/2020 Last Time Buy Date: 11/13/2020 Last Time Ship Date: 12/15/2021 Description: Due to the discontinuation of the controller and/or older process nodes of SLC NAND flash by the manufacturers, Delkin Devices is announcing end of life on the E200 & E270 Series Industrial SLC eUSB Module product lines. Products Affected: See part number list on page 2. Impact of Change: The part numbers listed will be offered for a Last Time Buy through 11/13/2020 and Last Time Shipment through 12/15/2021, subject to component availability from the manufacturer. Delkin recommends immediate qualification of our E300 Series Industrial SLC eUSB Module, which utilizes the latest controller and flash technology. Reference Documents/Attachments: Engineering Specification 401-0451-00 for the new E300 Series Industrial SLC eUSB Module can be downloaded at Delkin.com. Qualification Samples: Qualification samples of the replacement part numbers are available now, and you are encouraged to contact your Delkin Sales Representative to discuss your requirements and arrange for samples. Please direct any other questions to your Delkin Sales Representative. Sincerely, Tina Guidotti Director of Product Management Delkin Devices, Inc.