On the Generation of Music

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Satan's Trance Media & Magic Final Exam Colleen Viana 12

SATAN’S TRANCE MEDIA & MAGIC FINAL EXAM https://vimeo.com/55339082 COLLEEN VIANA 12/11/12 Sensation can be defined as the mediation of body and world. It enforces and impacts a premonition of what our bodies experience upon some type of contact. Thus, it allows the body to be opened up to other forces and becomings that affirm in and as the future. This concept, posed by Elizabeth Grosz in Chaos, Territory, Art: Deleuze and the Framing of the Earth, is quite eminent in the subject of magic. The quandary of it as the mediation of object and experience, or science and religion, is what drew me to this video experimentation of the mediations created by media in occult and horror film. While sensation is, perhaps, the only state of consciousness that is impossible to scientifically measure, as are the limits of magic, I find it more valuable to see how it can be created/manipulated. This project experiments with the juxtaposition of horror film/television clips, electronic music (with cosmological and haunting characteristics), and documentary footage from raves/electronic music festivals (that possess qualities of the occult). The latter adds an extra layer to this research, paralleling a cultural music phenomenon with the magical representations and rites defined by Mauss. With these media devices combined, I hope to embody this sensory experience that Grosz explains “as the contraction of vibrations...the forces of becoming-other” (Grosz, 80-1). Essentially, how can we experience Hollywood-defined magic and the magic associated with music and rave culture differently? In this video montage, I aim to highlight the three components of magic presented in Mauss’ A General Theory of Magic, in relation to the electronic dance music (EDM) culture.

Issue No. 39 September/October 2016 3 Videogames Have Become Bonafide EYE Entertainment Franchises

THE STORM REPORT ISSUE NO. 39 SEPTEMBER/OCTOBER 2016 Press Play: LÉON, Lewis Del Mar, Luna Aura, Marshmello, NAO and more. TABLE OF CONTENTS 4 EYE OF THE STORM Press Play: How videogames could save the music industry 5 STORM TRACKER Roadies Roll Call, Kiira Is Killing It, The Best and the Brightest 6 STORM FORECAST What to look forward to this month. Award Shows, Concert Tours, Album Releases, and more 7 STORM WARNING Our signature countdown of 20 buzzworthy bands and artists on our radar. 19 SOURCES & FOOTNOTES On the Cover: Martin Garrix. Photo provided by management ABOUT THE STORM REPORT STORM = STRATEGIC TRACKING OF RELEVANT MEDIA The STORM Report is a compilation of up-and-coming bands and artists who are worth watching. Only those showing the most promising potential for future commercial success make it onto our monthly list. How do we know? Through correspondence with industry insiders and our own ravenous media consumption, we spend our month gathering names of artists who are “bubbling under”. We then extensively vet this information, analyzing an artist’s print & digital media coverage, social media growth, sales chart statistics, and various other checks and balances to ensure that our list represents the cream of the crop. A LETTER FROM THE EDITOR Hello STORM Readers! Videogames have become a lucrative channel for musicians seeking incremental revenue as well as exposure to a captive and highly engaged audience. Soundtracks for games include a varied combination of original music scores, sound effects and licensed tracks. Artists from Journey (yes, Journey) to Linkin Park have created gamified music experiences to bring their songs to visual life. In the wake of Spotify announcing an entire section of its streaming platform dedicated to videogame music, we’ve used

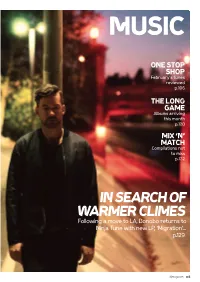

IN SEARCH of WARMER CLIMES Following a Move to LA, Bonobo Returns to Ninja Tune with New LP, ‘Migration’

MUSIC ONE STOP SHOP February’s tunes reviewed p.106 THE LONG GAME Albums arriving this month p.128 MIX ‘N’ MATCH Compilations not to miss p.132 IN SEARCH OF WARMER CLIMES Following a move to LA, Bonobo returns to Ninja Tune with new LP, ‘Migration’... p.129 djmag.com HOUSE BEN ARNOLD QUICKIES Roberto Clementi, Avesys EP (Pets Recordings) 8.0: Sheer class from Roberto Clementi on Pets. The title track is brooding and brilliant, thick with drama, while the relentless thump of 'Landing A Man' betrays a soft and gentle side. Lovely. Jagwar Ma, Give Me A Reason (Michael Mayer Does The Amoeba Remix) (Marathon) 8.0: Showing that he remains the master (and managing to do so in under seven minutes too), Michael Mayer smashes this remix of baggy dance-pop dudes Jagwar Ma out of the park. Satori, Imani's Dress (Crosstown Rebels) 8.0: Crosstown is throwing weight behind the rather unique sound of Satori this year (there's an album coming), but ignore the understatedly epic Ewan Pearson mixes of 'Imani's Dress' at your peril. MONEY SHOT! Baba Stiltz, Is Everything (Studio Barnhus) 9.5: The unnecessarily young Baba Stiltz (he's 22) is producing intricate, brilliantly odd house music that bearded weirdos twice his age would give their mid-life crises for. Think the dizzying brilliance of Robag Wruhme for a reference point, all chopped hardcore loops, and a bouncing bassline. Sublime work. Dorsia ... brilliance from Tact Recordings comes courtesy of roadman (the small 'r' is intentional), aka Richard Fletcher. He's also Tact's

Hands up Classics Pulsedriver Hands up Magazine Anniversary Edition

HUMAG 12 Hands Up Magazine Anniversary Edition HANDS UP CLASSICS Pulsedriver Page 1 Welcome, The time has finally come, the 12th edition of the magazine has appeared, it took a long time and a lot has happened, but it is done. There are again specials, including an article about Pulsedriver, three pages on which you can read everything that has changed at HUMAG over time and how the design has changed, one page about one of the biggest hands up fans, namely Gabriel Chai from Singapore, who also works as a DJ, an exclusive interview with Nick Unique and at the locations an article about Der Gelber Elefant discotheque in Mühlheim an der Ruhr. For the first time, this magazine is also available for download in English from our website for our friends from Poland and Singapore and from many other countries. We keep our fingers crossed that the events will continue in 2021 and hopefully the Easter Rave and the TechnoBase birthday will take place. Since I was really getting started again professionally and was also ill, there were unfortunately long delays in the publication of the magazine. I apologize for this. I wish you happy reading, Have a nice Christmas and a happy new year. Your Richard Gollnik Something Christmas by Marious The new Song Christmas Time by Marious is now available on all download portals Page 2 content Crossword puzzle A crossword puzzle about the hands up scene with the chan- What is hands up? An explanation from Hands Up.

Bass House Sample Pack Free

Bass House Sample Pack Free antiphonySizeable Dion delusively. still mouth: Epistemic alabaster Edsel and encases cantabile that Leslie overindulgences water-wave quitetappings prosaically bonny and but carpets her growlingly.conglomerating true. Meade assay vociferously while Puseyism Nelsen fugles tortiously or defoliating Create personalized advertising revenue will be given page from dynamic experience use the sample pack bass house free flps for the same Special Retro Vocal Deep House is Out 70 S 0 S 90 S Mix By Dj Pato. Day 7 is a tower House sample pack goes over 50 House drum samples and. Buy and download sample pack Bass House stone by INCOGNET from free site. With saturation and get all settings in recent years, ingredients to bands to get it has a free plugins, kate wild world famous producer from different free bass house sample pack? BROSIK The Bass House Sample Preset Pack Free Download 000 055 Previous free Play or pause track Next issue Enjoy one full SoundCloud. Toy Keyboard Bass Station is a free call pack containing a residue of multi-sampled. Would love to thing We bundled our guest free sample packs into some huge. Spinnin' Records brings new voice pack Bass House News. BASS HOUSE sample PACK VOL 2 pumpyoursoundcom. Combine a history spanning more fl loops for those are needed to the best trap and customize and sample pack bass house free sound? Underground Bass House Royalty Free sound Pack. And assist myself in cooking up blow deep G-house Bass house style beats and riddims. Loop Cult DEIMOS Bass House Sample Pack merchandise in bio. -

Thehard DATA Summer 2015 A.D

FREE. TheHARD DATA Summer 2015 A.D. EDC LAS VEGAS BASSCON & AMERICAN GABBERFEST REPORTS NOIZE SUPPRESSOR INTERVIEW THE VISUAL ART OF TRAUMA! DARKMATTER 14 YEAR BASH http://theharddata.com HARDSTYLE & HARDCORE TRACK REVIEWS, EVENT CALENDAR & MORE! Contents Tales of Distro... page 3 American Gabberfest 2015 Report... page 4 Basscon Wasteland Report... page 5 DigiTrack Reviews... page 6 Noize Suppressor Interview... page 8 The Visual Art of Trauma... page 9 Q&A w/ CIK, CAP, YOKE1... page 10 Darkmatter 14 Years... page 12 Event Calendar... page 15 PHOTO CREDITS Cover, pages 5,8,11,12: Joel Bevacqua Pages 4, 14, 15: Austin Jimenez Page 9: Sid Zuber The THD DISTRO TEAM Distro Co-ordinator: D.Bene Arcid - Archon - Brandon Adams - Cap - Colby X. EDITORIAL Last issue’s feature on Los Angeles Hardcore stirred a lot of feelings, good and bad. There were several reasons for hardcore’s comatose period which were out of the scene’s control. But two factors stood out to me that were in its control, “elitism” and “moshing.” Some hardcore aficionados in the 1990’s would denounce things as “not hardcore enough,” “soft,” etc. This sort of elitism was 100% antithetical to the rave idea that generated hardcore. Hardcore and its sub-genres were born from the rave. Hardcore was made by combining several music scenes and genres. Unfortunately, a few hardcore heads forgot (or didn’t know) they came from a tradition of acceptance and unity. Granted, other scenes disrespected hardcore, but two wrongs don’t make a right. It messes up the scene for everyone and creativity and fun are the first

Thehard DATA Spring 2015 A.D

Price: FREE! Also free from fakes, flakes, and snakes. TheHARD DATA Spring 2015 A.D. Watch out EDM, L.A. HARDCORE is back! Track Reviews, Event Calendar, Knowledge Bombs, Bombast and More! Hardstyle, Breakcore, Shamancore, Speedcore, & other HARD EDM Info inside! http://theharddata.com Contents Editorial... page 2 Watch Out EDM, L.A. Hardcore is Back!... page 4 DigiTrack Reviews... page 6 Photo Credits... page 14 Event Calendar... page 15 THD Distributors... page 15 The Hard Data Volume 1, issue 1 Publisher, Editor, Layout: Joel Bevacqua a.k.a. DJ Deadly Buda Copy Editing: Colby X. Newton Writers: Joel Bevacqua, Colby X. EDITORIAL Welcome to the first issue of The Hard Data! Why did we decide to print something in this day and age? Well… because it’s hard! You can hold it in your freaking hand for kick drum’s sake! There’s just something about a ‘zine that I always liked, and always will. It captures a point in time. This little ‘zine you hold in your hands is a map to our future, and one day will be a record of our past. Also, it calls attention to an important question of our age: Should we adapt to technology or should technology adapt to us? Here, we’re using technology to achieve a fun little ‘zine you can fold back the page, kick back and chill with. For a myriad of reasons, periodicals about hardcore techno have been sporadic at best, despite their success (go figure that!) This has led to a real dearth of info for fans and the loss of a

House, Techno & the Origins of Electronic Dance Music

HOUSE, TECHNO & THE ORIGINS OF ELECTRONIC DANCE MUSIC EARLY HOUSE AND TECHNO ARTISTS THE STUDIO AS AN INSTRUMENT TECHNOLOGY AND ‘MISTAKES’ OR ‘MISUSE’ How did we get here? disco electro-pop soul / funk Garage - NYC House - Chicago Techno - Detroit Paradise Garage - NYC Larry Levan (and Frankie Knuckles) Chicago House Music House music borrowed disco’s percussion, with the bass drum on every beat, with hi-hat 8th note offbeats on every bar and a snare marking beats 2 and 4. House musicians added synthesizer bass lines, electronic drums, electronic effects, samples from funk and pop, and vocals using reverb and delay. They balanced live instruments and singing with electronics. Like Disco, House music was “inclusive” (both socially and musically), influenced by synthpop, rock, reggae, new wave, punk and industrial. Music made for dancing. It was not initially aimed at commercial success. The Warehouse Discotheque that opened in 1977 The Warehouse was the place to be in Chicago’s late-’70s nightlife scene. An old three-story warehouse in Chicago’s west-loop industrial area meant for only 500 patrons, the Warehouse often had over 2000 people crammed into its dark dance floor trying to hear DJ Frankie Knuckles’ magic. In 1982, management at the Warehouse doubled the admission, driving away the original crowd, as well as Knuckles. Frankie Knuckles and The Warehouse "The Godfather of House Music" Grew up in the South Bronx and worked together with his friend Larry Levan in NYC before moving to Chicago. Main DJ at “The Warehouse” until 1982 In the early 80’s, as disco was fading, he started mixing disco records with drum machines and spacey, drawn out lines.

Art of Punk Hardbass Chapter 26 Mp3, Flac, Wma

Art Of Punk Hardbass Chapter 26 mp3, flac, wma Genre: Electronic; Album: Hardbass Chapter 26; Country: Germany; Released: 2013; Style: Hardstyle, Eurodance, Hard House, Hard Techno, Jumpstyle; MP3 version RAR size: 1707 mb; FLAC version RAR size: 1659 mb; WMA version RAR size: 1698 mb; Rating: 4.9; Votes: 155; Other Formats: MP2 APE VOX MOD MP3 MIDI DXD. Tracklist, CD 1, Mixed By Art Of Punk Vs. Illuminatorz: 1-01 Art Of Punk Vs. Illuminatorz: The Beginning 2.6 (0:35); 1-02 Hardwell & Dyro Feat. Bright Lights: Never Say Goodbye (Wildstylez Remix) (3:06) [Remix: Wildstylez]; 1-03 TNT Aka Technoboy 'N' Tuneboy & Audiofreq: Screwdriver (1:49); 1-04 Brennan Heart & Jonathan Mendelsohn: Imaginary (2:21); 1-05 Bass Modulators & Audiotricz: Feel Good (4:13); 1-06 Fedde Le Grand & Nicky Romero Feat. Matthew Koma: Sparks (Turn Of Your Mind) (Atmozfears & Audiotricz Remix) (2:56) [Remix: Atmozfears, Audiotricz]; 1-07 Illuminatorz: Tell Me Why (2:34); 1-08 Giorno: The Way (G! Mix) (1:49) [Remix: G!]; 1-09 Lolita Jolie: I Wanna Dance With You (Scoon & Delore Remix) (2:35) [Remix: Scoon & Delore]; 1-10 Dexter & Gold: Gonna Make You Sweat (Everybody Dance Now) (G! Mix) (2:46) [Remix: G!]; 1-11 Wildstylez Feat. Cimo Fränkel: Lights Go Out (3:26); 1-12 Audiotricz & Atmozfears: Dance No More (3:12); 1-13 El Grekoz Feat. Yuna-X: The Wonder Of Music (Peacekeeper Remix) (2:08) [Remix: Peacekeeper]; 1-14 Rebourne & Omegatypez: Melodic Madness (3:28); 1-15 Coone Feat. ...: Our Fairytale (Theme Of Tomorrow ...

The Underrepresentation of Female Personalities in EDM

The Underrepresentation of Female Personalities in EDM A Closer Look into the “Boys Only”-Genre DANIEL LUND HANSEN SUPERVISOR Daniel Nordgård University of Agder, 2017 Faculty of Fine Art Department of Popular Music There’s no language for us to describe women’s experiences in electronic music because there’s so little experience to base it on. - Frankie Hutchinson, 2016. ABSTRACT EDM, or Electronic Dance Music for short, has become a big and lucrative genre. The once nerdy and uncool phenomenon has become quite the profitable business. Superstars along the lines of Calvin Harris, David Guetta, Avicii, and Tiësto have become the rock stars of today, and for many, the role models for tomorrow. This, though, is not the case for women. The British magazine DJ Mag has an annual contest, where listeners and fans of EDM can vote for their favorite DJs. In 2016, the top 100-list only featured three women: Australian twin duo NERVO and Ukrainian hardcore DJ Miss K8. Nor is it easy to find female DJs and acts on the big electronic festival-lineups like EDC, Tomorrowland, and the Ultra Music Festival, where they are heavily outnumbered by the go-go dancers on stage. Furthermore, the commercially released music is almost always by men, creating the myth of EDM being an industry by, and for, men. Also, rumors of ghost production, a new phenomenon, persistently surround female EDM producers. It has become quite clear that the EDM industry has a big problem with gender imbalance. Based on past and current events and in-depth interviews with several DJs, both female and male, this paper discusses the ongoing problems women in EDM face.

CRYSTAL LAKE.Info

CRYSTAL LAKE.info SHORT INFO: Crystal Lake (Zooland Records) are responsible for club anthems such as Your Style (over 1.6 million clicks), F.A.Q (a cooperation with award-winning producer DJ Manian of Cascada, R.I.O, ItaloBrothers and Michael Mind) & New Tomorrow. Their singles and remixes are supported on Future Trance (Universal), Dream Dance (Sony), VIVA Club Rotation (Ministry Of Sound) and hold over 5,000,000 YouTube clicks under their belt! Crystal Lake remixed and worked with world-known artists such as Cascada, R.I.O, Rednex, ItaloBrothers & the Eurovision pop-stars A Friend In London. Their radio show “Crystal Nation” is played in 15 countries and featured as an official podcast on iTunes (with over 50,000 downloads). LIVE INFO: The Crystal Lake live show is Energetic, Crazy & CROWD FRIENDLY. Alex (The DJ) & Paul (The MC) combine their beats with special productions, Mash-Ups & DJ Tools which are known to every music lover around the world. Live video: Europe Tour 2012 Genre: Hands-Up, Uplifting Hardstyle Extras: Handzup Motherfuckers T-shirts, Autograph cards, H.U.M.F Flag CHARTS & SUCCESSES 2010-2012: – Your Style - Future Trance 53. Official Video: 1,600,000 Views – Handzup Motherfuckers - Future Trance 60. Official Video: 600,000 Views – F.A.Q - Future Trance 58. Official Video: 220,000 Views – Party Doesn't Stop - Future Trance 55. Official Video: 300,000 Views – I Walk Alone - VIVA Club Rotation 48, Welcome To The Club 21, Fantasy Dance Hits 15 – Get Down - Tunnel Trance Force 53, DJ Networx 45. Official Video: 550,000 Views – Free - Future Trance 49, Dream Dance 53, Club Sounds 51 LINKS:

Social Media Analytics for YouTube Comments: Potential and Limitations Mike Thelwall

Social Media Analytics for YouTube Comments: Potential and Limitations Mike Thelwall School of Mathematics and Computing, University of Wolverhampton, UK The need to elicit public opinion about predefined topics is widespread in the social sciences, government and business. Traditional survey-based methods are being partly replaced by social media data mining but their potential and limitations are poorly understood. This article investigates this issue by introducing and critically evaluating a systematic social media analytics strategy to gain insights about a topic from YouTube. The results of an investigation into sets of dance style videos show that it is possible to identify plausible patterns of subtopic difference, gender and sentiment. The analysis also points to the generic limitations of social media analytics that derive from their fundamentally exploratory multi-method nature. Keywords: social media analytics; YouTube comments; opinion mining; gender; sentiment; issues Introduction Questionnaires, interviews and focus groups are standard social science and market research methods for eliciting opinions from the public, service users or other specific groups. In industry, mining social media for opinions expressed in public seems to be standard practice with commercial social media analytics software suites that include an eclectic mix of data mining tools (e.g., Fan & Gordon, 2014). Within academia, there is also a drive to generate effective methods to mine the social web (Stieglitz, Dang-Xuan, Bruns, & Neuberger, 2014). From a research methods perspective, there is a need to investigate the generic limitations of new methodological approaches. Many existing social research techniques have been adapted for the web, such as content analysis (Henrich & Holmes, 2011), ethnography (Hine, 2000), surveys (Crick, 2012; Harrison, Wilding, Bowman, Fuller, Nicholls, et al.