Non-Printable and Special Characters? … BYTE Me!

File type: PDF; total pages: 16; size: 1020 KB


Recommended publications


Hieroglyphs for the Information Age: Images As a Replacement for Characters for Languages Not Written in the Latin-1 Alphabet Akira Hasegawa

Rochester Institute of Technology, RIT Scholar Works, Theses, Thesis/Dissertation Collections, 5-1-1999. Follow this and additional works at: http://scholarworks.rit.edu/theses

Recommended citation: Hasegawa, Akira, "Hieroglyphs for the information age: Images as a replacement for characters for languages not written in the Latin-1 alphabet" (1999). Thesis. Rochester Institute of Technology. This thesis is brought to you for free and open access by the Thesis/Dissertation Collections at RIT Scholar Works. It has been accepted for inclusion in Theses by an authorized administrator of RIT Scholar Works.

Hieroglyphs for the Information Age: Images as a Replacement for Characters for Languages not Written in the Latin-1 Alphabet, by Akira Hasegawa. A thesis project submitted in partial fulfillment of the requirements for the degree of Master of Science in the School of Printing Management and Sciences in the College of Imaging Arts and Sciences of the Rochester Institute of Technology, May 1999. Thesis Advisor: Professor Frank Romano, School of Printing Management and Sciences, Rochester Institute of Technology, Rochester, New York.

Certificate of Approval, Master's Thesis: This is to certify that the Master's Thesis of Akira Hasegawa, with a major in Graphic Arts Publishing, has been approved by the Thesis Committee as satisfactory for the thesis requirement for the Master of Science degree at the convocation of May 1999. Thesis Committee: Frank Romano, Thesis Advisor; Marie Freckleton, Graduate Program Coordinator; C.

Package 'PINSPlus'

August 6, 2020
Encoding: UTF-8
Type: Package
Title: Clustering Algorithm for Data Integration and Disease Subtyping
Version: 2.0.5
Date: 2020-08-06
Author: Hung Nguyen, Bang Tran, Duc Tran and Tin Nguyen
Maintainer: Hung Nguyen
Description: Provides a robust approach for omics data integration and disease subtyping. PINSPlus is fast and supports the analysis of large datasets with hundreds of thousands of samples and features. The software automatically determines the optimal number of clusters and then partitions the samples in a way such that the results are robust against noise and data perturbation (Nguyen et al. (2019) <DOI: 10.1093/bioinformatics/bty1049>, Nguyen et al. (2017) <DOI: 10.1101/gr.215129.116>).
License: LGPL
Depends: R (>= 2.10)
Imports: foreach, entropy, doParallel, matrixStats, Rcpp, RcppParallel, FNN, cluster, irlba, mclust
RoxygenNote: 7.1.0
Suggests: knitr, rmarkdown, survival, markdown
LinkingTo: Rcpp, RcppArmadillo, RcppParallel
VignetteBuilder: knitr
NeedsCompilation: yes
Repository: CRAN
Date/Publication: 2020-08-06 21:20:02 UTC
R topics documented: PINSPlus-package, AML2004, KIRC, PerturbationClustering, SubtypingOmicsData

PINSPlus-package: Perturbation Clustering for data INtegration and disease Subtyping
Description: This package implements clustering algorithms proposed by Nguyen et al. (2017, 2019). Perturbation Clustering for data INtegration and disease Subtyping (PINS) is an approach for integration of data and classification of diseases into various subtypes. PINS+ provides algorithms supporting both single data type clustering and multi-omics data type. PINSPlus is an improved version of PINS that allows users to customize the base clustering algorithm and perturbation methods.

INTERSKILL MAINFRAME QUARTERLY December 2011

Inside This Issue
- Retaining Data Center Skills and Knowledge
- Interskill Releases - December 2011
- Vendor Briefs
- Taking Care of Storage
- Learning Spotlight – Managing Projects
- Tech-Head Knowledge Test – Utilizing ISPF
- OPINION: The Case for a Fresh Technical Opinion
- TECHNICAL: Lost in Translation Part 1 - EBCDIC Code Pages
- MAINFRAME – Weird and Unusual!

Retaining Data Center Skills and Knowledge
By Greg Hamlyn

This is the final chapter of this four-part series that briefly explains the data center skills crisis and the pros and cons of implementing a coaching or mentoring program. In this installment we will look at some of the steps to implementing such a program in your data center.

If you missed the earlier installments, click the links below.
- Part 1 – The Data Center Skills Crisis
- Part 2 – How Can I Prevent Skills Loss in My Data Center?
- Part 3 – Barriers to Implementing a Coaching or Mentoring Program

Part Four – Implementing a Successful Coaching or Mentoring Program

The success of any project comes down to its planning. If you already believe that your data center can benefit from skills and knowledge transfer and that coaching and mentoring will assist with this, then outlining a solid (i.e.

… should consider is the GROW model
- Determine whether an external consultant should be used (include pros and cons)
- Create a basic timeline of the project
- Identify how you will measure the effectiveness of the project
- Provide some basic steps describing the coaching and mentoring activities
- Next phase if the pilot program is deemed successful

IPDS Technical Reference 1

TABLE OF CONTENTS
- Manuals for the IPDS card
- Notice
  - Important
- How to Read This Manual
  - Symbols
- About This Book
  - Audience
  - Terminology
- About IPDS
- Capabilities of IPDS

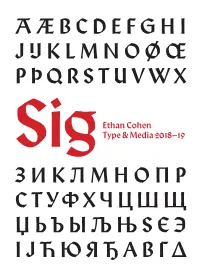

Sig Process Book

A Æ B C D E F G H I J IJ K L M N O Ø Œ P Þ Q R S T U V W X Y Z А Б В Г Ґ Д Е Ж З И К Л М Н О П Р С Т У Ф Х Ч Ц Ш Щ Џ Ь Ъ Ы Љ Њ Ѕ Є Э І Ј Ћ Ю Я Ђ Α Β Γ Δ

SIG: A Revival of Rudolf Koch's Wallau
Ethan Cohen, Type & Media 2018–19

Submitted as part of Paul van der Laan's Revival class for the Master of Arts in Type & Media course at Koninklijke Academie van Beeldende Kunsten (Royal Academy of Art, The Hague)

INTRODUCTION

"I feel such a closeness to William Morris that I always have the feeling that he cannot be an Englishman, he must be a German." (Rudolf Koch)

Project Overview

Sig is a revival of Rudolf Koch's Wallau Halbfette. My primary source material was the Klingspor Kalender für das Jahr 1933 (Klingspor Calendar for the Year 1933), a 17.5 × 9.6 cm book set in various cuts of Wallau. The Klingspor Kalender was an annual promotional keepsake printed by the Klingspor Type Foundry in Offenbach am Main that featured different Klingspor typefaces every year. This edition has a daily calendar set in Magere Wallau (Wallau Light) and an 18-page collection of fables set in 9 pt Wallau Halbfette (Wallau Semibold) with woodcut illustrations by Willi Harwerth, who worked as a draftsman at the Klingspor Type Foundry.

IBM Db2 High Performance Unload for z/OS User's Guide

IBM Db2 High Performance Unload for z/OS, Version 5.1: User's Guide (IBM SC19-3777-03)

Note: Before using this information and the product it supports, read the "Notices" topic at the end of this information.

Subsequent editions of this PDF will not be delivered in IBM Publications Center. Always download the latest edition from the Db2 Tools Product Documentation page.

This edition applies to Version 5 Release 1 of Db2 High Performance Unload for z/OS (product number 5655-AA1) and to all subsequent releases and modifications until otherwise indicated in new editions.

© Copyright IBM® Corporation 1999, 2021; Copyright Infotel 1999, 2021. All Rights Reserved.
US Government Users Restricted Rights – Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp.

Contents
- About this information
- Chapter 1. Db2 High Performance Unload overview
  - What does Db2 HPU do?
  - Db2 HPU benefits
  - Db2 HPU process and components

Package mathfont v. 1.6 User Guide, Conrad Kosowsky, December 2019

For easy, off-the-shelf use, type the following in your document preamble and compile using XeLaTeX or LuaLaTeX:

\usepackage[⟨font name⟩]{mathfont}

Abstract

The mathfont package provides a flexible interface for changing the font of math-mode characters. The package allows the user to specify a default Unicode font for each of six basic classes of Latin and Greek characters, and it provides additional support for Unicode math and alphanumeric symbols, including punctuation. Crucially, mathfont is compatible with both XeLaTeX and LuaLaTeX, and it provides several font-loading commands that allow the user to change fonts locally or for individual characters within math mode.

Handling fonts in TeX and LaTeX is a notoriously difficult task. Donald Knuth originally designed TeX to support fonts created with Metafont, and while subsequent versions of TeX extended this functionality to PostScript fonts, Plain TeX's font-loading capabilities remain limited. Many, if not most, LaTeX users are unfamiliar with the fd files that must be used in font declaration, and the minutiae of TeX's \font primitive can be esoteric and confusing. LaTeX2e's New Font Selection System (NFSS) implemented a straightforward syntax for loading and managing fonts, but LaTeX macros overlaying a TeX core face the same versatility issues as Plain TeX itself. Fonts in math mode present a double challenge: after loading a font either in Plain TeX or through the NFSS, defining math symbols can be unintuitive for users who are unfamiliar with TeX's \mathcode primitive.

DEC Text Processing Utility Reference Manual

Order Number: AA–PWCCD–TE
April 2001

This manual describes the elements of the DEC Text Processing Utility (DECTPU). It is intended as a reference manual for experienced programmers.

Revision/Update Information: This manual supersedes the DEC Text Processing Utility Reference Manual, Version 3.1 for OpenVMS Version 7.2.
Software Version: DEC Text Processing Utility Version 3.1 for OpenVMS Alpha Version 7.3 and OpenVMS VAX Version 7.3
The content of this document has not changed since OpenVMS Version 7.1.

Compaq Computer Corporation, Houston, Texas
© 2001 Compaq Computer Corporation

COMPAQ, VAX, VMS, and the Compaq logo Registered in U.S. Patent and Trademark Office. OpenVMS is a trademark of Compaq Information Technologies Group, L.P. Motif is a trademark of The Open Group. PostScript is a registered trademark of Adobe Systems Incorporated. All other product names mentioned herein may be the trademarks or registered trademarks of their respective companies. Confidential computer software. Valid license from Compaq or authorized sublicensor required for possession, use, or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under vendor's standard commercial license. Compaq shall not be liable for technical or editorial errors or omissions contained herein. The information in this document is provided "as is" without warranty of any kind and is subject to change without notice. The warranties for Compaq products are set forth in the express limited warranty statements accompanying such products.

ISO Basic Latin Alphabet

The ISO basic Latin alphabet is a Latin-script alphabet and consists of two sets of 26 letters, codified in various national and international standards[1] and used widely in international communication. The two sets contain the following 26 letters each:[1][2]

Uppercase Latin alphabet: A B C D E F G H I J K L M N O P Q R S T U V W X Y Z
Lowercase Latin alphabet: a b c d e f g h i j k l m n o p q r s t u v w x y z

History

By the 1960s it became apparent to the computer and telecommunications industries in the First World that a non-proprietary method of encoding characters was needed. The International Organization for Standardization (ISO) encapsulated the Latin script in its 7-bit character-encoding standard, ISO/IEC 646. To achieve widespread acceptance, this encapsulation was based on popular usage: the standard was based on the already published American Standard Code for Information Interchange, better known as ASCII, which included in the character set the 26 × 2 letters of the English alphabet. Later standards issued by the ISO, for example ISO/IEC 8859 (8-bit character encoding) and ISO/IEC 10646 (Unicode Latin), have continued to define the 26 × 2 letters of the English alphabet as the basic Latin script, with extensions to handle other letters in other languages.[1]

Terminology

Name for Unicode block that contains all letters

The Unicode block that contains the alphabet is called "C0 Controls and Basic Latin".
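The excerpt places the alphabet in Unicode's "C0 Controls and Basic Latin" block, which corresponds to the 7-bit ASCII range. As a minimal Python sketch (the helper `classify_ascii` is hypothetical, written only for illustration), the code below locates the two 26-letter sets and separates the non-printable C0 control codes (0x00–0x1F) and DEL (0x7F) from the printable characters. It assumes plain US-ASCII; national variants of ISO/IEC 646 reassign some punctuation positions, which this sketch ignores.

```python
import string

# The two 26-letter sets of the ISO basic Latin alphabet (Python's string module).
UPPER = string.ascii_uppercase  # 'A'..'Z', code points 0x41..0x5A
LOWER = string.ascii_lowercase  # 'a'..'z', code points 0x61..0x7A


def classify_ascii(code_point: int) -> str:
    """Classify a 7-bit (US-ASCII) code point; hypothetical helper for illustration."""
    if not 0 <= code_point <= 0x7F:
        raise ValueError(f"{code_point:#x} is outside the 7-bit range")
    if code_point < 0x20 or code_point == 0x7F:
        return "control"  # C0 control codes and DEL: non-printable
    if code_point == 0x20:
        return "space"
    ch = chr(code_point)
    if ch in UPPER:
        return "uppercase letter"
    if ch in LOWER:
        return "lowercase letter"
    return "other printable"  # digits and punctuation


if __name__ == "__main__":
    print(hex(ord(UPPER[0])), hex(ord(UPPER[-1])))  # 0x41 0x5a
    print(hex(ord(LOWER[0])), hex(ord(LOWER[-1])))  # 0x61 0x7a
    counts: dict = {}
    for cp in range(0x80):
        kind = classify_ascii(cp)
        counts[kind] = counts.get(kind, 0) + 1
    print(counts)
    # {'control': 33, 'space': 1, 'other printable': 42,
    #  'uppercase letter': 26, 'lowercase letter': 26}
```

Of the 128 seven-bit positions, 33 are non-printable controls and the remaining 95 (space plus 94 graphic characters) are printable; the 52 letters are the two contiguous runs shown above.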

Unicode and Code Page Support

Natural for Mainframes: Unicode and Code Page Support
Version 4.2.6 for Mainframes, October 2009

This document applies to Natural Version 4.2.6 for Mainframes and to all subsequent releases. Specifications contained herein are subject to change and these changes will be reported in subsequent release notes or new editions.

Copyright © Software AG 1979-2009. All rights reserved. The name Software AG, webMethods and all Software AG product names are either trademarks or registered trademarks of Software AG and/or Software AG USA, Inc. Other company and product names mentioned herein may be trademarks of their respective owners.

Table of Contents
1 Unicode and Code Page Support
2 Introduction
  - About Code Pages and Unicode
  - About Unicode and Code Page Support in Natural
  - ICU on Mainframe Platforms
3 Unicode and Code Page Support in the Natural Programming Language
  - Natural Data Format U for Unicode-Based Data
  - Statements
  - Logical
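The contents list distinguishes code pages from Unicode. A minimal Python sketch of why that distinction matters on a mainframe: the same characters map to different bytes under two EBCDIC code pages and under UTF-8, and a single-byte code page cannot represent most of Unicode at all. The codecs `cp037` (EBCDIC US/Canada) and `cp500` (EBCDIC International) are Python built-ins chosen only as examples; they are not taken from the Natural documentation, and the helper functions are hypothetical.

```python
from typing import Dict

# Example code pages (Python built-in codecs), chosen for illustration only.
CODECS = ("cp037", "cp500", "utf-8")


def encode_all(text: str) -> Dict[str, bytes]:
    """Encode text with each example codec and return the raw bytes per codec."""
    return {name: text.encode(name) for name in CODECS}


def round_trips(text: str, codec: str) -> bool:
    """True if text survives encode -> decode unchanged in the given codec."""
    try:
        return text.encode(codec).decode(codec) == text
    except UnicodeEncodeError:
        return False  # the code page has no byte for at least one character


if __name__ == "__main__":
    for name, raw in encode_all("A!").items():
        # 'A' is 0xC1 in both EBCDIC pages but 0x41 in UTF-8; '!' differs between the two EBCDIC pages
        print(f"{name:6} {raw.hex(' ')}")
    print(round_trips("日本", "cp037"))  # False: no kanji in a single-byte EBCDIC page
    print(round_trips("日本", "utf-8"))  # True
```

Data moved between systems without converting between such code pages arrives with the wrong characters, which is the practical problem that code page and Unicode support in a product has to address.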

United States Patent 5,689,723, Lim et al.

(45) Date of Patent: Nov. 18, 1997
(54) METHOD FOR ALLOWING SINGLE-BYTE CHARACTER SET AND DOUBLE-BYTE CHARACTER SET FONTS IN A DOUBLE BYTE CHARACTER SET CODE PAGE
(75) Inventors: Chan S. Lim, Potomac; Gregg A. Salsi, Germantown, both of Md.; Isao Nozaki, Yamato, Japan
(73) Assignee: International Business Machines Corp., Armonk, N.Y.
(21) Appl. No.: 13,271
(22) Filed: Feb. 3, 1993
(51) Int. Cl.: G09G 1/00
(52) U.S. Cl.: 395/805; 395/798
(58) Field of Search: 395/144-151, 395/792, 793, 798, 805, 774; 345/171, 127-130, 23-26, 143, 116, 192-195; 364/419

(56) References Cited
U.S. PATENT DOCUMENTS
5,091,878 2/1992 Nagasawa et al. 364/419
5,257,351 10/1993 Leonard et al. 395/150
5,287,094 2/1994 Yi 345/143
5,309,358 5/1994 Andrews et al. 364/419.01
5,317,509 5/1994 Caldwell 364/419.08
OTHER PUBLICATIONS
Japanese PUPA number 1-261774, Oct. 18, 1989, pp. 1-2.
Inside Macintosh, vol. VI, Apple Computer, Inc., Cupertino, CA, Second printing, Jun. 1991, pp. 15-4 through 15-39.
Karen Acerson, WordPerfect: The Complete Reference, Eds., pp. 177-179, 1988.
IBM Manual, "DOSBunsho (Language) Program II Operation Guide" (N:SH 18-2131-2) (Partial Translation of p. 79).

Primary Examiner: Phu K. Nguyen
Assistant Examiner: Cliff N. Vo
Attorney, Agent, or Firm: Edward H. Duffield

(57) ABSTRACT
The method of the invention allows both single-byte character set (SBCS) and double-byte character set (DBCS)
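The patent concerns code pages in which single-byte (SBCS) and double-byte (DBCS) characters coexist. The patent's own method is not reproduced here. As a hedged illustration of the general idea only, Shift_JIS (a widely used mixed single/double-byte Japanese encoding, available as Python's built-in `shift_jis` codec) shows how a byte stream is split into 1- and 2-byte characters by checking whether each byte falls in a lead-byte range. The helper names and the lead-byte ranges used (0x81–0x9F and 0xE0–0xFC) are assumptions of this sketch.

```python
from typing import List, Tuple


def byte_widths(text: str, codec: str = "shift_jis") -> List[Tuple[str, int]]:
    """Return (character, encoded length in bytes) for each character."""
    return [(ch, len(ch.encode(codec))) for ch in text]


def is_lead_byte(b: int) -> bool:
    """Assumed Shift_JIS lead-byte ranges: the next byte belongs to the same character."""
    return 0x81 <= b <= 0x9F or 0xE0 <= b <= 0xFC


def split_mixed(data: bytes) -> List[bytes]:
    """Split a mixed SBCS/DBCS byte string into per-character chunks, scanning left to right."""
    chunks, i = [], 0
    while i < len(data):
        width = 2 if is_lead_byte(data[i]) else 1
        chunks.append(data[i:i + width])
        i += width
    return chunks


if __name__ == "__main__":
    sample = "AB漢字ｶ"  # two Latin letters, two kanji, one half-width katakana
    print(byte_widths(sample))
    # [('A', 1), ('B', 1), ('漢', 2), ('字', 2), ('ｶ', 1)]
    encoded = sample.encode("shift_jis")
    print([chunk.decode("shift_jis") for chunk in split_mixed(encoded)])
    # ['A', 'B', '漢', '字', 'ｶ']
```

The scan must start from a known character boundary: a trail byte can fall in the single-byte range, so jumping into the middle of such a stream can misread character boundaries.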

Lecture 2: Variables and Primitive Data Types

MIT-AITI Kenya 2005
MIT-Africa Internet Technology Initiative ©2005

In this lecture, you will learn…
• What a variable is
  – Types of variables
  – Naming of variables
  – Variable assignment
• What a primitive data type is
• Other data types (e.g., String)

What is a Variable?
• In basic algebra, variables are symbols that can represent values in formulas.
• For example, the variable x in the formula f(x) = x² + 2 can represent any numeric value.
• Similarly, variables in a computer program are symbols for arbitrary data.

A Variable Analogy
• Think of a variable as an empty box that you can put values in.
• We can label the box with a name like "Box X" and reuse it many times.
• We can perform tasks on the box without caring about what's inside:
  – "Move Box X to Shelf A"
  – "Put item Z in box"
  – "Open Box X"
  – "Remove contents from Box X"

Variable Types in Java
• Variables in Java have a type.
• The type defines what kinds of values a variable is allowed to store.
• Think of a variable's type as the size or shape of the empty box.
• The variable x in f(x) = x² + 2 is implicitly a number.
• If x is a symbol representing the word "Fish", the formula doesn't make sense.

Java Types
• Integer Types:
  – int: Most numbers you'll deal with.
  – long: Big integers; science, finance, computing.
  – short: Small integers.
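The slide compares a type to the size of the box. Java's integral types are fixed-width two's-complement integers (short is 16 bits, int 32, long 64), so an n-bit box holds values from -2^(n-1) to 2^(n-1) - 1, and a value that does not fit silently wraps around. Python integers are unbounded, so this small Python sketch simulates the fixed widths; the helper names `java_range` and `wrap` are hypothetical.

```python
from typing import Tuple

# Bit widths of the Java integral primitive types mentioned on the slide.
JAVA_BITS = {"short": 16, "int": 32, "long": 64}


def java_range(type_name: str) -> Tuple[int, int]:
    """Smallest and largest value of a two's-complement type of the given width."""
    bits = JAVA_BITS[type_name]
    return -(2 ** (bits - 1)), 2 ** (bits - 1) - 1


def wrap(value: int, type_name: str) -> int:
    """Simulate Java wrap-around (overflow, or a narrowing cast for short) into the signed range."""
    bits = JAVA_BITS[type_name]
    offset = 2 ** (bits - 1)
    return (value + offset) % (2 ** bits) - offset


if __name__ == "__main__":
    for name in JAVA_BITS:
        low, high = java_range(name)
        print(f"{name:5} {low} .. {high}")
    # short -32768 .. 32767
    # int   -2147483648 .. 2147483647
    # long  -9223372036854775808 .. 9223372036854775807
    print(wrap(2147483647 + 1, "int"))  # -2147483648, like Integer.MAX_VALUE + 1 in Java
```

Choosing the smallest type whose range covers the expected values is the "box size" decision the slide describes; picking one too small produces wrap-around rather than an error.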