Differentiable Vector Graphics Rasterization for Editing and Learning

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Two-Dimensional Analysis of Digital Images Through Vector Graphic Editors in Dentistry: New Calibration and Analysis Protocol Based on a Scoping Review

International Journal of Environmental Research and Public Health Review Two-Dimensional Analysis of Digital Images through Vector Graphic Editors in Dentistry: New Calibration and Analysis Protocol Based on a Scoping Review Samuel Rodríguez-López 1,* , Matías Ferrán Escobedo Martínez 1 , Luis Junquera 2 and María García-Pola 2 1 Department of Operative Dentistry, School of Dentistry, University of Oviedo, C/. Catedrático Serrano s/n., 33006 Oviedo, Spain; [email protected] 2 Department of Oral and Maxillofacial Surgery and Oral Medicine, School of Dentistry, University of Oviedo, C/. Catedrático Serrano s/n., 33006 Oviedo, Spain; [email protected] (L.J.); [email protected] (M.G.-P.) * Correspondence: [email protected]; Tel.: +34-600-74-27-58 Abstract: This review was carried out to analyse the functions of three Vector Graphic Editor applications (VGEs) applicable to clinical or research practice, and through this we propose a two- dimensional image analysis protocol in a VGE. We adapted the review method from the PRISMA-ScR protocol. Pubmed, Embase, Web of Science, and Scopus were searched until June 2020 with the following keywords: Vector Graphics Editor, Vector Graphics Editor Dentistry, Adobe Illustrator, Adobe Illustrator Dentistry, Coreldraw, Coreldraw Dentistry, Inkscape, Inkscape Dentistry. The publications found described the functions of the following VGEs: Adobe Illustrator, CorelDRAW, and Inkscape. The possibility of replicating the procedures to perform the VGE functions was Citation: Rodríguez-López, S.; analysed using each study’s data. The search yielded 1032 publications. After the selection, 21 articles Escobedo Martínez, M.F.; Junquera, met the eligibility criteria. They described eight VGE functions: line tracing, landmarks tracing, L.; García-Pola, M. -

Svg2key Manual a Guide for Creating Shapes in Keynote

svg2key Manual A Guide for Creating Shapes in Keynote I. About svg2key is a simple command-line utility that extracts shapes from scalable vector graphic (SVG) files and imports them into Keynote, Apple’s new presentation program. By importing SVG shapes you can extend Keynote palette of shapes and use programs such as Illustrator, Omni Graffle and Inkscape as drawing tools for Keynote. II. System Requirements Mac OS X 10.3 or later Keynote 1 or later SVG editor (i.e. Inkscape, Illustrator, Omni Graffle, etc...) III. Installation Double-click the svg2key-0.2.dmg file to mount the disk image. Then drag the files over to your home folder. Launch the Terminal.app (in /Applications/Utilities) and type: chmod a+x svg2key Convert one of the sample SVG files to make sure everything works by typing: svg2key SVG_Examples/testfile.svg There will be a slight pause followed by: “Keynote file saved as Untitled.key”. To view the resulting file type: open Untitled.key IV. Usage svg2key [-f] [-o outputfile.key] file1.svg file2.svg ... Optional Flags: -o specify a output file name. The default file name is Untitled.key -f force svg2key to save Keynote file regardless of whether the file exists. -h display help and exit Examples: • Specify an output file using the -o flag svg2key -o outputfile.key file.svg if the file “output.key” exists you will be prompted for a new file name. To override the interaction mode you can use the -f flag like so: svg2key -f -o outputfile.key file.svg • Convert multiple svg files at once: svg2key file1.svg file2.svg file3.svg Here a separate slide will be created for each svg file. -

Effective Clipart Image Vectorization Through Direct Optimization of Bezigons

IEEE TRANSACTIONS ON VISUALIZATION AND COMPUTER GRAPHICS 1 Effective Clipart Image Vectorization Through Direct Optimization of Bezigons Ming Yang, Hongyang Chao, Chi Zhang, Jun Guo, Lu Yuan, and Jian Sun Abstract—Bezigons, i.e., closed paths composed of Bezier´ curves, have been widely employed to describe shapes in image vectorization results. However, most existing vectorization techniques infer the bezigons by simply approximating an intermediate vector representation (such as polygons). Consequently, the resultant bezigons are sometimes imperfect due to accumulated errors, fitting ambiguities, and a lack of curve priors, especially for low-resolution images. In this paper, we describe a novel method for vectorizing clipart images. In contrast to previous methods, we directly optimize the bezigons rather than using other intermediate representations; therefore, the resultant bezigons are not only of higher fidelity compared with the original raster image but also more reasonable because they were traced by a proficient expert. To enable such optimization, we have overcome several challenges and have devised a differentiable data energy as well as several curve-based prior terms. To improve the efficiency of the optimization, we also take advantage of the local control property of bezigons and adopt an overlapped piecewise optimization strategy. The experimental results show that our method outperforms both the current state-of-the-art method and commonly used commercial software in terms of bezigon quality. Index Terms—clipart vectorization, clipart tracing, bezigon optimization F 1 INTRODUCTION eration of intermediate polygons (Figure 1b) and con- sequently estimate bezigons (Figure 1c) that repro- MAGE vectorization, also known as image tracing, duce these polygons rather than the original image I is the process of converting a bitmap image into [1], [2], [4]. -

VECTOR IMAGES for Use with Coreldraw Graphics Suite X6

VECTOR IMAGES For use with CorelDRAW Graphics Suite X6 Table of Contents OVERVIEW 4 CREATING A NEW IMAGE 6 EDITING AN EXISTING IMAGE 11 USING POWERTRACE 15 LASER CUTTING A VECTOR IMAGE 19 VINYL CUTTING A VECTOR IMAGE 22 TROUBLESHOOTING 23 GLOSSARY 26 Overview CorelDRAW is a vector-based illustration program with tools for graph- ics, illustration, image tracing, photo editing, and more. CorelDRAW can be used to edit and create either vector or raster images. A raster or bitmap image is composed of individual pixels which can be seen as squares of color when magnified and let you display subtle changes in tones and colors. Vector objects, such as lines and shapes, vector text or vector groups, are composed of geometric characteristic and can eas- ily be edited. CorelDRAW is the base program for any files that go through the vinyl cutter or laser cutter in the CEID. It supports the following formats: .cdr (CorelDRAW native file); .eps, .pdf, .tiff, .jpg, and .gif. Other file types such as .ai, .svg, or .dxf, can be imported into CorelDRAW. 4 Vector images are better for logos, graphics, illustrations, and print layouts due to their resolution independence. Zooming in on a vector image Zooming in on a raster image Raster images are better for photorealism and color blending. Raster image photorealism Vector image photorealism 5 Creating a New Image Opening a new image: Create a new document by selecting File > New. In the Create a New Document dialog box, choose you canvas size, orientation, color mode, and DPI. The RGB color mode is more suited for digital images, while CMYK is typically used for printed images. -

Using Inkscape and Scribus to Increase Business Productivity

MultiSpectra Consultants White Paper Using Inkscape and Scribus to Increase Business Productivity Dr. Amartya Kumar Bhattacharya BCE (Hons.) ( Jadavpur ), MTech ( Civil ) ( IIT Kharagpur ), PhD ( Civil ) ( IIT Kharagpur ), Cert.MTERM ( AIT Bangkok ), CEng(I), FIE, FACCE(I), FISH, FIWRS, FIPHE, FIAH, FAE, MIGS, MIGS – Kolkata Chapter, MIGS – Chennai Chapter, MISTE, MAHI, MISCA, MIAHS, MISTAM, MNSFMFP, MIIBE, MICI, MIEES, MCITP, MISRS, MISRMTT, MAGGS, MCSI, MIAENG, MMBSI, MBMSM Chairman and Managing Director, MultiSpectra Consultants, 23, Biplabi Ambika Chakraborty Sarani, Kolkata – 700029, West Bengal, INDIA. E-mail: [email protected] Website: https://multispectraconsultants.com Businesses are under continuous pressure to cut costs and increase productivity. In modern businesses, computer hardware and software and ancillaries are a major cost factor. It is worthwhile for every business to investigate how best it can leverage the power of open source software to reduce expenses and increase revenues. Businesses that restrict themselves to proprietary software like Microsoft Office get a raw deal. Not only do they have to pay for the software but they have to factor-in the cost incurred in every instance the software becomes corrupt. This includes the fee required to be paid to the computer technician to re-install the software. All this creates a vicious environment where cost and delays keep mounting. It should be a primary aim of every business to develop a system where maintenance becomes automated to the maximum possible extent. This is where open source software like LibreOffice, Apache OpenOffice, Scribus, GIMP, Inkscape, Firefox, Thunderbird, WordPress, VLC media player, etc. come in. My company, MultiSpectra Consultants, uses open source software to the maximum possible extent thereby streamlining business processes. -

TRABAJO FIN DE GRADO Sistema Para La Vectorización De Imágenes

UNIVERSIDAD DE CASTILLA-LA MANCHA ESCUELA SUPERIOR DE INFORMÁTICA GRADO EN INGENIERÍA INFORMÁTICA TECNOLOGÍA ESPECÍFICA DE INGENIERÍA DE COMPUTADORES TRABAJO FIN DE GRADO Sistema para la vectorización de imágenes representadas en mapa de bits Dionisio Moreno Cañas Julio, 2018 UNIVERSIDAD DE CASTILLA-LA MANCHA ESCUELA SUPERIOR DE INFORMÁTICA Grupo Imaging. Leandro Guijarro Casado Departamento de Matemáticas. José Luis Espinosa Aranda TECNOLOGÍA ESPECÍFICA DE INGENIERÍA DE COMPUTADORES TRABAJO FIN DE GRADO Sistema para la vectorización de imágenes representadas en mapa de bits Autor(a): Dionisio Moreno Cañas Director(a): Leandro Guijarro Casado Director(a): José Luis Espinosa Aranda Julio, 2018 Sistema para la vectorización de imágenes representadas en mapa de bits © Dionisio Moreno Cañas, 2018 El escudo de Informática utilizado por este documento ha sido realizado por P. Moya, D. Villa e I. Díez, su inclusión debe respetar los derechos de autor y las licencias a las que se vea sometido. La copia y distribución de esta obra está permitida en todo el mundo, sin regalías y por cualquier medio, siempre que esta nota sea preservada. Se concede permiso para copiar y distribuir traducciones de este libro desde el español original a otro idioma, siempre que la traducción sea aprobada por el autor del libro y tanto el aviso de copyright como esta nota de permiso, sean preservados en todas las copias. Tribunal: Presidente: Vocal: Secretario: Fecha de defensa: Calificación: Presidente Vocal Secretario Fdo.: Fdo.: Fdo.: Dedicado a mis padres. Resumen n este Trabajo Fin de Grado (TFG) se desarrolla un sistema de vectorización de imágenes E representadas en mapa de bits para la empresa Indra Software Labs. -

Free Image Outline Tracer

Free image outline tracer Automatically trace photos and pictures into a stencil, pattern, line drawing, or sketch. Great for painting, wood working, stained glass, or other craft designs. Turn It into a Design. Automatically convert a picture to a PDF, DXF, AI or EPS vector drawing. Trace outer- or center-lines. If you have a color photo, put it through our free photo to drawing converter before vectorizing. Outline Centerline. Smoothness. SVG, DXF. Upload a bitmap image and we automatically figure out what settings to use and trace the image for you. Outline of the vector result overlaid on the bitmap. Autotracer is a free online image vectorizer. It can convert raster images like JPEGs, GIFs and PNGs to scalable vector graphics (EPS, SVG, AI and PDF). Trace text or outlines for the basis of a tattoo stencil. Welcome to Tattoo Tracer, this site lets you input an image and outputs a FREE Tattoo stencils maker. It traces the image (vector image) is free online photo editor can edit in the painting looks like one that led to the approximate color. Abstract Outlines Free Online Photo Editor. Photo, scketch and paint effects. For Tumblr, Facebook, Twitter or Your WebSite. Lunapics Image software free image. raster images into scalable vector files. The output formats include SVG, EPS, PS, PDF, DXF. Save yourself some time and give this free image autotracer a try. With frontline you are able to set trace options via GUI and preview trace results quickly. Support of image libraries as freeimage, gdk-pixbuf or paintlib(). The Trace Outline command converts thick black lines and areas into thin lines, usually one pixel wide. -

Engraving Images with a Laser Cutter

Engraving Images with a Laser Cutter IDeATe Laser Micro Part 5 Susan Finger 1. Using Adobe Illustrator to create vector files Download the image of the fern frond from Canvas. This picture was taken by Carl Davies and is made available under the Creative Common License. (I tried to take a picture of a fern in my garden but the picture was too busy to use.) http://www.scienceimage.csiro.au/image/3465/ Note that it is a jpeg (bitmapped image) which we are going to turn it into a vector image. You can do everything in this handout using Inkscape, which is free open source software. There is separate module on Canvas with all of the handouts if you prefer to use Inkscape. If it isn’t obvious how to do a particular step, use what I call “Google Help.” That is, search for something along the lines of “Inkscape change stroke color” and you will find many tutorials explaining the process. 1.1 Setting up an Illustrator Artboard Open Adobe Illustrator and select File→ New → Custom → Create. Accept the defaults for the custom file. We will change the size, orientation, etc. using the Artboard tool in a few minutes. Since we’ve been using inches for the units for all our files, let’s set the units to Inches through the Preferences window. Select File → Preferences → Units. While you have the Preferences window open, look at all the other settings you can change in your preferences. Next, we’ll change the size of the Artboard for this project. -

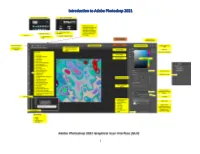

Introduction to Adobe Photoshop 2021

Introduction to Adobe Photoshop 2021 1 The Training Session: How to Get the Most out of the Training Approaching the training. An ideal way to use the training is to think about how you use Photoshop (or think about what you use it for in the workplace)… then pay attention to the capabilities of the software tool. Do not try to memorize the sequences. But try to remember the general name of the capability or the function. Remember that there are 3-4 at least different ways to achieve a particular objective in Adobe Photoshop. Interactions encouraged. Please ask questions or make comments as they arise…and are constructive for the group. Post-training. Then, after the training, try to get as much practice as possible using the software. It helps to have actual projects to work on. Use the context-sensitive help with the search query (top right of the Adobe Photoshop workspace) to find where tools are. And if you have tougher challenges, go to the Google with questions. Troubleshooting yourself makes you better at your work. Hour 1: Fidelity to an Original Image • Selecting a work space (to define toolbar and properties panels): Essentials (the simplest, often to start); 3D (soon to be deprecated because of incompatibility with current OSes…and no plans to update); Graphic and Web; Motion; Painting; Photography; and customized “New Workspace” • Engaging digital images: o Opening a digital image (and some types of digital image formats) o Cropping an image o Changing image resolution (to avoid pixelation and avoid aliasing and to enable scaling), through upsampling and pixel interpolation (but ideally would have the best original image to work from for meaningful details) o Resizing an image without affecting aspect ratio (or stretching the image); delinking height and width to enable changing aspect ratio o Setting color outputs (RGB or CMYK?.. -

Image Design and Animation

COURSE MATERIAL Image Design and Animation National Open University of Nigeria Faculty of Sciences, Department of Computer Science Copyright This course has been developed as part of the collaborative advanced ICT course development project of the Commonwealth of Learning (COL). COL is an intergovernmental organization created by Commonwealth Heads of Government to promote the development and sharing of open learning and distance education knowledge, resources and technologies. The National Open University of Nigeria (NOUN) is a full-fledged, autonomous and accredited public University. It offers its certificate, diploma, degree and postgraduate courses through the open and distance learning system which includes various means of communication such as face-to-face, broadcasting, telecasting, correspondence, seminars, e-learning as well as a blended mode. The NOUN’s academic programmes are quality-assured and centrally regulated by the National Universities Commission (NUC). © 2017 by the Commonwealth of Learning and The National Open University of Nigeria. Except where otherwise noted, Image Design and Animation is made available under Creative Commons Attribution-ShareAlike 4.0 International (CC BY-SA 4.0) License: https://creativecommons.org/licenses/by-sa/4.0/legalcode. For the avoidance of doubt, by applying this license the Commonwealth of Learning does not waive any privileges or immunities from claims that it may be entitled to assert, nor does the Commonwealth of Learning submit itself to the jurisdiction, courts, legal processes or laws of any jurisdiction. The ideas and opinions expressed in this publication are those of the author/s; they are not necessarily those of Commonwealth of Learning and do not commit the organization. -

Single Image Super-Resolution Using Vectorization and Texture Synthesis

Single Image Super-resolution using Vectorization and Texture Synthesis Kaoning Hu1, Dongeun Lee1 and Tianyang Wang2 1Department of Computer Science and Information Systems, Texas A&M University - Commerce, Commerce, Texas, U.S.A. 2Department of Computer Science and Information Technology, Austin Peay State University, Clarksville, Tennessee, U.S.A. Keywords: SISR, Image Vectorization, Texture Synthesis, KS Test. Abstract: Image super-resolution is a very useful tool in science and art. In this paper, we propose a novel method for single image super-resolution that combines image vectorization and texture synthesis. Image vectorization is the conversion from a raster image to a vector image. While image vectorization algorithms can trace the fine edges of images, they will sacrifice color and texture information. In contrast, texture synthesis techniques, which have been previously used in image super-resolution, can reasonably create high-resolution color and texture information, except that they sometimes fail to trace the edges of images correctly. In this work, we adopt the image vectorization to the edges of the original image, and the texture synthesis based on the Kolmogorov–Smirnov test (KS test) to the non-edge regions of the original image. The goal is to generate a plausible, visually pleasing detailed higher resolution version of the original image. In particular, our method works very well on the images of natural animals. 1 INTRODUCTION on the test set of low-resolution images to create a high-resolution image (Yang et al., 2019; Zhang et al., Image super-resolution is a technique that enhances 2019). With deep neural networks, they can perform the resolution of digital images. -

DIAGRAM SVG RPT-NBP FINAL.Docx

Assessments of Raster-‐to-‐Vector (SVG) Conversion software and 3D Printers for Tactile Graphics By Brian Mac Donald, National Braille Press, and Robert Hertig, Engineer-‐Northeastern University Executive Summary: The National Braille Press (NBP) subcontract is to research and assess SVG conversion software (from bitmap or raster images to SVG) to determine what is best overall choice for DIAGRAM’s future partners (content providers, publishers, authors, teachers and students). The goal is to identify a version that is affordable, and easy to use for publishers and individual content providers, so that they can incorporate SVGs in materials at the beginning of the production cycle. Similarly, this tool could be used for downstream accessibility providers to convert images to an SVG, and through simple software conversions, one can manipulate these images for tactile graphic renderings. Once a good conversion tool is identified, it will be easier for DIAGRAM to reach out and engage content providers to consider incorporating SVGs at the beginning of the production cycle, through the development of a process that is logical, is free or affordable, and is easy to implement. After filtering through much SVG software, we selected five SVG conversion programs that were compared based on ease-of-use, cost, editing features, and quality of SVG output, by converting two images representing different types of textbook diagrams. A second component of the subcontract is to provide an update on the potential use of 3D printers in the production of tactile graphics .Currently there is an explosion in the popularity of 3D printers, for schools and hobbyists, and this tool has the potential to be used for the development of tactile graphics for tests and textbooks.