Independent Reporter A Halcrow Group Limited

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

31645 Highspeed Rail Bill.Indd

31645 HighSpeed Rail Bill Modified: 26 July 2013 4:45 AM. Public Bill Committee HIGH SPEED RAIL (PREPARATION) BILL WRITTEN EVIDENCE. PUBLISHED BY AUTHORITY OF THE HOUSE OF COMMONS, LONDON – THE STATIONERY OFFICE LIMITED. PBC (Bill 010) 2013 - 2014. © Parliamentary Copyright House of Commons 2013. This publication may be reproduced under the terms of the Open Parliament Licence, which is published at www.parliament.uk/site-information/copyright/ Enquiries to the Office of Public Sector Information, Kew, Richmond, Surrey TW9 4DU; e-mail: [email protected]

Distributed by TSO (The Stationery Office) and available from: Online: www.tsoshop.co.uk. Mail, Telephone, Fax & E-mail: TSO, PO Box 29, Norwich NR3 1GN; Telephone orders/General enquiries: 0870 600 5522; Order through the Parliamentary Hotline Lo-call 0845 7 023474; Fax orders: 0870 600 5533; E-mail: customer.services@tso.co.uk; Textphone: 0870 240 3701. The Houses of Parliament Shop: 12 Bridge Street, Parliament Square, London SW1A 2JX; Telephone orders: 020 7219 3890; General enquiries: 020 7219 3890; Fax orders: 020 7219 3866; Email: shop@parliament.uk; Internet: http://www.shop.parliament.uk. TSO@Blackwell and other Accredited Agents.

High Speed Rail (Preparation) Bill: Written evidence. Contents: Camden London Borough Council (HSR 01); Dr Paul Hoad (HSR 02); Dr Chris Eaglen LLB (HSR 03); Andrew Bodman (HSR 04); Wendover HS2 action group (WHS2) (HSR 05); HS2 Action Alliance (HSR 06); Greengauge -

Gender Pay Gap Report 2019

Gender Pay Gap Report 2019 for England, Wales and Scotland. JACOBS | GENDER PAY GAP REPORT 2019. This document represents a full report of Jacobs 2019 Gender Pay Gap (GPG) and meets all statutory requirements.

Foreword. We are pleased to present our latest Gender Pay Gap Report. Over the past 12 months, inclusion and diversity (I&D) has continued to be at the heart of everything we do. Since our last U.K. Gender Pay Gap Report in April 2019, we’ve undertaken an extensive rebranding exercise, and I&D remains central to our brand, our values and our strategy. ‘We live inclusion’ is a core value, demonstrating our commitment to embracing all perspectives and collaborating to make a positive impact.

We took a closer look at the diversity of the top level of our company, appointing the most diverse board in our company’s history and increasing the number of women on our Executive Leadership Team. We have 27% women on our board of directors – ultimately achieving overall board diversity of 45%. We also achieved executive team diversity of 75% (with six of eight positions held by women or people from an ethnic background).

We have eight thriving employee networks that have a shared mission to celebrate and develop Jacobs’ diverse employee population, provide career enriching development opportunities for all levels of talent, assist in the attraction and retention of top diverse talent, facilitate innovation at Jacobs, and contribute to the communities in which we live and work. We are proud of our ranking in the top quartile of Forbes Best Employer for Diversity 2020, -

Chiltern Main Line: London-Birmingham

Chiltern Main Line: London-Birmingham. Route description for the Chiltern Main Line London-Birmingham. Quote from Aerosoft: The route from London to Birmingham is known as the Chiltern Main Line and runs north-west from the London terminus, Marylebone, to Birmingham Snow Hill. This highly accurate Chiltern Main Line route takes you about 112 miles from London to Birmingham through 40 detailed stations, and offers numerous custom buildings and landmarks.

Route features: Route class: Modern; Route length: approx. 112 miles; Beautiful, detailed and impressive landscapes from London Marylebone to Birmingham Snow Hill; 14 standard scenarios included; Quick Drive capable; 40 individually built stations; Many buildings and objects.

Stations: London Marylebone, West Hampstead* (London Underground), Kilburn* (London Underground), Willesden Green* (London Underground), Dollis Hill* (London Underground), Neasden* (London Underground), Wembley Stadium, Sudbury & Harrow Road, Sudbury Hill Harrow, Northolt Park, South Ruislip* (London Underground), Ruislip Gardens* (London Underground), West Ruislip, Denham, Denham Golf Club, Gerrards Cross, Seer Green and Jordans, Beaconsfield, High Wycombe, Saunderton, Princes Risborough, Haddenham and Thame Parkway, Bicester Village, Bicester North, Kings Sutton, Banbury, Leamington Spa, Warwick, Warwick Parkway, Hatton, Lapworth, Dorridge, Widney Manor, Solihull, Olton, Acocks Green, Tyseley, Small Heath, Birmingham Moor Street, Birmingham Snow Hill.

*Note: The stations marked with an asterisk, belonging to the London -

Annual Report 2006

JOHN LAING plc annual report 2006: providing value… widening markets. Registered Office: Allington House, 150 Victoria Street, London SW1E 5LB England. Registered No. 1345670. Tel: +44 (0)20 7901 3200. Fax: +44 (0)20 7901 3520. email: [email protected] www.laing.com. Further copies of this statement are available from the address below or by visiting the Company’s website at www.laing.com

Forward-looking statements. Certain statements contained in this document, including those in the “Operating and Financial Review” constitute statements that are, or may be deemed to be, “forward-looking statements”. In some cases, these forward-looking statements can be identified by the use of forward-looking terminology, including the terms “believes”, “estimates”, “forecasts”, “plans”, “anticipates”, “targets”, “aims”, “continues”, “expects”, “intends”, “may”, “will”, “would” or “should” or, in each case, their negative or other variations or comparable terminology. These forward-looking statements involve known and unknown risks, uncertainties and other factors and include statements regarding the Group’s intentions, beliefs or current expectations concerning, among other things, the Group’s results of operations, financial condition, liquidity, prospects, growth, strategies and the industries in which the Group operates. By their nature, forward-looking statements involve risk and uncertainty because they relate to future events and circumstances. A number of factors could cause actual results and developments to differ materially from those expressed or implied by the forward-looking statements including, without limitation: conditions in the operating margins, anticipated investments and capital expenditures, changing business or other market conditions and general economic conditions. -

THAMES HUB: An Integrated Vision for Britain

THAMES HUB: An integrated vision for Britain. “We need to recapture the foresight and political courage of our 19th century forebears if we are to establish a modern transport and energy infrastructure in Britain for this century and beyond.” Lord Foster of Thames Bank OM

Contents: Introduction; Executive summary; Infrastructure challenges for Britain; An integrated approach; Improving Britain’s trade with the world; Avoiding London’s congestion; Protecting against floods; Creating a new global aviation hub; Generating clean energy; Integrating infrastructure; Balancing the regions; Moving forward; The team.

Introduction. Britain can no longer trade on its inadequate and aged infrastructure. A fast-growing population and an evolving global economy demand that the quality of the UK’s infrastructure be improved and its capacity increased dramatically.

This report describes proposals for a Spine which combines energy, communications and data running the length of the UK. The Spine is fed by a Thames Hub, which brings together a new river barrier and crossing, an international airport, shipping and rail complex. It represents a holistic vision for infrastructure development in Britain. The Spine is unique as a project, in both its conception and ambition.

That will also require political imagination and joined-up decision making. The challenges are also opportunities. This report sets out how these opportunities can be explored and how creative, environmentally sensitive solutions can be devised to serve Britain’s long-term infrastructure needs. The study was instigated and funded by architects, Foster + Partners, infrastructure -

2012-02-01.Pdf

CONTENTS February 2012

On the Cover: Two Become One. CH2M HILL, Halcrow combine forces. By Jim Rush

Features: Advanced Assessment. New technology can help reduce risk of damage to structures. By Thomas A. Winant. Threading the Eye. Jay Dee/Coluccio JV completes Brightwater contract. By Jack Burke. Miami-Dade Government Cut Pipeline Replacement. Microtunneling, HDD used to replace shallow utility mainlines. By Robin Dill, Ken Watson and Eduardo A. Vega. Technical Paper: Ventilating Partially Submerged Subway Stations. By Rob States, Dan McKinney and Bruce Dandie. The Big Bore: USA Latest to Use Large-diameter TBM for Highway Project. By Jim Rush

Columns: Editor’s Message

Departments: Business Briefs; Global News; UCA of SME Newsletter; Upcoming Projects; Calendar; Ad Index

TBM: Tunnel Business Magazine (ISSN 1553-2917) is published six times per year. Copyright 2011, Benjamin Media Inc., P.O. Box 190, Peninsula, OH 44264. USA All rights reserved. No part of this publication may be reproduced or transmitted by any means without written permission from the publisher. One year subscription rates: complimentary in the United States and Canada, and $69 in other foreign countries. Single copy rate: $10. Subscriptions and classified advertising should be addressed to the Peninsula office. POSTMASTER: send Changes of Address to TBM: Tunnel Business Magazine, P.O. Box 190, Peninsula OH 44264 USA. Canadian Subscriptions: Canada Post Agreement Number 7178957. Send change address information and blocks of undeliverable copies to Canada Express; 7686 Kimble Street, Units 21 & 22, Mississauga, ON L5S 1E9 Canada -

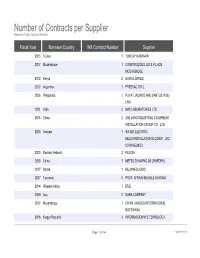

Number of Contracts Per Supplier Based on Major Contract Awards

Number of Contracts per Supplier Based on Major Contract Awards (Page 1 of 564, 09/27/2021)

| Fiscal Year | Borrower Country | WB Contract Number | Supplier | Total Contract Amount (USD) |
|---|---|---|---|---|
| 2015 | Turkey | 1 | TUNCAY KARAMAN | 17831.00 |
| 2017 | Mozambique | 1 | CONSTRUÇÕES JJR & FILHOS MOÇAMBIQUE | 6059207.00 |
| 2012 | Kenya | 2 | DAWA LIMITED | 1270093.00 |
| 2012 | Argentina | 1 | PRESVAC S.R.L. | 2113218.00 |
| 2006 | Philippines | 1 | PUYAT JACINTO AND SANTOS (PJS) LAW | 100352.00 |
| 2001 | India | 2 | MAC LABORATORIES LTD. | 345198.00 |
| 2015 | China | 2 | ZHEJIANG INDUSTRIAL EQUIPMENT INSTALLATION GROUP CO., LTD. | 3407309.00 |
| 2008 | Vietnam | 1 | WATER ELECTRIC MECH.INSTALLATION & CONST. JSC (COWAELMIC) | 6670902.00 |
| 2000 | Russian Federat | 3 | PEG SA | 259200.00 |
| 2005 | China | 1 | METSO DYNAPAC AB (SWEDEN) | 335880.00 |
| 2017 | Serbia | 1 | BILJANA DJOKIC | 5969.00 |
| 2007 | Tanzania | 4 | PROF. AFRAIM MUHALE HAYUMA | 185492.00 |
| 2014 | Western Africa | 1 | BRLI | 306749.00 |
| 2009 | Iraq | 2 | DARA COMPANY | 36126.00 |
| 2012 | Mozambique | 1 | CHINA JIANGSU INTERNATIONAL BOTSWANA | 1330504.00 |
| 2016 | Kyrgyz Republic | 3 | INFORMACIONNYE TEHNOLOGII | 169792.00 |
| 2006 | Yemen, Republic | 1 | GAYTH AQUATECH LTD | |
| 2013 | Tanzania | 3 | M/S Q & A COMPANY | |
| 2016 | Bangladesh | 1 | THAKRAL INFORMATION SYSTEMS PVT. LTD. | |
| 2012 | Bosnia and Herz | 1 | SENAD DZANANOVIC | |
| 2002 | Timor-Leste | 2 | BARRETT COMMUNICATIONS PTY LTD | |
| 2011 | Paraguay | 1 | MANPOWER PARAGUAY S.R.L. | |
| 2014 | Moldova | 1 | PUBLICATIA PERIODICA REVISTA URMA TA | |
| 2016 | Western Africa | 1 | HUGUES SERGE SEGLA GUIDIGBI | |
| 2005 | Lebanon | 3 | SOUTH CONTRACTING COMPANY | |
| 2015 | Cote d'Ivoire | 1 | KPOLO MAPRI DOMINIQUE | |
| 2005 | Morocco | 6 | SCET-MAROC | |
| 2001 | China | 2 | XIAMEN JIAN BANG GROUP CO. - | |

Chapter 07: Travel

Chapter 7: Travel

Here are a few of the more memorable events relating to people encountered whilst travelling. In recent years Margaret and I have taken to cruises. Margaret enjoys these because of the high level of service; for me, the interest is meeting people from diverse places. Some of the experiences include:

Rio de Janeiro

During Carnival, I rate this the number one location to return to. I find it impossible to put into words the all-pervading atmosphere. The whole thing just oozed sensuality, and two hundred drummers at one hit got your attention. At 8.00am, when the last, and poorest of the poor, Samba School paraded, there was such a welling up of support that it had me, of all people, in tears. The downside, of course, is law and order. One night a youth tried to snatch my watch, but maybe he was attempting to get some money to buy some bread to feed his mother in the favela. A more serious problem arose on the only occasion when I left the hotel with any money, in order to buy presents. I strayed from the main street, felt insecure as a press of people assembled round me, and started to put my hand into the pocket containing my wallet. On feeling my hand withdrawn from the pocket I spun round to be confronted by three men. Good morning! The police were quite efficient and I then had to go to American Express for a voucher for more funds, with my passport (they gave me 98 cents to the pound!). -

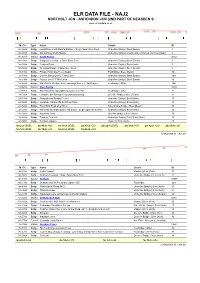

ELR Data File - NAJ2 Northolt Jcn - Ashendon Jcn (2nd Part of Neasden S

ELR DATA FILE - NAJ2 NORTHOLT JCN - ASHENDON JCN (2ND PART OF NEASDEN S www.railwaydata.co.uk

| M. Ch. | Type | Name | Details | ID |
|---|---|---|---|---|
| 0m 02ch | Bridge | Long Drive (south Ruislip Station) - Single Span Steel Deck | Underline Bridge \| Steel (Beam) | 1 |
| 0m 04ch | Bridge | Stn Subway South Ruislip | Underline Bridge \| Cast in-situ reinforced Concrete (Slab) | 2 |
| 0m 07ch | Station | South Ruislip | | SRU |
| 0m 38ch | Bridge | Bridgewater Road - 2 Span Steel Deck | Underline Bridge \| Steel (Beam) | 3 |
| 0m 55ch | Bridge | Yeading Brook | Underline Bridge \| Brick (Arch) | 5 |
| 0m 58ch | Bridge | West End Road - 2 Span Steel Deck | Underline Bridge \| Steel (Beam) | 6 |
| 1m 31ch | Bridge | Private Foobridge To Lul Depot | Footbridge \| Steel (Beam) | 8A |
| 1m 51ch | Bridge | Carries Siding Over L T Met Lines | Underline Bridge \| Steel (Beam) | 10A |
| 1m 51ch | Bridge | Passes Over L T Met Lines | Underline Bridge \| Steel (Beam) | 10 |
| 1m 68ch | Bridge | Stn Ft Br W Ruislip- 3moreopenings Over L .t. (lul Bridge) | Footbridge \| Other | 10B |
| 1m 67ch | Station | West Ruislip | | WRU |
| 1m 77ch | Bridge | Stn Ft Br W Ruislipadjbr12wasonceat1m75c | Footbridge \| Other | 11 |
| 1m 78ch | Bridge | Longlane Stn Rd 4openingsunderrdiadjshop | Overline Bridge \| Steel (Beam) | 12 |
| 2m 17ch | Bridge | Footpath And Ruislip Feeder | Underline Bridge \| Brick (Arch) | 13 |
| 2m 50ch | Bridge | Ickenham Garden Rd And River Pinn | Underline Bridge \| Brick (Arch) | 14 |
| 2m 50ch | Bridge | River Pinn Road Over River | Side of Line Bridge \| Steel (Beam) | 15 |
| 2m 58ch | Bridge | Harefield Rd. Brakespeare Rd South - Single Span Brick Arch | Underline Bridge \| Brick (Arch) | 16 |
| 3m 32ch | Bridge | Harefield Road | Overline Bridge \| Brick (Arch) | 18 |
| 3m 47ch | Bridge | Denham East Jct | Underline Bridge \| Early Steel (Slab) | 19 |
| 4m 00ch | Bridge | Denham Viaduct | Viaduct \| Brick (Arch) | 20 |

0m 2ch LEVEL; 0m 45ch +430; 1m 50ch LEVEL; 2m 10ch -520; 2m 22ch LEVEL; 2m 39ch +660; 2m 42ch +260; 2m 50ch +225; 3m 3ch LEVEL; 3m 18ch -225; 3m 52ch LEVEL; 3m 66ch +225

1 (NAJ2 000.01 - 004.03) M. -

Halcrow Group Limited Fort William Active Travel Audit Final Summary Report July 2010

Halcrow Group Limited. Fort William Active Travel Audit. Final Summary Report, July 2010. HITRANS

Halcrow Group Limited, City Park, 368 Alexandra Parade, Glasgow G31 3AU, Scotland. Tel +44 (0)141 552 2000. Fax +44 (0)141 559 4452 / 552 2525. www.halcrow.com

Halcrow Group Limited has prepared this report in accordance with the instructions of their client, , for their sole and specific use. Any other persons who use any information contained herein do so at their own risk. © Halcrow Group Limited 2011

Amendment Record. This report has been issued and amended as follows:

| Issue | Revision | Description | Date | Approved by |
|---|---|---|---|---|
| 01 | - | Draft Summary Report | 20/07/09 | CK/JP |
| 02 | - | Final Summary Report | 14/07/10 | CK |
| 03 | - | Plans moved to Appendix | 05/08/11 | JP |

Contents: 1 Introduction; 1.1 Background; 2 Active Travel Methodology; 2.1 What is the methodology?; 3 Walking and Cycling in the Fort William Area; 3.1 Overview of current conditions for active travel; 3.2 Study area; 3.3 Existing provision for cycling; 3.4 Existing cycle parking; 3.5 Traffic flow and accident data; 3.6 Public transport; 3.7 Fort William mountain bike championships; 3.8 The Highland Council policy documents; 3.9 Fort William Waterfront Master Plan; 3.10 Current issues -

Partnering: the Right Procurement Tool for Risky Contracts

Partnering: The Right Procurement Tool for Risky Contracts. Joep Athmer, Ben Hamer, Tim Kersley and Phillip Sanderson

Abstract. Partnering has been increasingly used both in the UK and internationally as a means to facilitate a proactive approach to problem solving as a project team rather than adopting a reactive, adversarial approach commonly associated with the more traditional forms of contract. The Environment Agency, Halcrow Group Ltd and Van Oord UK Ltd are partners who have been active in promoting the use of partnering type procurement, design and construction since 1996. All partners participated in three distinct ‘Frameworks’ for the programme of flood defences in England and Wales. Namely, the National Environmental and Engineering Consultancy Agreement; the National Contractors’ Framework; and, the Combined Beach Management Framework. These ‘Frameworks’ apply the principles of Partnering by using the tools available within the New Engineering Contract (NEC), comprising both

Introduction. Despite the recent progress, partnering in the UK continues to mean different things to different people. The difference between “project” and “strategic” partnering is fundamental and could reflect the difference between a client’s desire to partner on a short-term or long-term basis. There are a number of purists who would argue that “project partnering” is a contradiction in terms: indeed, the 1991 National Economic Development Council (NEDC) Report adopts a definition of partnering, which requires that partnering be a “long-term commitment”. A proactive partnering approach opposed to a reactive, sometimes adversarial approach certainly gives many clear advantages. These include better forward planning of resources, better cost control and value for money. Partnering should be voluntary and willing on all sides, based on joint ownership of common objectives and commitment.